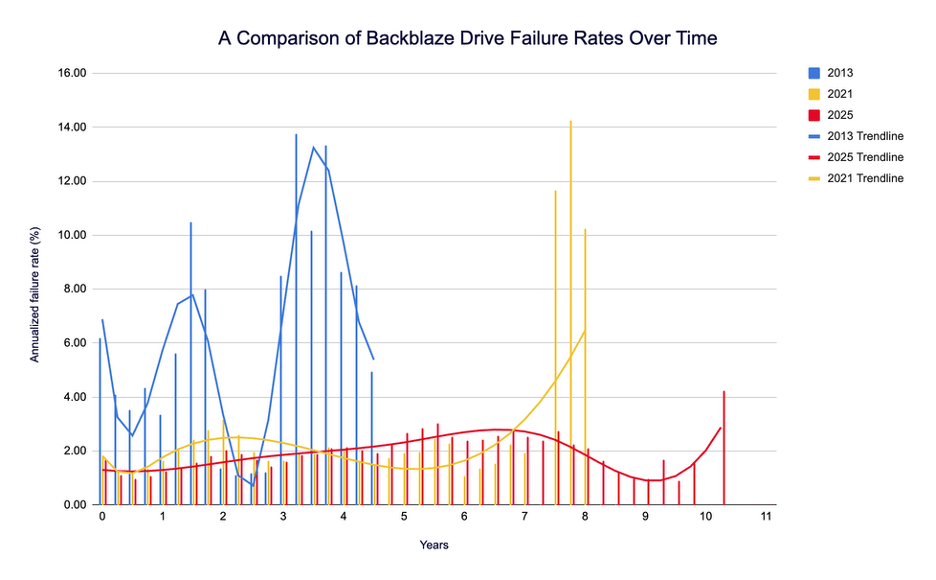

For decades, anyone studying reliability engineering ran into the same diagram: the “bath tub curve.” It starts with a bump of early failures, settles into a long, steady plateau, and rises again at the end of the lifespan as hardware wears out. Simple, elegant, reassuring. The problem is that, with real large-scale data at hand, it no longer fits. That is the conclusion of Backblaze, known for publishing its drive-failure data every quarter, after 13 years of continuous telemetry from hundreds of thousands of drives in its data centers: hard drives are performing better and lasting longer, and the failure pattern the company observes is not the classic textbook U-shape.

The company revisited three historical snapshots: 2013, 2021, and 2025. The contrast is striking. In 2013, the annualized failure rate (AFR) peaked at about 13.73% at 3 years and 3 months (with a second, nearly equal maximum of 13.30% at 3 years and 9 months). In 2021, the maximum was slightly higher, 14.24%, but it arrived much later, at 7 years and 9 months. By 2025, the “wall” is pushed back even further and is much lower: a peak of 4.25% at 10 years and 3 months. That is roughly a third of the 2013 and 2021 peaks, and it arrives close to the end of the drives’ service life. The start of the curve has improved as well: from 0 to 1 year of age, the AFR barely exceeds 1.30% (the most recent quarterly AFR is 1.36%).

What the “bath tub curve” is (and isn’t)

The bath tub curve is a visual shortcut: devices fail more often early on (manufacturing defects show up quickly), less often in midlife (a roughly constant failure rate), and more often again at the end due to wear. It has teaching value, but it oversimplifies: it treats age as the only variable and assumes that the environment, models, firmware, workload profiles, and operational processes stay fixed. In a real data center, those conditions never stay the same. Operators try to standardize temperature, vibration, power, and load, but purchase cohorts, different models, firmware updates, and operational changes all shape the final picture.
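Reliability textbooks often model the bath tub shape as the sum of three hazard terms: a decreasing one for early defects, a constant one for midlife failures, and an increasing one for wear-out. The sketch below builds that toy model from Weibull hazards; every parameter is hypothetical, chosen only to reproduce the U-shape, and nothing here is fitted to Backblaze data.

```python
# Toy bathtub hazard: sum of three terms (all parameters are hypothetical).

def weibull_hazard(t: float, shape: float, scale: float) -> float:
    """Instantaneous failure rate of a Weibull distribution at age t > 0."""
    return (shape / scale) * (t / scale) ** (shape - 1)


def bathtub_hazard(t_years: float) -> float:
    """Failures per drive-year at age t_years under the toy model."""
    early = weibull_hazard(t_years, shape=0.5, scale=400.0)    # shape < 1: decreasing (early defects)
    midlife = 0.01                                             # constant background rate
    wear_out = weibull_hazard(t_years, shape=5.0, scale=15.0)  # shape > 1: increasing (wear-out)
    return early + midlife + wear_out


for age in (0.1, 1, 3, 5, 7, 9, 11):
    print(f"age {age:>4} y: {bathtub_hazard(age) * 100:.2f}% per year")
```

With these made-up parameters the rate falls over the first months, bottoms out around years 3 to 5, and then climbs steeply. The article's point is precisely that real fleets no longer follow this template.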

Backblaze has tested this against its own records. In 2013 it analyzed a much smaller and more varied fleet: around 35,000 drives at the time (with over 100 PB in production by September 2014), including many consumer units obtained through so-called “drive farming,” in which disks were removed from proprietary external enclosures and mounted in racks. That practice added another source of operational variability. By 2021 the dataset was far larger, about 206,928 drives, as the company expanded its Sacramento data center, opened sites in Phoenix and Amsterdam, launched Backblaze B2, and went public. In 2025 the count was close to 317,230 drives (at the close of Q2 2025, with the report’s usual exclusions).

The larger the sample, the smaller the “statistical sawtooth” swings, unless a model has a real problem or the drives are nearing end of life. As operations mature, fleet hygiene also improves: larger batch purchases, more refined decommissioning criteria, different acceptance standards. All of these pull the curve away from the idealized model, but the overall trend is clear: average reliability has improved, and the sharp AFR peaks now arrive later and lower.
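Why a larger fleet smooths the “statistical sawtooth” can be made concrete by treating failure counts as Poisson. The sketch below uses the normal approximation for an approximate 95% interval (rough when failures are few) and two hypothetical fleets that share the same underlying rate but differ a hundredfold in exposure.

```python
import math


def afr_with_ci(failures: int, drive_days: float, z: float = 1.96) -> tuple[float, float, float]:
    """Return (AFR %, lower %, upper %) treating the failure count as Poisson.

    The count's standard deviation is approximated as sqrt(failures)
    (normal approximation), so the interval is only a rough guide.
    """
    drive_years = drive_days / 365
    afr = failures / drive_years * 100
    half_width = z * math.sqrt(failures) / drive_years * 100
    return afr, max(0.0, afr - half_width), afr + half_width


# Same ~1.5% rate, very different exposure (hypothetical numbers).
small = afr_with_ci(failures=15, drive_days=1_000 * 365)        # 1,000 drive-years
large = afr_with_ci(failures=1_500, drive_days=100_000 * 365)   # 100,000 drive-years
print("small fleet: %.2f%% (%.2f%% to %.2f%%)" % small)
print("large fleet: %.2f%% (%.2f%% to %.2f%%)" % large)
```

A hundred times the exposure narrows the interval by about a factor of ten (the square root of 100), which is why a single bad quarter barely moves the curve of a large fleet.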

How data has changed (and why it matters)

Backblaze itself recognizes that comparing 2013, 2021, and 2025 requires context:

- Fleet size and composition. In 2013: fewer drives and more heterogeneity (including many consumer-grade units). In 2021 and 2025: much larger fleets that are more homogeneous and built for data center use. With fewer drives, each failure weighs more and the peaks are noisier.

- Purchase cohorts. Bulk buying means many units of the same model enter service at the same time. If a model turns out to be a lemon, its failures cluster into a spike; if it is robust, the curve flattens out over the years.

- Different decommissioning strategies. Current strategies may retire disks that are still performing well (for risk management or capacity growth) before they fail, reducing the population without the typical “failure spike” expected if units were used until the end.

- Methodology. For early drives without detailed daily logs (as in 2013), the company estimated each drive’s deployment date from its power-on hours (SMART attribute 9) and the first date the drive appeared in the data; it then matched failures against age to derive AFR. Better traceability yields more precise calculations.

This combination of factors explains why the 2021 and 2025 curves have a similar shape while the levels have improved: a lower AFR that stays stable across most of the lifespan, and a late rebound that has softened from a sharp peak into a more subdued step.
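The age-estimation step described under Methodology might look roughly like this. It is a hypothetical sketch, not Backblaze’s code: the function names are invented, and it assumes each drive ran continuously since installation, so power-on hours approximate wall-clock time in service.

```python
from datetime import date, timedelta


def estimate_deploy_date(first_seen: date, power_on_hours: int) -> date:
    """Back-date deployment from the first date a drive appears in the logs.

    Assumes continuous operation, so SMART 9 (power-on hours) ~ time in service.
    Only whole days are subtracted.
    """
    return first_seen - timedelta(days=power_on_hours // 24)


def age_at(deployed: date, on: date) -> tuple[int, int]:
    """Approximate age as (years, months) using an average month of 30.4375 days."""
    months = round((on - deployed).days / 30.4375)
    return divmod(months, 12)


# Hypothetical drive: first logged with one year of power-on time, fails later.
deployed = estimate_deploy_date(date(2013, 4, 10), power_on_hours=8_760)
failed_on = date(2015, 7, 10)
print(deployed, age_at(deployed, failed_on))
```

Each failure then lands in an age bucket (here, 3 years and 3 months), and AFR is computed per bucket from the failures and the drive-days observed at that age.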

What 2025 suggests (and what it hints at for 2029)

Breaking down the numbers:

- 2013 peak: 13.73% AFR at 3 years and 3 months

- 2021 peak: 14.24% at 7 years and 9 months

- 2025 peak: 4.25% at 10 years and 3 months

- AFR Year 0–1 (2025): approximately 1.30%; recent quarterly AFR: 1.36%

Translation: longer life span and greater predictability. Backblaze itself commits to reviewing this analysis in 2029 to see if the failure peak shifts even further right (and whether it diminishes further).
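The headline comparisons are simple arithmetic on the reported peaks. This short sketch recomputes how much higher the 2013 and 2021 peaks were than the 2025 one and how far the peak moved in age; its only inputs are the figures listed above.

```python
# Peak AFR (%) and age at the peak as (years, months), per snapshot.
peaks = {
    2013: (13.73, (3, 3)),
    2021: (14.24, (7, 9)),
    2025: (4.25, (10, 3)),
}


def months(age: tuple[int, int]) -> int:
    """Convert (years, months) into a total number of months."""
    years, extra_months = age
    return years * 12 + extra_months


afr_2025, age_2025 = peaks[2025]
for year in (2013, 2021):
    afr, age = peaks[year]
    print(
        f"{year}: peak {afr / afr_2025:.2f}x the 2025 peak, "
        f"reached {months(age_2025) - months(age)} months earlier"
    )
```

Both ratios land a little above 3, which is why the 2025 peak reads as roughly a third of the earlier ones, while the peak itself moved 84 months later than in 2013.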

Has the classic curve been wrong? Not entirely, but it’s incomplete

The bath tub curve is not “false”; it is incomplete. It works as intuition when age is the dominant factor and the environment is fairly uniform. But a modern data center also has to account for:

- Variability by model and batch (quality control, firmware, density).

- Operational changes (new data centers, racks, improved cooling).

- Decommissioning with risk criteria.

- Load profiles that shift over time (more sequential vs. random, peaks vs. sustained loads).

These nuances flatten the middle of the curve and push the final rebound later. What truly matters in 2025 is not that the “U” has disappeared, but that the final peak has been delayed (toward the end of the drives’ expected lifespan) and reduced (to roughly a third of its earlier height). For infrastructure managers, that means fewer surprises and more useful years out of each device.

Implications for data centers (and what not to forget)

- Models, not brands. Model-to-model variability persists. The good news: overall longevity is improving. The caution: track each SKU with metrics such as RMA rate and AFR, and use cohort analysis.

- Buying strategy. Spacing out procurements into waves mitigates lot spikes. Buying everything at once simplifies logistics but concentrates failures if a model is weak.

- Smart decommissioning. Retiring disks early reduces visible failures. Running disks until they break may seem efficient, but it raises the risk of a late failure spike. The best approach depends on your service profile and your platform’s RTO/RPO.

- SMART is not a crystal ball. It helps, but it does not catch every imminent failure. Useful additional telemetry includes retry rates, I/O errors, latencies, temperatures, and per-rack vibration.

- Stable environment = better AFR. Careful control of temperature, humidity, vibration, and power adds up. It reduces noise and makes it easier to spot when a model or batch deviates.
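Per-model or per-cohort monitoring usually boils down to computing AFR for each group and comparing it with the fleet as a whole. A minimal sketch follows; the cohort names, figures, and the 1.5x threshold are hypothetical, and AFR uses the drive-days formulation Backblaze describes in its reports.

```python
from dataclasses import dataclass


@dataclass
class CohortStats:
    cohort: str       # hypothetical label: model plus purchase batch
    drive_days: int   # total days in service across the cohort's drives
    failures: int     # failures recorded over the same window


def afr(failures: int, drive_days: int) -> float:
    """Annualized failure rate in percent, based on drive-days."""
    return failures / (drive_days / 365) * 100


def flag_outliers(stats: list[CohortStats], factor: float = 1.5) -> list[str]:
    """Return cohorts whose AFR exceeds `factor` times the fleet-wide AFR."""
    fleet_afr = afr(sum(s.failures for s in stats), sum(s.drive_days for s in stats))
    return [s.cohort for s in stats if afr(s.failures, s.drive_days) > factor * fleet_afr]


fleet = [
    CohortStats("MODEL-A 2020-Q1", drive_days=3_650_000, failures=120),  # 10,000 drive-years
    CohortStats("MODEL-B 2020-Q4", drive_days=1_825_000, failures=40),   # 5,000 drive-years
    CohortStats("MODEL-C 2021-Q3", drive_days=365_000, failures=30),     # 1,000 drive-years
]
print(flag_outliers(fleet))
```

Keying on model plus purchase batch, rather than on brand, is what lets a lemon batch stand out before it drags the fleet-wide number up.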

A key insight: what “doesn’t fail” also counts

An important methodological point: with early decommissioning, some disks leave the fleet while still working. This shrinks the population at the tail end without producing the failure peaks that would appear if units were run to the very last byte. It is not cheating; it is how real operations work. Comparing curves from different years therefore requires reading between the lines: knowing which drives were bought, when, how they were used, and when they were retired.
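One standard way to account for drives that leave the fleet while still working is to treat them as right-censored observations, as in a Kaplan-Meier survival estimate. This is a general survival-analysis technique offered as an illustration, not a description of Backblaze’s method, and the six drives below are made up.

```python
from collections import Counter


def kaplan_meier(records: list[tuple[float, bool]]) -> list[tuple[float, float]]:
    """Kaplan-Meier survival curve.

    records: (age_at_exit_years, failed) per drive; failed=False means the drive
    was retired while healthy (right-censored).
    Returns (age, survival probability) at each age where failures occurred.
    """
    failures = Counter(age for age, failed in records if failed)
    exits = Counter(age for age, _ in records)
    at_risk = len(records)
    survival = 1.0
    curve = []
    for age in sorted(exits):
        deaths = failures[age]
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((age, survival))
        # Everyone exiting at this age (failed or retired) leaves the risk set.
        at_risk -= exits[age]
    return curve


# Hypothetical drives: three failed, three retired while still healthy.
drives = [(1.0, True), (2.0, False), (3.0, True), (4.0, False), (5.0, False), (5.0, True)]
for age, prob in kaplan_meier(drives):
    print(f"age {age:.0f} y: survival {prob:.3f}")
```

Retired drives add no failures, but they count in the at-risk population up to the age they left, so “what doesn’t fail” still shapes the estimate.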

And what about the average user? Quick summary

- Modern hard drives in data centers last longer than those a decade ago.

- The probability of early failure is low (AFR ~1.30% in the first year).

- The failure rebound appears, on average, much later (by 2025, beyond the ten-year mark) and at a significantly lower level.

This does not guarantee that your home disk will last any particular number of years: it is not subjected to the same loads, temperatures, vibrations, or operating practices as a data center drive. But as a trend, it is good news for anyone still relying on spinning disks for digital storage.

Frequently Asked Questions

What exactly is the “annualized failure rate” (AFR), and why does it matter?

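AFR expresses, as a percentage, how many drives in a given population fail per year of drive operation. Backblaze computes it from failures and total drive-days, so drives that were in service for only part of the period count proportionally. That makes it a consistent metric for comparing cohorts and ages. In 2025, Backblaze reports a quarterly AFR of 1.36% and a peak of 4.25% at a drive age of 10 years and 3 months.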
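Written out, the drive-days formulation Backblaze describes in its reports is:

$$\mathrm{AFR} = \frac{\text{drive failures}}{\text{drive-days} / 365} \times 100\%$$

As a worked example with hypothetical numbers: 1,000 failures over 27,300,000 drive-days (about 300,000 drives for a 91-day quarter) correspond to 27,300,000 / 365 ≈ 74,795 drive-years, giving an AFR of 1,000 / 74,795 × 100% ≈ 1.34%.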

If drives are getting better, can I forget about the bath tub curve?

Not entirely. The classic curve is useful as intuition, but incomplete. Modern data centers show patterns that depend on model, environment, cohorts, firmware, and operation. The trend in 2025 suggests: fewer early failures, a long plateau, and a delayed, gentle bounce-back.

What has changed from 2013 through 2021 and 2025 to improve the curve so much?

Three main factors: much more data (206,928 drives in 2021 and 317,230 in 2025, versus about 35,000 in 2013), better operations (more sophisticated purchasing and decommissioning), and a more robust mix of drive models designed for data center use. Together these reduce noise, postpone the peak, and lower its height.

What can system teams do to leverage this trend?

Monitor model-specific metrics, buy in waves, align decommissioning with your RTO/RPO, track beyond SMART (latency, I/O, errors, temperature, vibration), and test your refresh and recovery plans. Drives last longer, but the platform design still makes a difference.