The American startup Groq, founded in 2016, has developed an innovative Language Processing Unit (LPU) that promises to transform the field of generative artificial intelligence. This advanced technology generates content almost instantly, outpacing the traditional GPUs (Graphics Processing Units) from Nvidia in terms of speed.

An Alternative to Nvidia GPUs

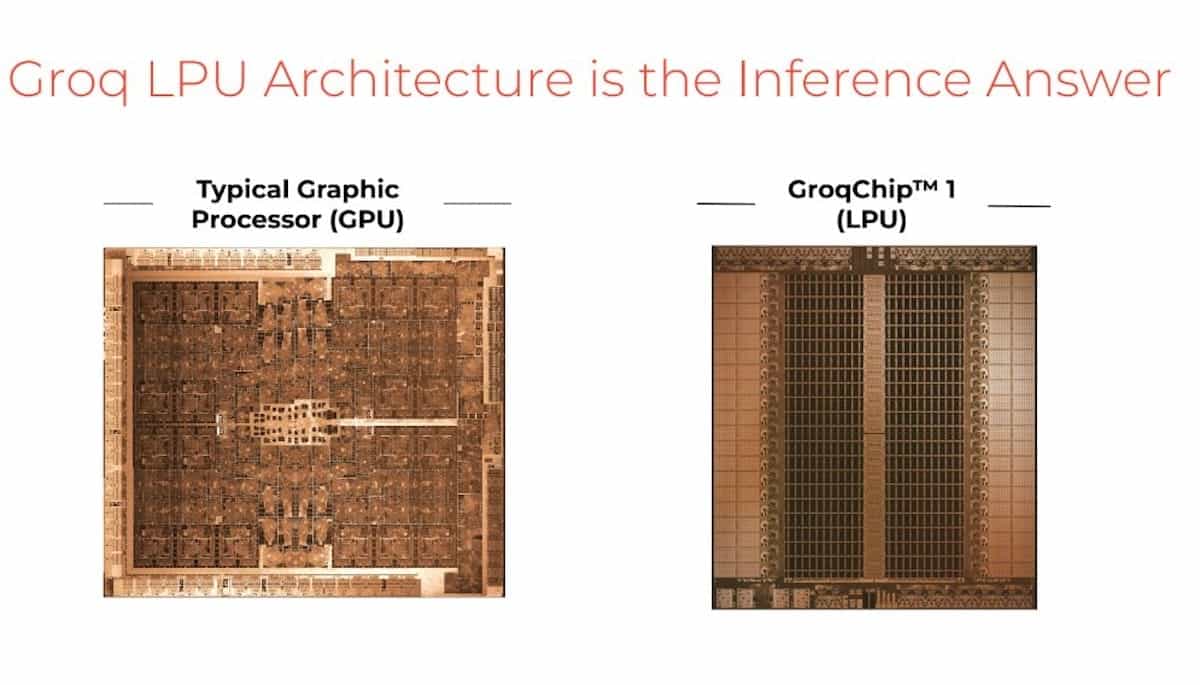

Groq has been in the spotlight recently due to the rise of generative artificial intelligence. The company has created LPUs (Language Processing Units), processors specifically designed for inferring AI models with billions of parameters. Unlike GPUs, which are more suitable for training AI models, Groq’s LPUs are optimized for the fast and efficient execution of these models.

Jonathan Ross, founder of Groq and former Google engineer, highlights that “people don’t have patience on mobile devices.” With Groq’s LPUs, the aim is to make interaction with AI almost instantaneous, eliminating the feeling of conversing with a machine.

Impressive Technical Features

GroqChips, as these LPUs are called, are capable of performing a quadrillion operations per second, generating up to 400 words per second, compared to the 100 words per second that Nvidia GPUs can handle. This superior speed could position Groq as a leader in the generative AI market.

Despite Groq’s LPUs achieving a performance of 188 Tflops, considerably lower than Nvidia H100 GPUs’ 1,000 Tflops, their simplified architecture and energy efficiency make them ideal for language model inference. The LPUs consume significantly less energy, with a Groq PCIe card consuming ten times less energy than a similar Nvidia card.

Innovative Design for Efficiency

The architecture of Groq’s LPUs resembles an assembly of several mini-DSPs (digital signal processors) mounted in series. This structure allows each circuit to specialize in a single function, maximizing energy efficiency and processing speed. This modular and specialized approach drastically reduces energy consumption compared to GPUs, which were originally designed for graphic processing.

Focus on Inference and Efficiency

Groq emphasizes that their chips are not intended for training AI models but for executing already trained models. “We have developed a chip that accelerates the execution of these models by ten times. This means that when you ask a question to an AI running on a server equipped with our chip, you get a real-time response,” explains Ross.

The Future of Inference with Groq

The Groq LPU™ Inference Engine is an inference acceleration system designed to offer substantial performance, efficiency, and accuracy in a simple design. With the ability to run models like Llama-2 70B at over 300 tokens per second per user, Groq’s LPUs promise to set a new standard in the AI experience.

Conclusion

Groq is redefining how we interact with artificial intelligence, offering technology that is not only faster but also more energy-efficient. With its focus on inference and efficient execution of language models, Groq positions itself as a formidable competitor in the field of generative AI.

For more information about Groq and its innovative LPUs, visit Groq.