Google Cloud has introduced Ironwood, its seventh-generation TPU, along with new Axion instances based on ARM Neoverse: N4A (in preview) and C4A metal (bare metal ARM, with its preview “coming soon”). The move confirms a shift in the AI cycle, from a focus on training toward pressure to deliver fast, cost-effective, orchestrated inference in agentic workflows. The company asserts that this productivity leap is only possible through custom silicon and system co-design (hardware, networking, and software working as a unified whole) under its AI Hypercomputer supercomputing umbrella.

In practice, Ironwood is designed to serve state-of-the-art models with minimal latency and full data center elasticity, while Axion expands general-purpose computing with better price-performance for the backbone supporting those applications: microservices, data ingestion and preparation, APIs, databases, and orchestration control.

Ironwood: a TPU built for massive inference (without sacrificing large-scale training)

Ironwood is presented as Google’s most powerful and efficient TPU to date. The company reports up to a 10× increase in peak performance over TPU v5p, and more than 4× better performance per chip in both training and inference compared to TPU v6e (Trillium). The message is clear: serving generative models and large-scale agents requires a leap in bandwidth, effective memory, and inter-chip connectivity.

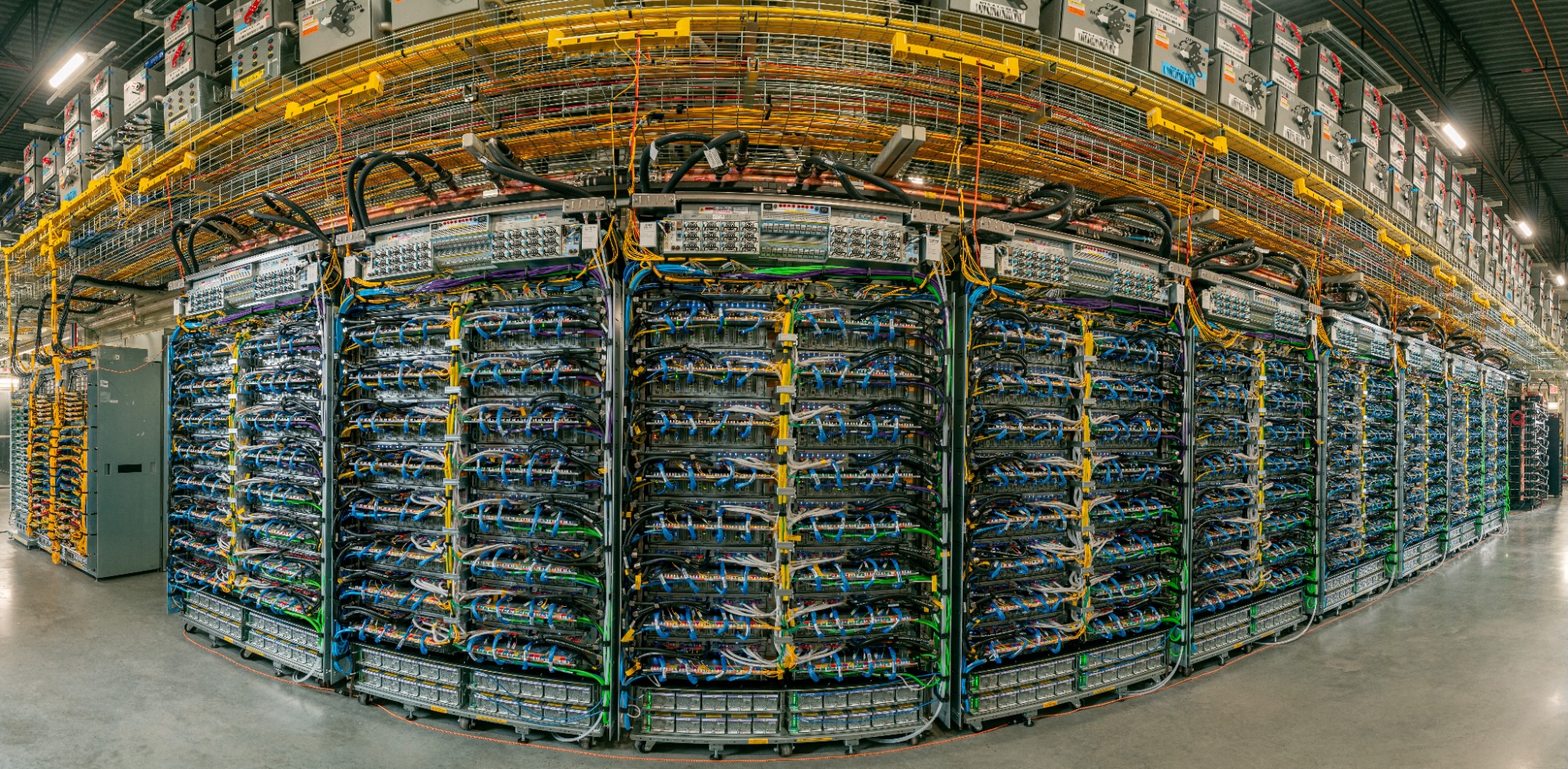

Ironwood’s design centers on a “system-first” approach. TPUs are grouped into pods, which are assembled into superpods interconnected by ICI (Inter-Chip Interconnect) at 9.6 Tb/s. This topology lets a superpod connect up to 9,216 TPUs within a single domain with 1.77 PB of shared HBM, reducing bottlenecks for large models. Beyond that, the Jupiter network links superpods into clusters of hundreds of thousands of TPUs when load demands it.
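As a quick sanity check on those superpod figures, the sketch below divides the quoted shared HBM by the quoted chip count to get the implied capacity per chip. It assumes decimal units (1 PB = 1,000,000 GB); the function and constant names are illustrative only.

```python
# Figures quoted above for one Ironwood superpod.
CHIPS_PER_SUPERPOD = 9_216
SHARED_HBM_PB = 1.77  # petabytes, a rounded figure

# Assumption: decimal units, 1 PB = 1,000,000 GB.
GB_PER_PB = 1_000_000


def hbm_per_chip_gb(total_pb: float, chips: int) -> float:
    """Average HBM capacity per chip, in GB, implied by the superpod totals."""
    if chips <= 0:
        raise ValueError("chips must be positive")
    return total_pb * GB_PER_PB / chips


per_chip = hbm_per_chip_gb(SHARED_HBM_PB, CHIPS_PER_SUPERPOD)
print(f"Implied HBM per chip: {per_chip:.0f} GB")
```

The result comes out at roughly 192 GB per chip, so the 1.77 PB headline is the sum of per-chip memory across the whole domain rather than a separate memory pool.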

For resilience, Google incorporates optical circuit switching (OCS) as a reconfigurable fabric that reroutes traffic around interruptions without service downtime. At the physical layer, Ironwood relies on large-scale liquid cooling, a well-established feature of Google’s data centers, which according to the company has sustained high densities with five-nines (99.999%) availability since 2020.

Beyond raw power, the key lies in how it is used. Ironwood ships alongside co-designed software intended to maximize the architecture’s efficiency and simplify engineering:

- GKE with Cluster Director offers advanced maintenance and topology-aware scheduling for more resilient clusters.

- MaxText, an open high-performance framework for LLMs, features improvements for supervised fine-tuning (SFT) and RL optimizations like GRPO.

- vLLM already supports TPU, with easy toggling between GPU and TPU with minimal configuration changes.

- GKE Inference Gateway introduces an intelligent load balancing layer across TPU servers that, according to Google, can reduce time-to-first-token by up to 96% and lower serving costs by up to 30%.

The ultimate goal is to close the training → tuning → serving loop within a cohesive operational experience, where data, model, and serving operate more efficiently and with less friction.

Early market indicators: from Anthropic to Lightricks and Essential AI

Early references shed light on the ambition behind the launch. Anthropic, which is scaling its Claude family, plans to access up to 1,000,000 TPUs, positioning Ironwood as a lever to accelerate the transition from lab to large-scale service for millions of users. Lightricks highlights the potential for higher image and video quality and fidelity in its creative products; Essential AI emphasizes how easily the platform integrates, letting its teams get up and running without delays. In all cases, the common thread is the price-performance ratio and the capacity to scale without sacrificing reliability.

Axion: ARM CPUs for everyday tasks that make AI possible

The other side of the announcement is Axion, Google Cloud’s Neoverse ARM CPU lineup. The expansion comes in two parts:

- N4A (preview): the most cost-efficient VM in the N series to date, with up to 64 vCPU, 512 GB of DDR5 memory, and 50 Gbps network. Compatible with Custom Machine Types and Hyperdisk Balanced/Throughput storage, it targets microservices, containers, open-source databases, batch, and analytics, as well as development environments and web servers. Google claims up to a 2× better price-performance compared to current x86 VMs.

- C4A metal (bare metal ARM, coming soon in preview): up to 96 vCPU, 768 GB of DDR5, Hyperdisk, and up to 100 Gbps network. Designed for hypervisors, native ARM development (including Android/automotive), strict licensing software, or complex test farms and simulations.

Complementing the well-known C4A (VM) — up to 72 vCPU, 576 GB DDR5, 100 Gbps network, up to 6 TB of Titanium SSD local storage, and advanced maintenance controls — the Axion portfolio covers both latency-sensitive workloads and needs for sustained capacity, driven by a core principle: match resources to actual load to lower costs without changing operational models.
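To compare the three offerings side by side, the sketch below encodes only the maximum figures quoted above and derives memory per vCPU. The `AxionShape` class and the `smallest_fit` helper are hypothetical names for illustration; real selection would also weigh pricing, local SSD, bare-metal requirements, and regional availability, none of which is modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AxionShape:
    """Maximum size of one Axion offering, as quoted in the article."""
    name: str
    max_vcpus: int
    max_memory_gb: int
    max_network_gbps: int

    @property
    def memory_per_vcpu_gb(self) -> float:
        return self.max_memory_gb / self.max_vcpus


SHAPES = [
    AxionShape("N4A", 64, 512, 50),
    AxionShape("C4A", 72, 576, 100),
    AxionShape("C4A metal", 96, 768, 100),
]


def smallest_fit(vcpus: int, memory_gb: int, network_gbps: int = 0) -> Optional[AxionShape]:
    """Hypothetical helper: smallest offering whose maximums cover the request."""
    for shape in sorted(SHAPES, key=lambda s: s.max_vcpus):
        if (shape.max_vcpus >= vcpus
                and shape.max_memory_gb >= memory_gb
                and shape.max_network_gbps >= network_gbps):
            return shape
    return None


for shape in SHAPES:
    print(f"{shape.name}: {shape.memory_per_vcpu_gb:.0f} GB per vCPU at maximum size")
```

At their maximum sizes all three work out to 8 GB per vCPU, so the quoted specs differ mainly in ceiling, network bandwidth, and deployment model rather than in memory ratio.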

Initial customer tests reinforce this thesis. Vimeo reports over 30% performance increase for transcoding compared to comparable x86 VMs; ZoomInfo notes over 60% better price-performance in Dataflow and Java services in GKE; and Rise combines C4A and Hyperdisk to cut compute consumption and latency in its advertising platform, while testing N4A for critical workloads that require flexibility.
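A price-performance gain is easy to misread as an equal cost cut. The sketch below converts one into the other under an explicit assumption: price-performance means work delivered per dollar, and the workload stays constant. The function name is illustrative.

```python
def cost_saving_pct(price_perf_gain_pct: float) -> float:
    """Percent cost reduction for the same work, given a price-performance gain.

    Assumption: price-performance = work per dollar. A gain of g% means each
    dollar buys (1 + g/100) times as much work, so the same work costs
    1 / (1 + g/100) of the original bill.
    """
    if price_perf_gain_pct <= -100:
        raise ValueError("gain must be greater than -100%")
    ratio = 1 + price_perf_gain_pct / 100
    return (1 - 1 / ratio) * 100


# 60% better price-performance (the ZoomInfo figure) and 2x (a 100% gain).
for gain in (60, 100):
    print(f"{gain}% better price-performance -> {cost_saving_pct(gain):.1f}% lower cost")
```

Under that assumption, 60% better price-performance corresponds to about 37.5% lower cost for the same work, and a 2× gain to 50%.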

The key combination: AI accelerators + efficient CPUs for everything else

The current landscape, characterized by model architecture shifts every few months, emerging techniques (with agents that plan and act in complex environments), and unpredictable demand spikes, demands careful workload placement. Google’s approach pairs:

- Specialized accelerators (Ironwood TPU) for training and serving large models with enough bandwidth and memory, supported by a resilient network.

- Axion CPUs for everything else: applications, APIs, data ingestion/preparation, ETL, queues, web services, and microservices orchestrating AI workflows. This general-purpose layer largely influences the total cost and, if efficient, frees budget for acceleration where truly needed.

For platform teams, this results in operational flexibility: the ability to mix accelerators and CPUs based on the phase (pre-training, tuning, inference) and the profile of each workload. With support from GKE, vLLM, and MaxText, the promise is to minimize code changes and reduce friction when shifting workloads between GPU and TPU, or reconfiguring components in Axion.
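The split described above can be sketched as a simple placement rule. The category names are taken from the article, but the mapping itself, the `Target` enum, and the `place` function are illustrative assumptions, not a Google API or a recommended policy.

```python
from enum import Enum


class Target(Enum):
    TPU = "Ironwood TPU"
    CPU = "Axion CPU"


# Workload categories named in the article; the grouping is illustrative.
ACCELERATED = {"pre-training", "tuning", "inference"}
GENERAL_PURPOSE = {
    "api", "data-ingestion", "etl", "queue", "web-service", "microservice",
}


def place(workload: str) -> Target:
    """Map a workload category to the compute tier the article associates with it."""
    kind = workload.strip().lower()
    if kind in ACCELERATED:
        return Target.TPU
    if kind in GENERAL_PURPOSE:
        return Target.CPU
    raise ValueError(f"unknown workload category: {workload!r}")


for name in ("inference", "etl", "microservice"):
    print(f"{name} -> {place(name).value}")
```

In practice the decision would depend on measured cost and latency per workload rather than on a fixed table, which is why unknown categories raise an error instead of falling back to a default.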

Key questions for decision-making

While the announcement sets a clear direction, organizations should ground it in a pragmatic checklist:

- Availability by region and quotas: Which regions can Ironwood be reserved in? What limits and SLOs accompany N4A and C4A metal in preview?

- Compatibility and portability: Which tooling (vLLM, MaxText, popular frameworks) is supported out of the box? What changes are needed to migrate from GPU to TPU?

- Costs and packaging: How will superpods and intensive usage windows be budgeted? How does Hyperdisk align with IOPS profiles and sizes in Axion?

- Resilience and governance: How are the OCS fabric and the Jupiter network monitored and audited? What metrics for TTFT, MTTR, and cost per token does the serving stack provide?

- Adoption roadmap: What low-risk pilots can validate improvements (e.g., migrating an inference service to Ironwood or transferring a data pipeline to N4A) before larger investments?

Answering these questions with measured figures, not just technical specs, will separate an attractive promise from a real competitive advantage.
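Two of the metrics named in the checklist, TTFT and cost per token, can be derived from basic request logs during a pilot. The sketch below assumes a hypothetical record format (`RequestRecord`) and a known total cost for the measurement window; it does not reflect any particular serving stack’s telemetry.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class RequestRecord:
    """Hypothetical log entry for one served request."""
    sent_at: float         # seconds, when the request was sent
    first_token_at: float  # seconds, when the first output token arrived
    output_tokens: int     # tokens generated for this request


def ttft_ms(record: RequestRecord) -> float:
    """Time to first token, in milliseconds."""
    return (record.first_token_at - record.sent_at) * 1000


def summarize(records: List[RequestRecord], total_cost_usd: float) -> Dict[str, float]:
    """Median and worst-case TTFT plus cost per 1,000 output tokens."""
    tokens = sum(r.output_tokens for r in records)
    if not records or tokens <= 0:
        raise ValueError("need at least one request with output tokens")
    ttfts = [ttft_ms(r) for r in records]
    return {
        "median_ttft_ms": median(ttfts),
        "max_ttft_ms": max(ttfts),
        "cost_per_1k_tokens_usd": total_cost_usd / tokens * 1000,
    }


# Made-up sample data for illustration only.
sample = [
    RequestRecord(sent_at=0.0, first_token_at=0.25, output_tokens=500),
    RequestRecord(sent_at=1.0, first_token_at=1.5, output_tokens=300),
    RequestRecord(sent_at=2.0, first_token_at=2.125, output_tokens=200),
]
report = summarize(sample, total_cost_usd=2.0)
print(report)
```

Running the same summary before and after a migration gives a like-for-like comparison that vendor claims can be checked against.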

What this means for the industry

The announcement positions Google at the center of a debate that’s no longer “who trains the biggest models,” but “who serves better and cheaper” in production. Ironwood directly addresses the serving bottleneck with mass interconnectivity, shared memory, and smart load balancing; Axion targets cost containment in daily operations, where the profitability of AI products is often decided. For clients, the advantage isn’t just in watts per token, but in system discipline: orchestrating a pipeline that smoothly transitions from experiment to global service.

If co-optimization persists and the software ecosystem evolves accordingly, this combination could become a playbook for many platform teams: TPU for what truly needs bandwidth and memory, and ARM for what drives daily business. Ultimately, it is a bet on the system as a whole rather than on any single chip.

Frequently Asked Questions (FAQ)

What exactly is Ironwood, and what workloads is it designed for?

It’s Google’s seventh-generation TPU, built for training large models, reinforcement learning (RL), and especially large-scale inference and serving with very low latency. Its strength lies in interconnecting thousands of chips (up to 9,216 in a superpod) and accessing 1.77 PB of shared HBM to prevent bottlenecks.

What differentiates N4A, C4A, and C4A metal within the Axion lineup?

N4A emphasizes cost-performance and flexibility (up to 64 vCPU, 512 GB, 50 Gbps), ideal for microservices, OSS databases, and analytics. C4A (VM) offers sustained performance (up to 72 vCPU, 576 GB, 100 Gbps, and local Titanium SSD of up to 6 TB). C4A metal is bare metal (up to 96 vCPU, 768 GB, up to 100 Gbps) for hypervisors, native ARM development (including Android/automotive), and complex workloads with strict licensing or hardware access.

How do software tools (GKE, vLLM, MaxText) help leverage Ironwood?

GKE includes Cluster Director for topology-aware scheduling and maintenance. vLLM eases migrating between GPU and TPU, or mixing them, with minimal changes. MaxText speeds up fine-tuning and RL techniques. Additionally, according to Google, GKE Inference Gateway can reduce TTFT by up to 96% and serving costs by up to 30% through intelligent load balancing across TPU servers.

When will Ironwood and the new Axion instances be available?

Ironwood is expected to reach general availability in the coming weeks, N4A is in preview, and the C4A metal preview is listed as coming soon. Regional availability and quotas will expand gradually.

via: cloud.google