Google concluded the machine learning sessions at Hot Chips with a significant announcement: Ironwood, the new generation of their Tensor Processing Units (TPUs), specifically designed for large-scale language model inference and reasoning.

Unlike previous generations focused mainly on training, Ironwood is built to run production-level large language models (LLMs), mixture-of-experts architectures, and reasoning models, where latency and reliability are critical factors.

Massive Scalability

The most remarkable advancement is the ability to scale up to 9,216 chips in a single pod, delivering 42.5 exaflops of FP8 compute. This configuration is backed by 1.77 petabytes of directly addressable HBM3e memory, shared across the pod through optical circuit switches (OCS). That is more than double (2.25×) the 4,096-chip scale of a TPUv4 pod.
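A quick back-of-envelope check in Python shows that these headline figures agree with each other. The constants come from the text. Two points are assumptions: decimal units (1 PB = 10^6 GB), and the per-chip FP8 figure, which is just the pod total divided by the chip count rather than an official per-chip specification.

```python
# Back-of-envelope check of the pod-level figures quoted in the article.
CHIPS_PER_POD = 9_216
HBM_PER_CHIP_GB = 192       # eight HBM3e stacks per chip
POD_FP8_EXAFLOPS = 42.5
TPUV4_POD_CHIPS = 4_096

# Assumption: decimal units, so 1 PB = 1,000,000 GB.
pod_hbm_pb = CHIPS_PER_POD * HBM_PER_CHIP_GB / 1_000_000
# Derived, not an official spec: pod FP8 total (EF -> PF) divided evenly per chip.
per_chip_pflops = POD_FP8_EXAFLOPS * 1_000 / CHIPS_PER_POD
scale_vs_v4 = CHIPS_PER_POD / TPUV4_POD_CHIPS

print(f"Pod HBM: {pod_hbm_pb:.2f} PB")                  # Pod HBM: 1.77 PB
print(f"FP8 per chip: {per_chip_pflops:.2f} PFLOPS")    # FP8 per chip: 4.61 PFLOPS
print(f"Scale vs TPUv4 pod: {scale_vs_v4:.2f}x")        # Scale vs TPUv4 pod: 2.25x
```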

The design also builds in redundancy. Rather than an exact power of two such as 8,192 chips, Google chose 9,216 so that spare racks are available in case of failures.
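The rack-level section gives a rack size of 64 TPUs, which lets us express this headroom in whole racks. We treat 8,192 chips as the power-of-two baseline, but that is our reading of the comparison. The source does not say how Google actually allocates or uses the spare capacity.

```python
# Express the gap between 9,216 and a power-of-two pod size in whole racks.
TPUS_PER_RACK = 64          # 16 trays x 4 TPUs, per the rack-level description
POD_CHIPS = 9_216
POWER_OF_TWO_POD = 8_192    # assumed baseline for comparison, not a Google figure

pod_racks = POD_CHIPS // TPUS_PER_RACK
p2_racks = POWER_OF_TWO_POD // TPUS_PER_RACK
spare_racks = pod_racks - p2_racks

print(f"{pod_racks} racks vs {p2_racks}: {spare_racks} spare racks "
      f"({spare_racks * TPUS_PER_RACK} chips)")
# 144 racks vs 128: 16 spare racks (1024 chips)
```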

Energy Efficiency and Reliability

Google claims Ironwood delivers twice the performance per watt compared to Trillium and up to six times more efficiency than TPUv4, thanks to:

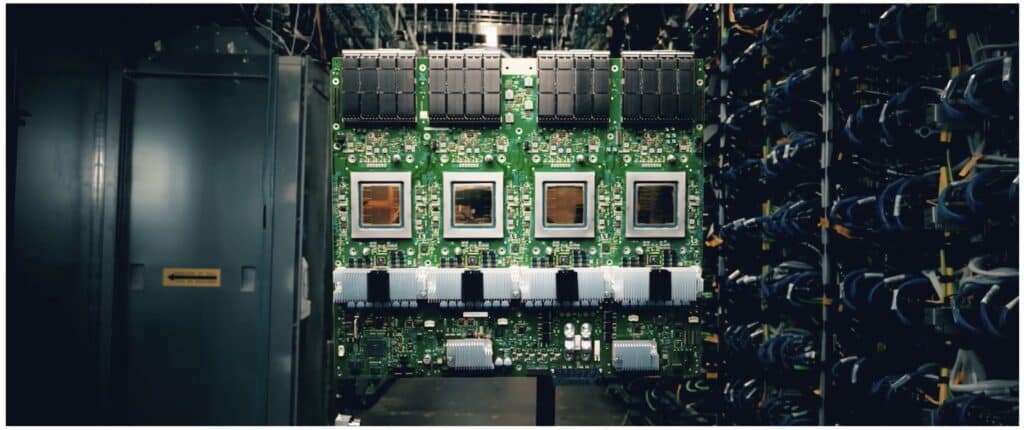

- A multi-chiplet architecture (two compute dies per chip)

- Eight stacks of HBM3e per chip, totaling 192 GB, with a bandwidth of 7.3 TB/s

- Third-generation liquid cooling with multiple circuits to keep heat exchangers clean

- Peak load control to stabilize consumption during megawatt-scale deployments
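The per-chip memory figures break down the same way. The last calculation is an illustrative assumption, not a published figure. It estimates how long one full pass over 192 GB takes at peak bandwidth, which is a rough floor for a memory-bound decode step whose weights fill a single chip's HBM. Real deployments shard models across many chips, batch requests, and rarely sustain peak bandwidth.

```python
# Per-stack breakdown of the quoted HBM3e figures, plus a rough latency floor.
HBM_STACKS = 8
HBM_CAPACITY_GB = 192
HBM_BANDWIDTH_TBS = 7.3     # aggregate per chip

per_stack_gb = HBM_CAPACITY_GB / HBM_STACKS        # capacity per stack
per_stack_tbs = HBM_BANDWIDTH_TBS / HBM_STACKS     # bandwidth per stack

# Assumption: peak bandwidth is sustained and the full 192 GB is read once.
# GB / (GB/s) gives seconds; multiply by 1,000 for milliseconds.
full_sweep_ms = HBM_CAPACITY_GB / (HBM_BANDWIDTH_TBS * 1_000) * 1_000

print(f"{per_stack_gb:.0f} GB and {per_stack_tbs:.2f} TB/s per stack")  # 24 GB and 0.91 TB/s per stack
print(f"Full HBM sweep: {full_sweep_ms:.1f} ms")                       # Full HBM sweep: 26.3 ms
```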

Reliability (RAS: Reliability, Availability, Serviceability) has been prioritized through:

- Automatic checkpointing, so work can be reassigned to other hardware if a node fails

- Detection of silent data corruption and in-flight arithmetic checks

- An integrated root of trust, secure boot, and support for confidential computing
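Checkpoint-based recovery follows a general pattern. Progress is saved periodically, and when a node fails, the job resumes from the last checkpoint on spare hardware. The toy simulation below illustrates only that pattern. `Job`, `run`, and the node names are hypothetical, and the sketch says nothing about how Google implements checkpointing on Ironwood.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    """Hypothetical long-running job that advances one step at a time."""
    total_steps: int
    checkpoint_every: int
    step: int = 0
    last_checkpoint: int = 0
    rework: int = 0  # steps recomputed after failures
    history: list[str] = field(default_factory=list)


def run(job: Job, nodes: list[str], fail_at: dict[int, str]) -> Job:
    """Run `job` on the first node, checkpointing every `checkpoint_every`
    steps. `fail_at` maps a step number to the node that fails just before
    executing that step; on failure the job resumes from the last checkpoint
    on the next spare node."""
    spares = list(nodes)  # copy so the caller's list is not modified
    active = spares.pop(0)
    while job.step < job.total_steps:
        if fail_at.get(job.step) == active:
            job.history.append(f"{active} failed at step {job.step}")
            if not spares:
                raise RuntimeError("no spare nodes left")
            active = spares.pop(0)
            # Progress since the last checkpoint is lost and must be redone.
            job.rework += job.step - job.last_checkpoint
            job.step = job.last_checkpoint
            continue
        job.step += 1
        if job.step % job.checkpoint_every == 0:
            job.last_checkpoint = job.step  # persist progress
    job.history.append(f"finished on {active}")
    return job


job = run(Job(total_steps=10, checkpoint_every=5), ["node-a", "node-b"], {7: "node-a"})
print(job.history)  # ['node-a failed at step 7', 'finished on node-b']
print(job.rework)   # 2
```

Checkpointing more often reduces rework after a failure but costs more time spent saving state. That trade-off applies to any checkpointing scheme.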

AI-Designed Chip

Google explained that AI was used to optimize the chip's own design, specifically its ALU circuits and floorplanning, in collaboration with its AlphaChip team. This reflects a growing trend: using AI not only to run workloads but also to design the hardware that will power the next generation of models.

SparseCore and New Features

Ironwood features the 4th generation of SparseCore accelerators, specialized for embeddings and collective operations, which are crucial in massive model architectures.
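Embedding lookups are a good example of the irregular work SparseCore targets. A handful of rows is gathered from a large table using data-dependent indices, then reduced into a single vector (often called an "embedding bag"). The plain-Python sketch below shows only the computation. The function name and the sum/mean modes are illustrative choices, not a SparseCore API.

```python
from typing import Sequence


def embedding_bag(table: Sequence[Sequence[float]], ids: Sequence[int],
                  mode: str = "sum") -> list[float]:
    """Gather the rows named by `ids` from `table` and reduce them to one
    vector. Illustrative only: not how SparseCore hardware implements it."""
    if not ids:
        raise ValueError("ids must be non-empty")
    dim = len(table[0])
    out = [0.0] * dim
    for i in ids:               # sparse, data-dependent gathers
        row = table[i]
        for d in range(dim):    # reduction across the gathered rows
            out[d] += row[d]
    if mode == "mean":
        out = [v / len(ids) for v in out]
    elif mode != "sum":
        raise ValueError(f"unknown mode: {mode}")
    return out


table = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]
print(embedding_bag(table, [1, 3]))          # [8.0, 10.0]
print(embedding_bag(table, [1, 3], "mean"))  # [4.0, 5.0]
```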

At the rack level:

- 64 TPUs per rack (16 trays of four TPUs each)

- 16 host CPU racks to coordinate operations

- Copper interconnects within each rack; racks are linked through OCS into a logical 3D mesh
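In a logical 3D mesh, each element has neighbors along three axes. As a hypothetical illustration, the sketch below assumes a 4×4×4 grid. That matches the 64-TPU rack size (64 = 4×4×4), but the source does not state this arrangement. The code computes one-hop neighbors, with an optional wrap-around that turns the mesh into a torus. The same logic applies whether the grid elements are chips or racks.

```python
def coords(index: int, dims: tuple[int, int, int]) -> tuple[int, int, int]:
    """Map a linear index to (x, y, z) in a row-major 3D grid."""
    _, y_dim, z_dim = dims
    return (index // (y_dim * z_dim), (index // z_dim) % y_dim, index % z_dim)


def neighbors(c: tuple[int, int, int], dims: tuple[int, int, int],
              wrap: bool = False) -> list[tuple[int, int, int]]:
    """Return coordinates one hop away along each axis. With wrap=False this
    is a plain mesh (edge elements have fewer neighbors); with wrap=True the
    edges connect around, as in a torus."""
    result = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(c)
            n[axis] += step
            if wrap:
                n[axis] %= dims[axis]
            elif not 0 <= n[axis] < dims[axis]:
                continue  # off the edge of a non-wrapping mesh
            result.append((n[0], n[1], n[2]))
    return result


DIMS = (4, 4, 4)  # hypothetical 64-element arrangement, an assumption
print(coords(63, DIMS))                            # (3, 3, 3)
print(len(neighbors((0, 0, 0), DIMS)))             # 3
print(len(neighbors((0, 0, 0), DIMS, wrap=True)))  # 6
```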

A Key Piece for Google Cloud

Ironwood will not be sold as standalone hardware. It is available exclusively through Google Cloud as part of its Cloud TPU offerings. This fits Google's model of building in-house infrastructure that powers its own services, such as YouTube, Gmail, and Search, where AI plays a central role, and that is also offered to third parties through the cloud.

For customers, Ironwood offers:

- Real-time inference for reasoning models

- Greater production stability, vital for generative AI agents

- Energy optimization and reliability at data center scale

Conclusion

With Ironwood, Google aims to strengthen its position in AI hardware at a time when NVIDIA's GB300 and other offerings set the pace in AI computing. NVIDIA pushes GPUs designed for both training and inference. Google, by contrast, is betting on a proprietary accelerator optimized specifically for large-scale inference, with an emphasis on reasoning and low latency.

The result is a system capable of delivering up to 42.5 exaflops with 1.77 PB of shared memory. It is built for the era of trillion-parameter models and reliable, efficient AI agents in production.

FAQs

How does Ironwood differ from earlier TPU generations?

It is specifically engineered for large-scale inference, whereas previous TPUs were more focused on training.

What role do Optical Circuit Switches (OCS) play?

They enable memory sharing across thousands of chips within a pod, allow scaling up to 9,216 TPUs, and support dynamic reconfiguration in case of failures.

What memory improvements does Ironwood feature?

Each chip includes eight stacks of HBM3e (192 GB) with a bandwidth of 7.3 TB/s, essential for serving LLMs and reasoning models.

Can Ironwood be purchased outside Google Cloud?

No. Like previous generations, Ironwood is exclusive to Google Cloud TPU clients.

via: ServeTheHome and Google