Google has officially unveiled Gemini 3, the third generation of its family of artificial intelligence models, with a clear message to the tech sector: the race is no longer just about writing texts or generating images, but about reasoning, tool usage, and acting as an autonomous agent in real-world environments.

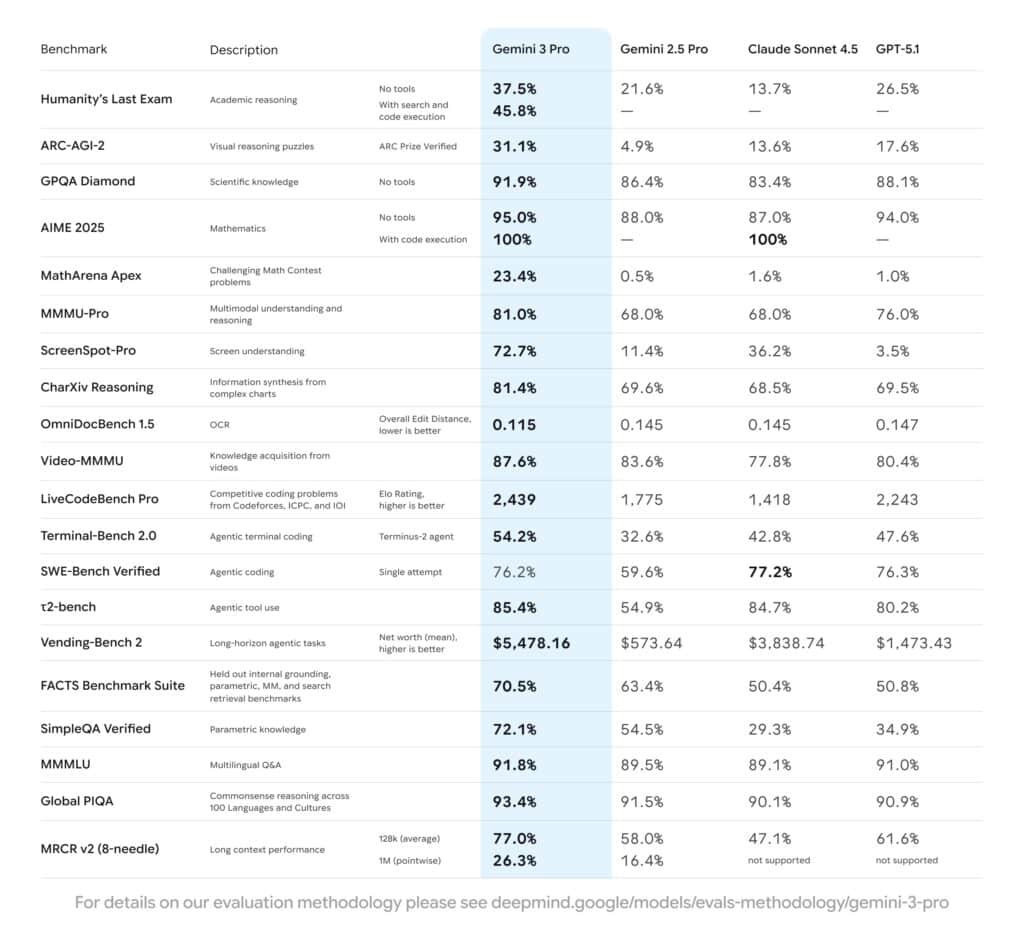

For a technical audience, the company has offered more than promises. It published a detailed benchmark table and launched a new agent-based development platform, Google Antigravity, which positions Gemini 3 as a “system operator” or “virtual engineer” rather than a simple conversational assistant.

From Gemini 1 to Gemini 3: two years of accelerated iteration

In less than two years, the Gemini line has evolved from Google’s response to early generalist generative models to a full family focused on agents and long-term planning.

- Gemini 1 introduced native multimodality and very wide context windows.

- Gemini 2 and 2.5 Pro built on that foundation with an agentic architecture that let models chain actions, and they led community rankings such as LMArena for months.

- Gemini 3 presents itself as a synthesis of both stages, reinforcing reasoning, robust tool use, and the ability to plan complex workflows.

The deployment is immediate: Gemini 3 Pro is available from day one in Search’s AI Mode, the Gemini app, the API for developers via AI Studio and Vertex AI, and it becomes the core engine of the new Antigravity platform.

Benchmarks: where Gemini 3 Pro improves and where the race tightens

For anyone closely following the evolution of frontier models, the Gemini 3 Pro benchmark table is the most interesting part of the announcement. Google compared its model against Gemini 2.5 Pro, Claude Sonnet 4.5, and GPT-5.1 across reasoning, multimodality, tool use, and planning tasks.

Academic and scientific reasoning

In demanding reasoning tasks, Gemini 3 Pro opens notable gaps:

- Humanity’s Last Exam (HLE), a doctoral-level reasoning test without tools: 37.5% correct versus 21.6% for Gemini 2.5 Pro, 13.7% for Claude Sonnet 4.5, and 26.5% for GPT-5.1.

- GPQA Diamond, focused on complex scientific knowledge: 91.9%, surpassing its closest rivals.

- ARC-AGI-2, visual reasoning puzzles verified by the ARC Prize: 31.1%, significantly higher than 4.9% for Gemini 2.5 Pro and 13–18% for other models.

For mathematics competitions, the picture is more nuanced. On MathArena Apex, Gemini 3 Pro reaches 23.4%, compared with less than 2% for the other models analyzed. On AIME 2025, however, the results are closer: 95% without tools, ahead of the 88–94% scored by its competitors, and 100% when code execution is allowed, tying Claude Sonnet 4.5.

Advanced multimodality

In multimodal reasoning, a historically strong point for Google, Gemini 3 Pro boasts:

- 81.0% in MMMU-Pro, a test combining images and text across multiple disciplines, ahead of Gemini 2.5 Pro, Claude Sonnet 4.5, and GPT-5.1.

- 87.6% in Video-MMMU, which measures understanding of video content, again ahead of the other advanced models.

- 72.7% in ScreenSpot-Pro, which tests understanding of screens and user interfaces, a dramatic improvement over Gemini 2.5 Pro’s 11.4%.

For OCR and complex documents (OmniDocBench 1.5), the model achieves an average edit distance of 0.115 (lower is better), ahead of the other compared models, which points to more robust reading of PDFs, screenshots, and scanned documents.
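To make the 0.115 figure concrete: an edit distance counts the character insertions, deletions, and substitutions needed to turn the model’s transcription into the reference text, and normalizing by length puts it on a 0–1 scale where lower is better. The sketch below assumes normalization by the longer string and a plain average over samples; OmniDocBench’s exact normalization and aggregation may differ, and the sample strings are invented for illustration.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance divided by the longer string's length; 0.0 means a perfect read."""
    longest = max(len(pred), len(ref))
    if longest == 0:
        return 0.0
    return levenshtein(pred, ref) / longest


def average_edit_distance(pairs) -> float:
    """Mean normalized edit distance over (prediction, reference) pairs."""
    return sum(normalized_edit_distance(p, r) for p, r in pairs) / len(pairs)


# Hypothetical OCR outputs paired with their ground-truth text.
pairs = [
    ("Invoice total: 1,250.00", "Invoice total: 1,250.00"),  # exact read
    ("Recieved on 12/03", "Received on 12/03"),               # "ei" misread as "ie"
]
print(round(average_edit_distance(pairs), 3))
```

Here one exact read and one read with two wrong characters out of 17 average out to about 0.059, so a score of 0.115 means roughly one character in nine needs correcting under these assumptions.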

Coding, agents, and tool use

Where Gemini 3 Pro aims to clearly stand out is in the agent and coding realm:

- LiveCodeBench Pro, which assigns an Elo rating in the style of competitive programming contests such as Codeforces or ICPC: Gemini 3 Pro scores 2,439, compared with 2,243 for GPT-5.1 and much lower values for the others.

- Terminal-Bench 2.0, evaluating tool use from a simulated terminal: 54.2% success rate, versus 32.6% for Gemini 2.5 Pro and 42.8–47.6% for Claude and GPT-5.1.

- τ2-bench, focused on agentic tool use: 85.4%, above its rivals.

- Vending-Bench 2, which tests long-term planning by having the model run a simulated vending machine business: average assets of about $5,478, far above the $573 of Gemini 2.5 Pro, $3,838 for Claude, and $1,473 for GPT-5.1.
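One rough way to read the LiveCodeBench Pro gap is through the standard Elo expected-score formula, which maps a rating difference onto a 400-point logistic scale. This sketch assumes the benchmark’s ratings behave like standard Elo ratings; because the two scores come from separate evaluations rather than direct matches between the models, the result is a heuristic, not a measured head-to-head win rate.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation of A against B on a 400-point logistic scale (0.5 = evenly matched)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


gemini_3_pro, gpt_5_1 = 2439, 2243  # LiveCodeBench Pro ratings reported in the article
print(f"{elo_expected_score(gemini_3_pro, gpt_5_1):.2f}")
```

Under that assumption, the 196-point gap corresponds to an expected score of roughly 0.76 for Gemini 3 Pro, a clear but not overwhelming edge.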

In SWE-Bench Verified, a key benchmark for code-fixing agents working in real repositories, the race is nearly tied: Gemini 3 Pro at 76.2%, Claude Sonnet 4.5 at 77.2%, and GPT-5.1 at 76.3%. The leap from Gemini 2.5 Pro (59.6%) is clear, but no frontier model emerges as a clear winner.
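Why call a one-point spread a tie? SWE-bench Verified has 500 tasks, so any pass rate measured on it carries sampling noise. The sketch below treats each task as an independent pass/fail trial and computes the binomial standard error. It assumes a single run per model and ignores run-to-run variance, so it is a back-of-the-envelope check, not a formal significance test.

```python
import math


def standard_error(p: float, n: int) -> float:
    """Binomial standard error of a pass rate p measured on n independent tasks."""
    return math.sqrt(p * (1.0 - p) / n)


N_TASKS = 500  # SWE-bench Verified contains 500 human-validated tasks
scores = {"Gemini 3 Pro": 0.762, "Claude Sonnet 4.5": 0.772, "GPT-5.1": 0.763}

for model, p in scores.items():
    half_width = 1.96 * standard_error(p, N_TASKS)  # approximate 95% interval
    print(f"{model}: {p:.1%} ± {half_width:.1%}")
```

Each approximate 95% interval comes out at about ±3.7 percentage points, several times the one-point spread between the three models. A paired comparison on the same tasks could be more sensitive, but it needs per-task results, which a summary score alone does not provide.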

Factual knowledge, multilingualism, and long context

In factual accuracy and grounding, the model shows:

- 70.5% in the FACTS Benchmark Suite, an internal test suite combining grounding, parametric knowledge, multimodality, and retrieval with search.

- 72.1% in SimpleQA Verified, well above the roughly 30–35% of Claude and GPT-5.1.

For common sense and multilingual tasks:

- 91.8% in MMMLU (multilingual Q&A) and 93.4% in Global PIQA, surpassing previous generations and slightly ahead of other advanced models.

And regarding long-context capacity:

- 77.0% in MRCR v2 (8-needle) at an average of 128,000 tokens of context.

- 26.3% in MRCR v2 at 1 million tokens, compared with 16.4% for Gemini 2.5 Pro; some competitors do not support that context length.

Overall, the data reinforces Google’s narrative: Gemini 3 Pro is not just an incremental step but an attempt to deliver a balanced model in reasoning, multimodality, and agent capabilities, with some areas where the competition remains on par or even slightly ahead.

Deep Think: more reasoning steps for truly difficult cases

For cases where accuracy outweighs latency, Google introduces Gemini 3 Deep Think, a specialized mode allowing the model more internal reasoning steps before responding.

In this mode, Google reports further gains on tests such as Humanity’s Last Exam, GPQA Diamond, and ARC-AGI-2, where it reaches 45.1% with code execution. Deep Think is not yet generally available: the company is still evaluating its safety and will release it first to Google AI Ultra subscribers.

Gemini 3 in products: search engine, app, cloud, and agents

Beyond the numbers, the deployment roadmap is aggressive:

- AI Mode in Search: Gemini 3 powers new dynamic interfaces with generative visual layouts, simulations, and interactive tools built “on the fly” based on the query.

- Gemini App: Google AI Pro and Ultra users will begin interacting with Gemini 3 Pro and, later, with Deep Think and advanced agent capabilities.

- AI Studio, Vertex AI, and Gemini Enterprise: developers and companies can integrate the model via API and managed services, focusing on agents, RAG, and business process automation.

- Google Antigravity: the new agent-centered development platform where Gemini 3 acts as a planning, coding, and browser/terminal management engine.

Antigravity is likely the most strategic component for the technical audience: it turns AI assistance into something closer to an agent runtime, in which agents coordinate to complete complex software tasks with direct access to the IDE, the command line, and the browser for end-to-end testing.

Safety, alignment, and the less glamorous side of the announcement

Google claims that Gemini 3 is its safest model to date. It has undergone the company’s broadest internal evaluation set and external analyses by specialized partners and public organizations. Key improvements include:

- Reduced sycophancy: fewer agreeable, people-pleasing responses when information is uncertain.

- Enhanced resistance to prompt injection and jailbreak techniques.

- Specific protections against malicious uses in cyberattacks.

For the tech press, a key detail is that the model has also been evaluated under Google’s own Frontier Safety Framework, and that Deep Think will not be publicly released until it passes additional safety evaluations.

Frequently asked questions about Gemini 3 for technical readers

What do the published benchmarks for Gemini 3 Pro really mean?

The benchmarks cover multiple facets: academic reasoning (Humanity’s Last Exam, GPQA Diamond), mathematics (AIME 2025, MathArena Apex), multimodality (MMMU-Pro, Video-MMMU, ScreenSpot-Pro), factual accuracy (FACTS, SimpleQA Verified), coding and agents (SWE-Bench Verified, LiveCodeBench Pro, Terminal-Bench 2.0, τ2-bench, Vending-Bench 2), and long context (MRCR v2). These are widely recognized tests, but the results come from Google’s own evaluations; independent assessments will be needed as third parties gain access to the model.

How does Gemini 3 compare in coding and agents to other advanced models?

In competitive coding and terminal-based tool use, Gemini 3 Pro clearly leads, with an Elo of 2,439 in LiveCodeBench Pro and a 54.2% success rate in Terminal-Bench 2.0. In SWE-Bench Verified, the gap with Claude Sonnet 4.5 and GPT-5.1 is minimal, indicating that the agent and coding domain remains highly competitive and no absolute winner has emerged yet.

What role does Google Antigravity play in the Gemini 3 ecosystem?

Antigravity is the layer that transforms Gemini 3 from a mere text model into a development platform centered on agents with direct access to editors, terminals, and browsers. Agents can plan complex software tasks, make code changes, run tests, and validate outputs under developer supervision. For engineering teams, this will be the key factor in whether Gemini 3 can truly boost productivity beyond incremental code snippet generation.
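The plan, change, test, and validate cycle described above can be pictured as a simple loop with a human approval gate. This is a minimal sketch, not Antigravity’s API: `Step`, `AgentRun`, `approve`, and `run_tests` are hypothetical names, the plan is hard-coded where a real system would get it from the model, and the actions are stubbed callables rather than real editor, terminal, or browser operations.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Step:
    description: str            # what the agent intends to do (hypothetical)
    action: Callable[[], bool]  # performs the change; returns True on success


@dataclass
class AgentRun:
    log: List[str] = field(default_factory=list)

    def execute(self, plan: List[Step], approve: Callable[[Step], bool],
                run_tests: Callable[[], bool]) -> bool:
        """Approve, act, then verify each planned step; stop at the first rejection or failure."""
        for step in plan:
            if not approve(step):    # developer supervision gate
                self.log.append(f"rejected: {step.description}")
                return False
            if not step.action():    # make the code change or run the command
                self.log.append(f"failed: {step.description}")
                return False
            if not run_tests():      # validate before moving to the next step
                self.log.append(f"tests failed after: {step.description}")
                return False
            self.log.append(f"done: {step.description}")
        return True


# Hypothetical plan with stubbed actions that always succeed.
plan = [
    Step("edit parser.py to handle empty input", lambda: True),
    Step("update CHANGELOG", lambda: True),
]
run = AgentRun()
ok = run.execute(plan, approve=lambda s: True, run_tests=lambda: True)
print(ok, run.log)
```

Stopping at the first rejected step or failed test keeps the developer in control and leaves a log showing where the run stopped; a production agent would more likely feed the failure back to the model and let it revise the plan.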

What should companies and developers watch for before deploying Gemini 3 in production?

Beyond raw performance, critical points include token-based cost in various modes (Pro and Deep Think), latency in agent workflows, alignment with internal policies and sector regulations, and compatibility with existing observability, security, and governance infrastructures. Independent evaluations of robustness, privacy, and security—especially in sensitive sectors like finance, health, or government—will also be essential.
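Token-based pricing interacts with agent workflows in a way that is easy to underestimate: if each step resends the accumulated context, input costs grow with every step. The sketch below assumes a flat per-million-token price for input and output, that each step’s output is appended to the context, and no prompt caching or separately billed reasoning tokens. The prices in the usage example are placeholders, not Google’s published rates.

```python
def agent_run_cost(base_context_tokens: int, step_output_tokens: list[int],
                   price_in_per_m: float, price_out_per_m: float) -> float:
    """Estimated cost of a multi-step agent run in which every step re-reads the growing context."""
    total = 0.0
    context = base_context_tokens
    for out in step_output_tokens:
        total += context * price_in_per_m / 1_000_000  # input: the full context so far
        total += out * price_out_per_m / 1_000_000     # output: this step's tokens
        context += out                                 # output joins the next step's context
    return total


# Placeholder rates in currency units per million tokens; NOT real Gemini 3 pricing.
PRICE_IN, PRICE_OUT = 1.0, 4.0
three_steps = agent_run_cost(10_000, [1_000, 1_000, 1_000], PRICE_IN, PRICE_OUT)
one_call = agent_run_cost(10_000, [3_000], PRICE_IN, PRICE_OUT)
print(f"three steps: {three_steps:.3f}, one call: {one_call:.3f}")
```

In this toy setup, producing the same 3,000 output tokens over three steps costs about twice as much as a single call, because the 10,000-token context is read three times.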

via: Gemini 3