The inference war is no longer decided solely by “how many tokens per second” a GPU can spit out on a short prompt. In 2026, the new battlefield is long context: models that read entire codebases, maintain memory across long-running sessions, and respond with low latency even as prompts grow to sizes that until recently seemed infeasible in production.

In this context, LMSYS (the team behind well-regarded projects and evaluations in the model-serving ecosystem) has published performance results for DeepSeek running on NVIDIA GB300 NVL72 (Blackwell Ultra), compared with GB200 NVL72. The message is clear: in a long-context use case (128,000 input tokens and 8,000 output tokens), the system reaches 226.2 TPS per GPU, 1.53× the GB200 figure at peak performance. The improvement is even more notable for user experience and for how performance degrades under latency constraints, two critical points for agents and programming assistants.

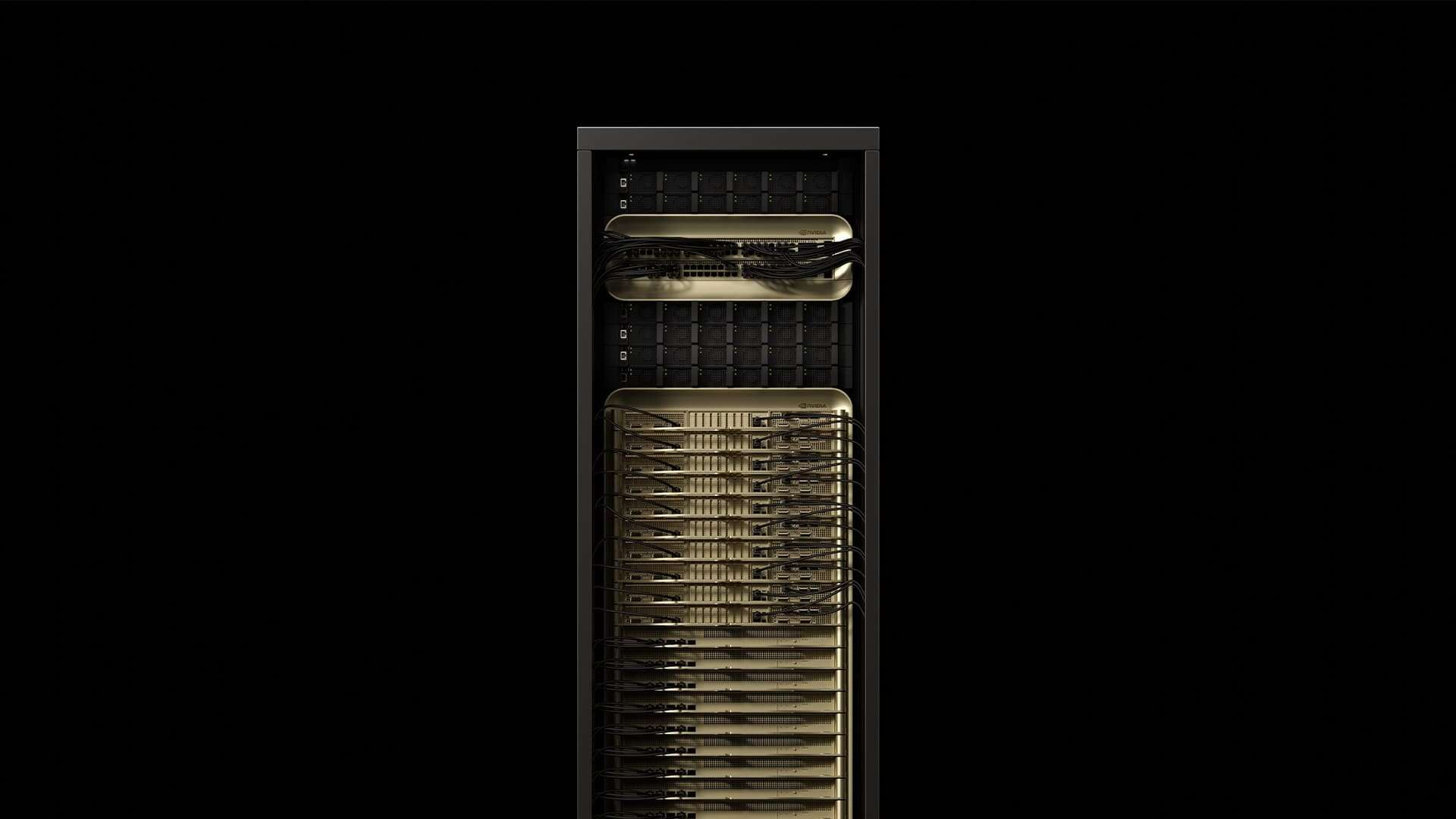

A full rack as a standard unit (not just a single GPU)

GB300 NVL72 isn’t an isolated card but a rack-scale system: 72 Blackwell Ultra GPUs coupled with 36 Grace CPUs, connected over NVLink to operate as a single inference “factory.” NVIDIA explicitly positions it as a platform for accelerating reasoning and attention-heavy workloads in demanding scenarios where memory capacity and bandwidth matter as much as compute.

This is precisely where long context changes the game: the bottleneck often moves to the KV cache (the memory the model uses to “remember” the context during generation) and to the available HBM (high-bandwidth memory) capacity, which determines how many simultaneous requests can be served without losing state.
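To get a feel for why the KV cache dominates at these lengths, here is a back-of-envelope sizing sketch. It assumes DeepSeek-style Multi-head Latent Attention (MLA), which caches one compressed latent vector plus a small RoPE key per token and per layer; the default dimensions come from the public DeepSeek-V3 model configuration and are assumptions here, not figures from the LMSYS report.

```python
"""Back-of-envelope KV cache sizing for one long-context request.

Assumption: DeepSeek-V3/R1-style MLA caches kv_lora_rank + qk_rope_head_dim
elements per token per layer. Defaults mirror the public DeepSeek-V3 config
and are illustrative, not values reported by LMSYS.
"""


def mla_kv_bytes_per_token(num_layers: int = 61,
                           kv_lora_rank: int = 512,
                           qk_rope_head_dim: int = 64,
                           bytes_per_elem: int = 2) -> int:
    """Bytes of KV cache one token occupies across all layers (2 = BF16)."""
    return num_layers * (kv_lora_rank + qk_rope_head_dim) * bytes_per_elem


def kv_cache_gb(context_tokens: int, bytes_per_token: int) -> float:
    """KV cache for a whole request, in decimal gigabytes."""
    return context_tokens * bytes_per_token / 1e9


if __name__ == "__main__":
    per_token = mla_kv_bytes_per_token()      # BF16 cache
    total_tokens = 128_000 + 8_000            # ISL + OSL from the benchmark
    print(f"{per_token} bytes/token")
    print(f"{kv_cache_gb(total_tokens, per_token):.2f} GB per request")
```

Under these assumptions a single 128,000 + 8,000 token request holds roughly 9.6 GB of KV cache in BF16 (about half that with an FP8 cache), so only a limited number of such sessions fit alongside the model weights.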

What LMSYS measured and why it matters

LMSYS evaluated DeepSeek-R1 in a typical long-context serving pattern: a huge input (ISL, input sequence length, of 128,000 tokens) and a meaningful output (OSL, output sequence length, of 8,000 tokens). To support it, they applied techniques that are textbook in modern serving, tuned to squeeze the most out of the hardware:

- Prefill-Decode (PD) disaggregation: running the prefill (prompt processing) phase and the decode (token generation) phase on separate groups of GPUs, so that compute-heavy prefills do not stall latency-sensitive decoding on the same node.

- Dynamic chunking: splitting the prefill into chunks and overlapping their processing to reduce TTFT (Time To First Token), the metric that captures how responsive the assistant feels.

- MTP (Multi-Token Prediction): a technique to improve individual user latency without reducing overall throughput, especially valuable for fast streaming responses.
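The chunking idea in the list above can be sketched as follows. This is an illustrative model, not LMSYS's implementation: it uses a fixed chunk size and a crude cost estimate in which each chunk's attention work grows with the number of tokens it can attend to.

```python
"""Sketch: splitting a long prefill into chunks.

Illustrative assumptions: fixed chunk size; a chunk's attention work is taken
as (tokens in the chunk) x (tokens visible to it, i.e. everything up to its end).
"""


def chunk_spans(total_tokens: int, chunk_size: int) -> list[tuple[int, int]]:
    """Return [start, end) token ranges covering the whole prompt."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [(start, min(start + chunk_size, total_tokens))
            for start in range(0, total_tokens, chunk_size)]


def relative_attention_work(spans: list[tuple[int, int]]) -> list[float]:
    """Attention cost of each chunk, normalized to the first chunk."""
    work = [(end - start) * end for start, end in spans]
    return [w / work[0] for w in work]


if __name__ == "__main__":
    spans = chunk_spans(128_000, 32_000)
    print(spans)
    print(relative_attention_work(spans))
```

With 32,000-token chunks the last chunk does roughly four times the attention work of the first, one reason a scheduler might vary chunk sizes dynamically instead of keeping them fixed; how LMSYS's dynamic policy picks sizes is not described here.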

The numbers: peak, per-user, and latency-sensitive scenarios

In its “Highlights” section, LMSYS summarizes the most striking results: 226.2 TPS/GPU on GB300 without MTP, versus 147.9 TPS/GPU on GB200. With MTP enabled, GB300’s total throughput stays about the same (224.2 TPS/GPU), but the real leap is in the metric most users care about: per-session perceived speed.

Table 1 — Key Results (long context 128,000/8,000)

| Metric | GB300 NVL72 | GB200 NVL72 | Difference |
|---|---|---|---|
| Peak without MTP (TPS/GPU) | 226.2 | 147.9 | 1.53× |
| With MTP (TPS/GPU) | 224.2 | 169.1 | 1.33× |
| TPS per user with MTP (TPS/user) | 43 | 23 | +87% |
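The “Difference” column can be recomputed directly from the reported values; this short check uses only the numbers in the table.

```python
# Recompute the "Difference" column of Table 1 from the reported values.
TABLE_1 = {
    # metric: (GB300 NVL72, GB200 NVL72)
    "Peak without MTP (TPS/GPU)": (226.2, 147.9),
    "With MTP (TPS/GPU)": (224.2, 169.1),
    "TPS per user with MTP": (43.0, 23.0),
}


def speedup(gb300: float, gb200: float) -> float:
    """Ratio of GB300 to GB200 for the same metric."""
    return gb300 / gb200


for metric, (gb300, gb200) in TABLE_1.items():
    ratio = speedup(gb300, gb200)
    print(f"{metric}: {ratio:.2f}x ({(ratio - 1) * 100:+.0f}%)")
```

For the three rows it reports 1.53x (+53%), 1.33x (+33%), and 1.87x (+87%), matching the table.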

LMSYS highlights another point that matters for production: when latency targets are matched and configurations are comparable, GB300 delivers between 1.38× and 1.58× more TPS/GPU depending on the scenario, with a larger advantage under latency-sensitive conditions, where degradation is more pronounced. In the balanced latency–throughput scenario, for example, the gain without MTP reaches 1.58×.

Table 2 — Gains under latency constraints (representative scenarios)

| Scenario | Without MTP (GB300 vs GB200) | With MTP (GB300 vs GB200) |
|---|---|---|
| High throughput (relaxed latency) | 1.384× (+38.4%) | 1.449× (+44.9%) |
| Latency–throughput balance | 1.58× | 1.40× |

Why GB300 wins: more memory supports more “live” sessions

In long-context scenarios, the system is usually constrained not by raw compute but by how many concurrent requests it can keep active in memory without retraction (preempting requests and discarding their cached state, which must later be recomputed). The key difference LMSYS points out is that GB300 has 1.5× more HBM per GPU (288 GB vs. 192 GB), which allows larger decode batch sizes and higher concurrency with a smaller penalty.
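A quick way to see why extra HBM can matter more than its raw ratio suggests: if a fixed slice of each GPU's memory goes to weights and runtime overhead, the room left for KV cache grows faster than total HBM. The reserved and per-session sizes in this sketch are hypothetical placeholders, and it treats each GPU in isolation, ignoring how real deployments shard weights and KV cache.

```python
"""Per-GPU KV-cache headroom under a fixed memory reservation.

Hypothetical assumptions: RESERVED_GB (weights, activations, runtime overhead
per GPU) and SESSION_KV_GB (KV cache of one long-context session) are
placeholders; each GPU is treated in isolation.
"""

HBM_GB = {"GB300": 288.0, "GB200": 192.0}  # per-GPU HBM as cited in the article
RESERVED_GB = 100.0   # hypothetical reservation per GPU
SESSION_KV_GB = 9.6   # hypothetical KV footprint of one 128K-token session


def kv_budget_gb(hbm_gb: float, reserved_gb: float) -> float:
    """Memory left for KV cache after the fixed reservation."""
    return max(hbm_gb - reserved_gb, 0.0)


def max_sessions(hbm_gb: float, reserved_gb: float, session_kv_gb: float) -> int:
    """Whole sessions whose KV cache fits in the remaining budget."""
    return int(kv_budget_gb(hbm_gb, reserved_gb) // session_kv_gb)


if __name__ == "__main__":
    for name, hbm in HBM_GB.items():
        budget = kv_budget_gb(hbm, RESERVED_GB)
        sessions = max_sessions(hbm, RESERVED_GB, SESSION_KV_GB)
        print(f"{name}: {budget:.0f} GB for KV cache, {sessions} sessions")
```

With a hypothetical 100 GB reserved per GPU, the KV budget rises from 92 GB to 188 GB (about 2×) even though HBM only grows 1.5×, and the number of 9.6 GB sessions that fit goes from 9 to 19.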

In other words, GB300 is not just “faster”: it holds up better when real traffic requires serving many sessions at once and the context cannot be squeezed into a tight memory budget.

TTFT: improving time to first token

The 128,000-token prefill phase is where many assistants become slow or seem to “pause to think.” To address it, LMSYS uses chunking and highlights a best case with a dynamic chunk size of 32,000 tokens, reaching a TTFT of 8.6 seconds on GB300. Without chunking, TTFT exceeds 15 seconds on both systems, which explains why these techniques have moved from “optional” to “essential” in serious deployments.
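For a single long prompt, chunking pays off mainly when chunks can be processed in an overlapping fashion. The toy model below assumes the prefill is split across equal-speed pipeline stages (an assumption for illustration; the article does not detail LMSYS's parallelism layout) and applies the standard pipeline fill-and-drain formula.

```python
"""Toy model: chunked prefill with pipeline overlap.

Hypothetical assumptions: equal-speed pipeline stages, equal-cost chunks,
free communication between stages, fixed total compute for the prompt.
"""


def ttft_seconds(total_compute_s: float, num_stages: int, num_chunks: int) -> float:
    """Time until the last chunk leaves the last stage.

    Each chunk spends total_compute_s / (num_stages * num_chunks) in each
    stage; the pipeline needs (num_chunks + num_stages - 1) such slots to
    fill and drain. num_chunks == 1 is the unchunked case (no overlap).
    """
    if num_stages < 1 or num_chunks < 1:
        raise ValueError("num_stages and num_chunks must be >= 1")
    slot = total_compute_s / (num_stages * num_chunks)
    return (num_chunks + num_stages - 1) * slot


if __name__ == "__main__":
    for chunks in (1, 2, 4, 8):
        print(chunks, ttft_seconds(12.0, num_stages=4, num_chunks=chunks))
```

With 12 s of hypothetical compute over 4 stages, 4 chunks bring TTFT from 12 s to 5.25 s. The model ignores per-chunk overheads and the rising attention cost of later chunks, which is why real systems sweep chunk sizes (such as the 32,000-token best case) rather than making chunks as small as possible.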

Table 3 — TTFT for long prefill (128,000 tokens)

| Configuration | GB300 | GB200 | Note |
|---|---|---|---|
| No chunking | 15.2 s | 18.6 s | Both systems above 15 s |
| Best case (dynamic chunk of 32,000 tokens) | 8.6 s | Not reported | About 43% lower than GB300 without chunking |

The elephant in the room: energy, costs, and the “rack price”

The comparison between GB300 and GB200 focuses on performance, but the market ultimately asks about total cost: energy, amortization, and deployment. Two parallel narratives emerge:

- NVIDIA claims, supported by SemiAnalysis InferenceX data and third-party analyses, that GB300 can offer up to 50× higher throughput per watt and up to 35× lower cost per token than Hopper in certain latency ranges, and it also points to better economics with long contexts.

- Meanwhile, even industry sources celebrating the performance boost exercise caution: there is still no fully public, comprehensive TCO comparison of GB300 vs. GB200, and deploying a rack of this scale involves non-trivial costs.

In summary, LMSYS data shows that Blackwell Ultra makes a strong entry into the more “agent-centric” (long context and latency) domain, but the real cost discussion will be settled with invoice figures and availability—not just graphs.

Frequently Asked Questions

What does “long context 128,000/8,000” mean in DeepSeek inference benchmarks like this one?

It refers to requests with 128,000 input tokens and 8,000 output tokens, a typical pattern for programming assistants that read large amounts of code before responding.

What is PD Disaggregation (Prefill-Decode), and why does it improve performance?

It runs prompt processing (prefill) and token generation (decode) on different nodes, so the two phases stop competing for the same GPUs. This reduces bottlenecks, improves scalability, and lowers latency.
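Disaggregation is not free: the KV cache built during prefill has to reach the decode pool. This estimate assumes a single, non-overlapped transfer; the 70,272 bytes per token correspond to DeepSeek-V3-style MLA in BF16 (61 layers × 576 elements × 2 bytes, taken from the public model configuration), and the 50 GB/s link speed is a hypothetical placeholder, not a GB300 specification.

```python
"""Rough cost of moving a prompt's KV cache from prefill to decode workers.

Hypothetical assumptions: one non-overlapped transfer; bytes per token assume
DeepSeek-V3-style MLA in BF16; the link bandwidth is a placeholder.
"""

MLA_BF16_BYTES_PER_TOKEN = 61 * 576 * 2  # 70,272 bytes (assumed model config)


def kv_transfer_seconds(prompt_tokens: int,
                        bytes_per_token: int,
                        link_gb_per_s: float) -> float:
    """Seconds to ship the KV cache over a link of link_gb_per_s (decimal GB/s)."""
    if link_gb_per_s <= 0:
        raise ValueError("link_gb_per_s must be positive")
    return prompt_tokens * bytes_per_token / (link_gb_per_s * 1e9)


if __name__ == "__main__":
    seconds = kv_transfer_seconds(128_000, MLA_BF16_BYTES_PER_TOKEN, link_gb_per_s=50.0)
    print(f"{seconds:.3f} s")
```

Under these assumptions, moving the KV cache of a 128,000-token prompt takes about 0.18 s, small next to multi-second prefill times; real systems can also overlap the transfer with the prefill of later chunks.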

What does MTP (Multi-Token Prediction) bring to production?

According to LMSYS, MTP can significantly boost per-user performance (TPS/User) while maintaining overall throughput, allowing each session to get faster responses without “stealing” capacity from the system.
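One common way to use MTP in serving, assumed here rather than confirmed by the article, is as a built-in draft model for speculative decoding: the extra prediction heads propose tokens and the main model verifies them in a single pass. Under the standard simplification that each draft token is accepted independently with probability p, the expected number of tokens per verification pass with k draft tokens is 1 + p + p^2 + ... + p^k, which this function computes.

```python
def expected_tokens_per_step(accept_prob: float, num_draft: int) -> float:
    """Expected tokens emitted per main-model verification pass.

    Simplifying assumptions: each drafted token is accepted independently
    with probability accept_prob, acceptance stops at the first rejection,
    and the verification pass always contributes one token of its own.
    """
    if not 0.0 <= accept_prob <= 1.0:
        raise ValueError("accept_prob must be in [0, 1]")
    if num_draft < 0:
        raise ValueError("num_draft must be non-negative")
    # 1 + p + p^2 + ... + p^k, summed directly so p == 1 needs no special case
    return sum(accept_prob ** i for i in range(num_draft + 1))


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(k, round(expected_tokens_per_step(0.8, k), 3))
```

For example, with p = 0.8 and one draft token, each pass yields 1.8 tokens on average, which lifts per-user speed if verification costs about the same as an ordinary decode step; the actual acceptance rates in the LMSYS runs are not given here.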

Why is HBM memory so critical in long context?

Because the KV cache grows with the context, and if it doesn’t fit, the system must evict state or retract requests, which degrades latency and throughput. More HBM allows more real concurrency without those penalties.

via wccftech