Backed by NVIDIA, the startup launches a solution designed to ease pressure on HBM and lower inference costs for generative models

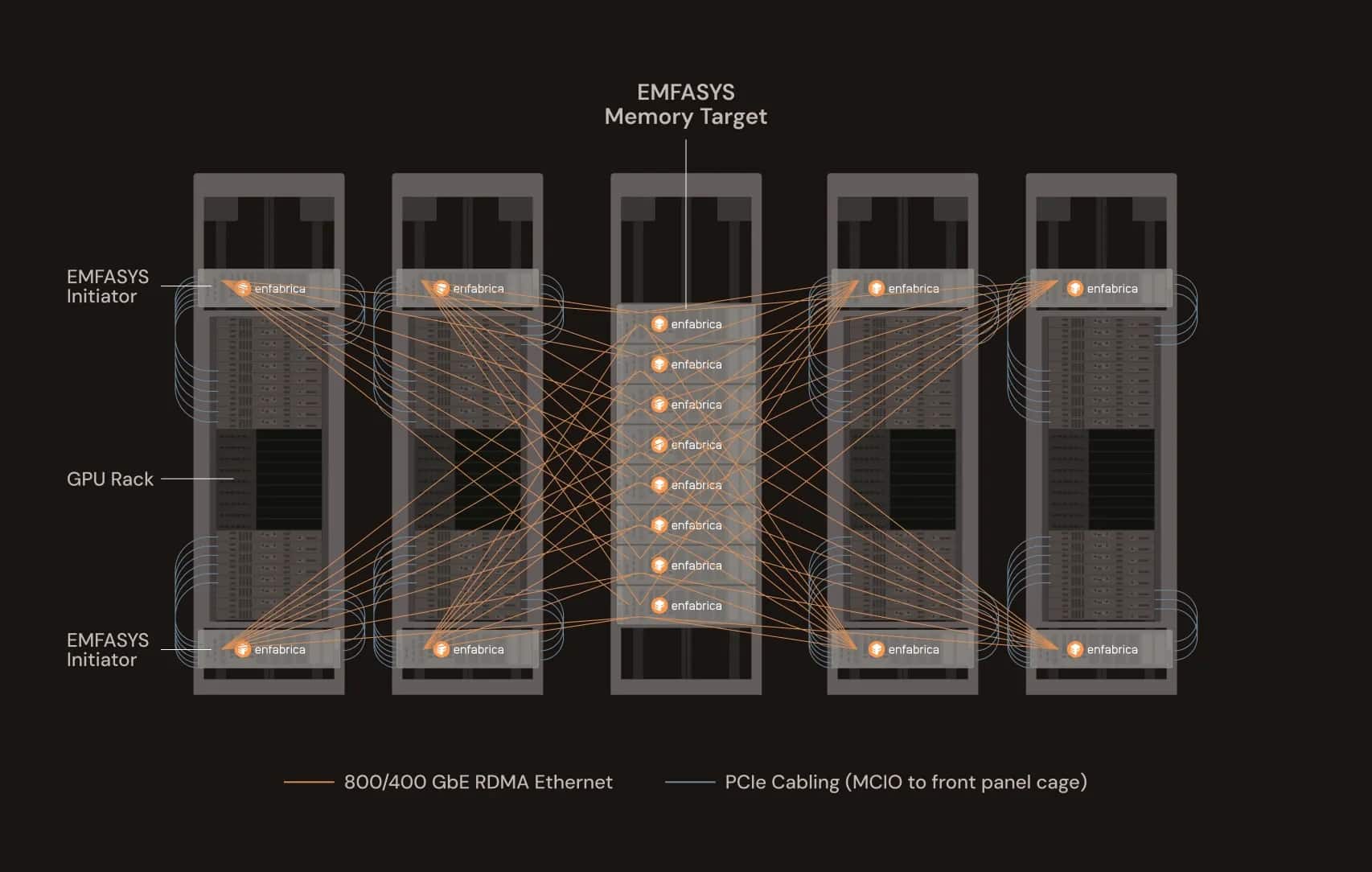

Enfabrica Corporation has announced Emfasys, which it describes as the first commercial elastic memory system for artificial intelligence built entirely on Ethernet. The architecture aims to improve efficiency in large-scale inference workloads by expanding GPU server memory capacity with network-attached DDR5 DRAM, without changes to the existing system infrastructure.

Designed to address the scaling challenges posed by large language models (LLMs), Emfasys offers up to 18 TB of DDR5 memory per node, accessible over standard 400 and 800 GbE connections using technologies such as RDMA and CXL. According to the company, the solution can cut token generation costs by up to 50%, especially in multi-turn workloads, workloads with expanded context windows, and autonomous agent inference.
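To put the 18 TB figure in perspective, the following back-of-envelope sketch estimates how many tokens of key-value (KV) cache such a pool could hold. The model dimensions (80 layers, 8 KV heads, head dimension 128, 16-bit values) are hypothetical illustration values and do not come from Enfabrica; the formula is the usual KV-cache sizing for a transformer, not a description of how Emfasys allocates memory.

```python
# Back-of-envelope KV-cache sizing. All model dimensions below are
# hypothetical illustration values, not figures from Enfabrica.

def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_value: int = 2) -> int:
    """Bytes of key+value cache that one token occupies across all layers."""
    # Factor 2: one key vector and one value vector per layer and KV head.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def tokens_that_fit(capacity_bytes: int, bytes_per_token: int) -> int:
    """Whole tokens of KV cache that fit in a given memory capacity."""
    return capacity_bytes // bytes_per_token


TB = 10**12  # decimal terabyte

# Assumed model shape: 80 layers, 8 KV heads, head dim 128, 16-bit values.
per_token = kv_cache_bytes_per_token(80, 8, 128, 2)
pool_tokens = tokens_that_fit(18 * TB, per_token)

print(f"KV cache per token: {per_token / 1024:.0f} KiB")
print(f"Tokens held in 18 TB: {pool_tokens:,}")
```

Under these assumptions each token costs 320 KiB of cache, so the pool holds tens of millions of tokens of context. A different model shape or quantization changes the result proportionally.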

“AI inference faces a bandwidth scaling issue with memory and a margin stacking problem,” says Rochan Sankar, CEO of Enfabrica. “As models become more retentive and less conversational, memory access needs a new approach. Emfasys is our answer to that challenge.”

A New Paradigm in Memory Architecture

The technology is based on the ACF-S SuperNIC chip, which delivers 3.2 Tbps of throughput and connects GPU servers to DDR5 memory via the CXL.mem protocol. The architecture acts as an “Ethernet memory controller”: data is transferred without CPU intervention, with latencies measured in microseconds, far below what flash storage systems offer.
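As a rough illustration of what these link speeds imply, the sketch below computes the ideal time to move a block of cached context at line rate. The 10 GB payload is a hypothetical value, and the calculation ignores latency, protocol overhead, and contention, so real transfers would take longer.

```python
# Ideal line-rate transfer time. The payload size is a hypothetical
# example; overheads and contention are deliberately ignored.

def transfer_seconds(num_bytes: float, link_gbps: float,
                     efficiency: float = 1.0) -> float:
    """Seconds to move num_bytes over a link of link_gbps gigabits/s."""
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


GB = 10**9  # decimal gigabyte
payload = 10 * GB  # assumed size of one session's cached context

links = [("400 GbE", 400), ("800 GbE", 800), ("3.2 Tbps aggregate", 3200)]
for name, gbps in links:
    ms = transfer_seconds(payload, gbps) * 1e3
    print(f"{name}: {ms:.0f} ms")
```

The `efficiency` parameter is a simple knob for modelling usable bandwidth below line rate, for example `efficiency=0.9`.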

The system includes remote memory software compatible with RDMA and standard operating environments, so it can be deployed without redesigning existing hardware or software stacks. This approach raises utilization of compute resources, reduces the waste of costly and scarce HBM, and alleviates bottlenecks in multi-tenant or high-intensity environments.

Applications for the New Generation of Generative AI

The rapid growth of generative and agentic models, which demand 10 to 100 times more resources than earlier LLM deployments, is straining global AI infrastructure. Enfabrica positions Emfasys as a scalable solution for cloud environments where tokens per second and context memory determine total cost of ownership (TCO).

Early customers are already testing the technology. The company has not yet announced a general availability date; the solution is currently in evaluation and pilot phases. Enfabrica is also a member of the Ultra Ethernet Consortium (UEC) and contributes to the emerging Ultra Accelerator Link (UALink) standard.

A Startup with Strategic Backing

The announcement follows the $125 million Series B funding round the company closed in 2023, with participation from Sutter Hill Ventures and NVIDIA. In April 2025, Enfabrica began sampling its ACF-S chip; in February, it had opened a new R&D center in India to accelerate AI networking solutions.

With Emfasys, Enfabrica aims to lead a new segment of distributed memory infrastructure for generative AI, where efficient memory access could be the key difference between economic feasibility and resource collapse in the next wave of cognitive applications.

via: enFabrica