The proliferation of deepfakes has raised alarms in the financial and payment sectors. According to Signicat's report "The Battle Against AI-Driven Identity Fraud", deepfake fraud attempts have increased by 2,137% over the last three years, forcing companies to strengthen their security measures.

The study, based on surveys of over 1,200 security managers in seven European countries, including Spain, identifies account takeover as the main risk for customers in the financial sector, followed by credit card fraud and phishing. For the first time, deepfakes rank among the three main types of digital identity fraud.

Types of deepfake attacks: presentation and injection

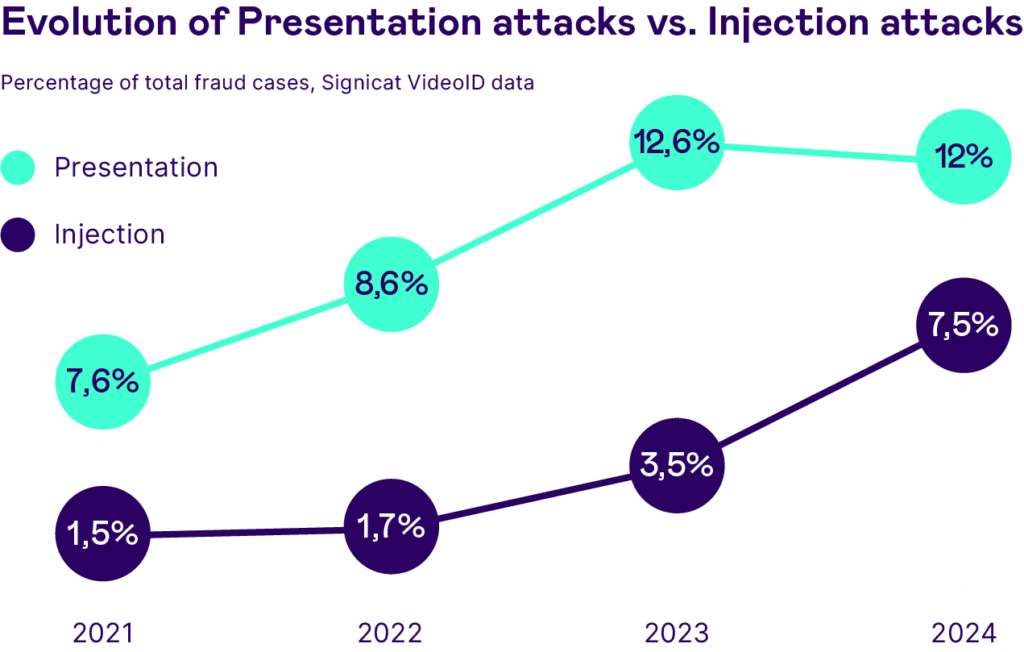

Cybercriminals have refined their techniques, and deepfake attacks now fall into two categories:

- Presentation attacks: scammers use masks, makeup, or screens playing a deepfake in real time in front of the camera to impersonate someone and commit fraud, such as account takeovers or fraudulent loan applications.

- Injection attacks: attackers use malware or pre-recorded video files to feed manipulated content directly into the verification software, compromising identity verification processes at banks, fintechs, and telecommunications companies.

As these techniques become more sophisticated, traditional detection systems are struggling to combat this growing threat.

Deepfake: a key concern in financial fraud

The Signicat report reveals that 42.5% of financial fraud attempts already use artificial intelligence. In just three years, deepfakes have gone from a rarity to one of the most common techniques for digital identity fraud.

Pinar Alpay, Chief Product & Marketing Officer at Signicat, emphasizes the gravity of the situation: “Three years ago, deepfake attacks accounted for only 0.1% of fraud attempts. Today, they reach 6.5%, meaning one in every 15 cases. It’s an alarming figure. Fraudsters are using AI in ways that traditional systems can no longer detect.”

Alpay highlights the need to implement advanced solutions: “Organizations must adopt a multi-layered protection strategy that combines artificial intelligence, biometric verification, and facial authentication. The coordination of these tools is key to combating these frauds.”

A dangerous lag in detection tools

Despite the rise in deepfake fraud, only 22% of financial institutions have implemented artificial intelligence-based detection tools. This shortcoming exposes many companies to increasingly sophisticated attacks.

The Signicat report urges financial and payment entities to strengthen their security measures by updating their detection systems, training employees and customers about the risks, and adopting advanced fraud prevention technologies.

A call to action to combat digital fraud

The growth of deepfakes is part of a broader trend of AI-driven identity fraud. Cybercriminals have found these technologies an effective way to exploit vulnerabilities in financial systems.

Updating prevention tools, raising user awareness, and employing AI-based verification technologies are crucial steps to stop this threat from spreading further. The fight against digital fraud requires a coordinated, technologically advanced response that can keep pace with the rapid evolution of deepfake attacks.