Artificial intelligence has changed the way we work, communicate, and even learn. But behind every technological advancement lies an increasingly heated debate: what happens to user data? Now it’s Anthropic, the company behind Claude AI, that finds itself at the center of controversy after announcing changes to its privacy policies and terms of service.

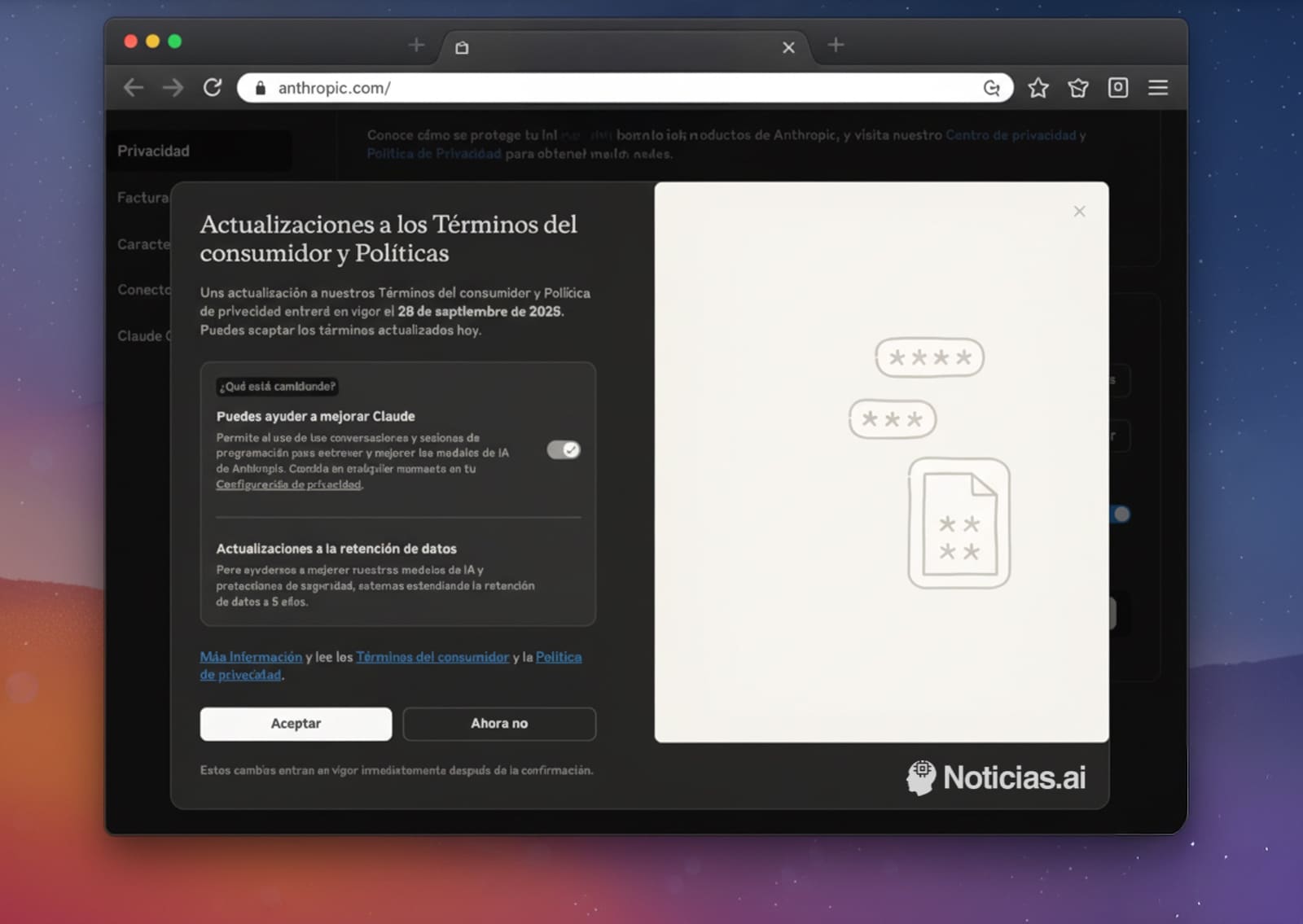

Starting September 28, 2025, conversations with Claude on its individual plans may be used to train Anthropic’s AI models. This will happen by default unless users manually disable the option in their privacy settings.

From Privacy Promise to Strategic Shift

Until now, Anthropic had distinguished itself from other major companies like OpenAI or Google by promising that Claude AI did not use user interactions as training material. That promise has now been broken.

The company explains that this change aims to “strengthen defenses” against abuse, fraud, and harmful content generation, while also improving the quality and safety of its models. However, the method of implementation, an opt-out system in which users must manually prohibit data collection, raises concerns.

How Does Voluntary Opt-Out Work?

The new process is simple but not very visible. Users need to go to the privacy settings and uncheck the box labeled “You can help improve Claude,” which is enabled by default.

If users don’t do this, their data will be retained by Anthropic for up to five years. This unusually long retention period has been one of the main points of criticism from digital privacy experts.

Who Is Affected by These Changes?

The new policy applies to Claude Free, Pro, and Max plans. It excludes enterprise services and contracts such as Claude for Work, Claude Gov, Claude for Education, or API integrations with third-party platforms, including Amazon Bedrock and Google Cloud Vertex AI.

In practice, millions of individual and professional users will be affected unless they explicitly choose to opt out.

A Step Back in Data Protection?

Anthropic’s move echoes that of other tech companies that have introduced similar changes under the guise of security. Google and Microsoft have been accused of “slipping in” AI features across their services with a similar approach, one in which active consent is absent.

The issue, according to digital law experts, is not that companies are improving their models but that they do so at the expense of users’ privacy and autonomy. “Opt-out systems rely on the inaction of the average user, who doesn’t read the fine print or lacks the technical knowledge to change settings,” explains a European data protection consultant.

Furthermore, the five-year retention period raises another concern. “Storing data for such a long time increases the risk of leaks or misuse, even if anonymization processes are applied,” adds the expert.

What Does Anthropic Say?

The company maintains that it does not sell user data to third parties and employs automated tools to filter or obscure sensitive information. “Our goal is to enhance the security and usefulness of Claude,” it states in its communications.

However, this argument does not convince everyone. Amid growing distrust of big tech firms, the move is seen as a step backward in building an ethical and transparent AI.

Contrasting Others’ Approaches

The debate is not occurring in isolation. While Anthropic introduces changes perceived as compromising privacy, Vivaldi, the alternative web browser, recently announced that it will not add generative AI features to its software. According to CEO Jon von Tetzchner, doing so would be “turning active web exploration into passive consumption.”

The comparison is inevitable: some companies strengthen their commitment to privacy, while others choose to monetize user data as fuel for AI development.

Legal Landscape: A Minefield

This shift comes amidst heightened regulation. In the European Union, the implementation of the AI Act and the long-standing GDPR require companies to justify their use of personal data and obtain informed consent.

In the United States, although there isn’t a strict federal framework, class-action lawsuits against companies like OpenAI, Meta, and Anthropic point toward a future where courts could set important legal precedents.

If it turns out that the company previously used unlicensed books or content — as has been alleged in some cases — this new move could provide additional ammunition for critics.

Innovation or Unnecessary Risk?

The dilemma is clear: AI needs data to improve, but that data belongs to the people who generate it. Every message, conversation, and document shared with a chatbot is part of a user’s digital life.

For some, Anthropic’s decision seems like a logical step in the race to develop more powerful models. For others, it represents a dangerous abandonment of individual privacy.

What’s certain is that the battle over data control will shape AI’s evolution in the coming years. And what is an opt-out policy today may become an unavoidable requirement tomorrow.

Frequently Asked Questions (FAQ)

1. Can I prevent Claude from using my data for training?

Yes. Go to the privacy settings and uncheck the “You can help improve Claude” option, which is enabled by default.

2. How long does Anthropic keep user data?

Up to five years, according to the new privacy policy.

3. Which Claude plans are affected by this?

All individual plans: Free, Pro, and Max. Enterprise plans and API integrations are excluded.

4. What are the risks of accepting the new policy?

Your conversations may be stored for an extended period and used to train AI. Although Anthropic claims to anonymize the data, there’s still a risk of leaks or misuse.