At the Hot Chips 2025 conference, the startup Celestial AI presented one of the most anticipated advances in AI infrastructure: the Photonic Fabric Module. This optical interconnect system promises to revolutionize how chiplets, large GPUs, and next-generation accelerators are connected.

Facing the physical and energy limits of traditional electrical interconnects, Celestial proposes moving to silicon photonics. The company says this approach delivers terabytes per second of bandwidth with nanosecond-scale latency, lower energy consumption, and the ability to scale clusters of thousands of XPUs across multiple racks.

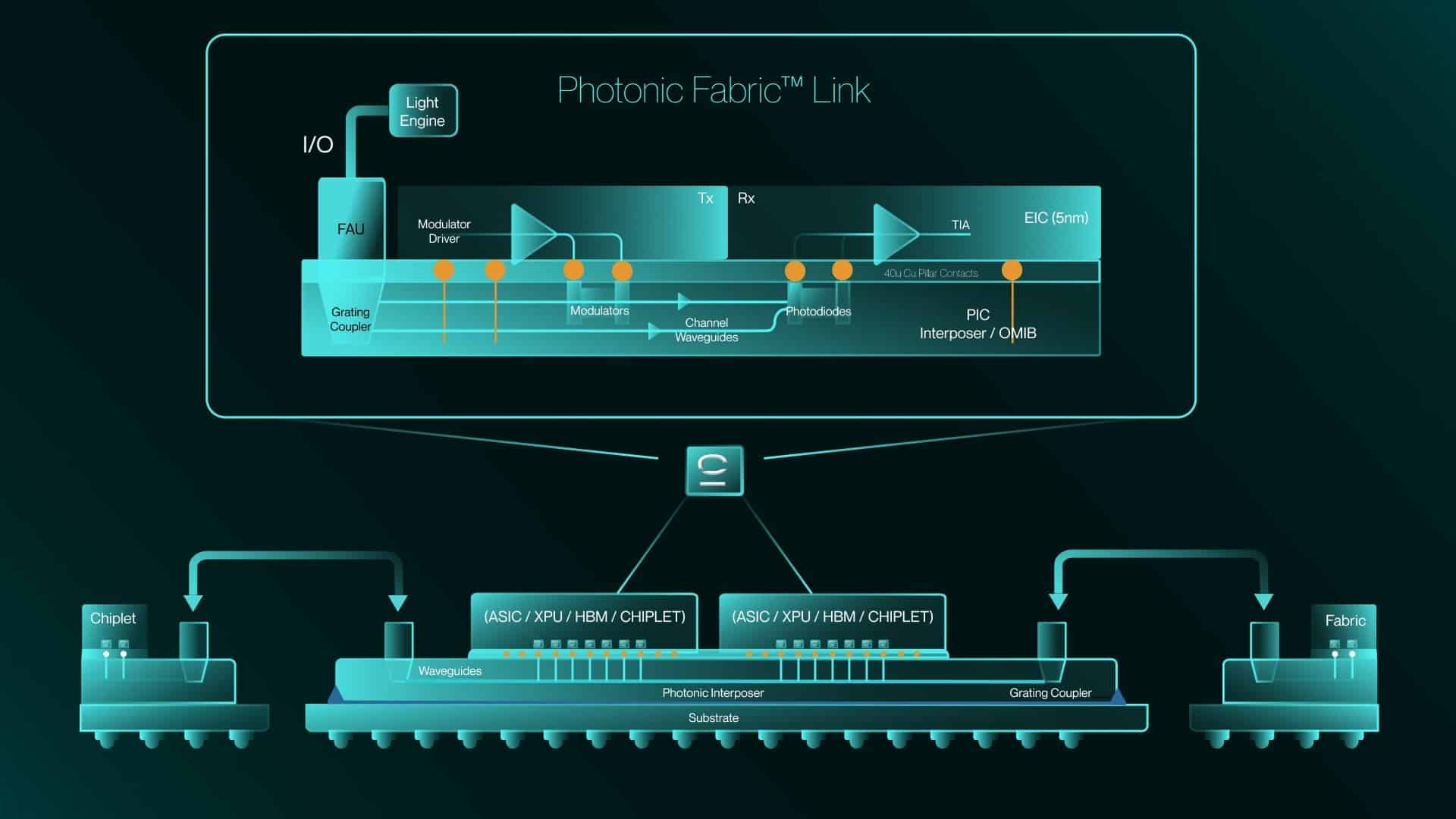

Celestial AI’s approach goes beyond the traditional co-packaged optics (CPO) used in networking switches such as Broadcom’s by integrating a silicon photonic layer with both active and passive components. The company designs its own optical MAC (OMAC) for reliability and service functions, replaces ring modulators with electro-absorption modulators (EAMs) that it describes as more thermally efficient, and places optical I/O in the middle of the ASIC rather than at its edge. This frees up the die edge for HBM memory and other electrical connections. The result is more flexible and scalable packaging that can house large arrays of interconnected chiplets with integrated photonics.

Celestial showed how its Photonic Fabric Link (PFLink) integrates into CoWoS-L configurations, connecting chiplets through an Optical Interconnect Multichip Bridge (OIMB). Manufacturing optical interfaces reliably is challenging, and the company says it has developed dedicated packaging solutions for this. The Gen1 Photonic Fabric Module is already used in a 16-port switch with attached memory. Celestial has also completed four successful tapeouts, which it presents as evidence that the technology is ready for real-world deployment.

Celestial’s vision addresses a critical issue: the mismatch between the growth of compute and the growth of interconnect capacity. Compute capability keeps growing rapidly, but electrical I/O has to leave the package through its edges, so it scales only linearly with the package perimeter. Photonic Fabric aims to break this bottleneck, allowing dozens of chiplets and entire racks to be connected as if they were a single “Super XPU.”
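A toy calculation makes the perimeter argument concrete. This is a minimal sketch that assumes a square package in which compute capacity scales with area and electrical edge I/O scales with perimeter. The function name and the unitless side lengths are hypothetical illustrations, not Celestial AI figures.

```python
# Toy model (assumption): a square package of side length `side`, arbitrary units.
# Compute capacity is proxied by area; electrical edge I/O is proxied by perimeter.

def edge_io_per_unit_compute(side: float) -> float:
    """Return perimeter / area for a square, i.e. edge I/O available per unit of compute."""
    area = side * side        # compute proxy grows with the square of the side
    perimeter = 4 * side      # edge ("shoreline") available for electrical I/O
    return perimeter / area   # simplifies to 4 / side

if __name__ == "__main__":
    for side in (1, 2, 4, 8):
        ratio = edge_io_per_unit_compute(side)
        print(f"side={side}: area={side * side}, perimeter={4 * side}, "
              f"I/O per unit compute={ratio:.3f}")
```

Each doubling of the side quadruples the compute proxy but only doubles the edge available for I/O, so I/O per unit of compute is halved. Placing optical I/O across the die area instead of only along its edge is how Celestial says it escapes this limit.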

Key benefits include scaling AI models to longer contexts and larger training batches; more efficient inference, with lower energy costs and higher compute density; and a lower total cost of ownership (TCO) in data centers, which is particularly important for making generative AI models profitable.

With a strong financial foundation, Celestial AI closed a $250 million round in March 2025 to accelerate its Photonic Fabric deployment. It acquired Rockley Photonics’ patent portfolio, enhancing its photonic IP, and boasts industry-leading advisors like Lip-Bu Tan and Diane Bryant. The company was named a “Start-Up to Watch” by the Global Semiconductor Alliance in 2024.

In summary, Celestial AI’s Photonic Fabric exemplifies the industry’s shift toward radical solutions in response to AI’s explosive growth. Replacing electrical links with optical ones is more than a technological step: it is a paradigm shift that could reshape data center architecture over the coming decade. With its roadmap, strategic partners, and substantial funding, Celestial AI is positioned to become a major player in pushing AI past the limits of electrical interconnects.


Frequently Asked Questions

What is Celestial AI’s Photonic Fabric?

It’s an optical interconnect technology based on silicon photonics that enables AI compute to scale within a package and across multiple racks, with bandwidth measured in terabytes per second and latency in nanoseconds.

What problems does it solve?

The bottleneck caused by electrical connections, which can no longer keep pace with the growth of AI models or multi-die systems.

Where is it applied?

In massive GPUs, AI accelerators, chiplet interconnects, and multi-XPU clusters in data centers.

Is it ready for production?

According to the company, yes: Celestial AI has completed four tapeouts and already uses its Gen1 Photonic Fabric Module in a 16-port switch with attached memory.

Sources: LinkedIn, ServeTheHome, Celestial.ai