Madrid. — During the Hot Chips 2025 conference, NVIDIA provided more details about the GB10 Grace Blackwell SoC and the DGX Spark system, marking what many analysts call a new phase in their AI strategy focused on bringing data center power to personal workspaces.

These products promise to deliver high-performance capabilities in more compact formats, enabling prototyping, fine-tuning, and running AI models with capabilities previously reserved for large servers.

Below are the known specifications, what these devices entail, their advantages, limitations, and how they fit into the current technological landscape.

Main Technical Features of the GB10 SoC and DGX Spark

Based on the disclosed specifications:

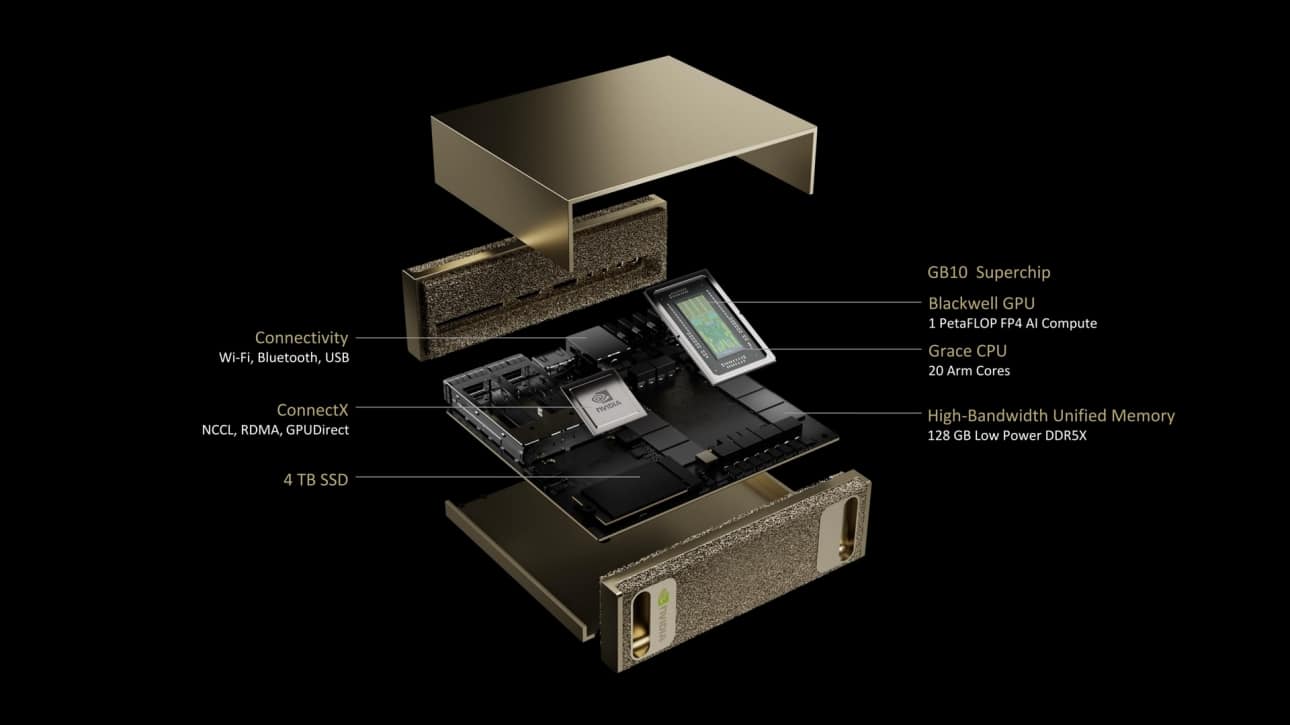

- The GB10 is a multi-dielet SoC (dielets) combining an Arm CPU with a GPU based on NVIDIA’s Blackwell architecture.

- Manufacturing: both dies (CPU and GPU) are produced using a 3 nm TSMC process, with packaging via a 2.5D interposer to connect the components.

- CPU: 20 Arm v9.2 cores, organized into two clusters of 10 cores each.

- GPU: all key features of Blackwell, including support for FP4 (low precision allowing more operations per watt).

- Unified coherent memory: offers 128 GB of system memory (LPDDR5X) with significant bandwidth (≈ 301 GB/s) and up to 4 TB of NVMe storage in some systems.

- Theoretical performance: up to 1 petaFLOP in FP4. For FP32 (traditional precision), it provides approximately 31 teraflops.

- Power consumption (TDP): around 140 watts for the entire chip under normal operational conditions.

The DGX Spark system will house this GB10 and offer it as a “personal supercomputer” (desktop AI supercomputer). Collaborations with OEMs like ASUS, Dell, Lenovo, HP, among others, have been announced to produce variants within this format.

What DGX Spark with GB10 Enables

Here are some real-world uses and clear advantages:

- Local prototyping and fine-tuning of large models: With 128 GB of coherent memory, it’s feasible to work on models with tens of billions of parameters for lightweight training or fine-tuning before deployment.

- Energy-efficient inference: Thanks to Blackwell architecture and FP4 support, inferences can be run with lower power consumption, making it useful for researchers, developers, and small teams without access to large data centers.

- Local development with flexible deployment: models developed on DGX Spark can be easily deployed on DGX Cloud or other NVIDIA accelerated infrastructures with minimal code changes, facilitating local testing and subsequent scaling.

- Connectivity and expansion: Using networks like ConnectX-7/-C2C (chip-to-chip), multiple units can be connected to handle even larger models.

Limitations and Open Questions

While promising, there are aspects to consider:

- Price and availability: Although DGX Spark is now in reservation phase, initial prices have not been publicly confirmed across all markets and may vary significantly.

- Heat and noise: 140 W is substantial for a desktop setup. Effective cooling solutions will be necessary, potentially increasing noise levels or requiring well-ventilated environments.

- Size of real models vs. theoretical capabilities: While models with tens or hundreds of billions of parameters are discussed, achieving this in practice often involves quantization or reduced precision techniques to efficiently utilize hardware.

- Competition with cloud alternatives: For users already reliant on cloud infrastructure, local hardware may not provide sufficient benefits in terms of space, electricity, and support, especially at large scales.

- Software ecosystem and compatibility: Though NVIDIA offers a robust platform (DGX OS, CUDA libraries, etc.), adapting new AI libraries, models, framework versions, and ensuring driver compatibility can be challenging.

Strategic Implications

The unveiling of GB10 and DGX Spark signals several strategic movements by NVIDIA:

- Hardware diversification: Not everything is centered on massive data centers; bringing some of that power to desktops, labs, universities broadens the base of AI developers.

- Broader Blackwell ecosystem: Blackwell is no longer just for mega-GPU servers but is evolving into a scalable family ranging from SoCs like GB10 to chips for entire data centers.

- Democratizing access to powerful AI: Enabling researchers, startups, academic institutions, and mid-sized companies to access potent hardware locally reduces dependence on the cloud, improves latency, privacy, and lowers operational costs.

- Competitive pressure: Competitors like AMD, Intel, etc., will need to respond with their own SoC, mini-server, or local AI PC offerings to stay relevant in the research or edge/desktop AI markets.

Conclusion

The GB10 superchip and DGX Spark system mark a significant step in NVIDIA’s vision of AI—not just existing in data centers but also in desktops, laboratories, and development studios. With Blackwell capabilities, a high-performance MediaTek CPU, unified memory, FP4 support, and scalable networking, they bridge the gap between once-considered “infrastructure” hardware and everyday AI development tools.

For AI model researchers, developers, and organizations needing local prototyping, these innovations open real possibilities. However, we’ll have to see when prices decrease, availability improves, and support details are refined.

Frequently Asked Questions

What is NVIDIA’s GB10 SoC?

It’s a superchip combining an Arm-based CPU (20 cores) with a Blackwell GPU, built on a 3 nm process by TSMC, designed to deliver data center performance in a desktop or workstation form factor.

What is DGX Spark and what does it do?

DGX Spark is a NVIDIA system that houses the GB10, intended as a “personal AI supercomputer” for researchers, developers, and local AI model prototyping, with 128 GB of unified memory.

How many parameters can it handle locally in AI models?

With 128 GB of unified memory, DGX Spark is estimated to fine-tune models up to around 70 billion parameters and run inferences on even larger models, especially using reduced precision like FP4.

How does its FP4 performance compare to alternatives?

The projected performance—up to 1 petaFLOP in FP4—places it among the highest for desktop setups, approaching high-end discrete GPUs. Actual performance will depend on cooling, software optimization, and workload specifics.

via: Server The Home