Microsoft has deployed the first large-scale cluster with NVIDIA GB300 NVL72 for OpenAI workloads. This is not a pilot or a proof of concept: the company confirms more than 4,600 GB300 NVL72 systems deployed, with Blackwell Ultra GPUs interconnected through the new NVIDIA InfiniBand Quantum-X800 network. This move marks the beginning of a new era in AI infrastructure that, according to Redmond, allows training in weeks what used to take months, and opens the door to models with hundreds of trillions of parameters (in American English, trillions).

The ambition extends beyond this milestone. Microsoft emphasizes that this cluster is the first of many: its roadmap includes hundreds of thousands of Blackwell Ultra GPUs distributed across its AI data centers worldwide. The goals are twofold: to accelerate cutting-edge model training and to improve inference performance in production with longer context windows, more responsive agents, and multimodality at scale.

“This co-engineered system provides the world’s first production-scale GB300 cluster, the supercomputing engine that OpenAI needs to serve multi-billion parameter models. It sets the new definitive standard in accelerated computing,”

highlighted Ian Buck, Vice President of Hyperscale and High-performance Computing at NVIDIA.

From GB200 to GB300: Azure raises the bar for general-purpose AI

Earlier this year, Azure introduced the ND GB200 v6 virtual machines, based on the NVIDIA Blackwell architecture. These VMs became the backbone of some of the most demanding workloads in the sector; OpenAI and Microsoft had already been using large clusters of GB200 NVL2 in Azure for training and deploying frontier models.

The new generation ND GB300 v6 raises the bar with a design focused on reasoning models, agentic AI, and multimodal generative capabilities. Its rack-scale approach groups 18 VMs together, totaling 72 GPUs and 36 Grace CPUs:

- 72 NVIDIA Blackwell Ultra GPUs (paired with 36 NVIDIA Grace CPUs).

- 800 Gbps per GPU inter-rack bandwidth via NVIDIA Quantum-X800 InfiniBand.

- 130 TB/s of NVLink inside the rack.

- 37 TB of fast memory per rack.

- Up to 1,440 PFLOPS of FP4 Tensor Core performance per rack.

The NVLink + NVSwitch architecture reduces memory and bandwidth bottlenecks within the rack, enabling internal transfers of up to 130 TB/s over 37 TB of high-speed memory. Practically, each rack acts as a tightly coupled unit that boosts inference throughput and reduces latencies even for larger models with extended contexts, which is critical for conversational agents and multimodal systems.

Scaling without blocking: full fat-tree architecture with Quantum-X800 InfiniBand

Beyond individual racks, Azure implements a non-blocking fat-tree topology with NVIDIA Quantum-X800 InfiniBand, the fastest available networking fabric. This design achieves a clear goal: allowing clients to scale training of ultra-large models efficiently to

Less synchronization means higher effective utilization of the GPUs, resulting in faster iterations and lower costs even for compute-heavy training. Azure’s co-designed stack—featuring custom protocols, collective libraries, and in-network computing—aims to maximize network efficiency with reliability and full utilization. Technologies such as NVIDIA SHARP accelerate collective operations by executing computations directly in the switch, practically doubling effective bandwidth and reducing pressure on endpoints.

Data center engineering: cooling, power, and custom software for AI

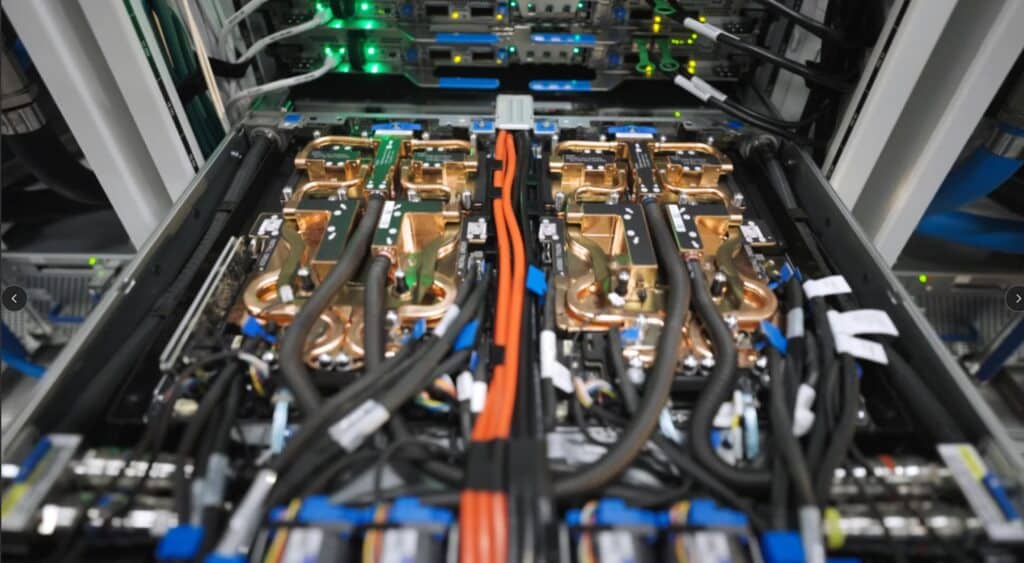

The arrival of GB300 NVL72 is not just a card upgrade—it requires rethinking every layer of the stack—computing, memory, networking, data centers, cooling, and power—as a unified system.

- Advanced cooling. Azure uses independent heat exchanger units combined with plant cooling to minimize water use and maintain thermal stability in dense, high-performance clusters like GB300 NVL72.

- Evolved power distribution. The company continues developing new power distribution models capable of supporting high densities and dynamic load balancing, essential for the ND GB300 v6 class.

- Re-architected software stacks. Storage systems, orchestration, and scheduling have been redesigned to fully leverage compute, network, and storage at supercomputing scale, with high efficiency and sustained performance.

The result is a platform that not only accelerates training but also reduces latencies and increases inference throughput, even with longer contexts and multi-modal inputs (text, vision, audio).

What it means for OpenAI (and the ecosystem)

For OpenAI, having access to the first large-scale production cluster with GB300 on Azure means a supercomputing engine ready for multi-billion parameter models and shorter training cycles. Training and deploying frontier models with longer context windows, deeper reasoning, and more sophisticated agent capabilities require coordinated compute farms where NVLink/NVSwitch and Infiniband keep synchronization and collective traffic under control.

For the rest of Azure’s clients, ND GB300 v6 is shaping up as the new standard for AI infrastructure: where previously a “job” took months, now the goal is to measure in weeks; where large-scale inference involved compromising latency or model size, the promise is to respond faster and with more context. This opens up use cases previously limited by physical constraints: high-context multimodal assistants, agentic AI with extended memory, recommendation systems, generative search with fresher responses and better recall, or simulations integrating language, vision, and structured signals within a unified loop.

Engineering piece: from rack to tens of thousands of GPUs

The rack is the building block: 72 Blackwell Ultra GPUs with NVLink/NVSwitch and 37 TB of fast memory, connected with 130 TB/s bandwidth. This “block” is replicated within the Quantum-X800 InfiniBand fabric, providing 800 Gbps per GPU to scale across racks without bottlenecks (fat-tree architecture). On this foundation, collective libraries and in-network computing mechanisms—like NVIDIA SHARP—operate to lighten load on network traffic via bringing computation into the switch, effectively doubling throughput and reducing endpoint pressure.

The sum is an elastic cluster that maintains high GPU utilization, which is crucial when each percentage point of efficiency can translate into millions of euros over a multi-week training session.

Deployment rooted in years of investment

Microsoft notes that this announcement is the result of years of infrastructure investment. The company has been building AI infrastructure for years, enabling it to rapidly adopt GB300 NVL72 at scale and accelerate its global deployment. As Azure continues to extend these clusters, customers will be able to train and deploy new models in a fraction of the time compared to previous generations.

The commitment remains to publicly share benchmarks and performance metrics as the global rollout of NVIDIA GB300 NVL72 progresses.

Why it matters: four key insights

- Faster training, cheaper iteration. If training time drops from months to weeks, the pace of hypotheses and improvements accelerates. In AI, the iteration time is the multiplier that separates those making progress from those chasing.

- Larger and more useful models. The combination of FP4, NVLink, and Infiniband makes possible models with hundreds of trillions of parameters (multitrillion scale) and longer contexts; this translates to more accurate responses, less fragmentation, and more contextual relevance.

- Inference with fewer compromises. Inference is no longer a lesser cousin to training: tightly coupled racks and optimized collective libraries enable low latency even for large models and extended contexts.

- Ecology and co-engineering. None of this is possible without NVIDIA: Blackwell Ultra, Grace, NVLink/NVSwitch, Quantum-X800, and SHARP are the building blocks; Azure provides the core infrastructure—data centers, power, cooling, planning, and software—to support and scale this evolution.

Technical specs (per ND GB300 v6 rack)

- 72 NVIDIA Blackwell Ultra GPUs + 36 Grace CPUs

- 800 Gbps per GPU intra-rack via InfiniBand Quantum-X800

- 130 TB/s of NVLink (inside rack)

- 37 TB of fast memory

- Up to 1,440 PFLOPS of FP4 Tensor Core performance

Summary

The deployment of the first large-scale cluster with GB300 NVL72 positions Azure as an immediate leader in AI supercomputing for frontier models. If the plan to scale to hundreds of thousands of GPUs unfolds as announced, the industry could normalize training cycles of weeks and accelerate a wave of larger, faster, and more useful models in agents and multimodality. The shift is not incremental; it is systemic: from silicon to data center infrastructure, passing through networking and software stacks.

The close collaboration with NVIDIA acts as both a risk and an advantage: it concentrates cutting-edge technology in a single evolution pathway that, for now, dominates the market. In the short term, for OpenAI and Azure clients, the message is clear: more capacity, faster, and with less operational friction to train and infer at very large scale.