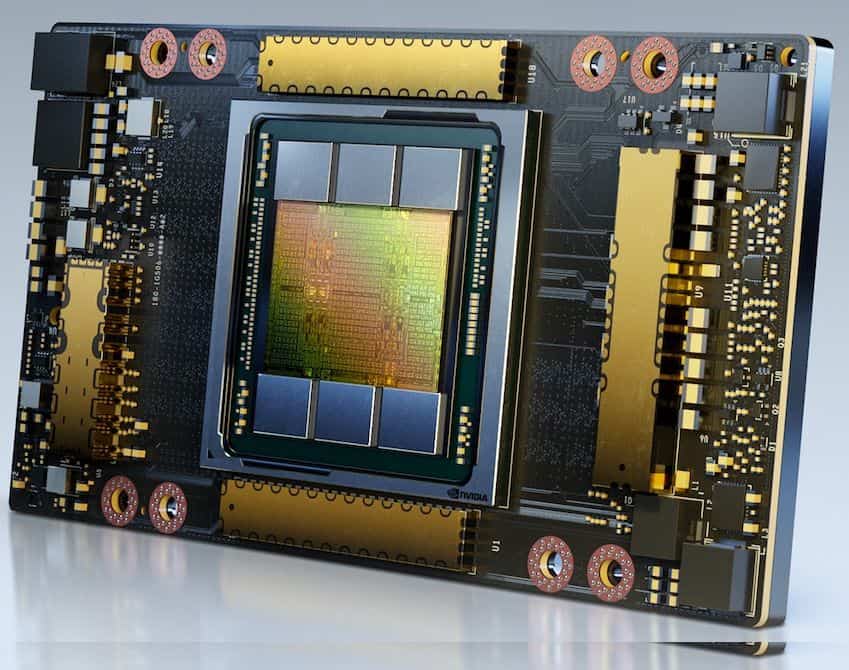

Amazon Web Services (AWS) has publicly acknowledged something many clients have sensed for months: when GPU demand outpaces supply, the tech calendar no longer dictates the rules. According to AWS CEO Matt Garman, the company is still running servers with the Nvidia A100, a GPU introduced in 2020, and has not retired any of them, partly because available capacity still falls short of market demand.

Garman made these remarks at a recent industry event, in a conversation with Jeetu Patel, Cisco’s President and Chief Product Officer. His assessment was blunt: capacity pressure remains so high that even “older” chips still see real demand, to the point where AWS says it is completely sold out of A100-based instances. Beyond the headlines, the message points to a structural shift: cloud capacity is no longer governed solely by refresh cycles, but by utilization and availability.

A sign of the times: hardware isn’t retired if it’s still useful

In the cloud world, “retiring” hardware isn’t a sentimental decision; it’s usually a cold calculation involving operating costs, energy efficiency, performance density, and support considerations. However, artificial intelligence has changed that equation. The surge in training and inference workloads, together with increasingly large models that are expensive to run, means that any GPU still capable of delivering profitable results finds a place.

The A100, even though it belongs to an earlier generation compared to the H100/H200 or the latest architectures, remains a valuable component in many scenarios: from intensive inference and data pipelines to “sufficient” training for teams that don’t need the latest hardware. And when alternatives involve waiting, paying premiums, or redesigning workloads, the practical decision often becomes clear.

Google and its TPUs too: “seven or eight years” at full utilization

AWS’s argument isn’t isolated. Garman compared the situation to what Google has described elsewhere: Amin Vahdat, Google’s Vice President and Head of AI and Infrastructure, has said that the company keeps seven generations of TPUs in production, with hardware that is “seven or eight years old” running at 100% utilization. The key nuance is that GPUs are not the only hardware whose service life is being stretched: proprietary silicon also stays in service when demand outstrips supply and platforms require continuity.

In market terms, this dispels a myth: it’s not always about rushing to the latest chip, but about achieving more useful hours per watt, euro, and rack with existing deployments.

Demand isn’t everything: precision rules in HPC

The most interesting part of Garman’s remarks wasn’t just “we’re still using A100,” but the technical reasons why some clients don’t want to automatically migrate to the latest generation. AWS’s CEO noted that the industry has achieved significant improvements by reducing numerical precision (fewer bits, more apparent performance) in many AI workloads. However, not everything benefits from this trend.

He explained that some users have told AWS they cannot move to newer architectures because they run HPC-style calculations where numerical precision is critical. In that kind of work (simulations, science, engineering, quantitative finance, physical modeling), lower precision isn’t an acceptable “trade-off”; it can introduce outright errors. In other words, the race to accelerate AI with lower precision coexists with a world where accuracy remains non-negotiable.

This clash of needs helps explain why older hardware remains in use. It’s not just that it “works,” but in some cases, it works better for certain segments of the market.
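To make the precision point concrete, here is a minimal sketch (not AWS’s or any customer’s code) that adds 0.1 ten thousand times while rounding the running total to half (FP16), single (FP32), or double (FP64) precision after every addition. It assumes that emulating the formats with Python’s `struct` module is a fair stand-in for hardware arithmetic; real accelerators often accumulate in a wider format, so treat this as an illustration of rounding behavior, not a benchmark of any GPU.

```python
import math
import struct

# IEEE 754 formats available through struct: 'e' = binary16 (FP16),
# 'f' = binary32 (FP32), 'd' = binary64 (FP64, Python's native float).
FORMATS = {"FP16": "e", "FP32": "f", "FP64": "d"}


def round_to(x: float, fmt: str) -> float:
    """Round a Python float to the given IEEE 754 format and back to a float."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


def naive_sum(values, fmt: str) -> float:
    """Add values left to right, rounding the running total to `fmt` after
    every step, as if the accumulator were stored in that format."""
    acc = 0.0
    for v in values:
        acc = round_to(acc + round_to(v, fmt), fmt)
    return acc


def relative_error(fmt: str, n: int = 10_000, value: float = 0.1) -> float:
    """Relative error of a naive `fmt` sum versus an accurate FP64 reference."""
    values = [value] * n
    reference = math.fsum(values)  # accurate FP64 sum via math.fsum
    return abs(naive_sum(values, fmt) - reference) / reference


if __name__ == "__main__":
    for name, fmt in FORMATS.items():
        print(f"{name}: relative error {relative_error(fmt):.2e}")
```

With a naive FP16 accumulator, the total stalls once the gap between representable numbers exceeds 0.2 (adding 0.1 then rounds back to the same value), while FP64 stays within a tiny fraction of the exact result. Techniques such as mixed-precision accumulation or compensated summation narrow the gap, but for precision-sensitive codes, adopting and validating such changes is part of the migration effort.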

Price cuts in 2025: maximizing amortized investment, competing by volume

AWS also offered a notable hint about its commercial strategy. In June 2025, the company announced a 33% reduction in the on-demand price of its Nvidia GPU instances, covering H100- and H200-based instances as well as the A100-based P4d and P4de types.

Beyond the percentage, this move reflects a classic pattern: when a platform has valuable, already amortized capacity, lowering prices can stimulate demand and raise utilization. With the GPU market under strain, this lever makes even more sense: making the “older generation” attractive lets AWS absorb demand spikes without depending entirely on the arrival of new hardware.
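A simplified way to see the break-even logic: if the revenue a fleet earns is the price per GPU-hour $p$ times utilization $u$ times the hours the fleet is available $H$, keeping revenue flat after a 33% cut requires utilization to rise by roughly half. This sketch ignores marginal energy and operating costs, and the 60% starting utilization is a hypothetical figure, not AWS data.

$$
R = p\,u\,H, \qquad p' = (1 - 0.33)\,p = 0.67\,p
$$

$$
p'\,u'\,H = p\,u\,H \;\Longrightarrow\; u' = \frac{u}{0.67} \approx 1.49\,u
$$

$$
u = 0.60 \;\Longrightarrow\; u' \approx 0.90
$$

Because the hardware is already paid for, any utilization gained beyond that threshold adds margin rather than merely recovering the cut.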

Implications for businesses and developers

For customers, the takeaway is less dramatic but more practical: designing AI infrastructure in the cloud no longer just involves choosing the “newest” GPU. It requires evaluating:

- Actual availability (what can be contracted today, not just what’s in the catalog).

- Compatibility and reproducibility (models, drivers, libraries, precision).

- Total cost (price per hour, but also wait times, migration, and redesign efforts).

- Dependency risk (if a certain instance type runs out, is there a backup plan?).
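The criteria above can be turned into a rough comparison. The sketch below is an illustrative assumption, not an AWS tool: the `GpuOption` fields, the per-day delay cost, the option names, and every number in the usage block are hypothetical. It drops options that fail the workload’s own precision validation, prices the rest as compute plus one-off migration plus the cost of waiting for capacity, and treats the runner-up as the backup plan.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class GpuOption:
    """One candidate instance type. All fields are illustrative inputs."""
    name: str
    hourly_price: float      # currency units per instance-hour
    hours_per_job: float     # measured runtime of one workload run here
    wait_days: float         # days until capacity can actually be contracted
    migration_cost: float    # one-off effort to port and revalidate the workload
    meets_precision: bool    # did the workload pass numerical validation here?


def total_cost(opt: GpuOption, jobs: int, delay_cost_per_day: float) -> float:
    """Effective cost = compute + one-off migration + cost of waiting."""
    return (jobs * opt.hours_per_job * opt.hourly_price
            + opt.migration_cost
            + opt.wait_days * delay_cost_per_day)


def rank_options(options: Sequence[GpuOption], jobs: int,
                 delay_cost_per_day: float) -> List[GpuOption]:
    """Return precision-compatible options, cheapest first.
    The second entry, if any, serves as the backup plan."""
    viable = [o for o in options if o.meets_precision]
    return sorted(viable, key=lambda o: total_cost(o, jobs, delay_cost_per_day))


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    options = [
        GpuOption("older-gen", 20.0, 10.0, 0.0, 0.0, True),
        GpuOption("newer-gen", 40.0, 4.0, 30.0, 5_000.0, True),
        GpuOption("low-precision", 30.0, 3.0, 0.0, 2_000.0, False),
    ]
    ranked = rank_options(options, jobs=100, delay_cost_per_day=500.0)
    for opt in ranked:
        print(f"{opt.name}: {total_cost(opt, 100, 500.0):,.0f}")
    backup = ranked[1].name if len(ranked) > 1 else "none"
    print(f"primary: {ranked[0].name}, backup: {backup}")
```

With these made-up inputs the older generation wins (20,000 versus 36,000) even though each job runs 2.5 times faster on the newer option; set the newer option’s wait and migration cost to zero and it drops to 16,000 and ranks first. The value lies in the structure of the comparison, not the figures.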

For cloud providers, the message is equally clear: in the AI era, retiring hardware on a fixed schedule may be a luxury. The priority is keeping capacity operational because demand won’t wait.

FAQs

Why would AWS continue using Nvidia A100 if newer GPUs are available?

Because GPU demand still exceeds supply, and many clients achieve competitive results with the A100, especially when its price and availability compare favorably with newer options.

What workloads benefit from keeping “old” hardware?

Inference, medium-sized training, data processing, and certain workloads where stability, compatibility, or cost are more important than having the latest generation.

Why can’t some clients migrate to newer GPUs?

In HPC-style tasks, numerical precision can be critical. If an architecture or setup prioritizes performance with lower precision, it might not be suitable for sensitive simulations or calculations.

What does the 33% price reduction on A100 instances (P4d/P4de) mean?

AWS is aiming to make that capacity more attractive to maintain high utilization and offer a competitive option when the latest GPUs are scarce or costly.

via: datacenterdynamics