Amazon made it clear at AWS re:Invent 2025 that it doesn’t plan to fall behind in the AI hardware race. The company unveiled its new Trainium3 UltraServers and previewed details of the upcoming Trainium4, two bets that reinforce its strategy: relying less and less on third-party GPUs and building its own scalable compute platform for large AI models.

Trainium3 UltraServers: up to 144 chips and 4.4x more performance

The headline commercial announcement is Amazon EC2 Trn3 UltraServers, integrated systems that pack up to 144 Trainium3 chips into a single “super-server” designed specifically for training and running large AI models. According to AWS, these UltraServers offer:

- Up to 4.4 times more computing performance than the previous generation (Trainium2 UltraServers).

- Approximately 4 times greater energy efficiency.

- Almost 4 times more memory bandwidth, crucial for moving large amounts of data without creating bottlenecks.

In practical terms, AWS says this can cut training times from months to a few weeks, let the same infrastructure serve many more inference requests, and, most importantly, lower the cost per query for services such as chatbots, AI agents, and real-time video and image generation.

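AWS quotes a peak of up to 362 PFLOPS of FP8 compute per fully configured UltraServer, a figure that puts a single system in supercomputer territory, delivered as a cloud service (although FP8 figures are not directly comparable to the FP64 results used in supercomputer rankings such as the TOP500).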
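Dividing the system-level figure by the chip count gives a rough per-chip number. This is simple arithmetic on AWS’s two published figures (362 PFLOPS peak FP8, 144 chips) and assumes the peak is split evenly across chips; peak FLOPS are an upper bound, not sustained throughput on real workloads.

```python
# Back-of-envelope arithmetic on AWS's published Trn3 UltraServer figures.
# Assumption: the 362 PFLOPS peak is spread evenly over 144 chips.
# Real workloads sustain less than peak.

SYSTEM_FP8_PFLOPS = 362.0    # AWS figure: peak FP8 per fully configured UltraServer
CHIPS_PER_ULTRASERVER = 144  # AWS figure: maximum chips per UltraServer


def per_chip_pflops(system_pflops: float, chips: int) -> float:
    """Peak per-chip throughput, assuming an even split across chips."""
    return system_pflops / chips


if __name__ == "__main__":
    per_chip = per_chip_pflops(SYSTEM_FP8_PFLOPS, CHIPS_PER_ULTRASERVER)
    print(f"~{per_chip:.2f} PFLOPS FP8 per Trainium3 chip (peak)")
    print(f"~{SYSTEM_FP8_PFLOPS / 1000:.3f} EFLOPS FP8 per UltraServer (peak)")
```

Under that even-split assumption, each chip accounts for roughly 2.5 PFLOPS of peak FP8 compute.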

Custom networks: NeuronSwitch-v1 and UltraClusters 3.0

The chip is only half the story. The real challenge in AI isn’t just “having lots of FLOPS,” but connecting thousands of chips without the network becoming a bottleneck.

To address this, Amazon has designed dedicated networking infrastructure:

- NeuronSwitch-v1, a new switch that doubles internal bandwidth within each UltraServer.

- An improved Neuron Fabric interconnect that cuts chip-to-chip communication latency to under 10 microseconds, a critical figure for distributed training of giant models.
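Why does sub-10-microsecond latency matter? One common way to reason about it is the textbook alpha-beta cost model for a ring all-reduce, the collective used to combine gradients in data-parallel training. This is an illustration under stated assumptions, not a description of how Neuron Fabric or AWS’s collective libraries actually work: the per-step latency α = 10 µs is the article’s upper bound, while the 144-participant ring and the 100 GB/s per-link bandwidth are hypothetical values chosen only to show the shape of the trade-off.

```python
# Textbook alpha-beta model of a ring all-reduce.
# Illustrative only: NOT AWS's actual collective implementation.


def ring_allreduce_seconds(p: int, n_bytes: float, alpha_s: float,
                           bw_bytes_per_s: float) -> float:
    """Estimated time for a ring all-reduce of n_bytes across p participants.

    The ring runs 2*(p-1) steps (reduce-scatter, then all-gather); each step
    pays a fixed latency alpha_s and moves n_bytes/p bytes over one link.
    """
    latency_term = 2 * (p - 1) * alpha_s
    bandwidth_term = 2 * (p - 1) / p * n_bytes / bw_bytes_per_s
    return latency_term + bandwidth_term


if __name__ == "__main__":
    P = 144        # hypothetical: one ring participant per chip
    ALPHA = 10e-6  # article's upper bound on chip-to-chip latency (10 µs)
    BW = 100e9     # hypothetical per-link bandwidth, bytes/s
    for n in (1e6, 1e8, 1e10):  # 1 MB, 100 MB, 10 GB messages
        total = ring_allreduce_seconds(P, n, ALPHA, BW)
        latency_share = 2 * (P - 1) * ALPHA / total
        print(f"{n / 1e6:>8.0f} MB: {total * 1e3:8.2f} ms, "
              f"latency share {latency_share:.0%}")
```

With these assumptions the fixed latency term (about 2.9 ms) dominates for small messages, while bandwidth dominates for very large ones; shaving per-hop latency therefore pays off most for the many small, frequent exchanges in distributed training.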

Additionally, EC2 UltraClusters 3.0 can link thousands of these UltraServers into clusters of up to 1 million Trainium chips, ten times the scale of the previous generation. This kind of infrastructure underpins so-called “frontier models”: multimodal foundation models with trillions of parameters, trained on datasets of trillions of tokens by only a handful of companies worldwide.
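To get a feel for that scale, the chip ceiling can be converted into UltraServers. This minimal sketch assumes every UltraServer is fully populated with 144 chips (the article does not say how UltraClusters 3.0 are actually composed) and reads “ten times larger” as a tenfold increase in the maximum chip count.

```python
import math

CHIPS_PER_ULTRASERVER = 144     # maximum per Trn3 UltraServer (AWS figure)
MAX_CLUSTER_CHIPS = 1_000_000   # UltraClusters 3.0 ceiling (AWS figure)
GENERATIONAL_SCALE_UP = 10      # "ten times larger than the previous generation"


def ultraservers_needed(total_chips: int, chips_per_server: int) -> int:
    """Smallest number of fully populated servers that holds total_chips."""
    return math.ceil(total_chips / chips_per_server)


if __name__ == "__main__":
    print("UltraServers for 1M chips:",
          ultraservers_needed(MAX_CLUSTER_CHIPS, CHIPS_PER_ULTRASERVER))
    print("Implied previous-generation ceiling:",
          MAX_CLUSTER_CHIPS // GENERATIONAL_SCALE_UP, "chips")
```

That comes to roughly 6,945 fully populated UltraServers, consistent with the “thousands of these UltraServers” described above.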

Real-world results: lower costs and faster speeds

Amazon isn’t stopping at slides. Companies such as Anthropic, Karakuri, Metagenomi, NetoAI, Ricoh, and Splash Music are already using Trainium to train and deploy their models and, according to AWS, are cutting costs by up to 50% compared with GPU-based alternatives.

A notable example is Decart, a generative AI lab focused on real-time video, which reports 4x faster inference at half the cost of traditional GPU solutions when generating video. That opens the door to applications that were previously too expensive: live interactive experiences, real-time simulations, or graphics assistants that respond with imperceptible latency.

Amazon Bedrock, AWS’s managed platform for foundation models, is already serving production workloads on Trainium3, a sign that the chip is not a laboratory experiment but a core part of the company’s commercial offering.

Looking ahead: Trainium4 and the NVIDIA partnership

Far from resting on its laurels, Amazon also previewed Trainium4, its next-generation AI ASIC, which AWS says will deliver, compared with Trainium3:

- At least 6x the performance in FP4 (an ultra-low-precision format aimed mainly at large-scale inference).

- 3x the performance in FP8, a format that is becoming the standard for training large models because it balances precision and efficiency.

- 4x the memory bandwidth, so even larger models can run without starving the chip of data.
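To see why FP4 counts as “ultra-low precision,” it helps to enumerate what such formats can represent. AWS has not said which FP4 and FP8 variants Trainium4 supports; this sketch assumes the E2M1 (FP4) and E4M3 (FP8) encodings from the Open Compute Project’s microscaling (MX) specification, in which E2M1 has no infinities or NaNs and E4M3 reserves only its all-ones bit pattern for NaN. The decoder is a generic illustration, not AWS code.

```python
def minifloat_values(exp_bits: int, man_bits: int, bias: int,
                     reserved=frozenset()) -> set[float]:
    """Non-negative finite values of a tiny IEEE-style float format.

    reserved: (exponent_field, mantissa_field) pairs that encode NaN/Inf
    rather than numbers, so they are skipped.
    """
    values = set()
    for e in range(2 ** exp_bits):
        for m in range(2 ** man_bits):
            if (e, m) in reserved:
                continue
            frac = m / 2 ** man_bits
            if e == 0:  # subnormal: no implicit leading 1
                values.add(frac * 2.0 ** (1 - bias))
            else:       # normal: implicit leading 1
                values.add((1 + frac) * 2.0 ** (e - bias))
    return values


if __name__ == "__main__":
    # Assumed FP4 variant: E2M1 (2 exponent bits, 1 mantissa bit, bias 1).
    fp4 = minifloat_values(exp_bits=2, man_bits=1, bias=1)
    # Assumed FP8 variant: E4M3 (bias 7), NaN at exponent 1111 / mantissa 111.
    fp8 = minifloat_values(exp_bits=4, man_bits=3, bias=7,
                           reserved=frozenset({(15, 7)}))
    print("FP4 E2M1 magnitudes:", sorted(fp4))
    print(f"FP8 E4M3: {len(fp8)} non-negative values, max {max(fp8)}")
```

Under these assumptions an FP4 number can take only eight magnitudes between 0 and 6, which is why low-precision formats like this are typically paired with per-block scale factors in practice, while E4M3 offers 127 non-negative values up to 448.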

The most exciting news is that Trainium4 will support NVIDIA NVLink Fusion and compatible MGX racks, so NVIDIA GPU servers and Trainium servers can be combined within the same high-speed infrastructure. The choice is no longer “NVIDIA or Trainium,” but a heterogeneous environment where each chip handles the work it does best.

Amazon’s strategic bet: less dependency, more control

Amazon’s move should be read in the context of a race in which Google (with its TPUs), Microsoft, Meta, and other tech giants are developing their own AI chips to reduce reliance on third parties, control costs, and tailor hardware to their most critical use cases.

With Trainium3, UltraServers, and the roadmap for Trainium4, AWS sends several clear messages:

- “Silicon-first”: The AI infrastructure of the next decade will be defined as much by custom hardware as by software.

- Relative democratization: These systems will still be used mainly by large companies and cloud providers, but offering them as EC2 services gives more companies access to capabilities that were previously reserved for a few giants.

- Total optimization: It’s no longer enough to have many chips; every watt and every bit of bandwidth must be optimized to keep costs from skyrocketing as models grow.

What does this mean for businesses and developers?

For most companies, this translates into very concrete benefits:

- Faster training: reducing training times from months to weeks enables more iterations, testing more models, and getting to market faster.

- Cheaper inference: if the cost per request decreases, more AI assistants, agents, and services can be deployed to more users without breaking the bank.

- Greater architectural options: combining Trainium with NVIDIA GPUs in a high-speed network allows for hybrid infrastructures tailored to each use case (training on one platform, inference on another, etc.).

- Less de facto GPU lock-in: as real alternatives for training and inference emerge, customers gain more room to negotiate prices and architectures.
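The “cheaper inference” point comes down to simple arithmetic: cost per token is the hourly price of the hardware divided by the number of tokens it serves per hour. The prices and throughputs below are hypothetical placeholders, not AWS pricing or Trainium benchmarks; the sketch also assumes the instance is kept fully busy. The point is only that a cheaper instance and a faster one both lower the same ratio.

```python
SECONDS_PER_HOUR = 3600


def cost_per_million_tokens(price_per_hour: float, tokens_per_second: float) -> float:
    """Dollars per 1M generated tokens, assuming the instance is fully utilized."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return price_per_hour / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical baseline instance: $36/hour serving 10,000 tokens/s.
    baseline = cost_per_million_tokens(36.0, 10_000)
    # Same throughput at half the hourly price.
    cheaper = cost_per_million_tokens(18.0, 10_000)
    # Same hourly price with twice the throughput.
    faster = cost_per_million_tokens(36.0, 20_000)
    print(f"baseline ${baseline:.2f}, half price ${cheaper:.2f}, "
          f"2x throughput ${faster:.2f} per 1M tokens")
```

Halving the hourly price or doubling throughput each halves the cost per token in this model, and the two effects multiply when they occur together.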

It’s not an immediate revolution for the end user, but it’s a crucial piece of the “invisible side” of AI: the data center hardware that makes it possible for a chatbot to respond in seconds, a video to be generated in real-time, or an intelligent agent to make decisions in milliseconds.

In the AI compute race, Amazon has made it clear that it is serious. And if Trainium3, its UltraServers, and the aggressive plans for Trainium4 prove anything, it’s that the next battle won’t just be about the best model, but about who has the fastest, most efficient, and most flexible infrastructure to run it at planetary scale.

Frequently Asked Questions about Amazon’s Trainium3 and Trainium4

What exactly is an AWS Trainium3 UltraServer?

It’s an AWS integrated system that combines up to 144 Trainium3 chips into a single logical server, designed specifically to train and run large AI models. It offers up to 4.4x more compute performance and 4x better energy efficiency than the previous generation (Trainium2 UltraServers).

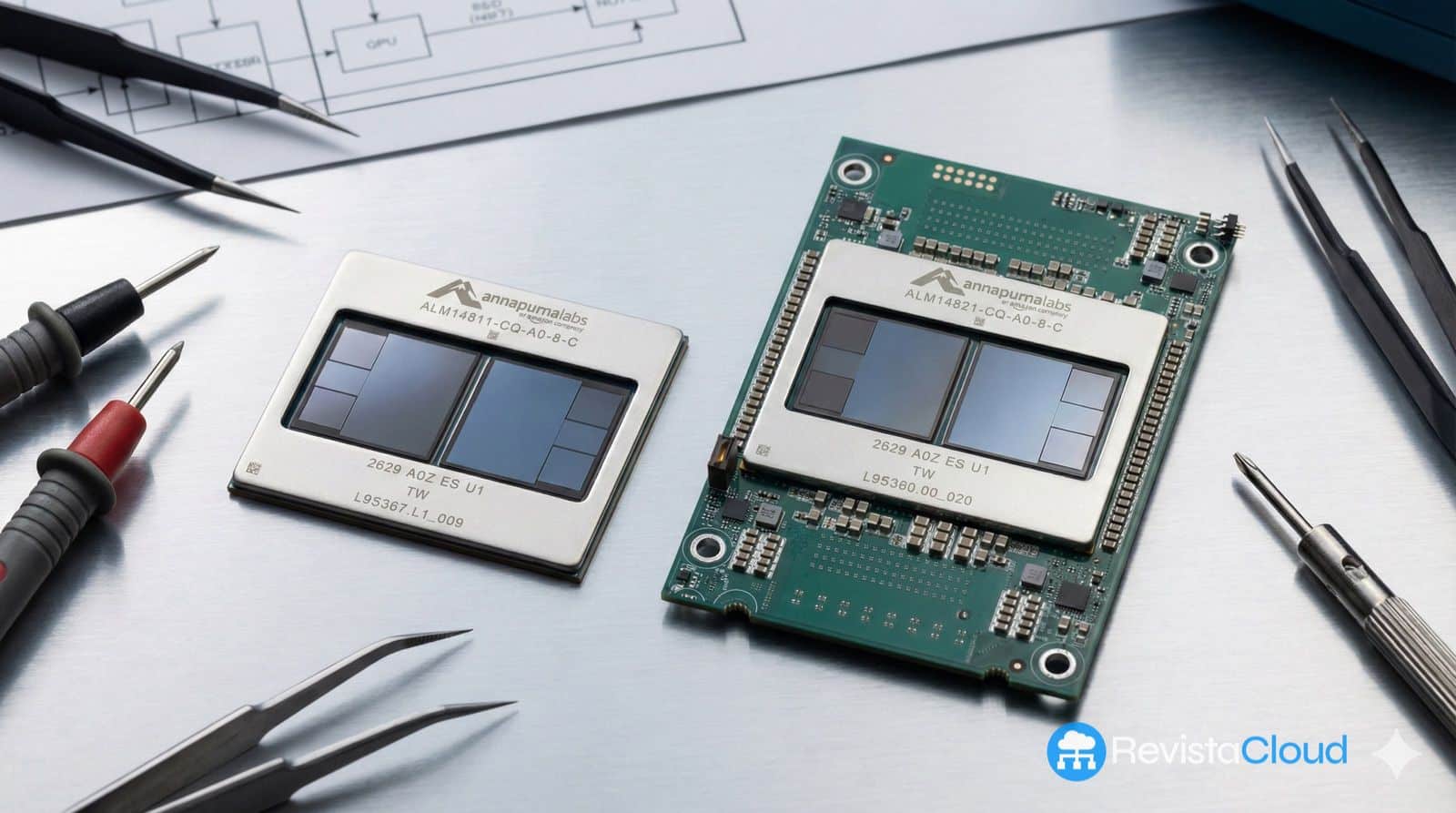

How does Trainium differ from traditional AI GPUs?

Trainium is an ASIC (application-specific integrated circuit) optimized for the operations that dominate AI workloads, whereas GPUs are more general-purpose processors. In principle, Trainium can deliver better performance per watt and a lower cost per token for both training and inference, but it’s less versatile for other workloads.

Can companies use Trainium if they already have NVIDIA GPU infrastructure?

Yes. AWS customers can already run GPU and Trainium instances side by side, and Trainium4 is being designed to support NVLink Fusion and MGX racks, which should make it easier to integrate both into a single high-performance architecture.

Is Trainium relevant for small and medium businesses, or only big tech?

While the design is clearly aimed at very large models and massive workloads, offering it as an AWS service means any company or startup can access this infrastructure on demand. It significantly lowers the entry barrier to compute capabilities that once only a few giants could afford to build in-house.

via: About Amazon