Elon Musk has once again floated an ambitious and controversial idea: that Tesla should build its own mega-chip manufacturing facility, informally dubbed “Terafab.” The argument is straightforward: if the company truly wants to scale its autonomous driving and robotics AI plans, designing chips isn’t enough. Volume is required, and that, according to Musk, means taking control of production that currently depends on third parties.

The most striking part isn’t the name (very much in the style of “Gigafactory”), but the industrial proposal: a “homegrown” facility that integrates logic, memory, and packaging under one roof. In other words, the facility would not only manufacture the chip but also address two increasingly critical bottlenecks in AI: memory (capacity and bandwidth) and advanced packaging (density, thermal management, and efficiency).

An almost science-fiction scale… but with numbers on the table

In his proposal, Musk described a unit capable of processing 100,000 wafers per month, and mentioned a “complex” of up to ten similar facilities. Translated into industry language, this would mean building something that’s not just “another factory,” but a national-scale infrastructure project involving talent, equipment, suppliers, and geopolitics.
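To put the quoted figures side by side, here is a minimal arithmetic sketch in Python. It uses only the two numbers cited above (100,000 wafers per month per facility, up to ten facilities) and assumes, purely for illustration, that every facility runs at the full quoted rate all year. The function and variable names are hypothetical, and nothing in the source says such utilization is planned or achievable.

```python
# Figures quoted in the article.
WAFERS_PER_MONTH_PER_FAB = 100_000
FABS_IN_COMPLEX = 10  # "up to ten similar facilities"


def complex_wafer_output(wafers_per_month_per_fab: int, fab_count: int) -> dict[str, int]:
    """Return aggregate wafer output for a complex of identical fabs.

    Assumption (not from the source): every fab runs at the full
    quoted rate for all twelve months of the year.
    """
    monthly = wafers_per_month_per_fab * fab_count
    return {"monthly": monthly, "yearly": monthly * 12}


totals = complex_wafer_output(WAFERS_PER_MONTH_PER_FAB, FABS_IN_COMPLEX)
print(f"Complex, per month: {totals['monthly']:,} wafers")
print(f"Complex, per year:  {totals['yearly']:,} wafers")
```

Under that assumption, the full complex would handle 1,000,000 wafers per month, or 12,000,000 per year, which is why the proposal reads as infrastructure rather than as a single plant.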

The reason for this scale is volume: Tesla isn’t just competing on performance but on quantity. When the final product includes fleets of vehicles, training and inference for autonomous software, and humanoid robots, the chip stops being just a component and becomes a limit on growth.

Real plan or strategic message?

Nonetheless, the market perceives “Terafab” more as a signal than a fixed timetable. On one hand, Tesla already operates a dual-supplier strategy for its upcoming chips (AI5/AI6), relying on Samsung and TSMC to mitigate capacity, yield, and cost risks.

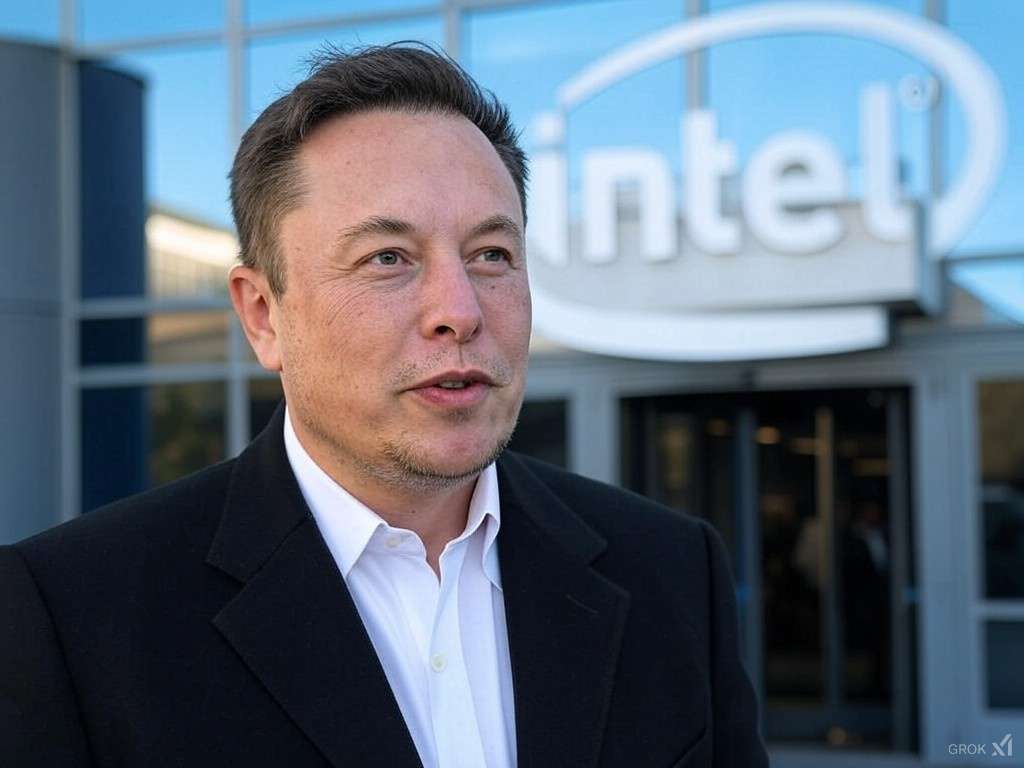

On the other hand, Musk has hinted at teaming up with an industrial partner: he explicitly mentioned that Intel could be involved, which aligns with Intel’s current efforts to attract major clients for its foundry business.

The most pragmatic interpretation is that Tesla is applying pressure in two directions simultaneously:

- Short/medium-term: securing supply with existing large manufacturers.

- Long-term: making it clear that if bottlenecks persist, Tesla might integrate its supply chain further, possibly even manufacturing its own chips.

The elephant in the room: capital and execution

Building semiconductor capacity isn’t just about CAPEX; it’s about coordinating an entire ecosystem. Moreover, this comes at a time when many tech companies are already increasing infrastructure spending due to the AI boom. In this context, even Tesla’s “normal” investment figures are climbing: Bloomberg, in its earnings coverage and forecasts, projected CAPEX of at least $20 billion in 2026 (though that doesn’t automatically mean funding a Terafab).

In other words, a full Terafab, if it ever materializes, would be a multi-year project with a direct impact on margins and strategic priorities, likely requiring partnerships, incentives, and large-scale purchase commitments.

The new battleground: factory footprint as strategic leverage

Whether or not Tesla builds the Terafab, the message aligns with an emerging trend: the AI race is no longer decided solely by GPUs or software. It is also decided by fab capacity, memory, and packaging, and by who can guarantee supply continuity when competition heats up.

Frequently Asked Questions

What exactly is a “Terafab”?

Musk’s idea points to a mega-facility capable of integrating chip manufacturing (logic), memory, and advanced packaging for mass-scale production.

Can Tesla manufacture chips without relying on TSMC or Samsung?

Not today. Tesla currently depends on external foundries, and Musk has confirmed a dual-supplier strategy (Samsung and TSMC) for upcoming chips like AI5/AI6.

Why include “memory and packaging” in the same project?

Because in modern AI, actual performance depends not only on the chip itself but also on memory (capacity/bandwidth) and packaging (interconnection, density, thermal efficiency), which are growing bottlenecks.

What role would Intel play in all this?

Musk mentioned the possibility of partnering with Intel, which would fit Intel’s efforts to attract major customers to its foundry division.

via: wccftech