In the AI market, the race is no longer just about who trains the best model, but about who secures the infrastructure to run it. Nvidia is once again changing the game with a product whose power and cost capture the sector’s current moment: its new VR200 NVL72 rack, part of the Vera Rubin platform, could be priced at around NT$180 million, or approximately $5.7–6.0 million USD, according to supply chain estimates in Taiwan.

The figure, “painful” even by corporate standards, has quickly raised eyebrows. At the same time, it also serves as a reminder of a recurring rule in every technological cycle: when a provider has something that others cannot match (or cannot deliver on time), price ceases to be just a number and becomes a tool of power.

A rack that is not sold as “hardware,” but as concentrated computing capacity

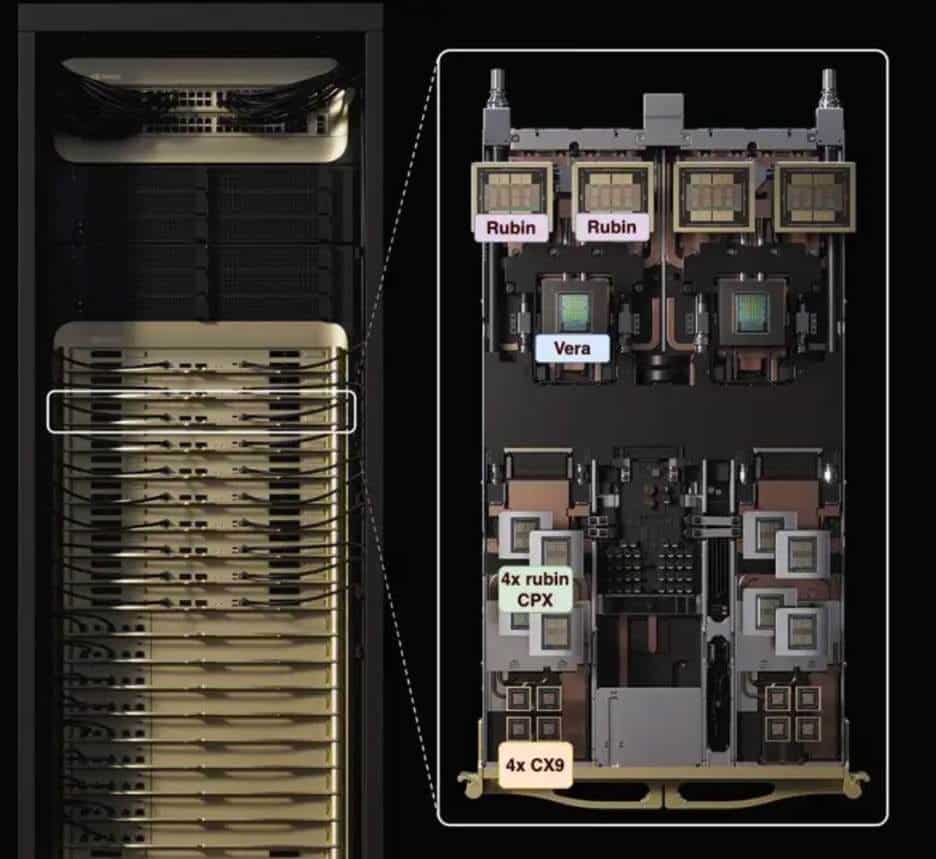

The VR200 NVL72 is not an ordinary server or a collection of cards; it’s a rack-scale system designed to be deployed as a single computing unit within large AI clusters. In practice, it’s the “building block” for pods and for training and inference farms of increasingly extreme density.

According to available technical data, this rack integrates 72 Rubin GPUs and 36 Vera CPUs, along with an interconnect and switching stack designed to make the whole rack behave as a single high-performance acceleration domain. In numbers, the system operates in a range that until recently was almost exclusive to supercomputing: the figure cited is 3.6 EFLOPS of inference in NVFP4 format, a significant jump over previous generations.
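To put the rack-level figure in perspective, it can be divided across the components listed above. This is only a back-of-the-envelope sketch: it assumes the 3.6 EFLOPS figure is spread evenly over the 72 GPUs, and the variable names are illustrative.

```python
# Figures quoted in the text for the VR200 NVL72 rack.
RACK_NVFP4_EFLOPS = 3.6   # rack-level NVFP4 inference figure
GPUS_PER_RACK = 72        # Rubin GPUs
CPUS_PER_RACK = 36        # Vera CPUs

PFLOPS_PER_EFLOPS = 1000  # 1 EFLOPS = 1000 PFLOPS

# Assumption: throughput is spread evenly across the GPUs in the rack.
per_gpu_pflops = RACK_NVFP4_EFLOPS * PFLOPS_PER_EFLOPS / GPUS_PER_RACK
gpus_per_cpu = GPUS_PER_RACK / CPUS_PER_RACK

print(f"NVFP4 inference per GPU: {per_gpu_pflops:.0f} PFLOPS")
print(f"GPUs per CPU: {gpus_per_cpu:.0f}")
```

Under that even-split assumption, the quoted numbers work out to roughly 50 PFLOPS of NVFP4 inference per GPU, with two GPUs for every CPU.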

The leap is not only in computing power. It also encompasses memory and power supply. The rack is associated with HBM4 memory in massive volumes, and features a design that requires liquid cooling and an electrical infrastructure that, in real-world environments, demands resizing parts of the data center.

From $2.9 million to $6.0 million: the price escalation in the “rack-scale” era

The leaked price of the VR200 does not stand alone. The market has already accepted that serious AI systems cost millions per rack. In Taiwan, estimates put the previous GB200 NVL72 at around $2.9 million, while the GB300 moved toward $4.0 million. Now, with a more complex VR200 that brings higher integration and greater thermal and electrical demands, the benchmark appears to shift toward $5.7–6.0 million.
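The size of each step is easier to judge as a percentage and as a per-GPU share. The sketch below uses only the estimates quoted above; it assumes 72 GPUs per rack for all three systems (read off the "NVL72" name), and the per-GPU number is simply the rack price divided by 72, so it also covers CPUs, networking, and cooling rather than being a GPU price.

```python
# Rack price estimates from the text, in millions of USD, as (low, high).
estimates_musd = {
    "GB200 NVL72": (2.9, 2.9),
    "GB300 NVL72": (4.0, 4.0),
    "VR200 NVL72": (5.7, 6.0),
}
GPUS_PER_RACK = 72  # assumption: taken from the "NVL72" name for all three


def pct_increase(old, new):
    """Percentage increase going from old to new."""
    return (new - old) / old * 100


def per_gpu_usd(price_musd):
    """Whole-rack price divided evenly across the GPUs, in USD."""
    return price_musd * 1_000_000 / GPUS_PER_RACK


names = list(estimates_musd)
for prev, cur in zip(names, names[1:]):
    prev_low, prev_high = estimates_musd[prev]
    cur_low, cur_high = estimates_musd[cur]
    low = pct_increase(prev_high, cur_low)   # most conservative step
    high = pct_increase(prev_low, cur_high)  # largest step
    print(f"{prev} -> {cur}: +{low:.1f}% to +{high:.1f}%")

for name, (low, high) in estimates_musd.items():
    print(f"{name}: ${per_gpu_usd(low):,.0f} to ${per_gpu_usd(high):,.0f} per GPU slot")
```

On these estimates, the GB200 to GB300 step is about 38%, and the GB300 to VR200 step is between roughly 42% and 50%, which puts the per-GPU share of a VR200 rack at around $80,000.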

This data point is significant because it reflects not just the cost of the hardware but also the cost of access to capacity. In a market where demand for AI compute continues to grow and the supply of critical inputs (HBM memory, advanced packaging, networking, and available power) doesn’t always keep pace, price becomes a form of rationing. Those able to pay move to the front of the line.

The “infrastructure” factor: power that forces data center redesigns

The other side of the VR200 story is that buying a rack isn’t just about the hardware; it also means committing to overhaul the data center so that the rack can actually be installed and run. Sector analyses indicate that the VR200 NVL72 has higher power requirements, which call for changes to electrical architecture and distribution, with liquid cooling at the core of the design.

In systems like this, the question isn’t whether the server needs more fans; it’s whether the building can support the jump in density. In this context, the multimillion-dollar figure per rack reads differently: for a major purchaser, the difference between having capacity today and having it in six months could be worth more than the cost of the cabinet itself.

“If you have something others don’t, you set the price”: the power of pricing control in AI

The industry saying, “if you have something others don’t, you set the price,” summarizes a dynamic Nvidia has mastered. The company doesn’t just sell chips; it is driving the market toward integrated solutions where the customer buys a complete block: compute, networking, memory, cooling, system design, and validation.

This level of integration has a direct effect: it shifts part of the value from server OEMs or integrators toward those controlling the core technology. From a business perspective, it’s a way to capture more margin and, at the same time, turn the offering into a “scarce product” with high entry barriers.

The VR200 also aligns with another trend: acquiring AI infrastructure as a strategic asset, not just for experiments. In 2026, the customers weighing racks that cost $5.7–6.0 million are unlikely to be SMEs or modest labs; they are hyperscalers, large platforms, governments, defense agencies, or companies competing to train frontier models or deploy inference at scale.

The uncomfortable question: how much actual demand exists for a $6 million rack?

The big uncertainty isn’t whether the VR200 is expensive (it is), but how many units the market can sustainably absorb. Taiwanese supply chain sources differ on expected shipment volumes, precisely because the price jump and the infrastructure demands shrink the pool of potential buyers.

Still, the sector’s logic pushes in one direction: as long as compute remains the bottleneck for advanced AI, systems that boost performance and simplify deployment will continue to find demand. In this context, the VR200 isn’t sold as a “rack” but as time: time to train earlier, serve inference with lower latency, and launch products before competitors.

And in the real AI economy, the market shows there are clients willing to pay.

Frequently Asked Questions

What is the estimated price of the Nvidia VR200 NVL72 rack?

Market estimates in Taiwan place the VR200 around NT$180 million, approximately $5.7–$6.0 million USD, though Nvidia has not officially published a price.
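As a sanity check on the conversion, the quoted NT$ and USD figures imply a range of exchange rates. The sketch below only restates the numbers above; no actual market rate is assumed, and the variable names are illustrative.

```python
# Figures quoted in the text.
NTD_ESTIMATE = 180_000_000            # NT$180 million
USD_LOW, USD_HIGH = 5_700_000, 6_000_000

# Exchange rate (NT$ per USD) implied by each end of the USD range.
rate_at_low = NTD_ESTIMATE / USD_LOW    # rate that yields the $5.7M figure
rate_at_high = NTD_ESTIMATE / USD_HIGH  # rate that yields the $6.0M figure

print(f"Implied rate: NT${rate_at_high:.2f} to NT${rate_at_low:.2f} per USD")
```

In other words, the USD range corresponds to converting the same NT$180 million estimate at anywhere between about NT$30 and NT$31.6 per dollar.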

What distinguishes the VR200 NVL72 from previous racks like GB200 or GB300?

Built on the Vera Rubin platform, it raises both system integration and power, with 72 Rubin GPUs and 36 Vera CPUs, along with improvements in interconnect, memory, and design for large-scale operation.

What data center requirements does an AI rack like the VR200 impose?

Such systems typically require liquid cooling, high energy density, and adaptations to the data center’s electrical infrastructure. The challenge isn’t just acquiring the rack but also powering and cooling it reliably.

Why can Nvidia set such high prices for AI infrastructure?

Because AI compute demand often exceeds available capacity, and Nvidia maintains a dominant position in acceleration and integrated systems. When supply is limited and performance offers a competitive edge, prices remain high.