The dominant narrative around AI infrastructure often centers on GPUs, interconnects, and data centers. However, a recent TrendForce report shifts the focus to a component that quietly influences costs, performance, and capacity planning: memory. According to its projections, the evolution of AI architectures, increasingly geared toward sustained inference, large data volumes, and random access, is pushing the combined DRAM and NAND flash market toward a historic peak in 2027, with growth rates reminiscent of a “second wave” of the AI boom, but this time with memory taking center stage.

A leap in scale: from sector cycle to AI’s “core infrastructure”

TrendForce describes a structural shift: as the volume of data to be queried and moved grows, AI systems rely more heavily on high-bandwidth, large-capacity, low-latency DRAM to process model parameters, run long-duration inference, and support task parallelism. Meanwhile, NAND flash is shifting from plain “storage” to a critical element for fast data transfer within infrastructure environments, which elevates its role in AI stacks and at cloud service providers (CSPs).

The implicit message is clear: if AI is industrializing computation, memory is industrializing data access. When data access becomes the bottleneck, pressure shifts to prices and availability.

The figures behind the hype: 2026 and 2027 as pivotal years

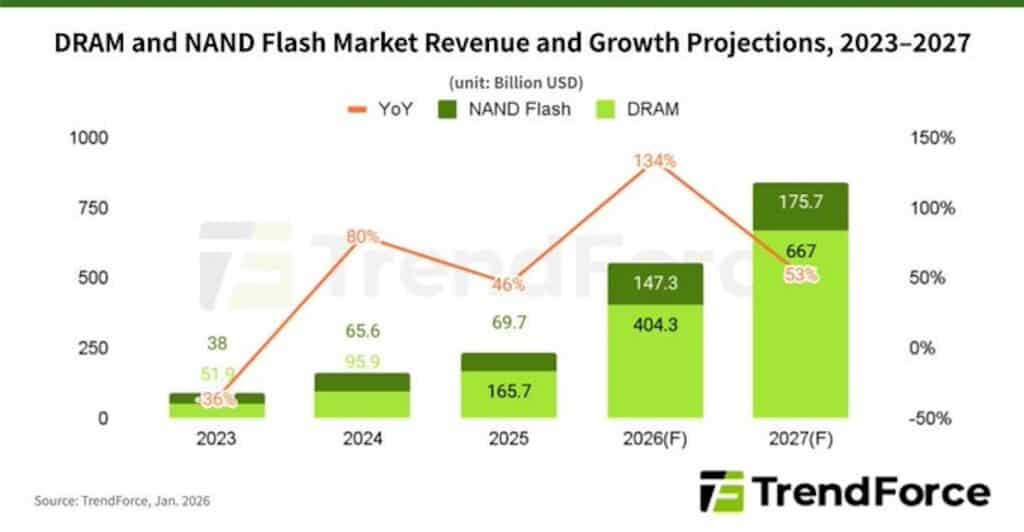

TrendForce projects the total memory market (DRAM + NAND) reaching $551.6 billion in 2026 and a new peak of $842.7 billion in 2027, which implies year-over-year growth of 53% in 2027. The acceleration won’t be linear: the 2026 forecast builds on a significant rebound that started in 2025 and on sustained demand linked to AI and enterprise infrastructure.

To understand the internal distribution—and why DRAM dominates the curve—here are the main figures from TrendForce’s scenario:

| Year | DRAM (USD billions) | NAND (USD billions) | Total (USD billions) | YoY growth (total) |
|---|---|---|---|---|
| 2025 | 165.7 | 69.7 | 235.4 | 46% |
| 2026 (forecast) | 404.3 | 147.3 | 551.6 | 134% |
| 2027 (forecast) | 667.0 | 175.7 | 842.7 | 53% |

The snapshot suggests that the significant jump in 2026 will largely be driven by DRAM, with NAND also rising substantially but less explosively in absolute value.
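The growth rates and the DRAM-heavy distribution can be checked directly from the table. The following is a minimal sketch that uses only the TrendForce figures quoted above (in USD billions); the function names are illustrative and not part of any TrendForce tooling.

```python
# Figures in USD billions, copied from the TrendForce scenario in the table.
market = {
    2025: {"dram": 165.7, "nand": 69.7},
    2026: {"dram": 404.3, "nand": 147.3},
    2027: {"dram": 667.0, "nand": 175.7},
}


def total(year):
    """Combined DRAM + NAND market for a given year."""
    return market[year]["dram"] + market[year]["nand"]


def yoy_growth_pct(year):
    """Year-over-year growth of the combined market, in percent."""
    previous = total(year - 1)
    return (total(year) - previous) / previous * 100


def dram_share_pct(year):
    """DRAM as a percentage of the combined market."""
    return market[year]["dram"] / total(year) * 100


def dram_share_of_increase_pct(year):
    """Percentage of the year's absolute increase that comes from DRAM."""
    dram_delta = market[year]["dram"] - market[year - 1]["dram"]
    return dram_delta / (total(year) - total(year - 1)) * 100


for year in (2026, 2027):
    print(
        f"{year}: total {total(year):.1f}, "
        f"YoY {yoy_growth_pct(year):.0f}%, "
        f"DRAM share {dram_share_pct(year):.1f}%, "
        f"DRAM share of increase {dram_share_of_increase_pct(year):.1f}%"
    )
```

Under these figures, DRAM accounts for roughly three quarters of the absolute increase in 2026, which is what the table's 134% headline growth mostly reflects.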

Rising prices: when capacity falls short and demand stays strong

The report doesn’t just forecast revenue; it also describes an environment where supply remains tight and vendors retain pricing power. In DRAM, TrendForce notes that quarterly increases of around 35% were historically common, but the fourth quarter of 2025 brought a much sharper jump of 53%–58%, driven by DDR5 demand.

Most relevant for procurement and budgeting: TrendForce expects this pressure to continue into 2026. Its forecast suggests that prices could rise by more than 60% in the first quarter of 2026, with some products nearly doubling, as CSP demand stays high despite already elevated levels of memory consumption.

In NAND flash, a similar pattern is projected: TrendForce anticipates a quarterly increase of 55%–60% in the first quarter and expects an extended upward trend throughout the year, fueled by needs for high-performance storage in AI workloads.
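Consecutive quarterly increases compound rather than add, which matters for budgeting. The sketch below chains the DRAM figures quoted above: the 53%–58% jump in the prior quarter followed by a first-quarter increase. It assumes that both percentages apply to the same price basis and it uses exactly 60% for the first quarter, although the report says "over 60%"; both are simplifying assumptions, and the function name is illustrative.

```python
def cumulative_increase_pct(quarterly_increases_pct):
    """Compound a sequence of quarter-over-quarter increases (in percent)."""
    factor = 1.0
    for pct in quarterly_increases_pct:
        factor *= 1 + pct / 100
    return (factor - 1) * 100


# Prior-quarter range (53%-58%) followed by an assumed 60% first-quarter rise.
dram_low = cumulative_increase_pct([53, 60])
dram_high = cumulative_increase_pct([58, 60])

print(f"DRAM over two quarters: +{dram_low:.1f}% to +{dram_high:.1f}%")
```

Under these assumptions, the cumulative change over two quarters is well above the sum of the two individual percentages, so a budget built on a single-quarter figure would understate the increase.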

Why this time it’s not just a “price cycle”: architecture shifts

TrendForce links this phenomenon to the evolution of AI usage itself. In early stages, much of the expenditure was on large-scale training; now, the market shifts toward systems that combine inference, memory, and decision-making, which structurally raises demands for capacity, bandwidth, and access efficiency.

This nuance is critical: when the bottleneck shifts from raw compute to data movement and access, memory stops being a “complement” to accelerators and begins to behave as a strategic resource.

Practical implications: budgets, TCO, and sourcing strategies

Operationally, the 2026–2027 scenario suggests three main implications for organizations deploying AI (or competing for hardware in the same market):

- Cost pressure on platforms: if DRAM and NAND capture a larger portion of each server’s incremental cost, overall TCO can rise even as compute costs per “unit” improve.

- Availability risks: just-in-time purchase cycles suffer when the market enters allocation mode, making medium-term contracts more critical.

- Mandatory optimization: the debate shifts from “How much AI do I add?” to “How do I design workloads and data to avoid overspending on memory?”
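The first implication, that total cost can rise even while compute gets cheaper, can be illustrated with a toy server cost breakdown. Every input below is hypothetical: the baseline split, the 10% compute price drop, and the memory price increases are chosen only to show the mechanism and do not come from TrendForce. The sketch covers hardware cost only, not a full TCO model.

```python
# All inputs are hypothetical and chosen only to illustrate the mechanism.
baseline_cost = {"compute": 70.0, "dram": 20.0, "nand": 10.0}  # arbitrary units per server
price_change_pct = {"compute": -10.0, "dram": 60.0, "nand": 55.0}


def reprice(costs, changes_pct):
    """Apply a percentage price change to each cost component."""
    return {name: cost * (1 + changes_pct[name] / 100) for name, cost in costs.items()}


def memory_share_pct(costs):
    """DRAM + NAND as a percentage of the total hardware cost."""
    return (costs["dram"] + costs["nand"]) / sum(costs.values()) * 100


repriced_cost = reprice(baseline_cost, price_change_pct)
total_change_pct = (sum(repriced_cost.values()) / sum(baseline_cost.values()) - 1) * 100

print(f"Total hardware cost change: {total_change_pct:+.1f}%")
print(f"Memory share: {memory_share_pct(baseline_cost):.1f}% -> {memory_share_pct(repriced_cost):.1f}%")
```

With these made-up inputs, the cheaper compute does not offset the memory increase, and memory takes a visibly larger share of the per-server cost. Replacing the dictionaries with an organization's own bill of materials gives a quick sensitivity check.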

Frequently Asked Questions

What does it mean that memory is the “bottleneck” in AI infrastructure?

It means that performance and cost don’t depend solely on GPUs/CPUs: the availability and price of DRAM and NAND can limit how much capacity is deployed and at what cost, especially for inference and data-intensive workloads.

According to TrendForce, which market segment will grow more: DRAM or NAND?

In absolute value, DRAM will drive the 2026–2027 leap, with projections of $404.3 billion in 2026 and $667 billion in 2027, versus $147.3 billion and $175.7 billion for NAND, respectively.

Why does TrendForce associate the DRAM surge with DDR5?

Because it sees strong demand pressure on DRAM linked to DDR5, with quarterly increases of 53%–58% in the fourth quarter of 2025, well above the roughly 35% it describes as historically common.

What should companies monitor in 2026 if these forecasts hold?

Mainly: memory budgets, procurement strategies (contracts/planning), and data architecture (to reduce accesses and redundancies). TrendForce predicts aggressive price increases early in 2026 for both DRAM and NAND.