CoreWeave has set a date, or at least a tentative window, for its next leap in AI infrastructure: the company announced that it will add the NVIDIA Rubin platform to its cloud for training and inference, and expects to be among the first providers to deploy it in the second half of 2026. The move is not just about “having the latest GPU.” It reflects a more interesting shift for technical teams: how to operate a generation that pushes complexity across the entire rack, with power, cooling, and networking requirements that can no longer be managed as “standalone servers.”

CoreWeave’s thesis is clear: as models evolve toward reasoning and agentic use cases (systems that plan, act, and chain tasks), value is measured not just in TFLOPs, but in operational consistency, observability, and the ability to deploy systems frictionlessly. Against this backdrop, the announcement is accompanied by two features especially relevant to system administrators and platform teams: CoreWeave Mission Control and an approach to rack orchestration as a programmable entity.

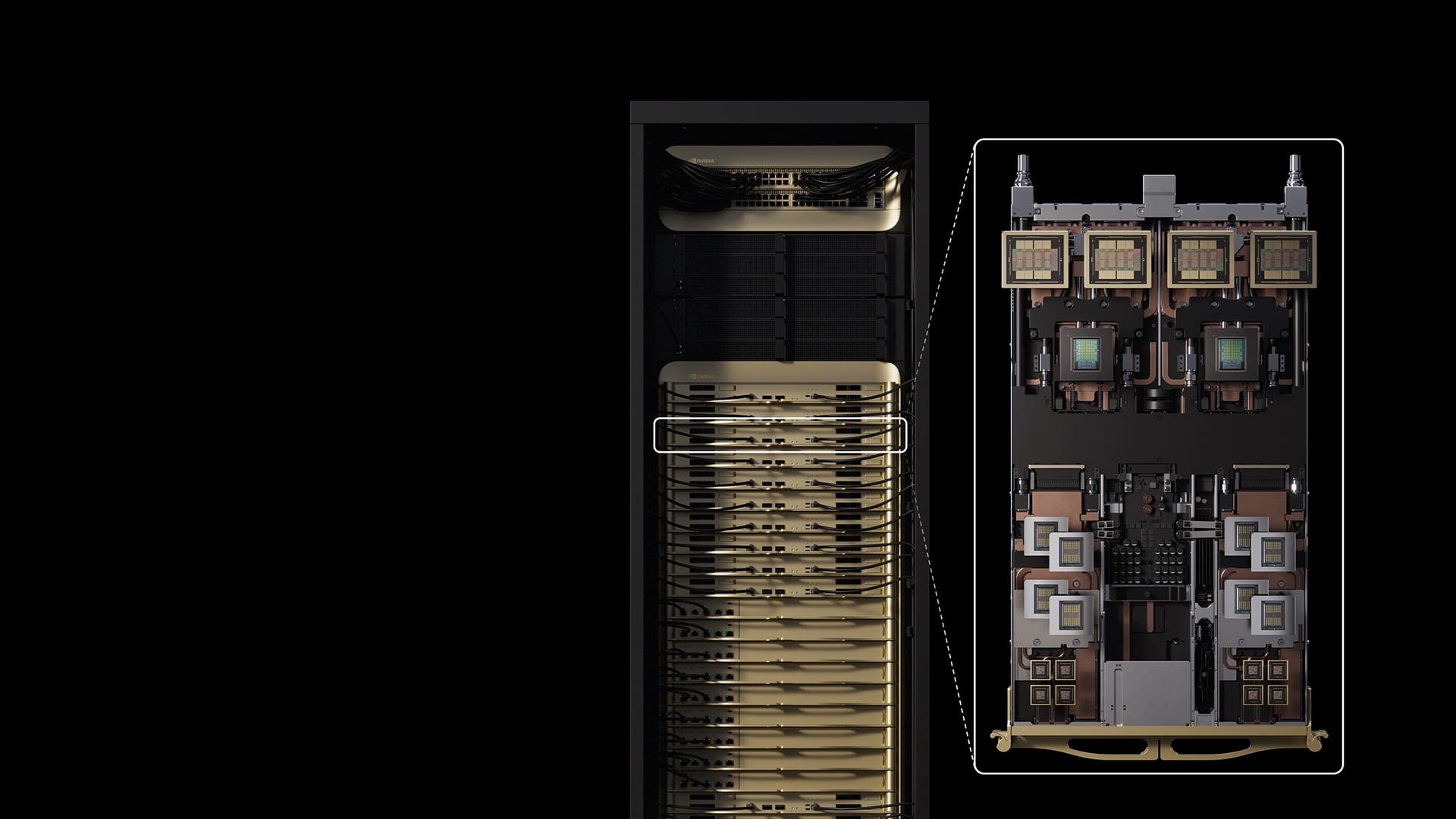

From “GPU Cluster” to Rack as an Operational Unit

In practice, large-scale AI deployments have been forcing a rethink of “day 2” operations: provisioning, validation, diagnostics, maintenance, component replacement, and fine-grained hardware status monitoring before accepting critical workloads. What is new in CoreWeave’s approach is the explicit recognition that, with platforms like Rubin, the entire rack becomes the smallest “real” unit to manage.

According to the announcement, CoreWeave will deploy Rubin supported by Mission Control, defined as an operational standard for training, inference, and agentic workloads that integrates a layer of security, expert operations, and observability. Additionally, the company describes a Rack Lifecycle Controller, a Kubernetes-native orchestrator that treats an NVL72 rack as a programmable “object”: it coordinates provisioning, power operations, and hardware validation to ensure the rack is ready before it serves customer workloads.
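The idea of a rack as a programmable object that must pass provisioning, power-on, and validation before it serves workloads can be illustrated with a small reconcile loop. This is a minimal sketch, not CoreWeave's implementation: the `Rack` type, the phase names, and the check names (`nvlink`, `cooling`) are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    PENDING = "Pending"
    PROVISIONED = "Provisioned"
    POWERED_ON = "PoweredOn"
    VALIDATED = "Validated"
    READY = "Ready"
    FAILED = "Failed"


@dataclass
class Rack:
    """Hypothetical rack object; field names are illustrative only."""
    name: str
    phase: Phase = Phase.PENDING
    # Results of hardware checks, e.g. {"nvlink": True, "cooling": True}.
    checks: dict = field(default_factory=dict)


# Order in which a healthy rack moves through its lifecycle.
_NEXT = {
    Phase.PENDING: Phase.PROVISIONED,
    Phase.PROVISIONED: Phase.POWERED_ON,
    Phase.POWERED_ON: Phase.VALIDATED,
    Phase.VALIDATED: Phase.READY,
}


def reconcile(rack: Rack) -> Rack:
    """Advance the rack by at most one phase toward READY."""
    if rack.phase in (Phase.READY, Phase.FAILED):
        return rack  # Terminal states: nothing left to do.
    if rack.phase is Phase.POWERED_ON:
        # Validation gate: every check must be present and passing.
        if not rack.checks or not all(rack.checks.values()):
            rack.phase = Phase.FAILED
            return rack
    rack.phase = _NEXT[rack.phase]
    return rack


def is_schedulable(rack: Rack) -> bool:
    """Only a fully validated rack may accept workloads."""
    return rack.phase is Phase.READY
```

Calling `reconcile` repeatedly mirrors how a controller converges an object toward its desired state one step at a time. A rack with a failing check stops at `Failed` and never becomes schedulable.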

For a sysadmin, this aspect matters perhaps even more than the GPU model itself: when the opportunity cost of one hour of downtime is measured in dozens (or hundreds) of GPUs or in training queues, the question “is the system healthy?” ceases to be a one-off check and shifts to continuous telemetry with automated decisions.
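As a rough illustration of moving from a one-off health check to continuous telemetry with automated decisions, the sketch below keeps a rolling window over a per-rack error-rate signal and maps its average to an action. The signal, the thresholds, and the action names (`keep`, `drain`, `isolate`) are assumptions made for the example and do not come from the announcement.

```python
from collections import deque
from statistics import mean


class HealthMonitor:
    """Rolling window over a hypothetical per-rack error-rate signal."""

    def __init__(self, window: int = 5, drain_at: float = 0.01,
                 isolate_at: float = 0.05):
        # Keep only the most recent `window` samples.
        self.samples = deque(maxlen=window)
        self.drain_at = drain_at      # Assumed threshold, not a real default.
        self.isolate_at = isolate_at  # Assumed threshold, not a real default.

    def observe(self, error_rate: float) -> str:
        """Record one sample and return the action for the current window."""
        self.samples.append(error_rate)
        avg = mean(self.samples)
        if avg >= self.isolate_at:
            return "isolate"  # Remove the rack from service immediately.
        if avg >= self.drain_at:
            return "drain"    # Stop admitting new work, let jobs finish.
        return "keep"
```

Averaging over a window instead of reacting to a single sample is one simple way to avoid flapping between states on a transient spike. A production system would likely combine several signals.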

What Rubin Brings to the Upcoming Workloads

CoreWeave positions Rubin as a platform for Mixture-of-Experts models and workloads requiring sustained compute on a large scale, aligning with current trends: larger models, but also more complex in execution, with inference patterns changing depending on the request type, connected tools, or multi-agent flows.

In its messaging, the company also frames Rubin as an enabler for compute-intensive sectors with strict latency and reliability demands, citing examples like drug discovery, genomic research, climate simulation, and fusion energy modeling. Traditionally, these workloads have led platform teams to build their own “parallel layers” of observability, metrics, and change control. Here, the idea is to standardize this as an operational norm.

What a Systems Administrator Should Read between the Lines

This announcement suggests several practical implications:

- End-to-end observability, yet actionable

CoreWeave mentions diagnostics and visibility at the fleet, rack, and cabinet levels, aiming to present a real “schedulable” capacity. In production, this usually translates into fewer surprises, capacity planning based on actual health, and stricter policies on what enters the queues.

- Infrastructure orchestration, beyond just containers

A Kubernetes-native rack lifecycle controller hints at convergence: the platform’s control plane (K8s) starts to absorb decisions that traditionally sat “outside” the cluster (power management, hardware validation, readiness checks).

- Infrastructure as a product, not just a project

The announcement emphasizes “bringing new technologies quickly to market” without sacrificing reliability. In real environments, that requires validation pipelines, network and performance testing, and rollout/rollback processes similar to software deployments but applied to hardware.
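The difference between nameplate capacity and “schedulable” capacity mentioned above can be made concrete with a few lines of arithmetic. This is only a sketch: the phase strings and function names are hypothetical, and the figure of 72 GPUs per rack is an assumption based on the NVL72 name.

```python
GPUS_PER_RACK = 72  # Assumption: one NVL72 rack exposes 72 GPUs.


def nameplate_gpus(rack_phases: dict[str, str]) -> int:
    """GPUs installed, regardless of rack health."""
    return GPUS_PER_RACK * len(rack_phases)


def schedulable_gpus(rack_phases: dict[str, str]) -> int:
    """GPUs on racks that are currently in the hypothetical 'Ready' phase."""
    ready = sum(1 for phase in rack_phases.values() if phase == "Ready")
    return GPUS_PER_RACK * ready


def can_admit(job_gpus: int, rack_phases: dict[str, str]) -> bool:
    """Admit a job only if it fits in healthy capacity, not nameplate."""
    return job_gpus <= schedulable_gpus(rack_phases)


fleet = {"rack-a": "Ready", "rack-b": "Draining", "rack-c": "Ready"}
print(nameplate_gpus(fleet), schedulable_gpus(fleet))
```

Planning against `schedulable_gpus` rather than `nameplate_gpus` is what “capacity planning based on actual health” amounts to in this simplified model.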

Quick Table: Key Components and Why They Matter in Operations

| Layer / Component | What It Is | Why It Matters to a Sysadmin / SRE |
|---|---|---|
| NVIDIA Rubin (Platform) | Next-generation platform for large-scale training and inference | Alters provisioning, power, and networking profiles; demands more “industrial” operation |
| NVL72 (Rack as a unit) | High-density rack designed as a building block | Simplifies capacity units, but requires mastery of power, cooling, and telemetry per rack |
| Mission Control | Operational standard for AI workloads (train/infer/agentic) | Reduces DIY “layered operation” by unifying observability and processes |
| RAS / Real-time diagnostics | Telemetry and diagnostics in real time | Enables automatic decisions (isolate, drain, reprovision), improves MTTR |
| Rack Lifecycle Controller (Kubernetes-native) | Orchestrator treating the rack as a programmable entity | Brings hardware automation closer to GitOps/CI/CD workflows, enabling faster, safer deployment |

A Strategic Note: “Rubin in 2026” as a Market Signal

Beyond the technical specs, CoreWeave emphasizes its narrative: being the cloud where labs and enterprises run “real” AI when the technology is entering a new generation. In a market where bottlenecks are no longer just silicon but “bringing it to production reliably,” the promise of “being first to deploy” only makes sense if backed by mature operations. That’s why the announcement heavily focuses on operational standards, observability, and rack lifecycle management.

Frequently Asked Questions

What does it mean that Rubin is oriented toward “agentic” AI and reasoning?

It means the infrastructure is being prepared for models that not only respond but also chain steps, consult tools, and execute actions, which raises the demands for low latency, consistency, and continuous operation.

Why does treating an NVL72 as “a programmable entity” change data center operations?

Because the unit of management shifts from the individual server to the whole rack. Provisioning, power management, and validation are automated per rack, which makes deployments repeatable and reduces human error in high-density environments.

What does a company gain by using a cloud with integrated operational standards (Mission Control) versus “generic GPU instances”?

In theory, more reliable production: better observability, faster diagnostics, and a truly “schedulable” capacity for critical workloads (training or inference) with fewer unexpected degradations.

When does CoreWeave plan to deploy NVIDIA Rubin?

The communicated window is the second half of 2026, with CoreWeave positioning itself as one of the first providers to make it available to customers.