For years, liquid cooling in data centers has been treated as an “extra” for very specific facilities: laboratories, HPC sites, especially dense clusters, or deployments with space constraints. That script is changing rapidly. The reason is easy to name: the wave of infrastructure for Artificial Intelligence, with increasingly powerful accelerators, denser racks, and a physical limit that air can no longer stretch much further without compromising power consumption, reliability, and operational costs.

This transition, from niche technology to structural requirement, is the main thesis of a recent Dell’Oro Group report, which predicts that the global data center liquid cooling market will grow strongly, reaching around $7 billion in manufacturer revenue by 2029. The firm also anticipates a particularly sharp jump in the short term: the market could nearly double in 2025, to approximately $3 billion.
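As a rough sanity check on those two milestones, the implied average annual growth can be worked out with the standard compound annual growth rate (CAGR) formula. The Python sketch below uses the rounded estimates quoted above. The function name `implied_cagr` is illustrative, and the resulting rate is a back-of-the-envelope derivation, not a figure taken from the Dell’Oro report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded market estimates quoted in the article, in billions of USD.
MARKET_2025_BN = 3.0
MARKET_2029_BN = 7.0

cagr = implied_cagr(MARKET_2025_BN, MARKET_2029_BN, 2029 - 2025)
print(f"Implied CAGR 2025-2029: {cagr:.1%}")
```

With these rounded inputs the result is roughly 23.6% per year, which would mean continued growth after the initial jump, though at a slower pace than the near-doubling expected for 2025.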

Why now: AI is pushing the thermal design of data centers to the limit

The debate is no longer whether liquid cooling “improves efficiency,” but whether certain configurations can operate without it. As accelerators' thermal design power (TDP) rises and AI architectures concentrate more power per rack, air becomes a bottleneck: moving enough airflow, maintaining stable temperatures, and avoiding hot spots become both engineering and economic challenges.
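To get a feel for why air becomes the bottleneck, the basic heat-transport relation Q = ρ · V̇ · c_p · ΔT can be used to compare how much air and how much water would have to flow to carry away the same heat. The Python sketch below is only an illustration under stated assumptions: the 100 kW rack load and the 10 K temperature rise are hypothetical values chosen for the example (they are not from the report), the fluid properties are approximate room-temperature figures, and real coolants are often water-glycol mixtures with somewhat lower heat capacity.

```python
def volumetric_flow_m3_per_s(heat_load_w: float, density_kg_m3: float,
                             specific_heat_j_kg_k: float, delta_t_k: float) -> float:
    """Flow needed to absorb heat_load_w with a fluid temperature rise of delta_t_k.

    Rearranged from Q = rho * V_dot * c_p * delta_T.
    """
    return heat_load_w / (density_kg_m3 * specific_heat_j_kg_k * delta_t_k)


# Approximate fluid properties near room temperature (density, specific heat).
AIR = {"density_kg_m3": 1.2, "specific_heat_j_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "specific_heat_j_kg_k": 4180.0}

# Hypothetical example values, assumed for illustration only.
RACK_HEAT_LOAD_W = 100_000.0  # a 100 kW rack
DELTA_T_K = 10.0              # same temperature rise assumed for both fluids

air_flow = volumetric_flow_m3_per_s(RACK_HEAT_LOAD_W, delta_t_k=DELTA_T_K, **AIR)
water_flow = volumetric_flow_m3_per_s(RACK_HEAT_LOAD_W, delta_t_k=DELTA_T_K, **WATER)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs about {air_flow / water_flow:,.0f}x the volume flow of water")
```

Under these assumptions the rack would need on the order of 8 m³/s of air but only a couple of liters per second of water, a difference of more than three orders of magnitude in volume flow. That gap in volumetric heat capacity is the physical reason for moving heat removal onto a liquid loop.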

Dell’Oro summarizes this with a clear idea: what was once an optional energy-saving upgrade is becoming a functional requirement for deploying large-scale AI infrastructure. At the same time, the market is reorganizing: more vendors are entering, investment is increasing, and the supply chain is adapting to a new pattern of demand.

The dominant architecture today: single-phase direct liquid cooling

Within the “liquid world,” not all approaches are the same. The report highlights that single-phase direct liquid cooling has established itself as the dominant architecture for AI clusters.

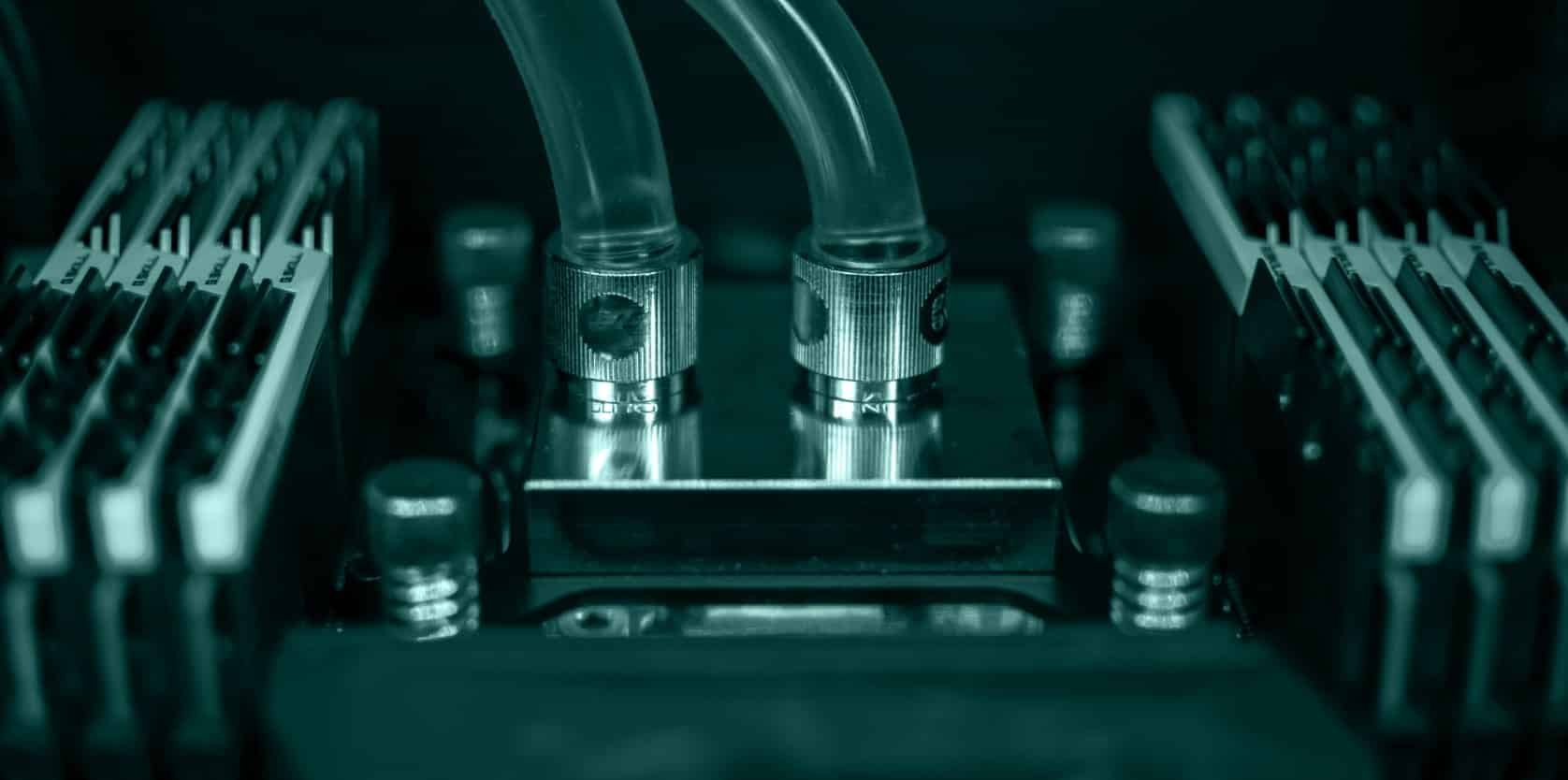

Simply put: instead of trying to cool the entire server with air, a liquid coolant is circulated through cold plates that make direct contact with the components generating the most heat (mainly GPUs/accelerators, CPUs, and, depending on the setup, memory and VRMs). Heat is extracted at the source far more efficiently, with much less reliance on pushing ever larger volumes of air through the rack.

Why is this option prevailing over others?

- Industry maturity: there is real operational experience (especially at large scale).

- Broad ecosystem: server manufacturers, integrators, and infrastructure providers have standardized many components (plates, connectors, CDUs, distribution).

- Reasonable scalability: allows growth without completely redesigning the data center, especially when planned from the start.

And what about immersion or two-phase cooling?

Dell’Oro outlines a more nuanced scenario for alternative approaches:

- Two-phase direct cooling: expected to gradually increase and gain traction as chip heat and thermal density exceed the practical limits of single-phase systems. Until then, adoption will likely concentrate on pilots and early large-scale deployments.

- Immersion cooling: finds its niche in selective deployments where trade-offs (operation, maintenance, hardware compatibility, handling procedures) are justified by specific performance or operational goals.

In other words: the market isn’t driven by “trend,” but by thermal thresholds and the reality of operating thousands of servers without maintenance turning into a nightmare.

Who is capitalizing on the moment

Beyond the technology itself, market power is also being redistributed within the ecosystem. Dell’Oro highlights that Vertiv maintains market leadership, with other well-positioned players such as CoolIT, nVent, and Boyd. It also notes the rapid growth of AAON as an example of how the ability to deliver highly customized solutions, and to forge deep relationships with major clients, can quickly translate into market share.

This is important because liquid cooling isn’t just “plumbing.” It involves coolant distribution design, hardware integration, thermal control, monitoring, redundancy, operational procedures, and, increasingly, custom engineering tailored to AI workloads.

Hyperscale and colocation: the two main drivers

The report positions hyperscalers as the natural anchors of demand (due to volume and technical needs), but also points to a secondary push: colocation centers, often built specifically to host AI loads and their thermal requirements.

This aligns with a market reality: not everyone will build their own data center for AI, but many can rent capacity in facilities prepared for high densities. In that context, liquid cooling becomes part of the operator’s “product”: power, space, connectivity… and thermal capacity.

Quick table: market size according to Dell’Oro (latest milestones)

| Indicator | Estimate (Dell’Oro) | Implication |
|---|---|---|
| Global market 2025 | ~ $3 billion | Acceleration: shifting from “niche” to broad adoption |
| Global market 2029 | ~ $7 billion | Liquid cooling establishes itself as basic infrastructure for AI |
| Current dominant architecture | Single-phase direct | Standardization and large-scale deployments |
| Technological evolution | Two-phase and immersion | More selective growth, tied to thermal thresholds |

What this means for companies and operators

For end users (businesses, labs, AI teams, SaaS providers), the practical consequence is direct: computing capacity is no longer just “GPUs and euros,” but also “watts and cooling.” In many bids and projects, the critical question is now: “Where do we host this to ensure stability and scalability?”

For data center operators and builders, the transformation is equally tangible:

- Facilities are designed with density in mind—not just the “average” server.

- The commercial offering includes liquid cooling capacity as a differentiator.

- “Day 2” operations (procedures, spare parts, monitoring, operational safety) are becoming more professionalized.

Overall, the sector is entering a clear cycle of investment, standardization, and consolidation, and is establishing a new “floor” for AI infrastructure.

Frequently Asked Questions

What is single-phase direct liquid cooling in a data center?

It’s a system where a liquid coolant circulates through cold plates that make direct contact with components (GPU/CPU). The liquid doesn’t change phase, and the loop is usually integrated with coolant distribution units (CDUs) and thermal control systems.

When does immersion cooling make sense?

Typically when the goal is maximum density and thermal efficiency, and those gains justify the operational trade-offs (maintenance, hardware compatibility, handling procedures). It’s not the default choice for every case.

Why is AI accelerating the adoption of liquid cooling so much?

Because AI accelerators and racks concentrate substantial power in limited space. Under these conditions, air hits physical and economic limits in reliably removing heat.

Does liquid cooling reduce total costs or make them higher?

It depends, but market logic indicates it enables deploying workloads that otherwise couldn’t operate—or would do so with penalties. At higher densities, it shifts from an “extra” cost to a necessary one for scaling.

via: prnewswire