The race to run increasingly large AI models is no longer confined to data centers. At CES 2026, NVIDIA shifted its focus to a new scenario: the developer’s desktop. The company introduced NVIDIA DGX Spark and NVIDIA DGX Station, two “deskside” systems designed to let engineering, research, and data science teams test, fine-tune, and run advanced models locally, with the option to scale to the cloud later.

This move responds to a growing industry reality: open software accelerates innovation, but modern workflows — from RAG (Retrieval-Augmented Generation) to multi-step reasoning and agents — require unified memory, sustained performance, and optimized tools. In this context, NVIDIA envisions DGX Spark and DGX Station as a kind of “bridge” between a personal workspace and corporate infrastructure, reducing friction for working with heavier models without always relying on remote racks.

Two machines, two levels: from open models to the local “frontier”

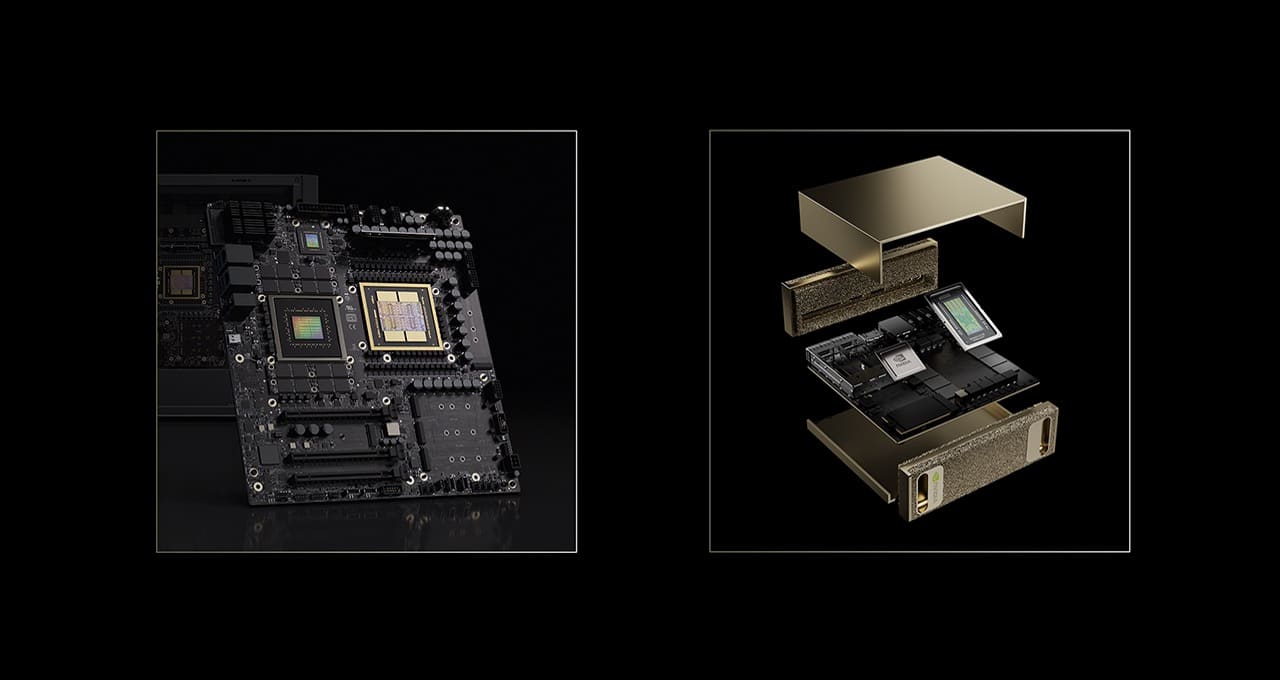

The approach is divided into two clear tiers. DGX Spark is aimed at broad use: a compact, “plug-and-play” system ready to run and fine-tune cutting-edge models right on the desktop. DGX Station, by contrast, targets environments that handle frontier workloads: research labs, high-performance engineering groups, or companies that want to experiment with large-scale models without turning every iteration into a cloud GPU reservation.

NVIDIA summarizes the difference simply: DGX Spark can handle models with around 100 billion parameters, while DGX Station raises the bar to 1 trillion parameters in a deskside system. According to the company, both systems are built on Grace Blackwell, combining coherent memory with performance tuned for the current demands of AI: longer context, more reasoning, and growing pressure on efficiency.

DGX Spark: “plug-and-play” optimization and large models on a desktop

DGX Spark is presented as a machine designed so developers don’t have to start “from scratch.” It is preconfigured with NVIDIA’s software stack and CUDA-X libraries to accelerate typical AI workflows: from prototyping and fine-tuning to inference and validation.

A technical highlight NVIDIA emphasizes is the role of the NVFP4 format, associated with the Blackwell architecture. NVIDIA states that this format allows models to be compressed by up to 70% and improves performance without a loss in model quality. This is especially relevant on the desktop, where memory limits and data transfer costs are often the practical constraints.
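To see where a figure in the region of 70% could come from, here is a back-of-the-envelope sketch. It assumes (this detail is not in the article) that NVFP4 stores 4-bit values in blocks of 16, each block sharing one 8-bit scale factor, and that the comparison baseline is FP16. The function names are illustrative only.

```python
# Back-of-the-envelope estimate of NVFP4 storage savings.
# Assumption (not from the article): each block of 16 four-bit values
# shares one 8-bit scale factor; any per-tensor scale is ignored.

def bits_per_value(value_bits: int, block_size: int, scale_bits: int) -> float:
    """Average storage per element, amortizing the per-block scale."""
    return value_bits + scale_bits / block_size

def reduction_vs(baseline_bits: float, quantized_bits: float) -> float:
    """Fractional size reduction relative to the baseline format."""
    return 1.0 - quantized_bits / baseline_bits

NVFP4_BITS = bits_per_value(value_bits=4, block_size=16, scale_bits=8)
FP16_REDUCTION = reduction_vs(16, NVFP4_BITS)
FP8_REDUCTION = reduction_vs(8, NVFP4_BITS)

if __name__ == "__main__":
    print(f"NVFP4 average bits per value: {NVFP4_BITS}")
    print(f"Reduction vs FP16: {FP16_REDUCTION:.1%}")
    print(f"Reduction vs FP8:  {FP8_REDUCTION:.1%}")
```

Under these assumptions the saving against FP16 lands just above 70%, which is consistent with the “up to 70%” claim. Against an FP8 baseline the saving would be noticeably smaller, so the headline number depends on what the model was stored in to begin with.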

There’s also a clear nod to the open-source community: NVIDIA highlights its work with projects like llama.cpp, which it says delivers a 35% average performance boost when running models on DGX Spark. The company also mentions “quality of life” improvements such as faster loading times for LLMs. The takeaway: it’s not just about fast hardware, but about a combination of optimization, numeric formats, and an open ecosystem that brings models once requiring data center hardware to a desktop setup.

In parallel, NVIDIA’s own product materials position DGX Spark more ambitiously: they mention up to 128 GB of unified memory and the capacity to run models of up to 200 billion parameters locally, enabling fine-tuning and inference testing for development and validation.

DGX Station: the “GB300” comes off the rack and into the office

If DGX Spark aims to democratize access to large models, DGX Station seeks to change the routines of those who develop infrastructure and frameworks. NVIDIA states that DGX Station is built on the GB300 Grace Blackwell Ultra and features 775 GB of coherent memory, a key reason the company positions it as capable of handling models of around one trillion parameters locally.
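The parameter counts quoted for both systems can be sanity-checked with simple arithmetic: weight memory is roughly parameters times bits per parameter. The sketch below is an approximation under stated assumptions, not NVIDIA's sizing method. It counts weights only, ignores KV cache, activations, and runtime overhead, and uses a flat 4 bits per parameter for the low-precision case.

```python
# Rough weight-memory estimate: parameters x bits per parameter.
# Assumption: weights only. KV cache, activations, and runtime overhead
# are ignored, so real headroom is smaller than these numbers suggest.

GB = 10**9  # decimal gigabytes, matching how the article quotes memory

def weight_gb(params: float, bits_per_param: float) -> float:
    """Memory needed for model weights alone, in GB."""
    return params * bits_per_param / 8 / GB

def fits(params: float, bits_per_param: float, memory_gb: float) -> bool:
    """True if the weights alone fit in the given memory."""
    return weight_gb(params, bits_per_param) <= memory_gb

# (parameter count, memory in GB) as quoted in the article
SYSTEMS = {
    "DGX Spark": (200e9, 128),    # 200B parameters, 128 GB unified memory
    "DGX Station": (1e12, 775),   # 1T parameters, 775 GB coherent memory
}

if __name__ == "__main__":
    for name, (params, mem) in SYSTEMS.items():
        for label, bits in [("FP16", 16), ("FP8", 8), ("4-bit", 4)]:
            need = weight_gb(params, bits)
            verdict = "fits" if fits(params, bits, mem) else "does not fit"
            print(f"{name}: {params:.0e} params at {label} "
                  f"-> {need:.0f} GB ({verdict} in {mem} GB)")
```

With these inputs, both headline figures only work at 4-bit precision: 200 billion parameters need about 100 GB and one trillion need about 500 GB, while FP8 and FP16 overflow the available memory on each system. That suggests the quoted model sizes assume a 4-bit format such as NVFP4.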

Beyond the raw figures, the real value lies in how the system is used: developers of runtimes and inference engines often face slow iteration cycles dictated by hardware availability. NVIDIA cites voices from the ecosystem: a vLLM maintainer points out how hard it is to test and optimize directly on GB300 when the chip is normally deployed at rack scale, while SGLang contributors describe the advantage of working locally with very large models and demanding configurations without relying on “cloud racks.”

NVIDIA lists examples of models that fit this approach, including recent frontier and open-weight models, and positions DGX Station as a tool to shorten the loop: test changes, tune CUDA kernels, validate performance, and iterate, all within the lab.

Demos: speed, large-scale visualization, and “cobot” agents

At CES, NVIDIA showcased DGX Station live with demos built to impress through sheer scale and speed. Among them: a pretraining session reaching 250,000 tokens per second, visualizations with millions of points, and the creation and visualization of large knowledge bases via “Text to Knowledge Graph.” These are not casual use cases; they are meant to project the image of a desktop machine handling loads once associated with data centers.

DGX Spark, meanwhile, is presented as a practical tool for creative production and prototyping. NVIDIA notes that diffusion and video generation models, such as those from Black Forest Labs or Alibaba, already support NVFP4, reducing memory usage and speeding up execution. In the same vein, the company claims DGX Spark can offload video generation workloads that saturate creator laptops, and in one demo it achieved an 8x acceleration compared to an M1 Max MacBook Pro in a generative video scenario.

There’s also a focus on keeping data and code “local”: NVIDIA demonstrates a local CUDA programming assistant built on NVIDIA Nsight running on DGX Spark—appealing to companies seeking productivity without pushing their repositories to external services.

The most “CES-style” part of the announcement is a demo with Hugging Face: DGX Spark is combined with the Reachy Mini robot to create an interactive agent with vision, speech, and motor responses, guided by a step-by-step tutorial from Hugging Face. The demo shows the agent moving from a browser tab to a tangible presence on the desk.

Ecosystem, availability, and core message: local AI without sacrificing scalability

The list of partners selling these systems underscores NVIDIA’s goal to establish them as a category. According to the company, DGX Spark and related systems are available through manufacturers and partners like Acer, Amazon, ASUS, Dell, GIGABYTE, HP, Lenovo, Micro Center, MSI, and PNY. As for DGX Station, NVIDIA indicates availability from Spring 2026, with partners including ASUS, Boxx, Dell, GIGABYTE, HP, MSI, and Supermicro.

On the software front, NVIDIA adds a key commercial component: NVIDIA AI Enterprise extends support to DGX Spark and partners’ GB10 systems, with licenses expected by the end of January. This reinforces the enterprise pitch: dependable support, drivers, operators, and deployment capabilities.

The overarching message is clear: AI has become too important — and too costly — to rely solely on a single consumption mode. NVIDIA advocates a hybrid vision: local and secure development when control, iteration, and IP matter most, and scaling to data centers or the cloud when training, serving, or production at scale are needed.

FAQs

What is the difference between NVIDIA DGX Spark and DGX Station for running language models locally?

DGX Spark is aimed at development and testing with large models in a compact, plug-and-play setup, while DGX Station targets frontier workloads and models up to 1 trillion parameters, with a focus on laboratory and enterprise use.

What does it mean that DGX Spark uses NVFP4, and why is it important for desktop AI?

NVFP4 is a 4-bit numeric format NVIDIA uses to reduce the effective size of models and boost performance. The company claims it can compress models by up to 70%, allowing larger models to run with a smaller memory footprint.

Can DGX Station replace a GPU cluster for frontier model research?

It’s not intended to replace large clusters, but it fills a critical need: shortening iteration cycles and validating optimizations locally with data center-class hardware before scaling to massive infrastructure.

What real-world use cases do DGX Spark and DGX Station enable for companies wanting to keep data and code local?

Their focus is on local inference, RAG workflows, fine-tuning, agent prototyping, and development tools (such as CUDA programming assistants), all with the advantage of maintaining control over data and IP while enabling cloud scaling later.

via: blogs.nvidia