The new generation of advanced artificial intelligence models now has a clear favorite architecture: Mixture of Experts (MoE). And NVIDIA aims to be the engine powering it. According to NVIDIA, its GB200 NVL72, a rack-scale system built for massive generative AI, delivers up to 10 times the inference performance of the previous-generation H200 on leading MoE models such as Kimi K2 Thinking, DeepSeek-R1, and Mistral Large 3, a leap that could transform the economics of AI in large data centers.

What does the Mixture of Experts architecture really bring?

For years, the industry followed a simple logic: to build smarter models, you had to make them bigger. Dense models with hundreds of billions of parameters activate every one of those parameters for each generated token. The result: enormous capacity… and a computational and energy cost that is hard to sustain.

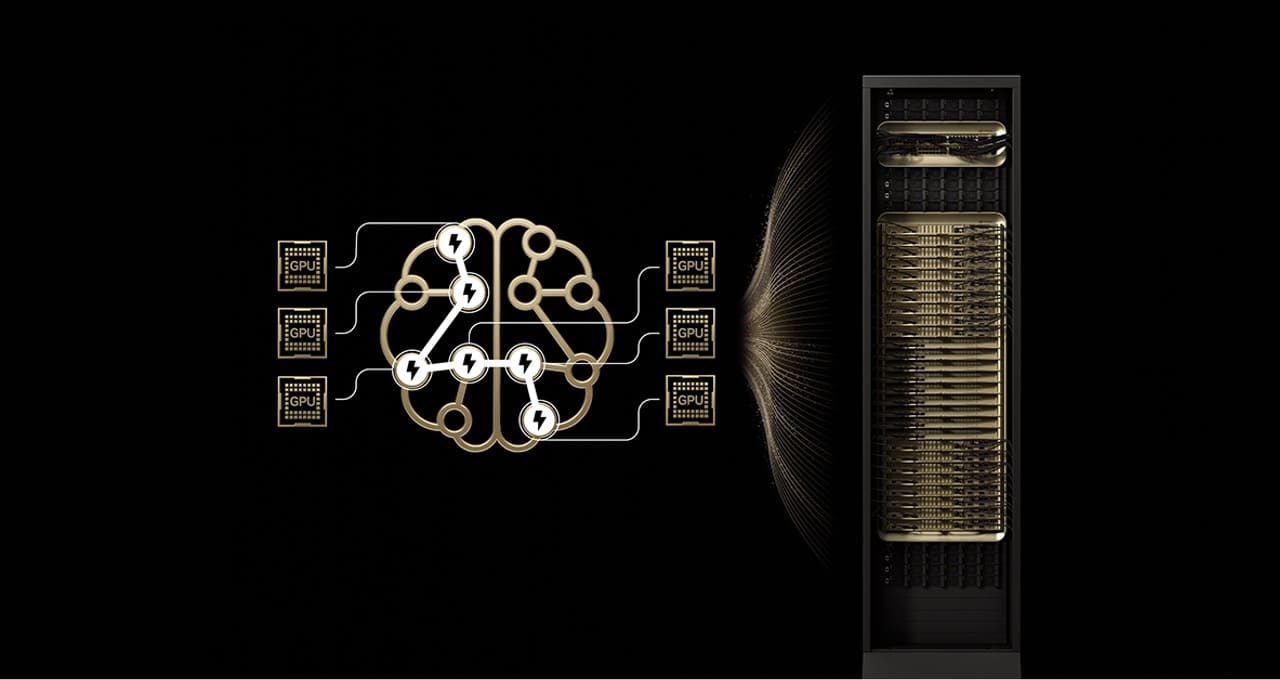

Mixture of Experts models break this pattern. Instead of activating the entire model, they split parts of the network (typically the feed-forward layers) into many specialized sub-networks called “experts.” A router decides which small subset of experts to activate for each token, loosely similar to how the brain uses different regions depending on the task at hand.

Because only the selected experts run, a model can combine an enormous total number of parameters with a much smaller number of active parameters per token. The practical consequence: the reasoning capacity of a very large model at a significantly lower compute cost per token, and therefore much better energy efficiency.
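The routing step can be illustrated with a minimal sketch in plain Python. It assumes a softmax router whose top-k gate weights are renormalized to sum to 1, a common design but not necessarily what any of the models named here use (real routers differ in scoring function, load-balancing terms and shared experts). The functions `route_token` and `moe_layer` and the toy experts are hypothetical.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, k=2):
    """Select the top-k experts for one token.

    router_logits: one score per expert (in a real model, the output of a
    learned linear layer; here passed in directly).
    Returns (expert_index, gate_weight) pairs; weights are renormalized to sum to 1.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(token, router_logits, experts, k=2):
    """Run only the selected experts and sum their outputs, weighted by the gates."""
    out = [0.0] * len(token)
    for idx, weight in route_token(router_logits, k):
        y = experts[idx](token)  # the other experts are never evaluated
        out = [o + weight * v for o, v in zip(out, y)]
    return out

# Four toy "experts" that just scale their input by different factors.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 1.5]
print(route_token(logits, k=2))  # experts 1 and 3 are selected
print(moe_layer([1.0, 1.0], logits, experts, k=2))
```

Only two of the four experts do any work for this token; with hundreds of experts and a small k, that skipped work is where the per-token savings come from.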

This is no minor detail: according to the Artificial Analysis rankings, the top 10 open models, including DeepSeek-R1, Kimi K2 Thinking, and Mistral Large 3, use MoE architectures, a sign that this approach has become the de facto standard for frontier models.

The problem: scaling MoE in production is not trivial

The less visible side is that deploying these models at large scale is very hard. The main bottlenecks are well known:

- Memory limitations: for each token, the parameters of the selected experts must be dynamically loaded from HBM, putting heavy pressure on each GPU’s memory bandwidth.

- All-to-all communication: experts are distributed across many GPUs, which must exchange tokens and partial results within milliseconds to produce a coherent response. When this traffic is routed over general-purpose networks, latency spikes.
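The all-to-all pattern can be sketched as a simple planning step: each token's data has to reach whichever GPU hosts each of its selected experts. This is an illustrative sketch only; the function `plan_dispatch`, the round-robin placement and the routing table are hypothetical, and real frameworks perform this exchange with collective communication kernels rather than Python dictionaries.

```python
from collections import defaultdict

def plan_dispatch(token_experts, expert_to_gpu):
    """Group (token, expert) pairs by the GPU that hosts each expert.

    token_experts: {token_id: [expert ids picked by the router]}
    expert_to_gpu: {expert_id: gpu_id}, a static expert placement.
    Returns {gpu_id: [(token_id, expert_id), ...]}, i.e. what must be sent
    to each GPU in the "dispatch" half of the all-to-all. The expert outputs
    travel back to each token's home GPU in a mirror-image "combine" step.
    """
    sends = defaultdict(list)
    for token, experts in token_experts.items():
        for expert in experts:
            sends[expert_to_gpu[expert]].append((token, expert))
    return dict(sends)

# 8 experts placed round-robin on 4 GPUs; each token picks 2 experts.
placement = {e: e % 4 for e in range(8)}
routing = {0: [1, 6], 1: [1, 3], 2: [0, 7]}
print(plan_dispatch(routing, placement))
```

Even three tokens fan out to all four GPUs, which is why the speed of the interconnect carrying this exchange matters so much at every layer.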

Platforms such as NVIDIA H200 struggle to extend expert parallelism beyond 8 GPUs: going further typically means crossing external networks between servers, which add latency and reduce overall performance. This is where NVIDIA’s GB200 NVL72 comes into play.
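A back-of-the-envelope model shows why the size of the high-speed domain matters. The sketch below assumes experts, and therefore routed tokens, are spread uniformly over all GPUs; under that assumption, the share of expert traffic that must leave a GPU's NVLink domain is 1 - domain_size / num_gpus. The function name and the GPU counts are illustrative assumptions, not measurements.

```python
def off_domain_fraction(num_gpus, domain_size):
    """Fraction of expert traffic that must leave the sender's NVLink domain.

    Assumes experts (and therefore routed tokens) are spread uniformly over
    num_gpus, and GPUs are grouped into NVLink domains of domain_size GPUs.
    """
    local = min(domain_size, num_gpus)
    return 1 - local / num_gpus

# Illustrative cases: 8-GPU NVLink domains vs. one 72-GPU domain.
for gpus, domain in [(8, 8), (16, 8), (64, 8), (72, 72)]:
    share = off_domain_fraction(gpus, domain)
    print(f"{gpus} GPUs, NVLink domain of {domain}: {share:.0%} of traffic leaves the domain")
```

Once expert parallelism outgrows an 8-GPU domain, most of the all-to-all traffic crosses the slower external network; keeping all 72 GPUs in one domain removes that hop entirely.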

GB200 NVL72: 72 Blackwell GPUs as if they were one

GB200 NVL72 is a rack-scale system that connects 72 NVIDIA Blackwell GPUs through NVLink Switch into a single high-speed domain, delivering 1.4 exaflops of AI performance and 30 TB of fast shared memory.

Practically, for MoE models, this means:

- Fewer experts per GPU: spreading the experts across 72 GPUs drastically reduces the memory load on each accelerator, freeing capacity to serve more concurrent users and longer contexts.

- Ultrafast communication between experts: the NVLink fabric provides up to 130 TB/s of bandwidth within the rack, enabling all-to-all exchanges between experts without falling back to higher-latency networks.

- Computation within the switch itself: NVLink Switch can perform reduction and aggregation operations in-network, speeding up the combination of results from various experts.
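The "fewer experts per GPU" effect is simple arithmetic. The sketch below uses a hypothetical model (256 routed experts of 2.5 billion parameters each, stored at 4 bits, i.e. 0.5 bytes per parameter) and assumes experts are spread as evenly as possible with no replication. The function name and all figures are illustrative assumptions.

```python
import math

def expert_memory_per_gpu(num_experts, params_per_expert, bytes_per_param, num_gpus):
    """Worst-case routed-expert weight memory on one GPU under expert parallelism.

    Assumes experts are spread as evenly as possible with no replication, so the
    busiest GPU holds ceil(num_experts / num_gpus) experts. Shared layers, KV cache
    and activations are ignored.
    """
    experts_per_gpu = math.ceil(num_experts / num_gpus)
    return experts_per_gpu, experts_per_gpu * params_per_expert * bytes_per_param

# Hypothetical model: 256 experts of 2.5B parameters each, stored at 0.5 bytes/parameter.
for gpus in (8, 72):
    n, nbytes = expert_memory_per_gpu(256, 2.5e9, 0.5, gpus)
    print(f"{gpus} GPUs: up to {n} experts per GPU, {nbytes / 1e9:.1f} GB of expert weights")
```

In this example, each GPU's expert weights shrink from 40 GB to 5 GB; the freed HBM can hold KV cache for more concurrent requests and longer contexts.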

On top of this hardware foundation, NVIDIA adds full-stack optimizations: the NVFP4 4-bit format, which maintains accuracy at lower memory and compute cost; the NVIDIA Dynamo orchestrator, which separates the prefill and decode phases onto different resources; and native support in open inference frameworks such as TensorRT-LLM, SGLang, and vLLM, which already implement these MoE-specific techniques.
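The idea behind a block-scaled 4-bit format like NVFP4 can be shown with a simplified sketch. It assumes 4-bit E2M1 values with one shared scale per 16-element block, as NVIDIA describes NVFP4; the real format additionally stores that block scale in FP8 (E4M3) alongside a per-tensor FP32 scale and is executed in hardware. Here the scale is kept as an ordinary Python float, so this is an approximation of the technique, not NVIDIA's implementation. All names are hypothetical.

```python
import math

# Non-negative magnitudes representable by a 4-bit E2M1 float; the sign bit is separate.
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # one shared scale factor per 16-element block

def quantize_block(xs):
    """Return (scale, codes): one shared scale plus a signed E2M1 value per element."""
    amax = max(abs(x) for x in xs)
    scale = amax / 6.0 if amax > 0 else 1.0  # map the block's largest magnitude to 6.0
    codes = []
    for x in xs:
        mag = abs(x) / scale
        # Round to the nearest representable magnitude (ties go to the smaller one).
        nearest = min(E2M1_VALUES, key=lambda v: abs(v - mag))
        codes.append(math.copysign(nearest, x))
    return scale, codes

def dequantize_block(scale, codes):
    return [scale * c for c in codes]

def quantize(xs):
    """Quantize a flat list of weights block by block."""
    return [quantize_block(xs[i:i + BLOCK_SIZE]) for i in range(0, len(xs), BLOCK_SIZE)]

weights = [0.037 * i - 0.5 for i in range(32)]
restored = [v for scale, codes in quantize(weights) for v in dequantize_block(scale, codes)]
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Small blocks keep the error local: an outlier only inflates the scale of its own 16 values rather than the whole tensor.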

10x more performance for frontier models like Kimi K2 and Mistral Large 3

The impact is measurable. According to the company’s own data and external analyses, MoE models such as Kimi K2 Thinking, DeepSeek-R1, and Mistral Large 3 achieve up to 10 times higher performance on GB200 NVL72 than on previous-generation H200 systems.

This 10x leap isn’t just a lab figure: it translates into:

- Much higher token throughput (tokens per second) for the same power consumption.

- Reduced cost per token, directly improving the profitability of AI services.

- Lower latency, critical for interactive applications, conversational assistants, or agents that chain multiple model calls.
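The link between throughput and cost per token is direct arithmetic: for a system billed at a fixed hourly cost, cost per token falls in proportion to tokens per second. The sketch below uses hypothetical figures and holds hourly cost constant; in practice the saving also depends on the relative hourly cost of each system, which a throughput multiplier alone does not capture.

```python
def cost_per_million_tokens(cost_per_hour, tokens_per_second):
    """Serving cost per one million generated tokens for a system billed by the hour."""
    tokens_per_hour = tokens_per_second * 3600
    return cost_per_hour / tokens_per_hour * 1_000_000

# Hypothetical figures: same hourly cost, 10x the token throughput.
baseline = cost_per_million_tokens(cost_per_hour=100.0, tokens_per_second=10_000)
faster = cost_per_million_tokens(cost_per_hour=100.0, tokens_per_second=100_000)
print(f"baseline: ${baseline:.2f} per 1M tokens, faster system: ${faster:.2f} per 1M tokens")
```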

Specialized AI clouds such as CoreWeave and Together AI, along with large tech companies, are beginning to adopt the rack-scale GB200 NVL72 design precisely for these workloads, from advanced translation (the DeepL case) to enterprise intelligent-agent platforms.

Beyond MoE: towards AI factories with shared experts

While GB200 NVL72 has been introduced as the reference system for MoE, this approach aligns with the broader direction of modern AI:

- Multimodal models combine experts across text, vision, audio, or video, activating only what’s necessary for each task.

- Agentic systems coordinate multiple specialized agents (planning, search, external tools, long-term memory) working together to solve complex problems.

In both cases, the central idea remains the same as in MoE: having a set of specialized capabilities and routing each part of a problem to the “appropriate expert.”

With rack-scale infrastructures like GB200 NVL72, providers can go even further: building genuine “AI factories” where a large pool of shared experts serves many applications and clients simultaneously, maximizing hardware utilization and reducing the unit cost of each AI task.

Frequently Asked Questions about MoE and NVIDIA GB200 NVL72

How does a Mixture of Experts model differ from a traditional dense model?

In a dense model, all parameters are activated for each generated token. In a Mixture of Experts model, the model is divided into multiple “experts,” and only a few are activated per token, selected by a router. This allows combining enormous total capacity (hundreds of billions or even trillions of parameters) with a much lower computational cost per token.
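The gap between total and active parameters can be made concrete with a small calculation. The sketch assumes a simplified model where every parameter is either shared (always used) or belongs to exactly one routed expert; the configuration is hypothetical and does not describe any specific model. It also applies the common rule of thumb of roughly 2 FLOPs per active parameter per generated token, ignoring attention over the context.

```python
def moe_param_counts(shared_params, num_experts, params_per_expert, experts_per_token):
    """Return (total, active) parameter counts for a simplified MoE model.

    Simplification: every parameter is either shared (always used, e.g.
    attention and embeddings) or belongs to exactly one routed expert;
    params_per_expert sums that expert's weights over all MoE layers.
    """
    total = shared_params + num_experts * params_per_expert
    active = shared_params + experts_per_token * params_per_expert
    return total, active

# Hypothetical configuration, not taken from any real model.
total, active = moe_param_counts(
    shared_params=20 * 10**9,
    num_experts=256,
    params_per_expert=25 * 10**8,
    experts_per_token=8,
)
print(f"total: {total / 1e9:.0f}B, active per token: {active / 1e9:.0f}B "
      f"({active / total:.1%} of the weights)")
# Rule of thumb: about 2 FLOPs per active parameter per token (attention ignored).
print(f"~{2 * active / 1e9:.0f} GFLOPs per token instead of ~{2 * total / 1e9:.0f}")
```

All 660 billion parameters must still sit in memory, which is why MoE shifts the bottleneck from compute toward memory capacity and communication.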

What exactly does GB200 NVL72 bring compared to earlier generations like H200?

GB200 NVL72 groups 72 Blackwell GPUs into a single high-speed NVLink domain, providing 1.4 exaflops of AI performance and 30 TB of shared memory. This eases the classic MoE bottlenecks (memory and all-to-all communication), lets experts be distributed across many GPUs without the latency of external networks, and, according to NVIDIA, achieves up to 10x higher inference performance on models such as Kimi K2 Thinking, DeepSeek-R1, and Mistral Large 3.

What types of organizations will benefit most from this MoE + GB200 NVL72 combo?

Primarily large cloud providers, AI-as-a-service platforms, organizations training and deploying frontier models, and those developing advanced assistants, autonomous agents, or multimodal services with high traffic volumes. For these players, reducing per-token cost and energy consumption is critical to making cutting-edge models economically viable.

Will MoE replace dense models entirely?

Not necessarily. Dense models remain useful in memory-constrained environments, edge devices, or for specialized tasks. However, for large frontier models demanding high reasoning capacity and serving millions of users, the Mixture of Experts approach combined with infrastructures like GB200 NVL72 is gaining ground as a more efficient and scalable option.

via: Nvidia blogs and AI news