The debate is gaining momentum across the industry: as artificial intelligence shifts from training large foundation models to a phase of inference at massive scale, does it still make sense to keep filling data centers with GPUs, or is it time for custom ASICs and TPUs?

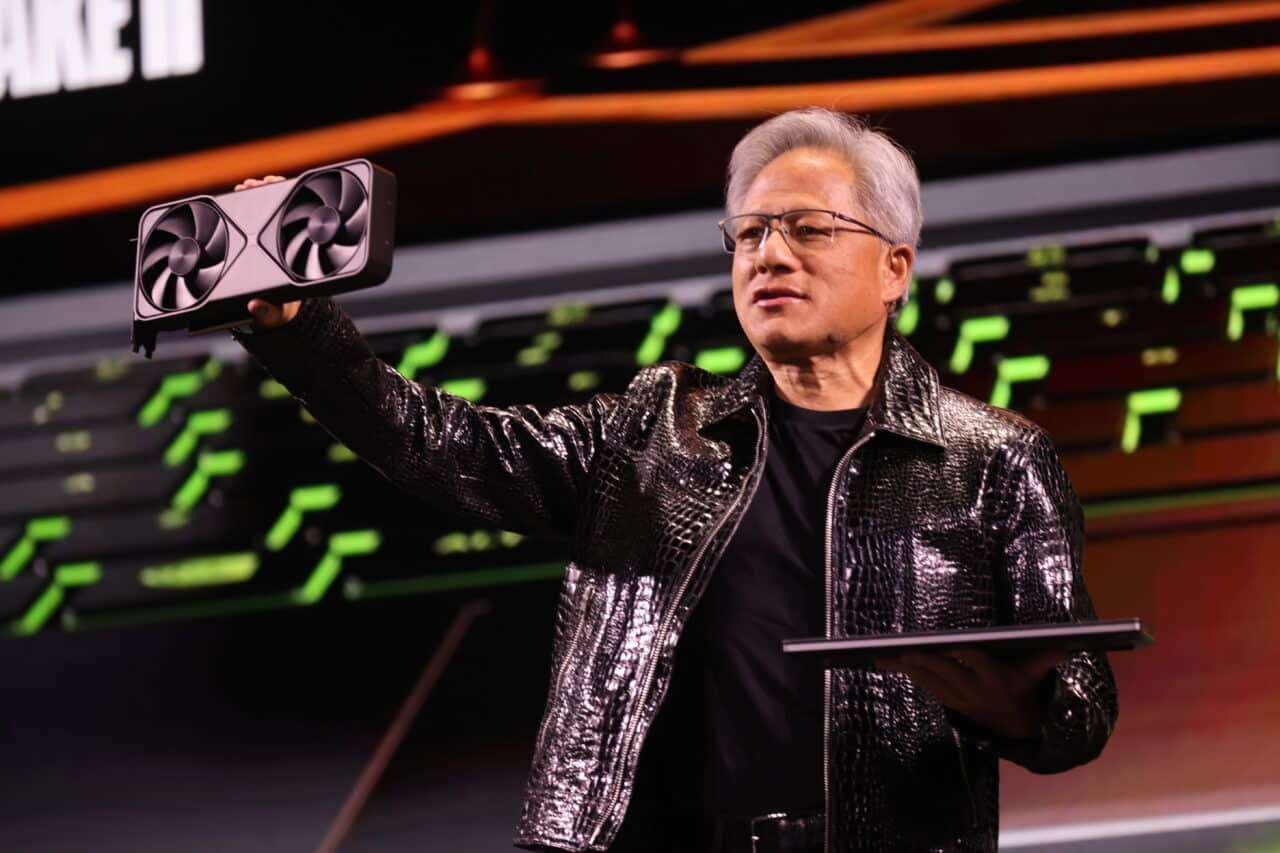

For Jensen Huang, CEO of NVIDIA, the answer remains clear. In a recent analyst session, he reiterated that the real battle in AI isn't between companies but between engineering teams, and that very few teams in the world can keep up with the pace of innovation his company maintains. In this context, he downplayed the rise of custom ASICs (application-specific integrated circuits) from giants like Google, whose TPUs many see as an alternative to general-purpose GPUs.

GPU vs. ASIC/TPU: two very different approaches to AI computation

Before diving into Huang’s perspective, let’s clarify what’s at stake. Two families of chips coexist in large AI data centers, and increasingly compete for the same workloads:

- GPU (Graphics Processing Unit):

  - Originally designed for graphics rendering, GPUs became the de facto standard for deep learning.
  - Highly parallel, general-purpose chips that are programmable and flexible.
  - In NVIDIA’s case, backed by the CUDA ecosystem: libraries, compilers, and tools optimized for AI.

- ASICs / TPUs (Application-Specific Integrated Circuits / Tensor Processing Units):

  - Custom-designed chips for very specific tasks, such as the tensor multiply-and-accumulate operations at the heart of neural networks.
  - Built for maximum energy efficiency and low operating costs on particular models and workloads, especially large-scale inference.
  - Iconic example: Google’s TPUs, used internally and offered to customers through Google Cloud.

Simplified comparison: GPU vs. ASIC/TPU for AI

| Feature | GPU (e.g., NVIDIA) | ASIC / TPU (e.g., Google TPU) |
|---|---|---|
| Type of chip | General-purpose, highly parallel | Optimized for specific AI operations |
| Flexibility | Very high (training, inference, HPC, graphics) | Medium to low (tailored to specific cases) |
| Software ecosystem | Mature (CUDA, cuDNN, TensorRT, etc.) | Narrower, controlled by the platform owner |
| Ease of adoption | High: large community, multi-cloud support | More complex: requires adapting tools and models |
| Inference efficiency at scale | Very good, improving generation after generation | Excellent in well-optimized scenarios |
| Lock-in risk | Lower: available from many providers | Higher: tied to a specific cloud or proprietary provider |
| Innovation pace | Continuous, with an annual cadence of GPU families | Depends on each Big Tech company’s roadmap |

What Jensen Huang really says

In its most recent quarter, NVIDIA strengthened its position with record data center results and deals with key players in the AI ecosystem. When an analyst asked Huang about the impact of in-house chip programs, such as TPUs and other ASICs, at companies like Google or Amazon, he responded with a core idea:

“It’s not about competing against companies; it’s about competing against teams. And very few teams in the world are capable of building such incredibly complex systems.”

This nuance is important. Huang does not deny the existence or usefulness of ASICs like TPUs; what he questions is whether enough teams can deliver, generation after generation, everything NVIDIA already offers:

- Hardware optimized for AI across multiple segments (training, inference, scientific computing).

- A unified software ecosystem: CUDA and a set of libraries, frameworks, and tools that have become the de facto standard.

- Massive support from the developer community, startups, universities, and cloud providers.

Essentially, his message is that matching a technical specification isn’t enough; you need to be able to replicate an integrated platform.

The Anthropic example: GPU and TPU coexist

The case of Anthropic illustrates this coexistence well. The company has agreements with NVIDIA, which provides systems based on architectures like Blackwell or Rubin, and with Google, which offers its latest-generation TPUs (Ironwood) for specific workloads.

Some analysts might read this combination as a sign that GPUs are dispensable. Huang, however, frames it differently:

- Major AI companies diversify hardware to optimize cost and performance based on the task.

- But for many critical workloads, especially large-scale training and complex pipelines, they still rely on NVIDIA’s ecosystem.

In other words, the emergence of ASICs doesn’t displace GPUs; rather, it forms part of a more heterogeneous landscape where each chip type fills its own niche.

Advantages of GPUs: flexibility and ecosystem

Why do data centers continue to buy GPUs at such a high rate, even when there are more efficient ASICs for certain tasks?

- Extreme flexibility

  A modern GPU is used not only to run inference on trained models, but also for:

  - Training new foundation models.
  - Fine-tuning for specific use cases.
  - Simulations, HPC, advanced graphics, and more.

- Established CUDA ecosystem

  Most deep learning frameworks (PyTorch, TensorFlow, JAX, etc.) offer mature, optimized support for NVIDIA GPUs. Migrating all of that to a specific ASIC is possible, but very costly in time, talent, and maintenance.

- Provider portability

  NVIDIA GPUs are available across multiple clouds and infrastructure providers, which lets companies and labs avoid total lock-in to a single proprietary platform.

- Sustained innovation cycle

  Each new GPU generation delivers improvements in performance per watt, memory, bandwidth, and support for new AI instructions. Replicating that pace internally with an ASIC isn’t trivial.

Where ASICs and TPUs shine: efficiency and controlled scale

That said, ASICs and TPUs are not just marketing hype. They have clear advantages under certain conditions:

- Enormous volume of repetitive inference

  For global services executing billions of queries daily with relatively stable models, a well-designed ASIC can offer a much lower cost per inference query than a GPU.

- Total control of the stack

  When a company controls the hardware, middleware, and software layers, as Google does with its TPUs, it can maximize platform efficiency and tailor it to its internal products (search, advertising, video, etc.).

- Extreme energy optimization

  In data centers where the limit isn’t just cost but available power and cooling capacity, a highly optimized ASIC can make a significant difference.
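The energy argument becomes simple arithmetic once power, rather than budget, is the binding constraint: a facility delivers a fixed number of joules per second, so throughput is capped by the energy each query consumes. The Python sketch below makes that concrete. The `Accelerator` class, the energy-per-query values, the 10 MW budget, and the PUE of 1.25 are hypothetical placeholders chosen for illustration, not measurements of any real GPU, TPU, or ASIC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """A hypothetical accelerator described only by its energy cost per query."""

    name: str
    joules_per_query: float  # assumed average energy per inference query


def max_queries_per_second(chip: Accelerator, facility_power_watts: float, pue: float) -> float:
    """Upper bound on sustained throughput when facility power is the limit.

    PUE (power usage effectiveness) = total facility power / IT power, so only
    facility_power_watts / pue reaches the IT equipment; the rest goes to
    cooling and power conversion. Simplification: all IT power is assumed to
    reach the accelerators (no CPU, networking, or storage overhead).
    One watt is one joule per second, so watts / (joules per query) = queries/s.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_power_watts = facility_power_watts / pue
    return it_power_watts / chip.joules_per_query


if __name__ == "__main__":
    budget_w = 10_000_000  # hypothetical 10 MW facility
    chips = (
        Accelerator("hypothetical GPU", joules_per_query=2.0),
        Accelerator("hypothetical ASIC", joules_per_query=1.0),
    )
    for chip in chips:
        qps = max_queries_per_second(chip, budget_w, pue=1.25)
        print(f"{chip.name}: {qps:,.0f} queries/s")
```

With these placeholder numbers, halving the energy per query doubles the throughput ceiling (4,000,000 vs. 8,000,000 queries/s) without adding a single watt of facility capacity, which is the scenario in which specialized silicon pays off most clearly.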

To sum up: TPUs and other ASICs make a lot of sense for hyperscalers that are willing to invest billions in their own silicon and have very predictable workloads. For most companies, however, GPUs remain the most practical choice.
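The “willing to invest billions” condition is essentially an amortization argument: a large fixed cost for designing custom silicon only pays off if a per-query saving is multiplied by a very large, stable query volume. The following is a minimal break-even sketch in Python; the function names, the $1 billion fixed cost, the per-query costs, and the three-year lifetime are all hypothetical placeholders, not real figures for any vendor.

```python
import math


def break_even_queries(fixed_cost: float, gpu_cost_per_query: float,
                       asic_cost_per_query: float) -> float:
    """Number of queries after which a custom ASIC becomes cheaper than GPUs.

    Total cost on GPUs: N * gpu_cost_per_query
    Total cost on ASIC: fixed_cost + N * asic_cost_per_query
    Setting them equal gives N = fixed_cost / (gpu - asic per-query cost).
    """
    saving_per_query = gpu_cost_per_query - asic_cost_per_query
    if saving_per_query <= 0:
        return math.inf  # the ASIC never pays back its fixed cost
    return fixed_cost / saving_per_query


def break_even_daily_volume(fixed_cost: float, gpu_cost_per_query: float,
                            asic_cost_per_query: float, lifetime_days: float) -> float:
    """Average daily queries needed to break even within the chip's useful life."""
    total = break_even_queries(fixed_cost, gpu_cost_per_query, asic_cost_per_query)
    return total / lifetime_days


if __name__ == "__main__":
    # All figures below are hypothetical placeholders, not real costs.
    fixed = 1_000_000_000  # assumed design, verification, and software cost (USD)
    n = break_even_queries(fixed, gpu_cost_per_query=0.002, asic_cost_per_query=0.001)
    per_day = break_even_daily_volume(fixed, 0.002, 0.001, lifetime_days=3 * 365)
    print(f"break-even after ~{n:.2e} queries, ~{per_day:,.0f} per day over 3 years")
```

With these placeholder figures, the ASIC only pays back after about a trillion queries, roughly 900 million per day over three years. If the models change enough before then that the chip must be redesigned, the fixed cost is incurred again, which is why predictable workloads matter as much as raw volume.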

Real threat or competitive noise?

Huang’s statements should be understood on multiple levels:

- In the short term, GPU demand for AI continues to be extraordinarily strong, reinforced by deals like those with Anthropic.

- In the medium term, ASICs and TPUs from Google, Amazon, and other Big Tech companies won’t disappear, but they are likely to serve as specialized components within hybrid architectures in which GPUs still play a central role.

- In the long term, the big question isn’t just the chip, but who can maintain the best engineering team and the most attractive software ecosystem.

And that is exactly Huang’s point: even if good ASICs exist, very few teams worldwide can sustain the pace of innovation that NVIDIA has set across hardware, software, and industry support.

For now, the race between GPUs and ASICs doesn’t seem to be zero-sum. Instead, it’s shaping into a hybrid landscape where each technology has its place… but with NVIDIA determined to remain the axis around which modern AI revolves.