In recent months, all the media focus has been on GPUs for artificial intelligence. Each new deployment of training clusters is measured in billions of dollars and megawatts, and the conversation revolves around who will get more cards and greater computing capacity.

But while headlines concentrate on GPUs, an equally profound—and much less visible—change is taking shape in the rest of the supply chain: memory, storage, and standard servers. The upcoming rise in infrastructure costs will not only affect those training giant models but also anyone looking to set up “a normal server” for databases, virtualization, or web services.

AI is consuming the memory manufacturing industry

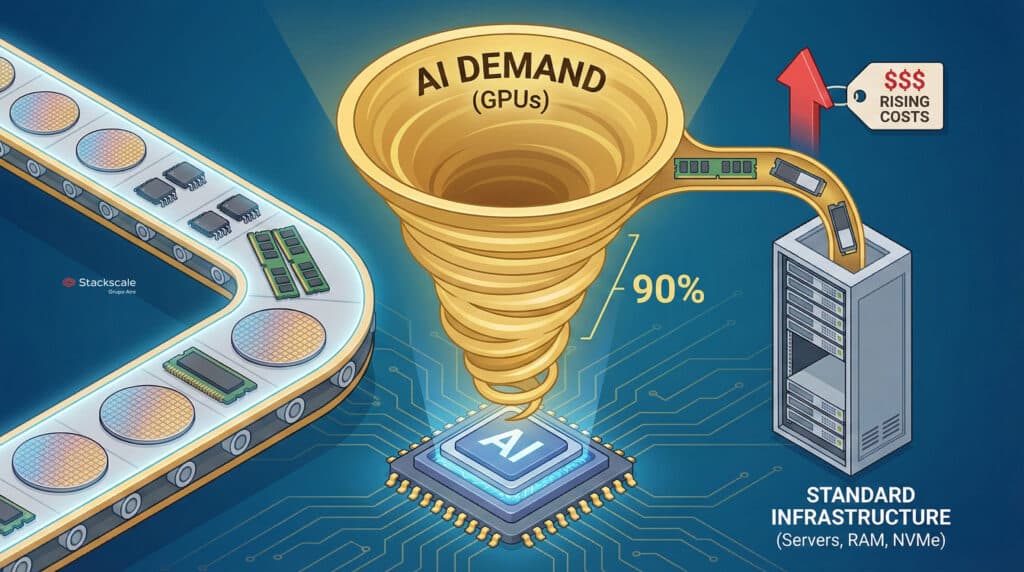

The starting point is simple: the demand for AI is diverting an increasing share of global memory production capacity toward the most profitable chips, primarily those integrated into cutting-edge GPUs.

This means that:

- Manufacturers prioritize high-value memory (HBM, GDDR, high-end NAND) for large AI clusters.

- There is less effective capacity available to produce “standard” RAM modules or NVMe units that serve the broader market: traditional data centers, SMBs, hosting providers, enterprise storage, etc.

When total production doesn’t grow at the same pace as demand, the result is predictable: prices rise, even for products that are not labeled “AI”.

What’s coming: servers 15% to 35% more expensive

Internal forecasts within the industry point to an uncomfortable scenario for anyone planning hardware purchases:

- The same server built in December 2026 could cost between 15% and 35% more than in December 2025.

- The pressure isn’t just from GPU costs but from the combined effect of RAM, NVMe, certain CPU types, and related components.

The supply chain is already shifting. Many players—infra providers, hyperscalers, large integrators—are buying components months in advance to hedge against price hikes.

However, this defensive move has a secondary effect: it accelerates price escalation. When half the market tries to stockpile early, the “hoarding” phenomenon seen during the 2020–2021 chip crisis repeats—more tension, greater uncertainty, and increased volatility.

From hardware to cloud: costs will also rise in the cloud

In the cloud environment, this pressure on hardware costs will sooner or later be reflected in public pricing catalogs.

Current models from some providers suggest:

- Average increases of 5–10% in certain services between April and September 2026, if market dynamics remain unchanged.

It won’t be a sudden shock but rather a series of adjustments: new generations of slightly more expensive instances, recalculations of high-performance storage prices, tariff revisions for certain RAM and NVMe blocks.

Those focusing only on GPU costs will be surprised: AI inflation will also impact “supporting” infrastructure, from database nodes to general virtualization servers.

Stackscale’s take: prepare for the storm, don’t just discuss it

In this context, providers of private cloud and bare-metal solutions, like Stackscale (Grupo Aire), cannot afford to be passive observers. They must redesign their strategies proactively.

The company explains that they are already working on multiple fronts:

- Optimizing procurement, with selective early orders to hedge against price increases, while avoiding speculative spirals.

- Refining platform designs, seeking server configurations that deliver more performance per euro and better energy efficiency.

- Enhancing density and utilization, to maximize each physical node and better amortize memory and storage investments.

The clear goal is to absorb part of the impact without passing it directly and immediately to the end customer.

As co-founder David Carrero states:

“Whenever possible, we will try to keep the prices of new infrastructure stable, offering high-performance environments and, above all, long-term predictability. We know many companies depend on a stable pricing structure to plan their IT investments, especially in mission-critical and AI projects.”

In other words: the battle isn’t just technological; it’s also about economic engineering.

What companies can do: from reactive to strategic planning

For organizations relying on infrastructure—whether in public cloud, private, or hybrid models—the conclusion is uncomfortable but necessary: prepare now, don’t wait for the next invoice hike.

Some rational courses of action include:

- Review the workload inventory

- Identify which projects truly require GPUs and which can continue operating with CPU and standard storage.

- Avoid “paying the AI tax” on services that don’t need it.

- Plan 2–3 years ahead

- Don’t see infrastructure as a collection of one-off purchases but as a capacity strategy.

- Anticipate renewals, consolidations, and expansions before prices peak.

- Negotiate predictable pricing models

- Prioritize providers offering stable price frameworks or clear scaling options over opaque rates subject to constant change.

- Value private cloud and bare-metal solutions when consumption is stable or predictable; well-planned CAPEX can better cushion inflationary environments.

- Maximize efficiency before requesting more hardware

- Review VM configurations, eliminate over-provisioning, and optimize storage.

- Invest in observability and FinOps: you can’t optimize what you don’t measure.

AI’s quiet impact beyond headlines

The dominant narrative portrays AI as a series of large models, spectacular launches, and GPU performance records. But its real impact is much quieter and more pervasive.

In the coming months, the pressure on memory and storage supply chains will demonstrate that the AI revolution isn’t only about large training clusters, but also involves seemingly “everyday” components: RAM modules, NVMe units, and general-purpose servers.

For the tech ecosystem—vendors, integrators, user companies—the message is clear:

- It’s not enough to talk about AI; infrastructure numbers matter.

- Chasing the latest GPU isn’t enough; ensuring the rest of the hardware isn’t an economic bottleneck is crucial.

- And reacting solely to price hikes isn’t enough; it’s time to carefully select infrastructure partners and demand more than just raw compute power.

What the last decade has shown is that technology progresses at a lightning-fast pace, but budgets do not. The difference in the coming years will be held by those organizations that understand the next cost wave won’t just come as a 700-watt black card, but also as the ongoing “smog and paint” that once seemed cheap and plentiful.

Those who prepare today, with a serious infrastructure strategy and aligned partners, will face fewer surprises tomorrow—and have more room to invest in what truly matters: developing products and services that leverage AI, instead of getting caught paying the cost of its collateral effects.