Cloudflare, one of the key components of internet infrastructure, has acknowledged that the global outage of November 18, 2025, was caused by an internal failure within its own network, not by a cyberattack. For several hours, millions of users worldwide saw error pages instead of the websites that rely on its CDN and security services.

The company has published a detailed technical report explaining what happened, why its protection mechanisms failed, and what measures it plans to implement to prevent a similar incident from recurring. Cloudflare’s CEO admits that this is “the worst outage since 2019” and describes the incident as “unacceptable,” given the company’s significance in the internet ecosystem.

A seemingly minor change that brought down the global network

The problem began on November 18 at 11:20 UTC, when Cloudflare's network started returning large volumes of HTTP 5xx errors, a sign of internal failures in its systems. For end users, this meant seeing Cloudflare's error page instead of the usual content.

The cause was not an attack but a chain of effects triggered by a change in permissions on one of the database systems the company uses to feed its Bot Management system. After that change, a query on a ClickHouse cluster returned duplicated rows, which produced a configuration file much larger than expected.
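According to Cloudflare's report, the query that builds the file read column metadata without filtering by database name, so once the permission change made a second copy of the underlying table visible, every column came back twice. The sketch below models that mechanism with in-memory rows rather than a real ClickHouse connection; the database names, feature names and the `feature_names` helper are all hypothetical.

```python
from typing import NamedTuple, Optional


class ColumnMeta(NamedTuple):
    database: str
    table: str
    name: str


# Hypothetical metadata: the same feature table exists in two databases.
# Before the permission change, the querying user could only see "default".
ALL_COLUMNS = [
    ColumnMeta("default", "http_requests_features", "feature_a"),
    ColumnMeta("default", "http_requests_features", "feature_b"),
    ColumnMeta("replica_db", "http_requests_features", "feature_a"),
    ColumnMeta("replica_db", "http_requests_features", "feature_b"),
]


def feature_names(visible_dbs: set, database: Optional[str] = None) -> list:
    """Return sorted feature names, as a metadata query would.

    visible_dbs models the user's permissions; database models an optional
    "WHERE database = ..." filter in the query.
    """
    return sorted(
        col.name
        for col in ALL_COLUMNS
        if col.database in visible_dbs
        and col.table == "http_requests_features"
        and (database is None or col.database == database)
    )


before = feature_names({"default"})
after = feature_names({"default", "replica_db"})  # no filter: each name twice
filtered = feature_names({"default", "replica_db"}, "default")  # stable
print(before)    # ['feature_a', 'feature_b']
print(after)     # ['feature_a', 'feature_a', 'feature_b', 'feature_b']
print(filtered)  # ['feature_a', 'feature_b']
```

Filtering explicitly by database makes the result independent of which databases a user happens to be allowed to see.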

This file, known as a feature file, is distributed every few minutes to all servers in the Cloudflare network. It informs the bot detection system about which features it should consider to score each request and decide whether it’s automated or legitimate traffic.

Because the number of features included doubled, the file exceeded an internal size limit set in the software running on the network nodes. The Bot Management module was not designed to handle more than 200 features, and attempting to process this oversized file caused a failure that made Cloudflare’s central proxy stop functioning properly, leading to widespread 5xx errors.
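The failure mode can be illustrated with a loader that enforces a fixed capacity and raises when a file exceeds it. This is a minimal Python sketch, not Cloudflare's Rust code: only the 200-feature limit comes from the report, while the function name and the feature counts (150 doubled to 300) are hypothetical.

```python
MAX_FEATURES = 200  # limit stated in the report; everything else is illustrative


class FeatureLimitExceeded(Exception):
    """Raised when a feature file lists more features than the fixed capacity."""


def load_feature_file(lines: list) -> list:
    """Parse one feature name per non-empty line, enforcing the hard cap."""
    features = [line.strip() for line in lines if line.strip()]
    if len(features) > MAX_FEATURES:
        # In the outage, nothing upstream handled this kind of error.
        raise FeatureLimitExceeded(
            f"{len(features)} features exceeds the limit of {MAX_FEATURES}"
        )
    return features


normal = [f"feature_{i}" for i in range(150)]  # hypothetical healthy file
doubled = normal + normal                      # duplicated rows: 300 entries

print(len(load_feature_file(normal)))  # 150
try:
    load_feature_file(doubled)
except FeatureLimitExceeded as err:
    print("bot module failure:", err)
```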

Intermittent error pattern suggested a DDoS attack

During the initial phase of the incident, internal graphs showed abnormal behavior: the volume of 5xx errors spiked sharply, then stabilized, seemingly improved, and then surged again. This intermittent pattern initially raised suspicions of a large-scale DDoS attack.

The explanation came later: the feature file was generated every five minutes on the ClickHouse cluster. Since permission updates hadn’t been applied to all nodes yet, the resulting file could be “good” or “bad” depending on which node executed the query at that moment. This caused the network to function normally for a period until a faulty file was propagated again, triggering the error cascade.
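The on/off pattern can be reproduced with a toy simulation. It assumes, for illustration only, that each five-minute cycle runs the query on a randomly chosen node and that only nodes already carrying the new permissions produce the oversized file; node counts and the `simulate_generation` helper are hypothetical.

```python
import random


def simulate_generation(num_nodes: int, updated_nodes: set, cycles: int,
                        seed: int = 0) -> list:
    """Return (minute, file_state) for each five-minute generation cycle."""
    rng = random.Random(seed)
    timeline = []
    for cycle in range(cycles):
        node = rng.randrange(num_nodes)  # which node happens to run the query
        state = "bad" if node in updated_nodes else "good"
        timeline.append((cycle * 5, state))
    return timeline


# Partial rollout: 3 of 6 nodes updated, so each cycle's outcome depends on
# which node is picked, and the network flips between healthy and failing.
for minute, state in simulate_generation(6, {0, 1, 2}, cycles=8):
    print(f"t+{minute:02d} min: {state} file propagated")
```

Once every node is updated, every cycle yields a bad file, which matches the steady failure state described next.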

Once the permission updates had reached all nodes, the intermittent behavior ceased and the failure settled into a permanent error state. From that point, the engineering team was able to isolate the cause and act on it: stop generating the faulty files, roll back to a known-good version, and restart the affected services.

According to the internal timeline, core traffic was largely flowing normally again by 14:30 UTC, and all systems were back to normal at 17:06 UTC, after services that had entered inconsistent states were restarted.
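The timestamps above translate into concrete durations. A quick check with Python's standard `datetime` module:

```python
from datetime import datetime, timezone

start = datetime(2025, 11, 18, 11, 20, tzinfo=timezone.utc)           # first 5xx spike
core_recovered = datetime(2025, 11, 18, 14, 30, tzinfo=timezone.utc)  # core traffic normal
fully_restored = datetime(2025, 11, 18, 17, 6, tzinfo=timezone.utc)   # all systems normal

print(core_recovered - start)  # 3:10:00
print(fully_restored - start)  # 5:46:00
```

Core traffic was therefore disrupted for a little over three hours, and some services took almost six hours to recover fully.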

How Cloudflare’s proxy works and why chain failures occurred

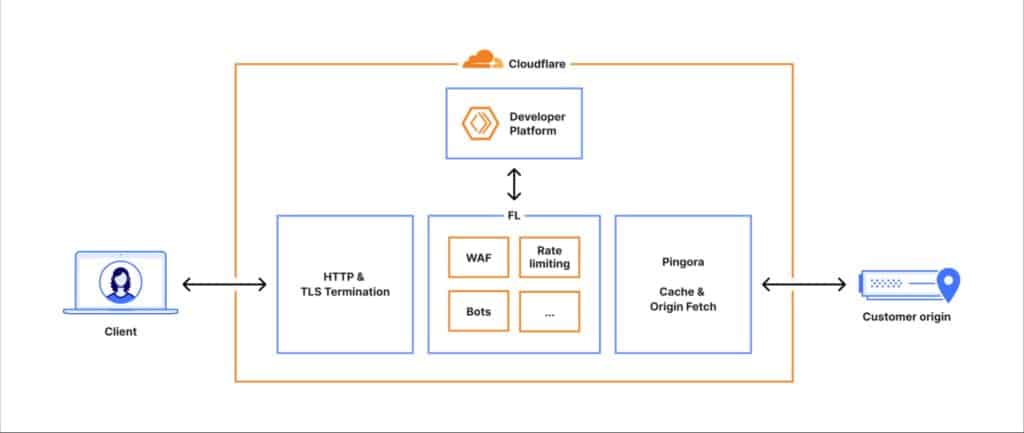

To explain the scope of the failure, the company described the path each request follows when a user visits a website that uses Cloudflare:

- The connection is established at the HTTP and TLS layers of the network.

- The request goes to the central proxy (internally called FL, Frontline), responsible for enforcing security, performance, and routing rules.

- Through a component called Pingora, the system checks if the content is cached or if it needs to be fetched from the origin server.

Along this path, the request passes through various specialized modules, such as the Web Application Firewall (WAF), DDoS protection, access management, the developer platform, distributed storage, and the Bot Management module.
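Why a single module can take down the whole request path becomes clearer with a toy pipeline in which modules run in sequence and nothing isolates their errors. This is a hypothetical Python model; the module functions and the `feature_file_ok` flag are illustrative and do not reflect how FL or FL2 are actually built.

```python
from typing import Callable, Dict, List

# Each hypothetical module inspects the request and may annotate it.
Module = Callable[[Dict], None]


def waf(req: Dict) -> None:
    req["waf"] = "pass"


def bot_management(req: Dict) -> None:
    if req.get("feature_file_ok") is False:
        raise RuntimeError("feature file could not be loaded")
    req["bot_score"] = 80  # placeholder value, not a real model


def proxy(req: Dict, modules: List[Module]) -> int:
    """Run every module in order; any uncaught error fails the request."""
    try:
        for module in modules:
            module(req)
    except Exception:
        return 500  # one failing module takes the whole request down
    return 200


PIPELINE = [waf, bot_management]
print(proxy({"feature_file_ok": True}, PIPELINE))   # 200
print(proxy({"feature_file_ok": False}, PIPELINE))  # 500
```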

This last component uses a machine learning model that assigns a "bot likelihood" score to each request. To do this, it loads into memory a configuration file listing the features that feed the model.

This configuration file is updated constantly to adapt to new traffic patterns and changing attacker tactics. However, the design assumed its size would remain relatively stable and within set limits. When, due to the ClickHouse change, the number of features exceeded this limit, the bot module threw an unhandled error, causing the central proxy process to fail. The result: the network couldn’t route traffic normally and began responding with generic 5xx errors.
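A common alternative to failing on an oversized file is to validate each new version before it replaces the one in memory and to keep running on the last known-good version when validation fails, an automated form of the rollback engineers eventually performed by hand. The sketch below is a hypothetical design, not Cloudflare's announced fix; the class name and the duplicate-name check are assumptions.

```python
from typing import List, Optional

MAX_FEATURES = 200  # capacity from the report; the rest is a hypothetical sketch


class FeatureConfig:
    """Holds the active feature list and refuses bad updates."""

    def __init__(self, initial: List[str]):
        self.active = list(initial)
        self.rejected = 0

    def validate(self, candidate: List[str]) -> Optional[str]:
        """Return a reason to reject the candidate, or None if acceptable."""
        if len(candidate) > MAX_FEATURES:
            return f"too many features: {len(candidate)}"
        if len(set(candidate)) != len(candidate):
            return "duplicate feature names"
        return None

    def update(self, candidate: List[str]) -> bool:
        """Swap in the candidate only if it validates; otherwise keep the old one."""
        if self.validate(candidate) is not None:
            self.rejected += 1
            return False
        self.active = list(candidate)
        return True


cfg = FeatureConfig([f"f{i}" for i in range(150)])
print(cfg.update(cfg.active + cfg.active))  # False: duplicated file rejected
print(len(cfg.active))                      # 150: last known-good list kept
```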

Differences between the old and new proxy versions

Alongside all this, Cloudflare was in the process of migrating to a new version of its proxy, internally called FL2, written in Rust. Both the old (FL) and new (FL2) versions were affected by the feature file issue, but in different ways:

- In FL2, the bot module failure directly translated into visible HTTP 5xx errors for users.

- In FL, the system didn't return errors, but all traffic received a bot score of zero, so customer rules that block traffic based on bot scores could produce large numbers of false positives.
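A zero score matters because, in Cloudflare's bot score scheme, lower values indicate traffic that is more likely automated. The sketch below uses a hypothetical customer rule with an illustrative threshold of 30 to show how a blanket score of zero turns into blocking every visitor.

```python
def firewall_rule(bot_score: int, threshold: int = 30) -> str:
    """Hypothetical customer rule: block requests that look automated.

    Lower scores mean "more likely a bot"; the threshold is illustrative.
    """
    return "block" if bot_score < threshold else "allow"


human_like_scores = [72, 88, 95]  # illustrative scores on a healthy day
fallback_scores = [0, 0, 0]       # every request scored zero, as on FL

print([firewall_rule(s) for s in human_like_scores])  # ['allow', 'allow', 'allow']
print([firewall_rule(s) for s in fallback_scores])    # ['block', 'block', 'block']
```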

In other words, customers fully migrated to FL2 saw a more visible impact (error pages), while on the older version the effect was subtler: it was confined to bot detection logic but still undesirable.

Affected services: CDN, Workers KV, Access, Turnstile, and dashboard

The proxy failure affected not only the core CDN and security services but also several products that depend on the same internal path.

Among the impacted services, the company highlights:

- Main CDN and security services: returned HTTP 5xx errors, typically showing Cloudflare’s internal error page.

- Workers KV: the key-value storage system for serverless applications experienced high error rates because it relies on the affected front-end gateway.

- Cloudflare Access: authentication attempts failed en masse, though active sessions continued to work.

- Turnstile: the form protection system was affected, preventing many users from logging into Cloudflare’s dashboard.

- Dashboard: while some functions remained operational, new logins were blocked by the problems with Turnstile and Workers KV.

- Email Security: email processing continued, but the accuracy of reputation checks and some automatic actions was temporarily reduced.
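The cascade in the list above can be modeled as a dependency graph and traversed to find everything downstream of a failed component. The map below is a hypothetical simplification read off the article (for example, that Workers KV, Access and Turnstile sit behind the core proxy); Email Security is left out because the article describes only a partial degradation.

```python
from collections import deque

# Hypothetical map of "service -> components it depends on".
DEPENDS_ON = {
    "CDN": ["core proxy"],
    "Workers KV": ["core proxy"],
    "Access": ["core proxy"],
    "Turnstile": ["core proxy"],
    "Dashboard": ["Turnstile", "Workers KV"],
}


def impacted(failed: str) -> set:
    """Return every service that transitively depends on `failed` (BFS)."""
    dependents = {}
    for service, deps in DEPENDS_ON.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)
    seen = set()
    queue = deque([failed])
    while queue:
        node = queue.popleft()
        for service in dependents.get(node, []):
            if service not in seen:
                seen.add(service)
                queue.append(service)
    return seen


print(sorted(impacted("core proxy")))
# ['Access', 'CDN', 'Dashboard', 'Turnstile', 'Workers KV']
print(sorted(impacted("Turnstile")))  # ['Dashboard']
```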

Additionally, response latency on the content delivery network increased during the incident, not only because of the failure itself but also because internal debugging and observability systems consumed extra CPU while capturing error data.
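One general way to keep diagnostics from adding load during an error storm is to cap how many detailed reports are captured per time window and merely count the rest. The `ErrorReporter` class below is a hypothetical illustration of that idea, not a description of Cloudflare's tooling.

```python
import time
from typing import Callable, List


class ErrorReporter:
    """Capture at most `max_reports` detailed reports per window; count the rest."""

    def __init__(self, max_reports: int, window_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_reports = max_reports
        self.window_s = window_s
        self.clock = clock
        self.window_start = clock()
        self.captured: List[str] = []
        self.in_window = 0
        self.dropped = 0

    def report(self, message: str) -> bool:
        now = self.clock()
        if now - self.window_start >= self.window_s:
            self.window_start = now  # start a new window
            self.in_window = 0
        if self.in_window >= self.max_reports:
            self.dropped += 1  # cheap path: count only
            return False
        self.in_window += 1
        self.captured.append(message)  # stands in for an expensive capture
        return True


reporter = ErrorReporter(max_reports=5, window_s=60.0)
for i in range(1000):
    reporter.report(f"bot module error #{i}")
print(len(reporter.captured), reporter.dropped)  # 5 995
```

Injecting the clock keeps the class testable without real waiting.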

From suspecting a massive DDoS to discovering a configuration bug

Another factor that complicated the diagnosis was that Cloudflare's public status page went offline at the same time, even though it is hosted outside the company's infrastructure. Although this turned out to be a coincidence, it initially fueled the hypothesis of a coordinated attack on both Cloudflare's network and its external reporting systems.

Within internal communication channels, the team even considered whether it was a continuation of a recent large-scale DDoS wave. It wasn’t until they correlated the changes made to ClickHouse, the anomalous growth of the features file, and the memory limits of the bot module that it became clear the root cause was internal.

Lessons learned and promised changes

With the service restored, Cloudflare outlined several initiatives to strengthen its platform against similar failures:

- Strengthen the ingestion process for internally generated configuration files, treating them with the same caution as data introduced by external users.

- Introduce more “kill switches” that allow rapid deactivation of specific modules, such as Bot Management, without taking down the entire proxy.

- Prevent memory dumps and diagnostic systems from saturating resources during errors, ensuring observability tools don’t worsen the crisis.

- Review failure modes for all central proxy modules to prevent an error in one component from escalating into network-wide traffic paralysis.
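The kill-switch and failure-mode items can be sketched together as a proxy that skips disabled modules and lets each module declare whether its failure should fail the request. All names here are hypothetical; note that failing open for a security module means requests are served without that module's verdict, so the choice is a per-module policy trade-off.

```python
from typing import Callable, Dict

Module = Callable[[dict], None]


class Proxy:
    """Hypothetical proxy with per-module kill switches and failure modes."""

    def __init__(self, modules: Dict[str, Module], fail_open: set):
        self.modules = modules           # run in insertion order
        self.fail_open = set(fail_open)  # modules allowed to fail without a 5xx
        self.killed: set = set()

    def kill(self, name: str) -> None:
        """Kill switch: skip a module for all requests without redeploying."""
        self.killed.add(name)

    def handle(self, req: dict) -> int:
        for name, module in self.modules.items():
            if name in self.killed:
                continue
            try:
                module(req)
            except Exception:
                if name in self.fail_open:
                    req.setdefault("degraded", []).append(name)
                    continue  # serve the request without this module's output
                return 500
        return 200


def waf(req: dict) -> None:
    req["waf"] = "pass"


def broken_bot_module(req: dict) -> None:
    raise RuntimeError("bad feature file")


proxy = Proxy({"waf": waf, "bot_management": broken_bot_module}, fail_open=set())
print(proxy.handle({}))  # 500: a fail-closed module takes the request down
proxy.kill("bot_management")
print(proxy.handle({}))  # 200: module switched off, traffic flows

lenient = Proxy({"waf": waf, "bot_management": broken_bot_module},
                fail_open={"bot_management"})
req = {}
print(lenient.handle(req), req["degraded"])  # 200 ['bot_management']
```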

The company emphasizes that an outage of this magnitude is “unacceptable” and recognizes the severity of a period during which much of the core internet traffic passing through its network was interrupted. At the same time, it highlights that every major incident has led to new levels of resilience and internal redesigns.

In an ecosystem where businesses, media, public administrations, and essential services depend on a few large infrastructure providers, the November 18 failure serves as a reminder of the fragility of the network and the importance of transparency when incidents occur. Cloudflare, at least on this occasion, chose to open its "black box", disclosing all the technical details and accepting responsibility for one of its most significant recent setbacks.

Sources:

- Cloudflare technical report "Cloudflare outage on November 18, 2025," published on its official blog.

via: blog.cloudflare