The Slovak-American company Tachyum has revealed the details and specifications of its new 2-nanometer universal processor Prodigy, a chip that, according to the company, will mark a generational leap in performance, efficiency, and cost within the fields of artificial intelligence and supercomputing.

According to Tachyum, the new Prodigy Ultimate will deliver up to 21.3 times the performance per rack in AI workloads compared to the Nvidia Rubin Ultra NVL576, while the Prodigy Premium version will reach 25.8 times higher performance than the Rubin 144. With this generation, Tachyum claims to surpass for the first time the barrier of 1,000 PFLOPs in inference, compared to the 50 PFLOPs it cites for Nvidia’s Rubin.

A leap challenging the limits of current computing

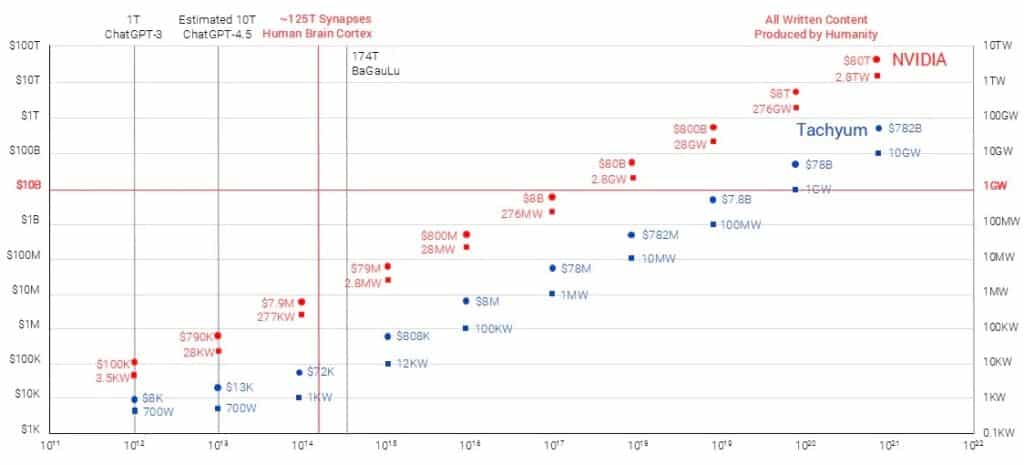

Tachyum asserts that the new Prodigy will enable running AI models with parameter counts thousands of times larger than any current solution can handle, at a fraction of the cost. In a context where language models are reaching massive sizes (GPT-4 reportedly has around 1.8 trillion parameters, and systems like BaGuaLu reach 174 trillion), the company envisions an even bolder future: models that incorporate the whole of human knowledge, with up to 10²⁰ parameters (100 quintillion).
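To put the quoted parameter counts on a common scale, the short Python sketch below computes how much larger a 10²⁰-parameter model would be than the two systems cited above, and how much storage its weights alone would occupy. The one-byte-per-parameter figure is an illustrative assumption (roughly 8-bit weights), not a number published by Tachyum.

```python
# Parameter counts as quoted in the article.
GPT4_PARAMS = 1.8e12      # reported estimate, about 1.8 trillion
BAGUALU_PARAMS = 174e12   # 174 trillion
TARGET_PARAMS = 1e20      # 100 quintillion, the envisioned upper bound

# ASSUMPTION for illustration only: one byte per parameter (8-bit weights).
BYTES_PER_PARAM = 1
BYTES_PER_EXABYTE = 1e18

# How many times larger the envisioned model is than each cited system.
scale_vs_gpt4 = TARGET_PARAMS / GPT4_PARAMS
scale_vs_bagualu = TARGET_PARAMS / BAGUALU_PARAMS

# Storage needed for the weights alone, under the assumption above.
weights_exabytes = TARGET_PARAMS * BYTES_PER_PARAM / BYTES_PER_EXABYTE

print(f"vs GPT-4:   {scale_vs_gpt4:.2e}x more parameters")
print(f"vs BaGuaLu: {scale_vs_bagualu:.2e}x more parameters")
print(f"Weights:    {weights_exabytes:.0f} EB at {BYTES_PER_PARAM} byte/parameter")
```

On these figures, the envisioned model would have roughly 5.6 × 10⁷ times the reported parameter count of GPT-4 and roughly 5.7 × 10⁵ times that of BaGuaLu. Its weights would occupy about 100 exabytes under the one-byte assumption.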

Tachyum estimates that running such models with traditional technologies would cost over $8 trillion and require 276 gigawatts of power. Its new architecture, by contrast, could achieve comparable capabilities at an estimated cost of $78 billion and a power consumption of only 1 gigawatt. If borne out, this dramatic difference could open the AI race to countries and companies that today cannot compete with the tech giants.
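The size of the claimed gap is easier to judge as ratios. The following Python sketch simply divides the figures quoted above; the inputs are Tachyum's own unverified estimates, and because the traditional cost is given as "over $8 trillion", the cost ratio is a lower bound.

```python
# Tachyum's own estimates as quoted in the article (unverified claims).
TRADITIONAL_COST_USD = 8e12    # "over $8 trillion", treated as a lower bound
PRODIGY_COST_USD = 78e9        # $78 billion
TRADITIONAL_POWER_GW = 276.0
PRODIGY_POWER_GW = 1.0

# Ratio of the traditional estimate to the claimed Prodigy figure.
cost_ratio = TRADITIONAL_COST_USD / PRODIGY_COST_USD
power_ratio = TRADITIONAL_POWER_GW / PRODIGY_POWER_GW

print(f"Claimed cost reduction:  at least {cost_ratio:.1f}x")
print(f"Claimed power reduction: {power_ratio:.0f}x")
```

The quoted numbers imply a cost reduction of a little over 100 times and a power reduction of 276 times.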

Open technology and a push for standardization

Beyond performance improvements, Tachyum aims to democratize access to technology. The company plans to release all of its software as open source and to open up its new memory technology, which is built from standard components. According to Tachyum, this technology could increase the bandwidth of conventional DIMMs tenfold and could be licensed to memory or processor manufacturers, with the possibility of adoption by JEDEC, the standards body for memory.

In 2023, the company already launched its Tachyum AI Data Types (TAI) and made its Tensor Processing Unit (TPU) core available for licensing by third parties. The next step will be to open its instruction set architecture (ISA), reinforcing the interoperability philosophy that characterizes Prodigy.

Prodigy 2 nm: architecture, power, and efficiency

The new 2-nanometer chip represents a deep redesign of Tachyum’s original platform. The move to a smaller process node significantly reduces energy consumption and increases density, with 256 high-performance 64-bit cores integrated per chiplet. Multiple chiplets can be combined in each package, increasing overall computational power without drastically increasing costs.

According to the company, this evolution multiplies:

- Performance in integer operations by 5

- Performance in AI by 16

- Memory bandwidth of DRAM by 8

- Chip-to-chip connectivity and I/O by 4

- Energy efficiency by 2

The Prodigy Ultimate features 1,024 cores, 24 DDR5 controllers at 17.6 GT/s, and 128 PCIe 7.0 lanes, reaching exascale supercomputing levels.
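As a rough guide to what the memory specification implies, the Python sketch below estimates theoretical peak DRAM bandwidth from the controller count and transfer rate quoted above. It assumes each controller moves 8 bytes (64 data bits, excluding ECC) per transfer; that width is a conventional DDR5 figure used here for illustration, not one stated by Tachyum.

```python
# Figures quoted in the article for the Prodigy Ultimate.
DDR5_CONTROLLERS = 24
TRANSFER_RATE_GT_S = 17.6   # giga-transfers per second, per controller

# ASSUMPTION: 8 bytes (64 data bits, no ECC) moved per transfer.
# Not stated by Tachyum; used here only for illustration.
BYTES_PER_TRANSFER = 8

# Theoretical peak bandwidth per controller, in GB/s.
per_controller_gb_s = TRANSFER_RATE_GT_S * BYTES_PER_TRANSFER

# Theoretical peak bandwidth across all controllers, in TB/s.
total_tb_s = per_controller_gb_s * DDR5_CONTROLLERS / 1000

print(f"Per controller: {per_controller_gb_s:.1f} GB/s")
print(f"Per package:    {total_tb_s:.2f} TB/s")
```

Under that assumption, the theoretical peak is about 140.8 GB/s per controller and about 3.38 TB/s per package. Sustained bandwidth in real workloads would be lower.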

The Prodigy Premium, with between 128 and 512 cores, offers 16 DRAM channels and support for up to 16-socket configurations.

The Entry-Level range, intended for compact servers and edge computing, will be offered with 32 to 128 cores and between 4 and 8 memory controllers.

Full compatibility and immediate deployment

A key to Prodigy’s potential success lies in its compatibility with existing architectures. Tachyum has designed the system to run Intel or AMD x86 binaries without modification, while allowing native applications to be mixed with legacy software within the same environment.

The company will provide customers with a complete package including operating system, compilers, libraries, AI frameworks, and HPC tools, ready to use from day one, removing traditional barriers to adoption.

Investment, timeline, and target markets

With a recent $220 million investment, Tachyum has secured funding for the tape-out (the handoff of the final design to the foundry, which precedes manufacturing). The first chips are expected to target supercomputing, large-scale AI, data analytics, cryptocurrencies, cloud computing, databases, and hyperscale environments.

The goal is for Prodigy to become a universal processor capable of replacing CPU, HPC GPU, and AI accelerators in a single component, reducing both CAPEX and OPEX for data centers.

“With the tape-out funding secured, the world’s first universal processor enters production. It’s designed to overcome the inherent limitations of today’s data centers,” said Dr. Radoslav Danilak, founder and CEO of Tachyum.

“The Prodigy Premium and Ultimate versions will drive workloads with superior performance at lower costs than any other solution available today.”

Impact on the global AI race

This announcement arrives amid a full-scale technological escalation between the US and China, where computing power has become a geopolitical factor. While giants like Nvidia, AMD, and Intel compete to dominate the GPU and accelerator markets, Tachyum aims to break the paradigm by unifying all processor types into a single universal architecture.

If the announced figures are confirmed, Prodigy 2 nm could be far ahead in performance per watt, with about 21 times the per-rack AI performance of Nvidia’s Rubin Ultra NVL576 and unprecedented energy efficiency. At a time when the power consumption of AI data centers threatens the stability of electrical grids, such a leap could have a direct impact on costs and sustainability.

The universal processor, closer to reality

Tachyum’s vision extends beyond hardware. Prodigy aims to integrate CPU, HPC GPU, and AI accelerators into a single, programmable chip capable of running any workload. According to the company, internal tests show 3 times the performance of the most powerful x86 processors and 6 times the HPC performance of current GPGPUs.

This approach seeks to provide maximum flexibility to cloud operators, reducing the number of servers needed and increasing average rack utilization. The result: a drastic reduction in total cost of ownership and a significant increase in power density in data centers.

A direct challenge to Silicon Valley giants

Tachyum’s entry will not go unnoticed. Its promises of performance and open technology suggest direct competition with Nvidia, AMD, and Intel across the three most lucrative markets: generative AI, enterprise servers, and HPC supercomputing.

As the sector awaits the full specification release of Prodigy 2 nm next week, analysts and governments will watch carefully how an emerging player attempts to reshape the global balance in AI infrastructure.

Frequently Asked Questions (FAQs)

What is Tachyum’s 2 nm Prodigy processor?

A universal chip combining CPU, HPC GPU, and AI accelerators in an open architecture built with 2-nanometer process technology.

Why is it considered revolutionary?

Because it promises over 1,000 PFLOPs of inference performance, 20 times the 50 PFLOPs cited for Nvidia’s Rubin, with much lower energy consumption and cost.

Will it be compatible with existing software?

Yes. Prodigy can run Intel/AMD x86 binaries unaltered and allows native applications to be combined with legacy software, enabling immediate deployment.

When will it be available?

With the tape-out already funded, production is expected in 2026. Initial units will focus on data centers, supercomputing, and large-scale AI models.

via: tachyum