Artificial intelligence is stepping out of the digital realm and into the physical world with the announcement of NVIDIA IGX Thor, an industrial-grade platform designed to run real-time AI at the edge, from operating rooms to railways and factories, with enhanced guarantees of functional safety, reliability, and an extended lifecycle. According to the company, IGX Thor delivers up to 8 times the AI compute of its predecessor, IGX Orin, on the integrated GPU and 2.5 times on the discrete GPU, along with double the connectivity, so generative and vision-language models can run locally without bottlenecks.

The platform launches with early adopters in critical sectors (Diligent Robotics, EndoQuest Robotics, Hitachi Rail, Joby Aviation, Maven Robotics, and the SETI Institute), while CMR Surgical is evaluating it to bring real-time intelligent assistance and surgical guidance to its robots. Production systems and development kits are scheduled to become commercially available in December.

A leap in performance and connectivity designed for edge applications

At the core of IGX Thor are two Blackwell GPUs: one integrated (iGPU) and one discrete (dGPU). Together they provide 5,581 TFLOPS of FP4 AI compute, backed by 400 GbE connectivity for sensor data ingestion and high-fidelity video streams. In practical terms, this leaves ample headroom to run multiple models simultaneously, for example medical image segmentation, 3D detection, SLAM, vision-language reasoning, and control agents, without compromising latency.

The generational leap over IGX Orin can be summarized along three axes:

- Compute: ×8 in iGPU and ×2.5 in dGPU for AI workloads.

- Connectivity: ×2, with 400 GbE for cameras, LiDAR, industrial buses, and sensor fusion.

- Real-time capability: deterministic processing capacity to handle multiple pipelines with functional safety and traceability.
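
To put the 400 GbE figure in perspective, the arithmetic below estimates how many uncompressed 4K camera streams fit into a 400 Gb/s budget. The resolution, frame rate, and pixel depth are illustrative assumptions, not figures from the announcement, and real links lose some capacity to protocol overhead, so the result is an upper bound rather than a specification.

```python
# Back-of-the-envelope link budget for raw (uncompressed) camera streams.
# Assumptions (not from the announcement): 4K UHD at 60 fps, 24-bit RGB,
# no protocol overhead, no compression.

LINK_GBPS = 400  # Ethernet bandwidth cited for IGX Thor


def stream_gbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Raw video bitrate in Gb/s (1 Gb = 1e9 bits)."""
    return width * height * bits_per_pixel * fps / 1e9


def max_streams(link_gbps: float, per_stream_gbps: float) -> int:
    """Whole number of streams that fit within the link, ignoring overhead."""
    return int(link_gbps // per_stream_gbps)


per_stream = stream_gbps(3840, 2160, 24, 60)
print(f"{per_stream:.2f} Gb/s per stream")            # 11.94 Gb/s per stream
print(max_streams(LINK_GBPS, per_stream), "streams")  # 33 streams
```

Compressed streams (for example H.264 or H.265) need far less bandwidth; the raw case is shown because it bounds the worst case.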

The platform is supported for 10 years and is engineered to keep the software stack accelerated and secure throughout its lifecycle.

Functional safety and end-to-end software

IGX Thor integrates elements of the NVIDIA Halos safety system to provide functional safety and "safety by design" for robots, medical devices, and infrastructure. It combines onboard sensors (inside-out) with off-system sensors (what NVIDIA calls outside-in) to monitor environments shared with people and mitigate risks.
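
As a rough illustration of why combining both viewpoints helps, the sketch below merges distance estimates from onboard (inside-out) and external (outside-in) sensors and picks the most conservative action. It is a hypothetical example: the `Reading` and `decide` names and the 0.5 m / 1.5 m thresholds are invented for illustration and are not part of NVIDIA Halos or any certified safety function.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Optional


class Action(Enum):
    NORMAL = "normal"
    SLOW = "slow"
    STOP = "stop"


@dataclass
class Reading:
    source: str                   # hypothetical, e.g. "onboard_lidar" or "ceiling_cam"
    distance_m: Optional[float]   # None if the sensor has no valid reading


def decide(readings: Iterable[Reading], stop_m: float = 0.5, slow_m: float = 1.5) -> Action:
    """Return the most conservative action across all sensor views.

    Thresholds are hypothetical placeholders, not certified values.
    Missing data is treated as unsafe (fail-safe default).
    """
    distances = []
    for r in readings:
        if r.distance_m is None:
            return Action.STOP  # a sensor dropout stops the robot
        distances.append(r.distance_m)
    if not distances:
        return Action.STOP      # no information at all is also unsafe
    nearest = min(distances)    # the closest person seen by any sensor wins
    if nearest < stop_m:
        return Action.STOP
    if nearest < slow_m:
        return Action.SLOW
    return Action.NORMAL


# The onboard LiDAR sees a clear path, but an external camera sees a person at 1.1 m.
print(decide([Reading("onboard_lidar", 2.4), Reading("ceiling_cam", 1.1)]))  # Action.SLOW
```

Taking the minimum distance over all sources means an outside-in sensor can catch a person the robot's own sensors miss, which is the benefit the paragraph above describes.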

Built on NVIDIA AI Enterprise, IGX Thor runs:

- NVIDIA NIM microservices for deploying packaged AI models (inference, RAG, VLMs) from cloud to edge.

- NVIDIA Isaac for robotics (planning, perception, control, simulation).

- NVIDIA Metropolis for industrial AI vision (quality, counting, safety).

- NVIDIA Holoscan for low-latency sensor processing (medical imaging, multispectral, ultrasound).

This combination aims to significantly reduce the gap between prototype and field-ready devices, with a coherent development, validation, and deployment stack.
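
With NIM, a model runs as a local service. NVIDIA documents an OpenAI-compatible HTTP API for its LLM NIM microservices, so an on-device application can query a model without traffic leaving the node. The sketch below assumes a container is already listening on `localhost:8000` and uses a placeholder model name; both are assumptions to adapt to the actual deployment, and only the Python standard library is used.

```python
import json
import urllib.request

# Assumptions: a NIM LLM container is already running on the edge node and
# serves an OpenAI-compatible endpoint on localhost:8000. The model name is
# a hypothetical placeholder; list the real one via /v1/models.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL = "example/model-name"  # hypothetical


def build_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request for a local NIM."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout_s: float = 10.0) -> str:
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout_s) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_request("Summarize the last inspection log.")
    print(req.get_method(), req.full_url)  # no network call is made here
```

`ask()` only succeeds when a service is actually listening at `NIM_URL`; keeping request construction separate makes the payload easy to inspect and test offline.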

Use cases: from operating rooms to railways

- Surgical robotics (CMR Surgical, EndoQuest, Diligent): intraoperative assistance with high-fidelity real-time analysis, overlaying anatomical information and providing contextual alerts. Safety and latency are critical: the system must perceive, reason, and act without interruption during minimally invasive procedures.

- Railways (Hitachi Rail): networked predictive maintenance and autonomous inspection. With IGX Thor, embedded video and sensor data are processed locally, making it possible to detect anomalies in infrastructure and rolling stock, optimize operations, and reduce downtime.

- Industry and logistics (Maven Robotics, Joby Aviation): versatile mobile robots with embodied AI models that balance regulatory compliance and capability. IGX Thor provides safety-rated compute with enough headroom for VLMs and complex planning on board the robot.

- Science and exploration (SETI Institute): filtering and detecting rare events in high-volume instrument data, running complex pipelines at the edge so the system can react without relying on the cloud.
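
The railway and SETI cases share an edge pattern: examine every sample locally and forward only the unusual ones. The sketch below shows that pattern with a simple rolling z-score detector. The function name, window size, and threshold are illustrative assumptions; the detector stands in for whatever statistical or learned model a real deployment would use and does not describe the partners' actual pipelines.

```python
from collections import deque
from math import sqrt
from typing import Deque, Iterable, Iterator, Tuple


def rolling_anomalies(samples: Iterable[float], window: int = 50,
                      threshold: float = 4.0) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) for samples far from the recent rolling mean.

    Each sample is compared with the mean and standard deviation of the
    previous `window` samples. Only flagged events are yielded, so storage
    or uplink downstream sees a small fraction of the raw data.
    """
    history: Deque[float] = deque(maxlen=window)
    for i, x in enumerate(samples):
        if len(history) == window:  # wait until the window is full
            mean = sum(history) / window
            std = sqrt(sum((h - mean) ** 2 for h in history) / window)
            if std > 0 and abs(x - mean) / std > threshold:
                yield i, x
        history.append(x)  # oldest sample drops out automatically


# A noisy baseline (alternating +/-1) with one spike at index 50.
signal = [1.0, -1.0] * 25 + [10.0] + [1.0, -1.0] * 5
print(list(rolling_anomalies(signal)))  # [(50, 10.0)]
```

Only one of the 61 samples is forwarded here; on real instruments the same idea keeps bandwidth and storage proportional to the number of interesting events rather than to the raw data rate.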

Ecosystem: ready hardware and manufacturing partners

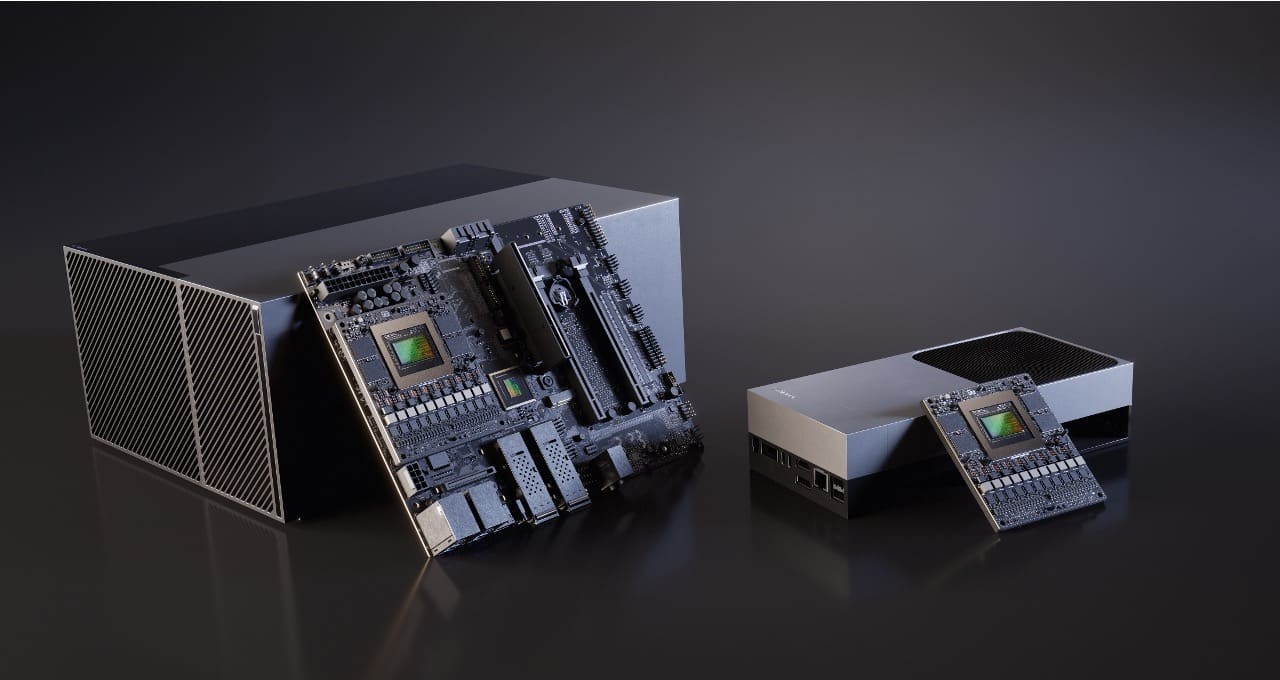

To accelerate projects, NVIDIA will support deployment with two production-ready systems:

- IGX T5000 (module): designed for integrators needing to embed the IGX Thor brain into their own equipment.

- IGX T7000 (board kit): full reference board to build edge systems with industrial connectivity and I/O.

Manufacturers such as Advantech, ADLINK, ASRock Rack, Barco, Curtiss-Wright, Dedicated Computing, EIZO Rugged Solutions, Inventec, NexCOBOT (NEXCOM), Onyx, WOLF Advanced Technology, and YUAN will provide edge servers, carrier boards, cameras/sensors, and design services based on IGX Thor, shortening certification and approval times.

Why it matters (and what changes)

- Real "physical" AI: many organizations have faced power limitations or latency issues that prevented them from running multiple models in parallel on-site. IGX Thor raises the bar, enabling perception, reasoning, and control on the same node.

- Less dependence on the cloud: 400 GbE and local compute reduce traffic and latency, improve privacy, and enhance resilience (operation continues even without external connectivity).

- Integrated functional safety: “safety-rated” hardware and inside-out/outside-in telemetry facilitate safer human-machine collaboration.

- Industrial lifecycle: 10-year support and a stable stack provide coverage for medical/industrial certifications and 24/7 deployments.

What’s next: edge agents and “in-situ” validation

Building on IGX Thor as a foundation, the logical next step is to bring AI agents capable of perceiving, planning, and acting in closed loops, with continuous monitoring and auditing. The integration of Isaac, Holoscan, and NIM simplifies constructing reproducible pipelines that transition from simulation to field deployment with less friction, and enables safety and explainability validation in regulated environments.
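
The closed loop described above can be reduced to a skeleton: perceive, plan, record the decision, then act. The sketch below is a hypothetical illustration, not an Isaac, Holoscan, or NIM API; `AuditedLoop` and the stand-in perception, planning, and actuation functions are invented names, and a real system would replace them with actual models and actuators.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class AuditedLoop:
    """Hypothetical perceive -> plan -> act loop that records every decision."""
    perceive: Callable[[], Dict[str, Any]]
    plan: Callable[[Dict[str, Any]], str]
    act: Callable[[str], None]
    audit_log: List[str] = field(default_factory=list)

    def step(self) -> str:
        observation = self.perceive()    # sense the environment
        action = self.plan(observation)  # decide what to do
        # Record the decision before acting, so the trail survives a failed actuation.
        self.audit_log.append(json.dumps(
            {"t": time.time(), "observation": observation, "action": action}))
        self.act(action)                 # execute the chosen action
        return action


# Stand-ins: two canned observations and a trivial distance-based planner.
readings = iter([{"obstacle_m": 3.0}, {"obstacle_m": 0.4}])
loop = AuditedLoop(
    perceive=lambda: next(readings),
    plan=lambda obs: "stop" if obs["obstacle_m"] < 0.5 else "advance",
    act=lambda action: None,  # placeholder for a real actuator call
)
print([loop.step() for _ in range(2)])  # ['advance', 'stop']
```

Writing each observation and decision as a JSON line keeps the log machine-readable, which is what after-the-fact auditing and safety validation need.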

Frequently Asked Questions

What does IGX Thor bring compared to IGX Orin?

A significant leap in AI compute (×8 in iGPU, ×2.5 in dGPU), connectivity (×2, up to 400 GbE), and integrated functional safety, while maintaining a 10-year lifecycle and compatibility with the NVIDIA AI Enterprise stack.

Can I run multiple generative and vision models simultaneously at the edge?

Yes. The combination of Blackwell iGPU + dGPU and 400 GbE enables concurrent pipelines (VLMs, 3D segmentation, tracking, local RAG) with low latency and without depending on the cloud.

What development tools are included in the stack?

NVIDIA NIM (microservices for models), Isaac (robotics), Metropolis (AI vision), and Holoscan (sensing), all on NVIDIA AI Enterprise, integrated to go from prototype to production within the same ecosystem.

When will systems and kits be available?

NVIDIA expects production systems (IGX T5000 and IGX T7000) and development kits to be available by December, with an ecosystem of manufacturers and partners ready to accelerate projects.

Note: Key data from the announcement include 5,581 TFLOPS of FP4 AI compute, 400 GbE connectivity, dual Blackwell GPUs (iGPU + dGPU), integration with NVIDIA Halos for functional safety, 10-year support, and IGX T5000/T7000 availability in December, alongside an extensive network of industry and medical partners.