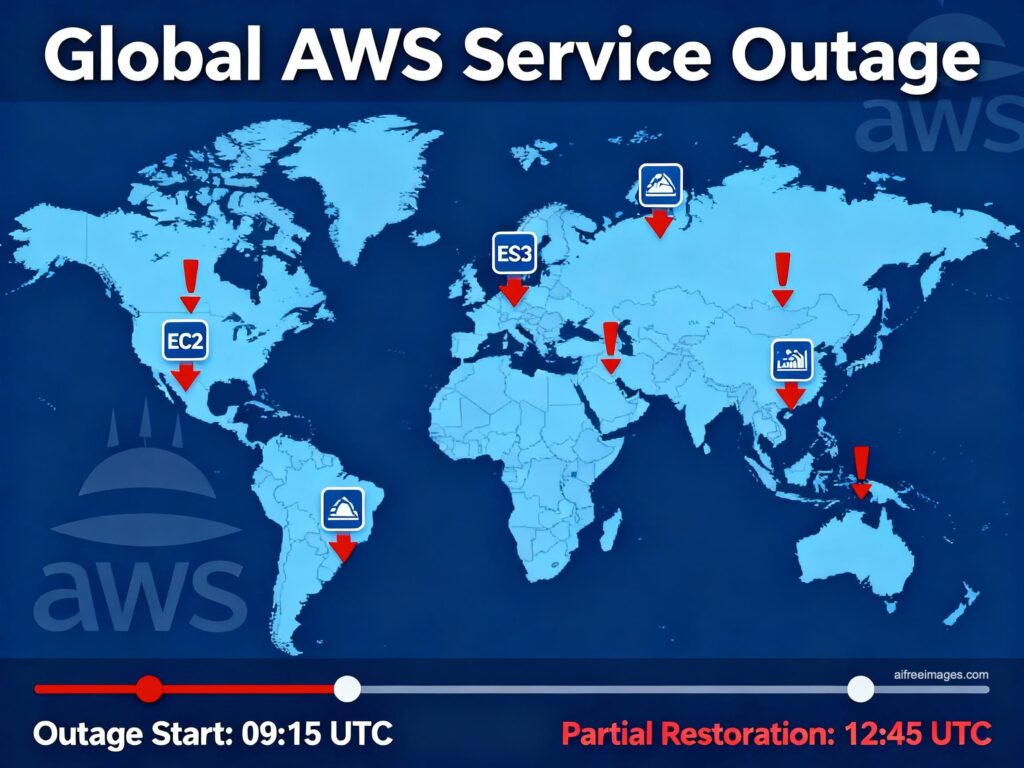

This Monday morning, October 20th, began with a shock for half of the internet: Amazon Web Services (AWS) experienced a widespread outage that disrupted Amazon, Alexa, Snapchat, Fortnite, Epic Games Store and Epic Online Services, ChatGPT, Prime Video, Perplexity, Airtable, Canva, Duolingo, Zoom, the McDonald’s app, Roblox, and Clash Royale, among other services. The AWS status page acknowledges an “increase in error rates and latencies” in US-EAST-1 (Northern Virginia), the provider’s busiest region, and states that it is working to mitigate the incident and determine its root cause.

According to Amazon’s own cloud status log, problems were detected at 03:11 ET (09:11 in mainland Spain), with an initial technical report promising an update “in 45 minutes.” From 08:40 (local time), reports of outages began accumulating on portals like Downdetector, while users on forums and social media confirmed that Alexa was unresponsive to requests and did not run routines, including preconfigured alarms, and that popular games and apps were inaccessible or experiencing intermittent errors. The scope is global, though the impact varies by region and by how heavily each service relies on the affected infrastructure.

“Perplexity is down right now. The root cause is an AWS problem. We are working to resolve it,” acknowledged Perplexity CEO Aravind Srinivas on X.

What’s failing and why is it so noticeable?

AWS describes the incident as an increase in errors and latencies affecting multiple services in US-EAST-1. Even though it’s “just” one region, its disproportionate weight in Amazon’s cloud explains the domino effect: many companies host workloads in N. Virginia for historical, cost, latency, or availability reasons. Additionally, certain control planes and internal dependencies (authentication, queues, internal DNS, orchestration) rely on multi-tenant components whose degradation can ripple out to other regions. This explains why services in Europe are also feeling the impact today, with failed logins, partial loads, or latency spikes.

In practice, users encounter four common symptoms:

- Pages not loading or returning 5xx errors.

- APIs responding erratically or timing out.

- Inability to upload/download content (images, attachments).

- “Smart” services (voice assistants, recommendations, automations) going silent.

Impacted platforms include entertainment and communication services (Fortnite, Snapchat), voice assistants (Alexa), enterprise apps (Airtable, Canva), AI services (ChatGPT, Perplexity), and consumer apps (Prime Video, McDonald’s). AWS’s popularity (its customers include Netflix, Spotify, Reddit, and Airbnb, among many others) amplifies the impact of any failure.

Known incident timeline

- 03:11 ET (09:11 in mainland Spain). AWS status panel reports an incident in US-EAST-1 with errors and high latencies.

- 03:51 ET. Amazon publishes an update: “We are working to mitigate the problem and understand the root cause. An update in 45 minutes or sooner if there’s new information.”

- 08:40 (local time). Users in Spain and the rest of Europe begin noticing connectivity and loading problems in services that depend on AWS.

- Morning to noon (Europe). Disruptions persist with intermittent behavior, varying by service and region. The cause has not been officially confirmed, and there’s no ETA for complete recovery.

This isn’t the first time: the precedent of US-EAST-1

The US-EAST-1 region has had notable incidents in recent years: 2020, 2021, and 2023 all saw outages with widespread disruptions lasting several hours. The pattern, in which a problem in Northern Virginia has visible effects worldwide, has encouraged many engineering teams to rethink their architecture so that critical control and data planes are not consolidated in a single point of failure.

Impact in Spain and Europe

The morning’s snapshot shows a mixed picture: some services operate normally, others fail to log in or upload content, and others simply don’t load. The impact changes from minute to minute as AWS mitigates internal components or redistributes load. Each platform’s deployment strategy, and in particular whether it is genuinely multi-region, also influences resilience; a true multi-region setup is more likely to degrade gracefully than to fail completely.

What affected companies say

In addition to AWS’s official statements, several teams have openly acknowledged their dependence on the provider. Perplexity was explicit on X. Gaming, design, and productivity platforms have posted status page alerts and social media updates, and some have deactivated features until normal operation resumes.

Alexa exemplifies this: as a cloud-first service, AWS’s outage renders routine commands like turning on lights, launching routines, or checking weather inoperable. On the enterprise side, organizations using SaaS on Amazon are reporting slowdowns, intermittent errors, and authentication failures.

Why does “the internet go down” when a hyperscaler fails?

Cloud computing allows “renting” resources (compute, storage, databases, messaging) rather than buying them. This economy of scale — the big promise of cloud — fosters concentration: if many services rely on the same layer (e.g., US-EAST-1 and common components), a single incident can spread. Best practices recommend multi-AZ (multiple zones within a region) and, for critical systems, multi-region or even multi-cloud deployments. However, not all platforms implement this, often due to cost, complexity, or legacy architecture.

What can users and companies do now?

End users

- Check the status page of the service and AWS.

- Avoid reinstalling apps or deleting data if the issue is on the provider’s side.

- Retry the operation later; intermittency may improve as mitigation progresses.

IT teams

- Do not make urgent configuration changes during the incident unless there’s a clear mitigation plan (e.g., failover to another region already prepared).

- If architecture allows, migrate traffic to healthy regions (active/active or active/passive multi-region setups).

- Communicate with clients and staff about status and estimated times; set up task queues for retries when the provider recovers.

- Review after normalization: Which critical dependencies were single-region? Which alarms fired? Which SLA/SLO commitments were missed?
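
As a rough illustration of the active/passive failover mentioned above, the sketch below picks the first healthy region from a preference list. The `pick_region` helper and the health-check results are hypothetical; in a real setup this decision is typically made by DNS failover or a global load balancer rather than by application code.

```python
from typing import Mapping, Optional, Sequence


def pick_region(preferred: Sequence[str], healthy: Mapping[str, bool]) -> Optional[str]:
    """Return the first region, in preference order, whose health check passes."""
    for region in preferred:
        # A region with no recorded health check is treated as unhealthy.
        if healthy.get(region, False):
            return region
    return None  # nothing healthy: the caller should serve a degraded response


# Hypothetical health-check results during an incident in us-east-1.
health = {"us-east-1": False, "eu-west-1": True, "us-west-2": True}
active = pick_region(["us-east-1", "eu-west-1", "us-west-2"], health)
print(active)  # eu-west-1
```

This only works if the secondary regions were provisioned and tested beforehand, which is why the failover has to be "already prepared" rather than improvised mid-incident.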

What’s known (and what’s not)

- Confirmed: US-EAST-1 suffers from errors and high latencies affecting multiple AWS services; impact is global with varying intensity.

- Reported: platforms such as Alexa, Fortnite, Snapchat, Prime Video, Perplexity, Airtable, Canva, Duolingo, Zoom, Roblox, Clash Royale, ChatGPT, and Epic are impacted wholly or partially.

- Unconfirmed: the root cause and estimated time for full restoration (AWS continues investigation).

Lessons likely to come from this outage

- US-EAST-1 can’t be everything. Centralizing control and data in N. Virginia is convenient but risky.

- Multi-AZ isn’t foolproof. When a failure cuts across shared components, spreading workloads over multiple Availability Zones in one region doesn’t prevent disruption.

- Multi-region and chaos game days. Testing failovers and degradations in cold (and hot) environments is as vital as writing the runbook.

- Transparency. Early communication and periodic updates reduce customer and user uncertainty.
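
The point about multi-AZ can be made with simple arithmetic. The sketch below is a simplified model, not an AWS formula: it assumes Availability Zones fail independently of each other and that every zone depends on one shared regional component. All figures are illustrative and are not AWS data.

```python
def regional_availability(az_availability: float, az_count: int,
                          shared_availability: float) -> float:
    """Availability of a service that needs at least one AZ plus a shared regional dependency."""
    # Probability that at least one of the (assumed independent) zones is up.
    at_least_one_az_up = 1.0 - (1.0 - az_availability) ** az_count
    # The shared component is a hard dependency, so its availability multiplies in.
    return shared_availability * at_least_one_az_up


# Illustrative numbers only: adding zones cannot lift the result above the shared dependency.
for zones in (1, 2, 3):
    print(zones, regional_availability(0.99, zones, 0.999))
```

Under these assumptions, adding zones pushes the result toward the availability of the shared component but never past it, which is the reasoning behind moving critical dependencies to more than one region.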

Frequently Asked Questions (FAQs)

What exactly is US-EAST-1, and why does its outage impact so many services?

US-EAST-1 is AWS’s region in Northern Virginia and one of its busiest, owing to its long history, its pricing, and its broad service catalog. Many platforms host control planes or key workloads there, so an incident can spill over and degrade services elsewhere.

Which services have been affected by today’s AWS outage?

The incident has impacted Amazon, Alexa, Prime Video, Snapchat, Fortnite, Epic Games Store/Epic Online Services, ChatGPT, Perplexity, Airtable, Canva, Duolingo, Zoom, Roblox, and Clash Royale, either partially or fully. The extent varies by region and by each platform’s reliance on US-EAST-1.

How long might an AWS outage last, and what can my company do meanwhile?

Duration depends on the cause and scope. Past incidents in 2020, 2021, and 2023 lasted several hours. Meanwhile, avoid risky changes, communicate status to users, switch to another region if possible, and collect metrics for post-mortem analysis.

How can future AWS (or hyperscaler) outages be mitigated?

Adopt multi-AZ and, for critical services, true multi-region. Separate control/data planes, use idempotent queues, implement retries with backoff, practice failovers and gamedays, and consider multi-cloud strategies and CDN/DNS failover controls.
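
Of these measures, retries with backoff are the easiest to show in a few lines. The following is a minimal sketch, not a production client: `retry_with_backoff` and `TransientError` are hypothetical names, and the idempotency key assumes the receiving service deduplicates requests that carry the same key.

```python
import random
import time
import uuid
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or 5xx responses."""


def retry_with_backoff(
    operation: Callable[[str], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation(idempotency_key) until it succeeds or attempts run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    # One key for all attempts, so the receiver can discard duplicates.
    idempotency_key = str(uuid.uuid4())
    for attempt in range(max_attempts):
        try:
            return operation(idempotency_key)
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Exponential backoff capped at max_delay, with full jitter.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

The random jitter matters during a large outage: if every client retries on the same schedule, the recovering service is hit by synchronized waves of traffic, whereas randomized delays spread the load out.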

Note: This coverage is based on public data from the AWS status page, affected companies’ statements, and real-time user reports. Information may change as Amazon releases new details or the situation evolves.

via: Downdetector