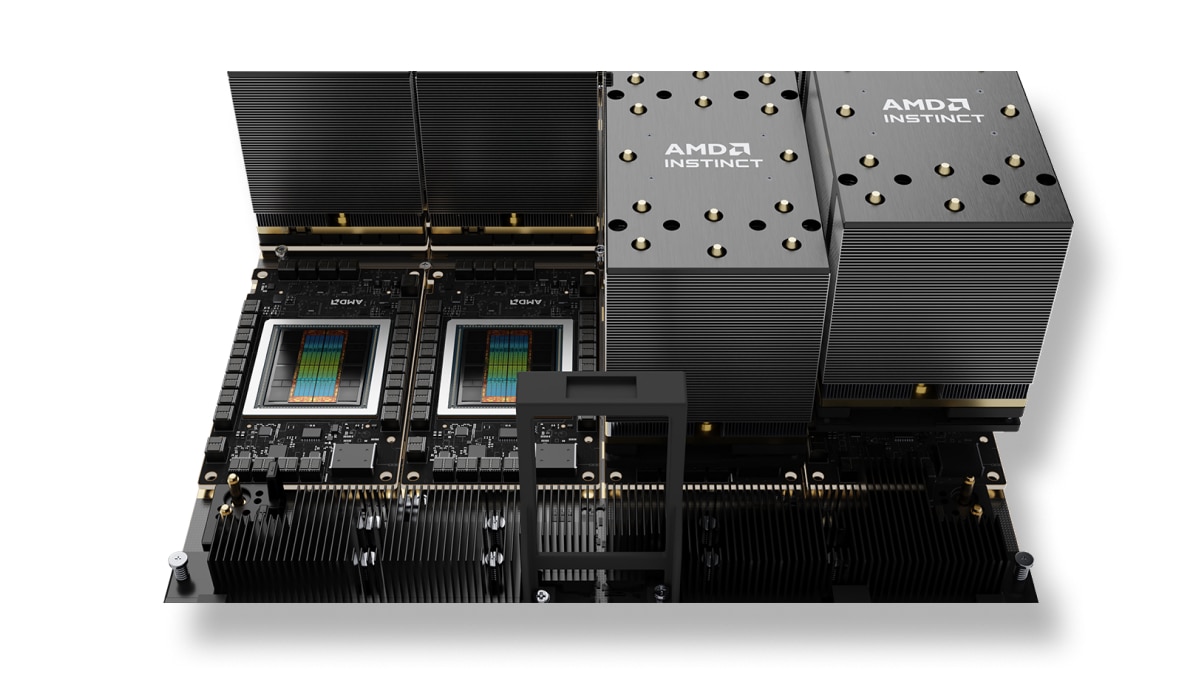

IBM and AMD have announced a collaboration to supply Zyphra, a San Francisco-based startup pursuing open-source “superintelligence”, with advanced AI training infrastructure on IBM Cloud built on AMD Instinct™ MI300X GPUs. Under the multi-year agreement, IBM will provide a large dedicated cluster of AMD accelerators for training multimodal foundation models (language, vision, and audio). According to the companies, this is the first large-scale deployment on IBM Cloud to integrate AMD’s full training stack, from compute to networking, including AMD Pensando™ Pollara 400 AI NICs and AMD Pensando Ortano DPUs. The initial phase went live in early September, with expansion planned for 2026.

The announcement follows Zyphra’s Series A round, which valued the company at $1 billion, and its aim of building a leading open-science, open-source lab focused on new neural architectures, long-term memory, and continuous learning. Its flagship upcoming product is Maia, a general-purpose “superagent” designed to boost the productivity of knowledge workers in enterprise settings.

“It’s the first time that AMD’s training platform, from compute to network, has been integrated and scaled on IBM Cloud, and Zyphra is honored to lead the development of frontier models with AMD silicon on IBM Cloud,” said Krithik Puthalath, CEO and President of Zyphra.

What’s Included: GPU, Smart Network, and Enterprise Cloud

The dedicated cluster for Zyphra combines several pieces of the AMD stack:

- AMD Instinct MI300X GPUs for generative AI training and related HPC workloads.

- AMD Pensando Pollara 400 AI NICs (smart network interface cards designed for AI) to move data between nodes at scale with low latency and high throughput.

- AMD Pensando Ortano DPUs (data processing units) to offload networking, security, and infrastructure-orchestration tasks so they don’t “steal” cycles from the main compute.

On top of this, IBM Cloud contributes its enterprise cloud layer (security, reliability, scalability, and hybrid multicloud) and has offered MI300X as a service since 2024. The approach targets a very specific use case: dedicated, isolated training clusters for clients with extreme compute needs, combined with the elasticity and governance of a hyperscaler.

“Scaling AI workloads faster and more efficiently makes a difference in ROI, both for large enterprises and startups,” emphasizes Alan Peacock, GM of IBM Cloud. For AMD, the collaboration demonstrates “innovation at speed and scale,” in the words of Philip Guido, EVP and Chief Commercial Officer, and aims to set a “new standard” for AI infrastructure by combining IBM’s enterprise cloud expertise with AMD’s leadership in HPC and AI acceleration.

What Zyphra Will Use It For: Multimodal Models and Maia, the “Superagent”

Zyphra will use this platform to develop multimodal foundation models that integrate language, vision, and audio. The immediate goal is to give Maia, a general-purpose superagent, the ability to accelerate knowledge-intensive processes (search, analysis, writing, coordination) within the enterprise. The idea is to let the agent understand documents, summarize calls, cross-reference internal and external sources, and take intermediate steps (or suggest next-best actions) in corporate systems, without forcing people to jump between applications.

Zyphra’s open-source/open-science positioning is more than a nuance: in a market dominated by closed models, its emphasis on transparency and scientific collaboration appeals to companies that want to audit the models they use or adapt them for on-premises deployment.

Why This Announcement Matters (Beyond Zyphra)

- Full-stack AMD proven in a hyperscaler environment. This is the first dedicated macrocluster on IBM Cloud to integrate AMD GPUs, NICs, and DPUs for training. It gives other companies a reference point for running AI in the cloud without giving up the isolation and performance of dedicated hardware.

- Signal to the supply chain. Large AI projects depend as much on HBM and advanced packaging as on the GPU silicon itself. Early coordination between IBM, AMD, and an anchor customer lets them plan capacity and service priorities for 2026 ahead of time.

- Real competition for NVIDIA. NVIDIA still dominates thanks to CUDA and an unrivaled software ecosystem, but AMD’s stack (ROCm, libraries, optimized kernels) is gaining traction, especially when paired with co-engineering alongside compute-heavy customers.

- Cloud as a “time-to-model” lever. Hyperscalers that offer GPU-as-a-service and dedicated contracts will have an edge in capturing frontier training workloads by shortening wait times and providing production-ready networking and services.

Towards “Quantum-Centric Supercomputing”: The Other IBM–AMD Path

Separately from this announcement, IBM and AMD have outlined plans to explore next-generation architectures along the lines of “quantum-centric supercomputing”: combining quantum computing (where IBM leads in hardware and software) with HPC and AI acceleration (where AMD excels). No detailed timeline has been given, but the stated goal is to orchestrate heterogeneous resources (classical, accelerated, and quantum) according to problem type, cost, and compute window.

Key Areas to Watch (and Risks)

- Software: functional parity and performance of ROCm versus CUDA in real workloads (efficient attention, MoE, dense training, low-latency serving) will be critical for TCO.

- HBM and OSAT: high-bandwidth memory and advanced packaging (including outsourced assembly and test) remain supply-constrained; any scaling will depend on the global supply queue.

- Power and thermal management: clusters of this scale draw megawatts of power and typically need liquid cooling (direct-to-chip or immersion). Location and electricity costs matter as much as the chip.

- Networking: even with AI NICs and DPUs offloading work, network topology and terabit-per-second fabrics remain central to long training runs.

- Timeline: Zyphra’s 2026 expansion will depend on capacity coming online gradually without bottlenecks along the way.
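A back-of-envelope estimate helps explain why power is a first-order constraint for clusters like this. The sketch below uses AMD’s listed 750 W peak board power per MI300X; every other number (1,024 GPUs, 8 GPUs per node, 2 kW of non-GPU load per node, and a PUE of 1.2) is a hypothetical assumption, since the announcement does not disclose the cluster’s size or facility figures.

```python
# Back-of-envelope facility power for a HYPOTHETICAL MI300X training cluster.
# Only the per-GPU board power comes from AMD's spec sheet; cluster size,
# per-node overhead, and PUE are illustrative assumptions, not Zyphra's figures.

MI300X_BOARD_POWER_W = 750  # AMD's listed peak board power for one MI300X


def facility_power_mw(num_gpus: int, node_overhead_w: float,
                      gpus_per_node: int, pue: float) -> float:
    """Estimate total facility power draw in megawatts.

    IT load = GPU board power + per-node overhead (CPUs, memory, NICs, DPUs, fans).
    Facility load = IT load * PUE (adds cooling and power-conversion losses).
    """
    nodes = -(-num_gpus // gpus_per_node)  # ceiling division: partial nodes count
    it_load_w = num_gpus * MI300X_BOARD_POWER_W + nodes * node_overhead_w
    return it_load_w * pue / 1e6


if __name__ == "__main__":
    # Hypothetical: 1,024 GPUs in 8-GPU nodes, 2 kW non-GPU load per node, PUE 1.2
    print(f"{facility_power_mw(1024, 2000, 8, 1.2):.2f} MW")
```

Under these assumptions the script prints 1.23 MW. The estimate scales linearly with GPU count, so a cluster several times larger quickly reaches the multi-megawatt range, before counting storage or networking outside the nodes.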

Quick Read for CTOs and Infrastructure Teams

- Use case: frontier training of multimodal models (text+image+audio) with a dedicated cluster on IBM Cloud.

- Technology: MI300X + Pensando NICs/DPUs (networking and data tasks handled closer to the rack), plus IBM’s security and hybrid multicloud layer.

- Goal: boost training productivity and efficiency at scale (lower latency, higher throughput, better PUE/TUE).

- Product: power Maia, Zyphra’s superagent for collaboration and automation in corporate settings.

Conclusion

The IBM–AMD–Zyphra collaboration adds an important piece to the landscape of AI at scale: a dedicated macrocluster on IBM Cloud with a full AMD stack (GPU + NIC + DPU), designed for frontier multimodal models, with expansion planned for 2026. For Zyphra, it fuels its open-source/open-science initiative and the effort to bring Maia from the lab to the real world; for IBM, it is a flagship case for its GPU-as-a-service offering; and for AMD, it demonstrates that its full training stack can scale in a hyperscaler environment under SLAs. In a market where training windows are shrinking and every useful watt counts, alliances that deliver real operational capacity are the most valuable currency.

FAQs

What makes this cluster “different” from other cloud AI deployments?

It’s the first dedicated macrocluster on IBM Cloud to integrate the full AMD training stack (MI300X GPUs, Pensando Pollara 400 AI NICs, and Pensando Ortano DPUs), linking compute and smart networking with IBM Cloud’s enterprise layer.

What will Zyphra focus on with this infrastructure?

Developing multimodal foundation models (text, vision, audio) to power Maia, along with research into new architectures, long-term memory, and continuous learning.

Why IBM and AMD?

Because of their cutting-edge product roadmaps and their ability to deliver accelerators at the pace Zyphra requires. IBM offers security, reliability, and scalability; AMD provides HPC expertise, GPU acceleration, NICs, and DPUs.

What’s the timeline?

The initial deployment was operational in early September 2025; the cluster’s expansion is scheduled for 2026. IBM and AMD expect to continue scaling as Zyphra’s training needs grow.

via: amd