Databricks has announced Data Intelligence for Cybersecurity, a solution that brings its lakehouse architecture into the security realm with a clear message: unify data and operations so teams can detect and investigate threats, including AI-driven ones, with more context and less friction. The offering is supported by the company’s ecosystem of partners and by Agent Bricks, its framework for building AI agents that not only analyze data but also execute governed actions at each stage of the security workflow.

The challenge they address: fragmented data, generic models, and slow responses

As attackers incorporate AI into their toolkit, many organizations remain confined to generic models and to data scattered across SIEMs, EDRs, NDRs, cloud logs, and applications. The result is a fragmented view of each attack and a slow response.

What’s included in “Data Intelligence for Cybersecurity”

- Scale AI Agents (Agent Bricks): a framework for developing and operating production-ready agents that act with precision and under governance (e.g., isolate a host, open a ticket, adjust a control).

- Conversational Security: natural language search, dashboards, and real-time insights that make posture and alerts accessible to experts and non-technical leaders.

- Unified Data Layer: a lakehouse that combines all security sources for comprehensive attack visibility and context, without being trapped by traditional SIEM limitations or vendor lock-in.

“With Data Intelligence for Cybersecurity, data and AI become the best defense,” summarizes Omar Khawaja, VP of Security and Field CISO at Databricks. “It enables a more accurate, governed, and flexible approach to building agents that proactively combat modern threats.”

Early customer results

- Arctic Wolf processes over 8 trillion events weekly and accelerates AI innovation for its SOC.

- Barracuda: 75% reduction in daily processing/storage costs, alerts in under 5 minutes, and increased engineering time for new detections.

- Palo Alto Networks: unification of fragmented data and a 3x boost in detection capabilities with AI, along with lower operational costs.

- SAP Enterprise Cloud Services: 80% reduction in engineering time, more than 5x faster rule deployment; increased visibility and savings.

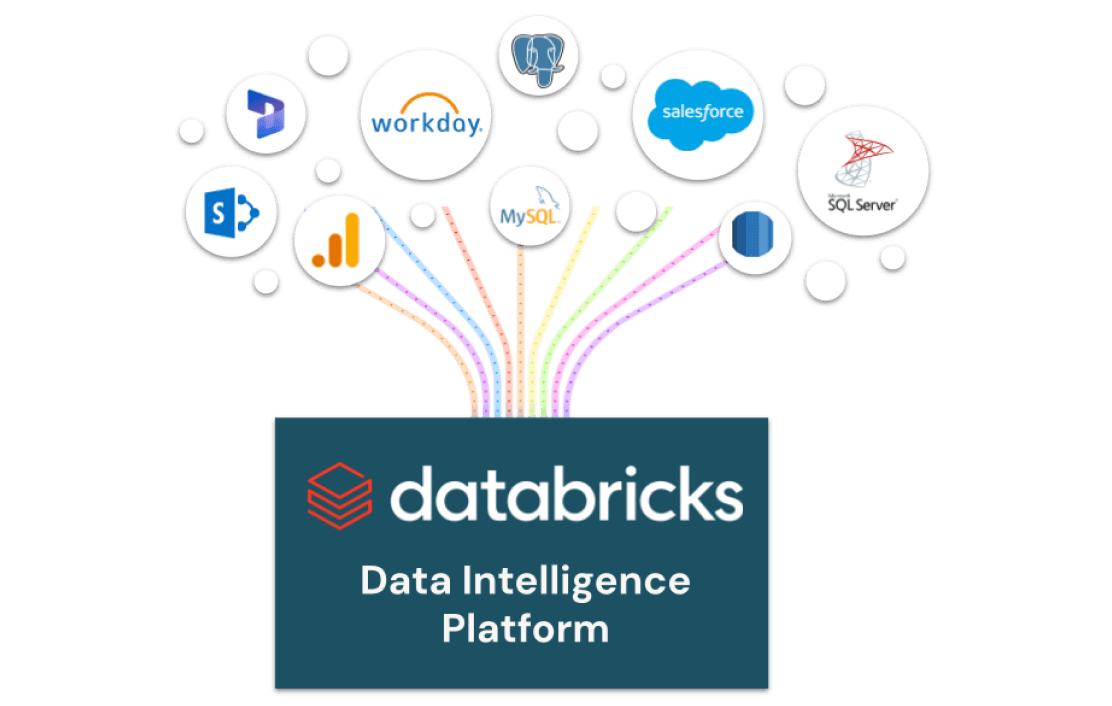

Ecosystem of partners

Integrations with numerous providers of detection, classification, data protection, and services (including Abnormal AI, Accenture Federal, ActiveFence, Arctic Wolf, BigID, Deloitte, Panther, Varonis), aimed at delivering measurable results and a unified defense.

What a CISO gains from this approach

- Fewer silos, more context: a single data plane to correlate telemetry and enrichments (identities, assets, cloud, SaaS).

- From dashboard to action: agents that operate under policies and traceability, closing the detection → analysis → response loop.

- Horizontal governance: a catalog and consistent controls (Unity Catalog/Delta Lake) across teams and environments (on-premises, cloud, edge).

- Financial health of the SOC: storage and compute optimized within the lakehouse, with openness to avoid sunk costs and vendor lock-in.
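
As an illustration of the single-data-plane point above, here is a minimal sketch in plain Python of attaching asset context to raw events from different tools. It is not Databricks code: the `Asset` type, the field names, and the `enrich` helper are all hypothetical, and a real deployment would more likely express this as a join over tables in the lakehouse.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    # Hypothetical asset-inventory record used as enrichment context.
    hostname: str
    owner: str
    criticality: str  # illustrative scale, e.g. "low" or "high"


def enrich(events, assets_by_host):
    """Attach asset context to each event; unknown hosts are flagged, not dropped."""
    enriched = []
    for ev in events:
        asset = assets_by_host.get(ev["host"])
        enriched.append({
            **ev,
            "owner": asset.owner if asset else None,
            "criticality": asset.criticality if asset else "unknown",
        })
    return enriched


# Made-up sample data: one EDR event and one cloud event.
assets = {"db-01": Asset("db-01", "data-platform", "high")}
events = [
    {"source": "edr", "host": "db-01", "signal": "suspicious_process"},
    {"source": "cloud", "host": "tmp-77", "signal": "new_public_bucket"},
]
enriched = enrich(events, assets)
```

Keeping events whose host is missing from the inventory, and marking them as `unknown`, is a deliberate choice in this sketch: an unmanaged asset is often a finding in its own right.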

Use cases that fit well

- Detection and response (SecOps): unification of logs (cloud, EDR/NDR, SaaS), streaming detections, and playbooks with actionable agents.

- Threat hunting and fraud detection with conversational search and custom, rather than generic, models.

- Data security posture: integration with classification, discovery, and protection of sensitive data (e.g., with Varonis).

- MDR/MSSP: platforms that already handle huge volumes and need costs that scale linearly, elasticity, and in-house AI.

Risks and questions to address (balanced view)

- Agent governance: set action limits, human approvals, and traceability to prevent dangerous automation.

- Data quality: a unified lake only pays off if there is modeling, normalization, and cataloging; garbage in, garbage out.

- Coexistence with SIEM: decide what stays in the SIEM (compliance, legal retention) and what migrates to the lakehouse (analytics, AI, economies of scale).

- Latency and costs: size streaming/batch processing and storage use to balance real-time performance with budget.

- Privacy and transfers: if processing PII, align with GDPR/NIS2/DORA and transfer mechanisms where applicable.
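
The agent-governance item in the list above (action limits, human approvals, traceability) can be sketched in a few lines of plain Python. This is an assumption-laden illustration, not the Agent Bricks API: the action names, the `ActionGate` class, and the policy sets are hypothetical, and the gate only records a decision rather than executing anything.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical policy: actions an agent may take on its own,
# and actions that need a named human approver first.
AUTO_ALLOWED = {"open_ticket"}
NEEDS_APPROVAL = {"isolate_host", "adjust_control"}


@dataclass
class ActionGate:
    audit_log: list = field(default_factory=list)

    def request(self, action, target, approved_by=None):
        """Return a decision for the requested action and record it for traceability."""
        if action in AUTO_ALLOWED:
            decision = "allowed"
        elif action in NEEDS_APPROVAL:
            decision = "allowed" if approved_by else "pending_approval"
        else:
            # Anything not explicitly listed is refused (default deny).
            decision = "denied"
        self.audit_log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "target": target,
            "approved_by": approved_by,
            "decision": decision,
        })
        return decision


gate = ActionGate()
d_ticket = gate.request("open_ticket", "host-1")
d_isolate = gate.request("isolate_host", "host-1")
d_isolate_ok = gate.request("isolate_host", "host-1", approved_by="analyst@example.com")
d_unknown = gate.request("delete_logs", "host-1")
```

The default-deny branch is the design choice that matters most here: an action the policy has never heard of is refused and still logged, so the audit trail also covers what the agent tried and was not allowed to do.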

Architectural fit (high level)

- Ingestion: connectors to sources (cloud, endpoints, network, SaaS) → Delta Lake with managed schemas.

- Processing: Spark/Structured Streaming + models (MLflow) + features (Feature Store).

- Governance: Unity Catalog (policies/lineage/masking).

- Action: Agent Bricks orchestrating playbooks with guardrails, integrated with ITSM, EDR/NDR, IAM, firewalls.

- Observability: dashboards, NLQ (natural language queries), detection metrics, and MTTR.
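
Two of the steps above, ingestion into a managed schema and MTTR as an observability metric, can be illustrated with a short plain-Python sketch. Everything in it is an assumption made for illustration: the source field names, the target schema, and the reading of MTTR as mean time to respond. In the architecture described, this logic would more plausibly live in Spark jobs writing to Delta tables.

```python
from datetime import datetime, timedelta


def normalize(source, raw):
    """Map a source-specific record to a shared schema (field names are illustrative)."""
    if source == "edr":
        return {"ts": raw["timestamp"], "host": raw["device_name"],
                "event": raw["alert_type"], "source": source}
    if source == "cloud":
        return {"ts": raw["eventTime"], "host": raw["resource"],
                "event": raw["eventName"], "source": source}
    raise ValueError(f"unknown source: {source}")


def mttr(incidents):
    """Mean time from detection to resolution, over resolved incidents only."""
    durations = [i["resolved_at"] - i["detected_at"]
                 for i in incidents if i.get("resolved_at")]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


# Made-up sample data.
record = normalize("edr", {"timestamp": "2025-01-01T10:00:00Z",
                           "device_name": "laptop-9",
                           "alert_type": "credential_dump"})
t0 = datetime(2025, 1, 1, 10, 0)
incidents = [
    {"detected_at": t0, "resolved_at": t0 + timedelta(minutes=30)},
    {"detected_at": t0, "resolved_at": t0 + timedelta(minutes=90)},
    {"detected_at": t0, "resolved_at": None},  # still open, excluded from the mean
]
average = mttr(incidents)
```

Rejecting unknown sources with an error, instead of passing records through unchanged, keeps malformed data out of the shared schema, which is the point of the data-quality concern raised earlier.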

Conclusion

The announcement positions Databricks at the forefront of an emerging trend: cybersecurity as a data-at-scale problem, with agents that act under governance. For organizations battling silos, costs, and generic models, the combination of lakehouse and agents offers a feasible path toward faster detection, context-rich investigations, and lower-friction response. Execution (ingest quality, agent controls, and coexistence with SIEM) will determine the return on investment.

For more details, Databricks has published technical resources, solution briefs, and customer case studies on its blog and Data Intelligence for Cybersecurity pages.