The race to lead the new generation of language models is no longer measured solely by accuracy but also by efficiency, cost, and context capacity. In this arena, xAI has launched Grok 4 Fast, an optimized variant of its Grok 4 series that combines a 2-million-token context window with fast inference and a low cost per token.

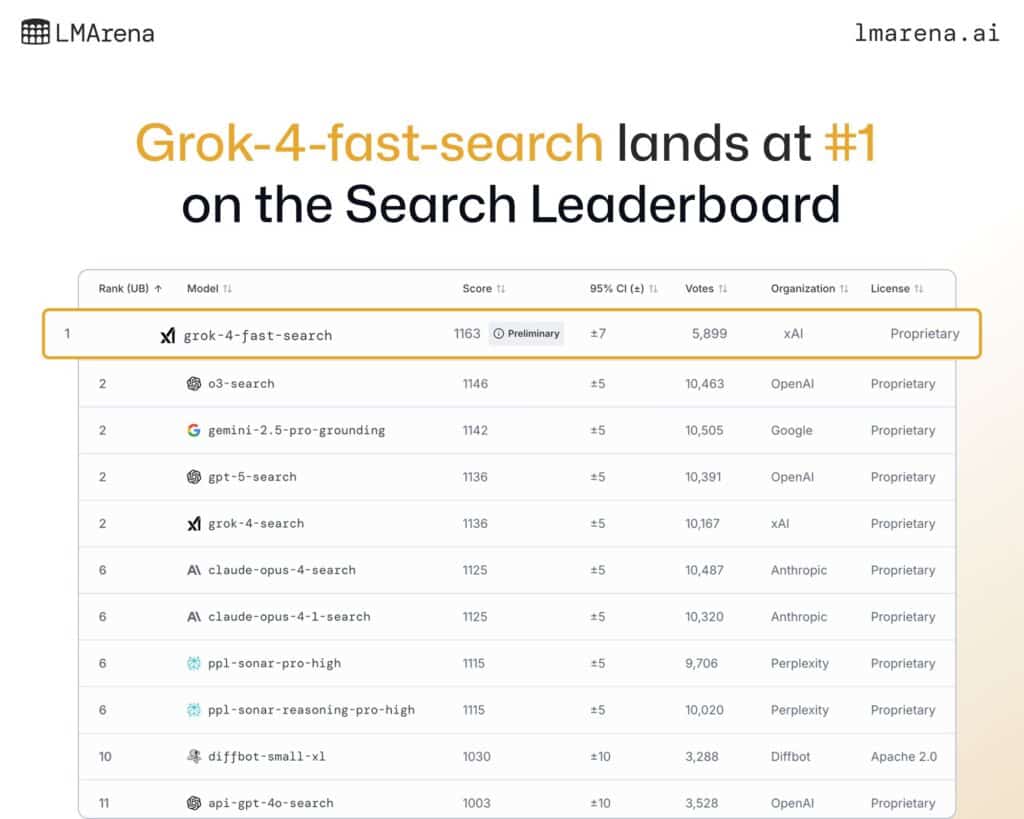

In public tests and community benchmarks such as LMArena, Grok 4 Fast has already posted strong results: #1 on the Search Leaderboard and Top 10 in the Text Arena, outperforming models from heavyweight rivals such as OpenAI and Anthropic on key tasks.

A model designed to do more with less

- Token efficiency: Grok 4 Fast achieves performance similar to Grok 4 while consuming 40% fewer “thinking tokens”. Combined with a lower price per token, this results in a 98% reduction in the cost of reaching the same performance on frontier benchmarks.

- Massive context: up to 2M tokens in a single query, enabling processing of manuals, entire document bases, or complete books like Moby Dick without losing coherence.

- Speed: optimized to respond quickly on simple queries and deploy detailed reasoning on complex cases, thanks to its unified architecture.
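The two figures in the token-efficiency bullet cannot both come from token savings alone: using 40% fewer tokens would cut cost by 40%, not 98%. A minimal sketch of the arithmetic follows, assuming cost is simply tokens multiplied by a per-token price and that both figures describe the same workload. The function name is hypothetical, and the result is only an implied ratio, not a published price.

```python
def implied_price_ratio(token_ratio: float, cost_ratio: float) -> float:
    """Per-token price ratio implied by a token ratio and a total-cost ratio.

    Assumes cost = tokens * price_per_token, so that
    cost_ratio = token_ratio * price_ratio.
    """
    if token_ratio <= 0:
        raise ValueError("token_ratio must be positive")
    return cost_ratio / token_ratio


# Figures from the article: 40% fewer thinking tokens, 98% lower cost.
token_ratio = 1 - 0.40  # Grok 4 Fast uses 60% of Grok 4's thinking tokens
cost_ratio = 1 - 0.98   # and costs 2% as much for the same performance

price_ratio = implied_price_ratio(token_ratio, cost_ratio)
print(f"Implied per-token price ratio: {price_ratio:.3f}")
print(f"Implied per-token price reduction: {1 - price_ratio:.1%}")
```

Under these simplifying assumptions, the per-token price would have to be roughly one thirtieth of Grok 4's. Most of the saving therefore comes from pricing rather than from the shorter reasoning traces.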

Key benchmarks

In academic and reasoning tests:

- AIME 2025 (no tools): Grok 4 Fast = 92%, comparable to GPT-5 High (94.6%) and surpassing Claude Opus 4 (≈91%).

- HMMT 2025: Grok 4 Fast = 93.3%, tying with GPT-5 High and outperforming Claude.

- GPQA Diamond: Grok 4 Fast = 85.7%, in line with GPT-5 High and Grok 4.

In search and navigation, Grok 4 Fast excels in:

- BrowseComp (zh): 51.2%, outperforming GPT-5 Search and Claude.

- X Bench Deepsearch (zh): 74%, compared to 66% for Grok 4 and 27% for previous models.

Comparison with GPT-5 and Claude Opus

| Feature | Grok 4 Fast (xAI) | GPT-5 High (OpenAI) | Claude Opus 4 (Anthropic) |
|---|---|---|---|
| Context window | 2M tokens | 1M tokens (extended) | 1M tokens (with extension) |
| Inference speed | Very high (optimized “fast”) | High, but more costly | High, with a safety focus |
| Token efficiency | 40% fewer thinking tokens vs Grok 4 | High consumption | Moderate-high |
| Relative cost | Up to 98% less than Grok 4 for same performance | Premium, higher $ per million tokens | Premium, focused on trust and enterprise use |
| Math benchmarks | 92–93% (AIME, HMMT) | 94–95% (AIME, HMMT) | 91–92% (AIME, HMMT) |
| Search & navigation | Frontier agentic search (web + X) | Solid, but less optimized for multi-hop searches | Limited active navigation |
| Architecture | Unified (reasoning and non-reasoning in one model) | Multiple variants (GPT-5, GPT-5 Mini) | Opus, Sonnet, Haiku variants |
| LMArena ranking | #1 in Search, #8 in Text | Top 3 in Search/Text | Top 5–10 in Search/Text |

Market landscape

- OpenAI (GPT-5): leads in raw accuracy and ecosystem tools, but at higher cost and with a context window still under 2M tokens.

- Anthropic (Claude Opus): excels in reliability, alignment, and expanded context, with strong adoption in corporate environments but lower performance in complex searches.

- xAI (Grok 4 Fast): positions itself as the “fast & efficient” model, ideal for search applications, massive document analysis, and environments where speed and cost are as important as accuracy.

Conclusion

With Grok 4 Fast, xAI demonstrates that competing at the highest level doesn’t require slower, more expensive models. Its 2M token window, combined with cost and speed efficiency, makes it an ideal candidate for:

- Businesses needing to analyze large information corpora.

- End-users seeking quick responses and advanced reasoning in complex tasks.

- Real-time search applications, where it already ranked #1 on LMArena.

In the new AI landscape, GPT-5 leads in accuracy, Claude Opus in safety and alignment, but Grok 4 Fast shines in efficiency and context, opening the door to real democratization of frontier models.

Frequently Asked Questions (FAQ)

What does it mean that Grok 4 Fast has a 2M token context?

It can process up to two million tokens in a single input, equivalent to thousands of pages of text without cuts or fragmentation.
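A rough way to check whether a document fits in such a window is to estimate its token count from its length. The sketch below assumes about 4 characters per token, a common rule of thumb for English text that varies by tokenizer and language. The constants and function names are illustrative and are not part of any xAI API.

```python
import math

CONTEXT_WINDOW_TOKENS = 2_000_000  # window size stated in the article
CHARS_PER_TOKEN = 4.0              # assumption: rough heuristic for English text


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate the token count of a text from its character length."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    text: str,
    reserved_for_output: int = 0,
    window: int = CONTEXT_WINDOW_TOKENS,
) -> bool:
    """Return True if the estimated input plus reserved output fits the window."""
    return estimate_tokens(text) + reserved_for_output <= window


# Stand-in for a long document; a real check would read the file's text.
sample = "Call me Ishmael. " * 1000
print("Estimated tokens:", estimate_tokens(sample))
print("Fits in 2M window:", fits_in_context(sample, reserved_for_output=4096))
```

This is only an estimate: an exact count requires the model's own tokenizer, and it is prudent to leave headroom for the response and any system prompt.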

Is it more accurate than GPT-5 or Claude?

In raw accuracy, GPT-5 remains slightly ahead. However, Grok 4 Fast achieves similar results at a much lower cost and higher speed.

Where has Grok 4 Fast particularly excelled?

In search and navigation benchmarks (LMArena Search Arena), outperforming OpenAI and Google, and in mathematical reasoning, matching GPT-5.

Which model is the better choice: GPT-5, Claude Opus, or Grok 4 Fast?

It depends on the use case: GPT-5 for maximum accuracy, Claude for safety and trust, Grok 4 Fast for speed, massive context, and low cost.

More info: xAI press release.