Inference has become the new battleground in artificial intelligence. Today’s models are no longer simple text or image generators; they are evolving into agentic systems capable of multi-step reasoning, persistent memory, and contexts of millions of tokens. To meet this challenge, NVIDIA has introduced Rubin CPX, a GPU designed specifically to accelerate large-context workloads with higher performance and efficiency.

The challenge of large-scale inference

As AI integrates into more industries, the requirements are changing. In software development, for example, coding copilots need to analyze entire repositories, file dependencies, and project structures. In video, generating long content requires sustained coherence over hours of footage, which can amount to more than one million tokens per context.

These workloads surpass the limits of traditional infrastructures, which were designed for relatively short contexts. The problem isn’t just computational: it also impacts memory, network bandwidth, and energy efficiency, forcing a rethink of how to scale inference.
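To make the memory pressure concrete, here is a back-of-the-envelope sketch of how the key-value cache grows with context length. The formula (one key tensor and one value tensor per layer) is the usual one for transformer attention. The model shape is hypothetical and chosen only for illustration; it does not come from NVIDIA.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: each layer stores one tensor for keys and one for values.
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# Hypothetical model shape (assumption, not a real product spec):
# 80 layers, 8 key-value heads of dimension 128, 16-bit cache entries.
size = kv_cache_bytes(tokens=1_000_000, layers=80, kv_heads=8, head_dim=128)
print(f"{size / 1e9:.1f} GB for a one-million-token context")  # 327.7 GB
```

Under these assumptions, a single million-token request needs hundreds of gigabytes of cache, which is why long contexts strain memory and bandwidth as well as compute.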

Disaggregated inference: separating to optimize

NVIDIA proposes an architecture of disaggregated inference, where processing is divided into two distinct phases:

- Context phase: compute-intensive. The system must ingest and analyze large volumes of data to generate the first output.

- Generation phase: memory-intensive. Requires fast transfers and an efficient interconnect to produce token-by-token results.

Separating these phases lets each one be provisioned for its own bottleneck: more compute for initial ingestion and more memory bandwidth for sustained generation. However, it also introduces new layers of complexity: key-value (KV) cache transfer and coordination, model-aware routing, and advanced memory management.
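The two-phase split can be sketched in a few lines of plain Python. This is a toy illustration, not NVIDIA's implementation: the names `KVCache`, `context_phase`, `generation_phase`, and `serve` are hypothetical, and simple arithmetic stands in for the model's forward pass.

```python
from dataclasses import dataclass, field


@dataclass
class KVCache:
    """Stand-in for the per-request key-value cache handed between phases."""
    entries: list = field(default_factory=list)


def context_phase(prompt_tokens):
    """Compute-heavy phase: process the whole prompt at once and build the cache."""
    cache = KVCache(entries=list(prompt_tokens))
    first_token = sum(prompt_tokens) % 100  # toy stand-in for a forward pass
    return cache, first_token


def generation_phase(cache, first_token, max_new_tokens):
    """Memory-heavy phase: emit one token per step, rereading the growing cache."""
    output = [first_token]
    cache.entries.append(first_token)
    while len(output) < max_new_tokens:
        next_token = (sum(cache.entries) + len(cache.entries)) % 100
        output.append(next_token)
        cache.entries.append(next_token)
    return output


def serve(prompt_tokens, max_new_tokens):
    # In a disaggregated deployment these two calls would run on different
    # GPU pools, with the cache transferred between them.
    cache, first = context_phase(prompt_tokens)
    return generation_phase(cache, first, max_new_tokens)


tokens = serve([3, 14, 15, 92], max_new_tokens=4)
print(tokens)
```

The only state shared between the two functions is the cache object, which is why moving and coordinating the KV cache becomes the central systems problem once the phases run on separate hardware.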

This is where NVIDIA Dynamo comes in. It is the orchestration platform that coordinates these processes, and it has been key to recent MLPerf Inference records.

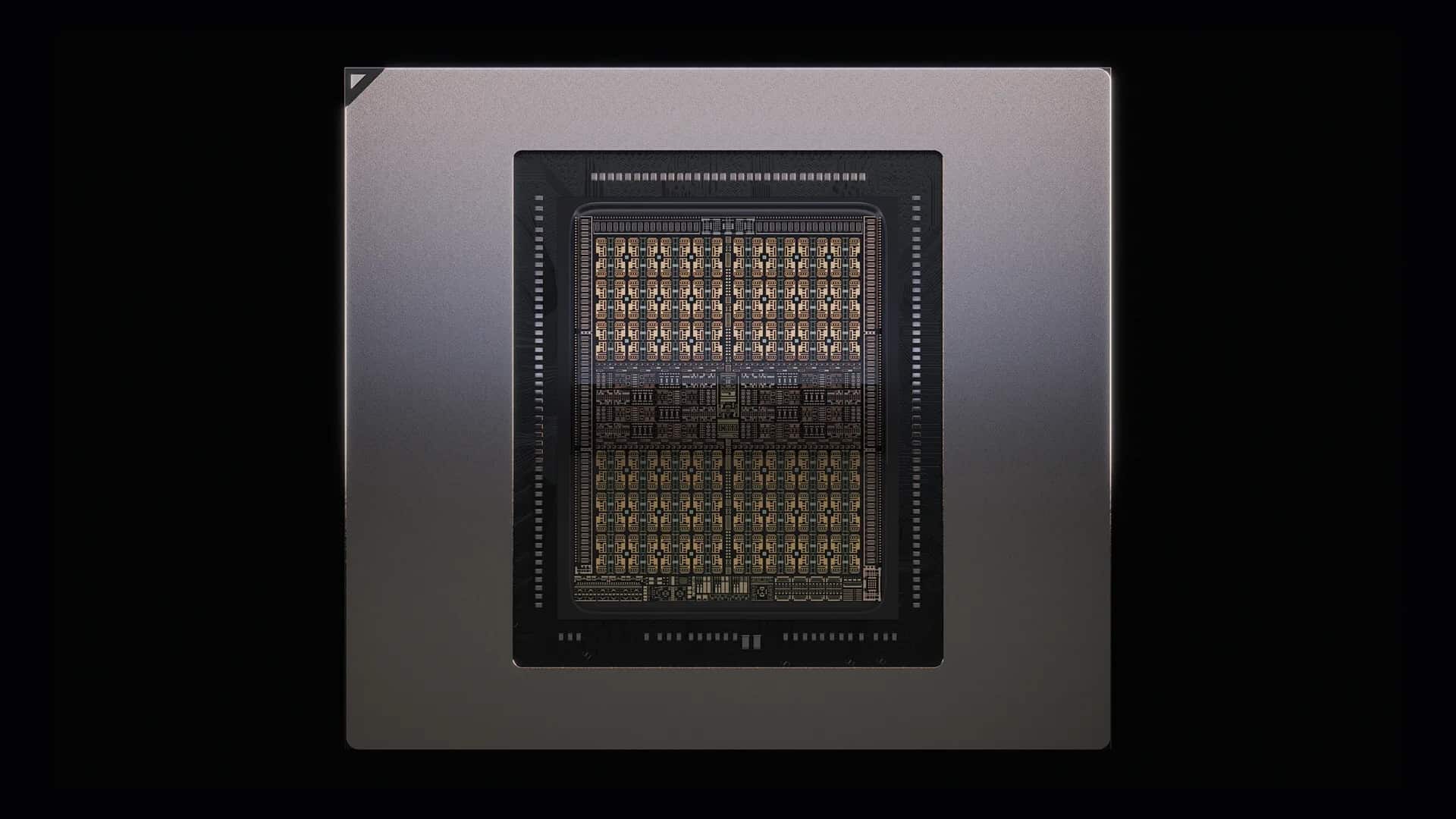

Rubin CPX: acceleration for massive context

Rubin CPX addresses the bottleneck in the context phase. Built on the Rubin architecture, the GPU delivers:

- 30 petaFLOPs of compute in NVFP4.

- 128 GB of high-efficiency GDDR7 memory.

- Three times faster attention than GB300 NVL72 systems.

- Native support for high-resolution video decoding and encoding.

Thanks to these features, Rubin CPX stands out as the key component for high-value applications, from AI-driven software development to HD video generation and large-scale research.

Vera Rubin NVL144 CPX: exaFLOP-scale power

The new GPU isn’t standalone. It is part of the NVIDIA Vera Rubin NVL144 CPX platform, a rack that integrates:

- 144 Rubin CPX GPUs for the context phase.

- 144 Rubin GPUs for the generation phase.

- 36 Vera CPUs to coordinate and optimize workflow.

The result is 8 exaFLOPs of NVFP4 compute, 100 TB of high-speed memory, and 1.7 PB/s of memory bandwidth, all in a single rack. That is 7.5 times the AI performance of the GB300 NVL72 platform.
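The rack-level figures can be cross-checked against the per-GPU numbers quoted earlier. The sketch below uses only values stated in this article. The "remainder" and the implied GB300 NVL72 figure are inferences from rounded headline numbers, not published specifications.

```python
PETA, EXA = 1e15, 1e18

# Figures quoted in the article.
cpx_gpus = 144
cpx_flops_each = 30 * PETA   # NVFP4 compute per Rubin CPX GPU
rack_flops = 8 * EXA         # NVFP4 compute per rack (rounded headline figure)
speedup_vs_gb300 = 7.5

# Derived values (inferences, not vendor specifications).
cpx_total = cpx_gpus * cpx_flops_each          # share contributed by Rubin CPX GPUs
remainder = rack_flops - cpx_total             # left for the rest of the rack
implied_gb300 = rack_flops / speedup_vs_gb300  # baseline implied by the 7.5x claim

print(f"Rubin CPX GPUs combined: {cpx_total / EXA:.2f} exaFLOPs")      # 4.32
print(f"Remainder of the rack:   {remainder / EXA:.2f} exaFLOPs")      # 3.68
print(f"Implied GB300 NVL72:     {implied_gb300 / EXA:.2f} exaFLOPs")  # 1.07
```

By this arithmetic, a little more than half of the rack's headline compute comes from the context-phase GPUs.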

Interconnection relies on Quantum-X800 InfiniBand and Spectrum-X Ethernet, alongside ConnectX-9 SuperNICs, ensuring low latency and scalability in distributed AI environments.

Inference economics: ROI at the core

Beyond performance, NVIDIA emphasizes economic impact. According to its estimates, a deployment based on Rubin CPX can deliver a 30x to 50x return on investment, generating up to $5 billion in token revenue for every $100 million of CAPEX.

This approach reflects a shift in metrics: it’s no longer just about FLOPs but about cost-effective token processing, a key indicator for companies offering AI-generated services.
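The quoted figures are easy to check. The sketch below assumes that "ROI" here means token revenue divided by capital expenditure, which is the reading that makes $5 billion on $100 million equal 50x. A stricter definition (net gain divided by cost) would give 49x. Operating costs are ignored because the article does not state them.

```python
capex = 100e6         # $100 million investment, as quoted
token_revenue = 5e9   # up to $5 billion in token revenue, as quoted

# Assumption: the multiple is revenue / CAPEX, not (revenue - cost) / cost.
revenue_multiple = token_revenue / capex
print(f"Upper bound: {revenue_multiple:.0f}x")  # 50x

# The lower end of the quoted 30x to 50x range, expressed as revenue.
low_revenue = 30 * capex
print(f"Lower bound: ${low_revenue / 1e9:.0f} billion per $100 million")  # $3 billion
```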

Transformative use cases

Rubin CPX’s value translates into concrete applications:

- Software development: assistants that understand entire repositories, commit histories, and documentation without retraining.

- Video generation: AI capable of maintaining narrative and visual coherence in long-form content with cinematic quality.

- Research and science: analysis of large knowledge bases, enabling AI agents to work with extensive corpora in real time.

- Autonomous agents: systems capable of making complex decisions with million-scale contextual memories.

A ready ecosystem

Rubin CPX seamlessly integrates into the NVIDIA software stack:

- TensorRT-LLM, to optimize language model inference.

- NVIDIA Dynamo, as the orchestration and resource-efficiency layer.

- Nemotron, a family of multimodal models with advanced reasoning capabilities.

- NVIDIA AI Enterprise, ensuring production-ready deployments across cloud, data centers, and workstations.

Availability

NVIDIA expects Rubin CPX to be available by late 2026, aligning with the increasing demand for long-context inference and the rise of next-generation AI agents.

Conclusion

With Rubin CPX, NVIDIA is not just launching another GPU: it is introducing a new way to think about inference, separating the context and generation phases and providing specialized hardware for each.

In a world where AI must understand entire repositories, generate hours-long videos, and sustain autonomous agents, this architecture offers not only power but also efficiency and economic viability.

Rubin CPX and the Vera Rubin NVL144 CPX platform are redefining the future of AI, placing massive inference at the heart of technological and business debates.

Frequently Asked Questions (FAQ)

What sets Rubin CPX apart from other NVIDIA GPUs?

Rubin CPX is designed specifically for the context phase of inference. It is optimized for long-sequence processing and delivers up to three times the attention performance of GB300 NVL72 systems.

What is disaggregated inference?

It’s a model that separates processing into two phases, context and generation, so that compute and memory can be optimized independently for better efficiency and latency.

What economic ROI can Rubin CPX provide?

NVIDIA estimates a 30x to 50x ROI, with up to $5 billion in token revenue per $100 million in infrastructure investment.

When will Rubin CPX be available?

The GPU and the Vera Rubin NVL144 CPX platform are expected to be ready for customers by late 2026.

via: developer.nvidia