Canonical, the company behind Ubuntu, has announced official support for the new NVIDIA Jetson Thor family, strengthening a strategic partnership with NVIDIA to accelerate innovation in generative AI, humanoid robotics, and edge computing. With this integration, developers and companies will be able to access optimized Ubuntu images for Jetson Thor modules, backed by Canonical’s reliability, security, and enterprise-grade support.

This news comes at a pivotal moment: as AI moves from the cloud to smaller devices close to the user, the challenge is to run increasingly powerful models in environments with tight power budgets, low-latency requirements, and strict regulatory constraints.

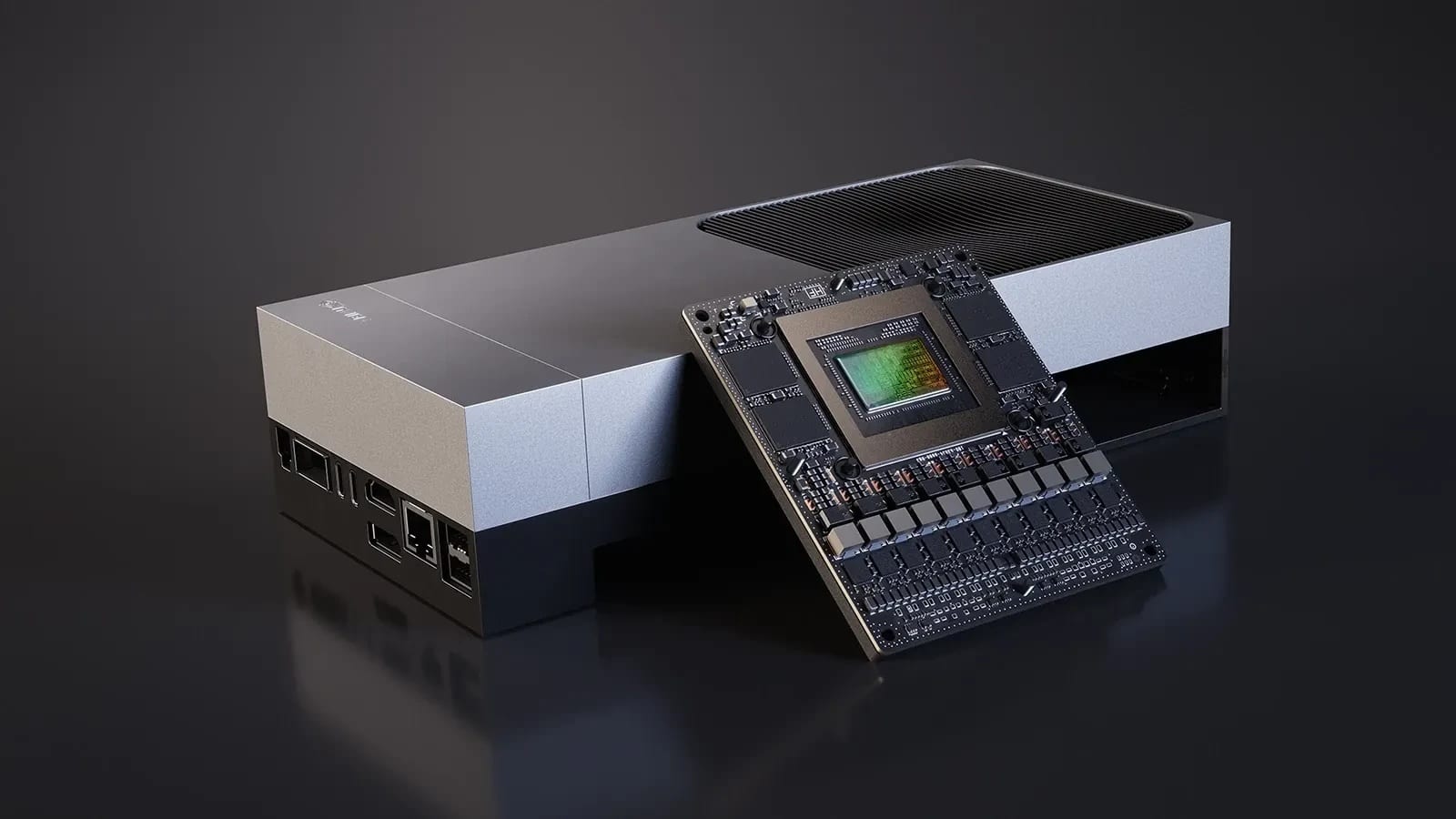

Jetson Thor: a giant leap beyond Orin

The new Jetson Thor generation redefines edge computing standards. Based on the NVIDIA Blackwell architecture, it features up to 128 GB of memory and delivers up to 2,070 TFLOPS of FP4 compute.

The numbers speak volumes:

- Up to 7.5 times the AI compute of Jetson Orin.

- 3.5 times better energy efficiency.

- A compact, low-power format capable of running cutting-edge generative AI models on humanoid robots, medical devices, autonomous vehicles, or industrial systems.
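
As a back-of-the-envelope reading of the figures above, the short sketch below derives two implied values: the throughput the Orin baseline would need for the 7.5x claim to hold, and the power ratio implied if efficiency is taken to mean performance per watt. Both are assumptions made for illustration; the announcement does not state which precision the Orin figure uses, or whether the two ratios were measured at the same operating point.

```python
def implied_baseline(thor_tflops: float, speedup: float) -> float:
    """Throughput the comparison baseline would need for the quoted speedup."""
    return thor_tflops / speedup


def implied_power_ratio(speedup: float, efficiency_gain: float) -> float:
    """Assuming efficiency = performance / power, power ratio = speedup / efficiency gain."""
    return speedup / efficiency_gain


# Figures quoted in the article; everything derived from them is an estimate.
baseline = implied_baseline(2070, 7.5)
power_ratio = implied_power_ratio(7.5, 3.5)

print(f"Implied baseline throughput: {baseline:.0f} (same unit as the Thor figure)")
print(f"Implied power ratio: {power_ratio:.2f}x")
```

Read this way, the headline numbers suggest that Thor draws roughly twice the power of its predecessor at peak while delivering 7.5 times the compute.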

The Jetson AGX Thor Developer Kit provides a comprehensive environment for development and prototyping, while the Jetson Thor modules are designed for integration into production hardware.

Ubuntu as a trusted pillar at the edge

Canonical’s announcement means Jetson Thor can run official Ubuntu 24.04 LTS images (and future releases), with long-term security maintenance and critical patches.

Key benefits include:

- Enterprise security and regulatory compliance: Canonical guarantees regular updates aligned with regulations like the European Cyber Resilience Act, vital for sectors such as healthcare, automotive, or defense.

- Certified stability: each Ubuntu image passes over 500 hardware compatibility tests, ensuring reliability in production.

- Frictionless development: the same Ubuntu architecture can be used across workstations, public clouds, and edge devices, facilitating large-scale, consistent deployments.

Canonical emphasizes that this combination lets companies and startups innovate without compromising security, resolving the classic trade-off between moving fast and staying compliant.

Unique capabilities for generative AI and autonomous robotics

Jetson Thor is designed not just for raw performance but also with specific workloads in mind:

- Real-time kernel: ensures deterministic latencies, crucial in humanoid robotics or medical systems.

- Multi-Instance GPU (MIG): divides a GPU into multiple secure, isolated instances, allowing different models to run simultaneously with guaranteed performance.

- Compatibility with popular GenAI models: from Llama 3.1 to Qwen3, Gemma 3, or DeepSeek R1, including vision-language-action models like NVIDIA GR00T N.

- NVIDIA Holoscan: streams sensor data directly to the GPU with minimal latency, eliminating CPU bottlenecks and opening new possibilities in computer vision or autonomous vehicles.

This positions Jetson Thor as a true hub for physical AI, where the line between algorithms and the real world blurs.
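
For the real-time kernel item in the list above, a quick sanity check is to ask the running system whether it is actually an RT build. The sketch below is a minimal example under two assumptions that are not confirmed by the announcement: that the Jetson Thor real-time kernel identifies itself the way other PREEMPT_RT Linux kernels do (a `PREEMPT_RT` marker in the kernel version string), and that it may expose the `/sys/kernel/realtime` flag that RT-patched kernels commonly provide.

```python
import platform
from pathlib import Path


def looks_realtime(version: str) -> bool:
    """Return True if a kernel version string carries a PREEMPT_RT marker."""
    return "PREEMPT_RT" in version or "PREEMPT RT" in version


def running_realtime_kernel() -> bool:
    """Best-effort check of the currently running kernel (Linux only)."""
    rt_flag = Path("/sys/kernel/realtime")  # present on many RT-patched kernels
    if rt_flag.exists() and rt_flag.read_text().strip() == "1":
        return True
    # Fall back to the version string, the same text `uname -v` prints.
    return looks_realtime(platform.version())


print("Real-time kernel detected:", running_realtime_kernel())
```

A check like this can be run at service start-up so that a latency-sensitive control loop refuses to start on a non-RT kernel.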

Implications for developers

The arrival of official Ubuntu support on Jetson Thor is particularly significant for developers. They can run generative AI models locally instead of always relying on the cloud, which:

- Lowers inference costs.

- Minimizes latency (crucial in robotics and automotive).

- Boosts privacy by keeping data on the device.

Additionally, the ecosystem will be compatible from day one with inference tooling such as Ollama, vLLM, and the Hugging Face libraries, among others, easing teams’ transition to the platform.
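
To make the local-inference point concrete, here is a minimal sketch of querying a model served on the device itself through Ollama's HTTP API. It assumes an Ollama server is already running on its default local port and that a model tagged `llama3.1` has been pulled; neither detail comes from the announcement, and the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust if the server is configured differently.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally served model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("llama3.1", "Describe the objects in front of the robot.")` returns the completion as a string. The request never leaves the device, which is where the cost, latency, and privacy benefits listed above come from.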

A long-term strategy: democratizing edge AI

Canonical frames this partnership within its Silicon Partner Program, aimed at providing certified Ubuntu images for key hardware manufacturers. NVIDIA is one of its most strategic partners, and Jetson Thor is just the latest step in a relationship that has already provided official support for previous generations such as Orin.

Both companies share the view that the future of AI is hybrid: the cloud remains essential, but more processing will run at the network’s edge, closer to users and physical devices.

With Jetson Thor and Ubuntu, Canonical and NVIDIA aim to democratize AI across sectors, overcoming limitations posed by the lack of secure, efficient, and standardized platforms.

What’s next: availability and deployment

Canonical has announced that official Ubuntu images for Jetson Thor will be available on its download portal in the coming weeks.

Developers will be able to choose between:

- Images for the Developer Kit, ideal for exploration and prototyping.

- Production-ready images for modules.

- Versions of Ubuntu Core, designed for IoT and secure edge device deployments.

The process will be straightforward: download the appropriate image, write it to a USB drive or NVMe device, and load it onto the module.
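
The announcement does not describe a verification step, but checking a downloaded image against a published checksum before writing it is a common precaution. The sketch below assumes a SHA-256 digest is published alongside the image; the file name and the digest shown in the usage comment are placeholders, not real values.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large images do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def image_matches(path: Path, expected_hex: str) -> bool:
    """Compare the file's digest with the published one, ignoring case and whitespace."""
    return sha256_of(path) == expected_hex.strip().lower()


# Hypothetical usage; replace both values with the real file and published digest:
# image_matches(Path("ubuntu-jetson-thor.img"), "<published sha256>")
```

Only if the digests match is it worth writing the image to the USB or NVMe device.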

Frequently Asked Questions (FAQ)

What is NVIDIA Jetson Thor?

It’s NVIDIA’s new edge platform for physical AI and advanced robotics. Built on the Blackwell architecture, it offers up to 2,070 TFLOPS of FP4 compute, up to 128 GB of memory, and 3.5 times the energy efficiency of its predecessor, Orin.

What does official Ubuntu support provide?

It provides stability, long-term security patches, hardware certification, and regulatory compliance, reducing risk in critical sectors like healthcare, automotive, or manufacturing.

Which practical applications stand to benefit most?

Humanoid robotics, autonomous vehicles, medical devices, smart factories, and vision systems, as well as edge generative AI workloads where local inference reduces latency and cloud costs.

When will Ubuntu images for Jetson Thor be available?

Canonical says they will be available on its download portal in the coming weeks, for both the Developer Kit and production modules.

Is it compatible with current AI models?

Yes. Jetson Thor works with tooling such as the Hugging Face libraries, vLLM, and Ollama, and with popular models like Llama 3.1, Qwen3, or Gemma 3, including multimodal and vision-language-action models.