NVIDIA has taken another step forward in the large-scale artificial intelligence race with its new chip, Blackwell Ultra. This chip is not just an evolutionary leap over previous generations—it embodies the core of what the company calls the AI factory era: infrastructures capable of training and deploying multimodal models with trillions of parameters, serving billions of users in real time.

According to detailed information on NVIDIA’s developer website, innovations in silicon, memory, and interconnection combine to deliver unprecedented levels of performance and efficiency.

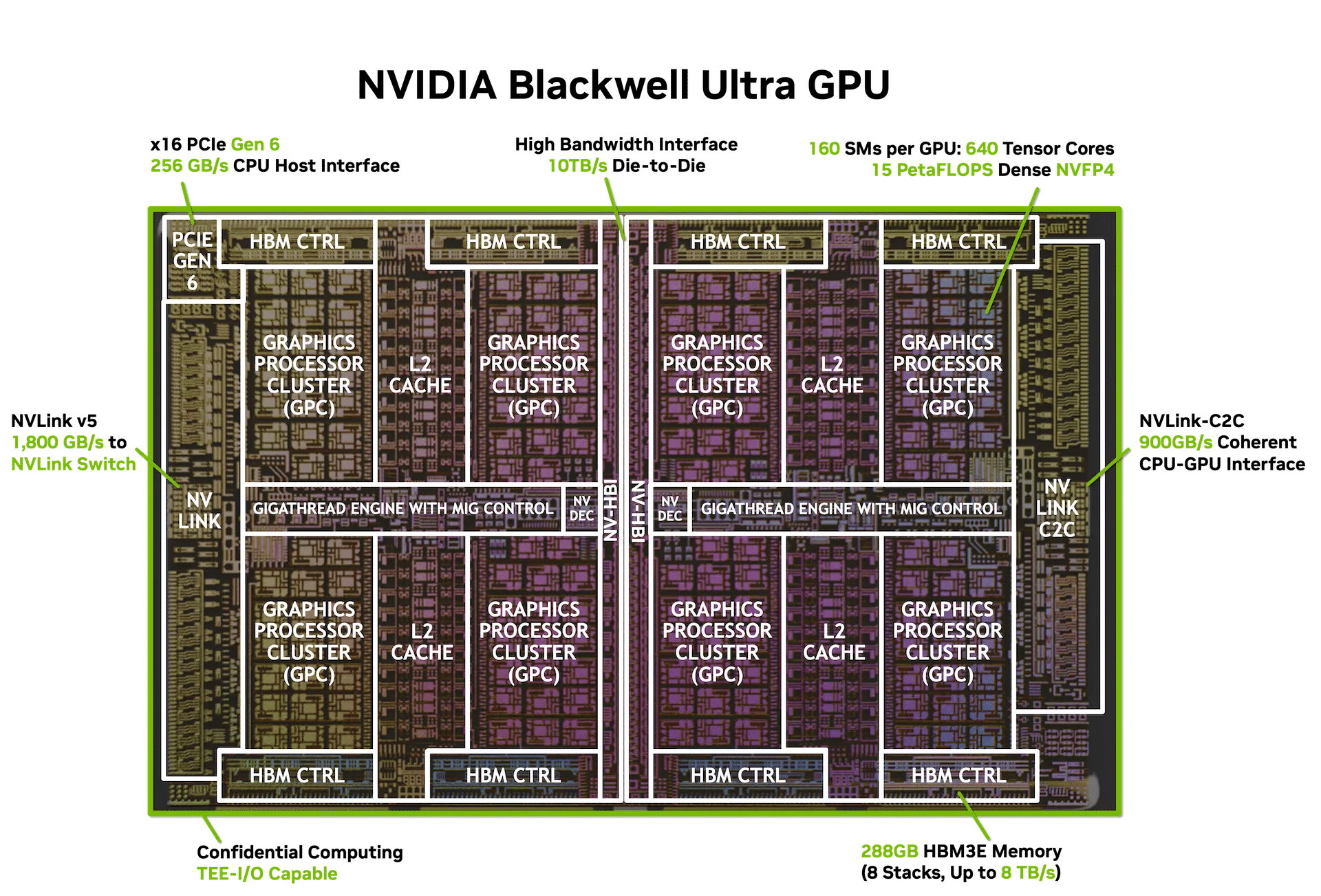

Blackwell Ultra features a dual-die design, with two maximum-sized dies (limited by photolithography) connected via NV-HBI, a custom 10 TB/s interconnect. This setup allows the chip to operate as a single, programmable accelerator with CUDA, maintaining compatibility with NVIDIA’s two-decade-old software ecosystem.

The result is a logical chip containing 208 billion transistors—2.6 times more than Hopper’s architecture.

Each GPU contains 160 streaming multiprocessors (SMs) organized into eight clusters. Each SM includes 128 traditional CUDA cores, four fifth-generation Tensor Cores enhanced with Transformer Engine, optimized for low-precision formats like FP8, FP6, and the new NVFP4, and 256 KB of Tensor Memory to reduce external memory traffic.

The NVFP4 format combines block-scaling in FP8 with tensor-level FP32 precision, offering efficiency and nearly identical accuracy to FP8 but with up to 3.5 times less memory consumption. This enables 15 PetaFLOPS of dense power in NVFP4, surpassing the standard Blackwell by 1.5 times and exceeding Hopper H100/H200 by 7.5 times.

NVIDIA has also doubled the performance of dedicated mathematical units (SFUs) for softmax calculations in transformer models, achieving 10.7 teraexponentials per second. In practice, this reduces the time to process the first token in conversational models, enhances energy efficiency by lowering computation cycles per query, and improves user experience in interactive and reasoning applications.

Blackwell Ultra includes 288 GB of HBM3e memory per GPU—50% more than Blackwell and 3.6 times more than Hopper—with an 8 TB/s bandwidth. This capacity supports models with over 300 billion parameters without offloading techniques, extends language model context lengths, and allows high-concurrency inference with lower latency.

The chip supports NVLink 5 with 1.8 TB/s of bi-directional bandwidth per GPU—doubling Hopper’s capacity—and can be integrated into topologies with up to 576 GPUs without bottlenecks. It connects to CPUs via NVLink-C2C at 900 GB/s and offers PCIe Gen6 compatibility at 256 GB/s, enabling configurations like NVL72—72 GPUs interconnected with 130 TB/s total bandwidth—designed for massive training and inference clusters.

Beyond raw performance, Blackwell Ultra includes features for enterprise deployments: Multi-Instance GPU (MIG), which partitions a GPU into up to seven dedicated instances; Confidential Computing and TEE-I/O for secure execution environments; and AI-driven Reliability, Availability, and Serviceability (RAS) to monitor parameters and predict failures.

It also accelerates multimodal processing with specialized engines for video, images, and data compression—NVDEC for codecs like AV1, HEVC, H.264; NVJPEG for bulk image decompression; and a decompression engine running at 800 GB/s to lighten CPU load and speed up training data flows.

NVIDIA offers Blackwell Ultra in various configurations, including the Grace Blackwell Ultra Superchip (a CPU plus two GPUs with 1 TB of unified memory and over 40 PetaFLOPS in NVFP4), GB300 NVL72 rack (with 36 superchips reaching 1.1 exaFLOPS in FP4, with energy management innovations), and standard HGX and DGX B300 setups with eight GPUs for flexible deployment.

Economically, Blackwell Ultra enhances the cost-efficiency of AI by delivering more tokens per second per watt and reducing inference costs—key factors as training large models can cost hundreds of millions of dollars in infrastructure and energy. NVIDIA reports that the platform halves the energy cost per token compared to Hopper, which could be a decisive factor for widespread adoption across sectors like healthcare, automotive, finance, and entertainment.

Frequently, asked questions highlight that Blackwell Ultra offers 50% higher NVFP4 performance, doubles HBM3e memory, and significantly improves softmax operation speeds essential to language models. It’s especially suited for multimodal language models with trillions of parameters, real-time reasoning applications, and massive cloud inference services. In full rack configurations, it reaches 1.1 exaFLOPS in FP4, tailored for generative AI and large-scale simulations. Compatibility with CUDA and existing software ensures smooth transitions for developers and researchers.

With Blackwell Ultra, NVIDIA not only consolidates its leadership position but also sets the technical and economic standard for the next decade of AI.