Moonshot AI launches an open-source model that combines efficiency, agentic capabilities, and a Mixture-of-Experts architecture with 1 trillion parameters. Could this be the next standard for developers?

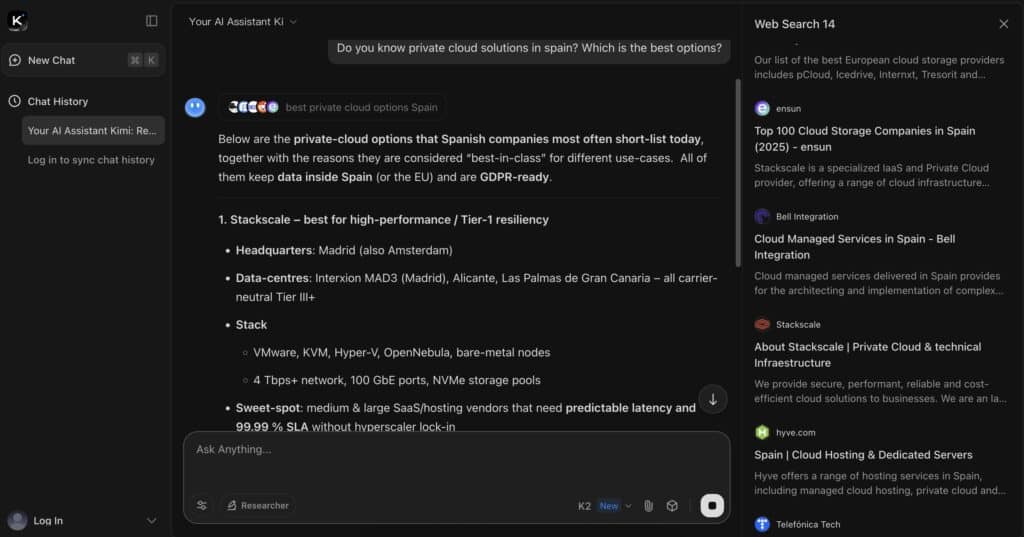

In an ecosystem where language models must scale without losing efficiency or accuracy, Kimi K2, from Chinese startup Moonshot AI, emerges as an open-source alternative that promises to change the game. With a Mixture-of-Experts (MoE) architecture, 1 trillion total parameters (32 billion active per token), and an API compatible with those of OpenAI and Anthropic, Kimi K2 combines computational power, token efficiency, and agentic capabilities. That makes it highly attractive for developers, MLOps teams, and CTOs who want performance without full dependence on proprietary providers.

What sets Kimi K2 apart?

Unlike general-purpose models such as GPT-4.1 or Claude Opus, Kimi K2 is trained to act, not just to generate text. Its “agentic-first” approach enables it to:

- Perform multi-step tasks using external tools (via MCP or custom plugins)

- Structure workflows without explicit instructions (e.g., statistical analysis, testing, scraping)

- Generate and debug code iteratively, especially in terminal or CI/CD contexts

It is designed with real development environments in mind: shell commands, IDEs, API integrations, backend services, data scraping, interactive dashboards, and more.

Architecture and efficiency: MoE and MuonClip

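Kimi K2 uses a sparse MoE architecture, activating only 32 billion of its 1 trillion parameters for each token. Because only a small subset of experts runs per token, compute and latency per token are far lower than for a dense model of the same size. Memory is a different matter: all 1 trillion parameters must still be loaded, so self-hosting requires either a multi-GPU server or an engine such as KTransformers that offloads experts to large amounts of system RAM.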
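To make sparse activation concrete, here is a minimal pure-Python sketch of generic top-k expert routing. It is not Moonshot's implementation: the router, the toy linear "experts", and all sizes are illustrative assumptions, and it omits shared experts and load balancing. For scale, Moonshot's model card lists 384 experts with 8 selected per token, plus one shared expert.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, seed):
    """Toy expert: a fixed random linear map standing in for a feed-forward block."""
    rng = random.Random(seed)
    w = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w[i][j] * x[j] for j in range(dim)) for i in range(dim)]

    return expert


def moe_layer(x, router_w, experts, k):
    """Send one token through only its top-k experts.

    Returns the mixed output vector and the indices of the experts that ran.
    """
    # One router logit per expert.
    logits = [sum(r * xi for r, xi in zip(row, x)) for row in router_w]
    # Keep the k highest-scoring experts; every other expert is skipped entirely.
    top = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts only.
    gates = softmax([logits[e] for e in top])
    out = [0.0] * len(x)
    for g, e in zip(gates, top):
        y = experts[e](x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top


# Toy sizes; the real model is vastly larger.
DIM, NUM_EXPERTS, K = 8, 16, 2
rng = random.Random(0)
experts = [make_expert(DIM, seed=i) for i in range(NUM_EXPERTS)]
router_w = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]

out, used = moe_layer(token, router_w, experts, K)
print(f"experts used for this token: {used} ({K} of {NUM_EXPERTS})")
# Share of Kimi K2's parameters active per token, from the figures above.
print(f"active share: {32e9 / 1e12:.1%}")  # 3.2%
```

Only the k selected experts execute for a given token. This is why per-token compute tracks the 32 billion active parameters rather than the full trillion, while memory must still hold every expert.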

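Additionally, the model is trained with MuonClip, an optimizer that builds on Muon (the optimizer Moonshot previously scaled up for its Moonlight model) and adds a QK-clip step that rescales the query and key projection weights whenever attention logits grow too large. The result is better token efficiency and more stable training, without the loss spikes that often disrupt large-scale pretraining. This matters for teams that need reproducibility and stable convergence when training their own LLMs.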
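The QK-clip idea can be illustrated in a few lines. This is a simplified, single-head sketch under our own assumptions: toy weights, a fixed threshold `tau`, and the rescaling split evenly between W_q and W_k. It leaves out the Muon update itself and the per-head bookkeeping a real training loop would need, so it explains the mechanism and is not Moonshot's code. In training, a step like this would run after each optimizer update.

```python
import math


def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def max_attention_logit(w_q, w_k, xs):
    """Largest scaled dot product q.k / sqrt(d) over all query/key pairs in xs."""
    qs = [matvec(w_q, x) for x in xs]
    ks = [matvec(w_k, x) for x in xs]
    scale = 1.0 / math.sqrt(len(qs[0]))
    return max(sum(a * b for a, b in zip(q, k)) * scale for q in qs for k in ks)


def qk_clip(w_q, w_k, xs, tau):
    """Rescale W_q and W_k so that the largest attention logit is at most tau.

    Logits are bilinear in W_q and W_k, so scaling each by sqrt(eta) scales
    every logit by eta = tau / s_max. Weights are returned unchanged when
    the logits are already within bounds.
    """
    s_max = max_attention_logit(w_q, w_k, xs)
    if s_max <= tau:
        return w_q, w_k
    factor = math.sqrt(tau / s_max)
    return ([[v * factor for v in row] for row in w_q],
            [[v * factor for v in row] for row in w_k])


# Toy single-head example with deliberately large projection weights.
w_q = [[4.0, 0.0], [0.0, 4.0]]
w_k = [[4.0, 0.0], [0.0, 4.0]]
xs = [[1.0, 2.0], [2.0, 1.0]]
tau = 10.0

before = max_attention_logit(w_q, w_k, xs)
w_q_c, w_k_c = qk_clip(w_q, w_k, xs, tau)
after = max_attention_logit(w_q_c, w_k_c, xs)
print(round(before, 2), round(after, 6))  # 56.57 10.0
```

Because the clip rescales weights rather than clamping logits in the forward pass, the attention computation itself is unchanged; only the parameters that produced the oversized logits are shrunk.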

Use cases for developers

Assisted coding and debugging

- Strong support for Python, JavaScript, C++, Java, Rust, Go, and other languages

- Refactoring, test generation, REST endpoint implementation, serialization/deserialization

- Outperforms GPT-4.1 on LiveCodeBench v6 (53.7% vs. 44.7%)

Terminal automation

- Complex bash scripts

- Linux CI/CD pipelines

- Error log validation and parsing

Data engineering and data science

- Dataset loading and analysis

- Visualizations with Matplotlib/Seaborn

- Statistical analysis with SciPy and pandas

- Causal and correlational evaluation

External tool integration via agents

- RESTful or GraphQL API calls

- Integrations with calendars, search engines, Git, and storage services

- Chaining tasks for RAG, interactive dashboards, or web scraping
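The agentic pattern behind these integrations is a loop: the model requests a tool call, the client executes it, and the result goes back into the conversation until the model answers in plain text. The sketch below assumes OpenAI-style chat messages with `tool_calls`, the format an OpenAI-compatible endpoint uses. `fake_model` is a hypothetical scripted stand-in for the real API call and `get_open_issues` is a hypothetical tool, so the loop runs offline.

```python
import json


# Hypothetical tool; in practice it would wrap a REST/GraphQL API, Git, etc.
def get_open_issues(repo: str) -> list:
    return [{"id": 1, "title": "Crash on empty input"},
            {"id": 2, "title": "Typo in README"}]


TOOLS = {"get_open_issues": get_open_issues}


def fake_model(messages):
    """Hypothetical stand-in for a chat-completions call.

    Returns scripted assistant messages in the OpenAI tool-calling shape.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"role": "assistant", "content": None, "tool_calls": [{
            "id": "call_1", "type": "function",
            "function": {"name": "get_open_issues",
                         "arguments": json.dumps({"repo": "example/repo"})}}]}
    issues = json.loads(tool_results[-1]["content"])
    return {"role": "assistant", "content": f"There are {len(issues)} open issues."}


def run_agent(user_prompt, model=fake_model, max_steps=5):
    """Alternate model turns and tool executions until the model stops calling tools."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append(reply)
        calls = reply.get("tool_calls") or []
        if not calls:
            return reply["content"], messages
        for call in calls:
            fn = TOOLS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])
            result = fn(**args)
            # Feed the tool output back, linked to the call that requested it.
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(result)})
    raise RuntimeError("agent did not finish within max_steps")


answer, transcript = run_agent("How many open issues does example/repo have?")
print(answer)  # There are 2 open issues.
```

Chaining tasks for RAG or scraping follows the same loop with more tools registered in `TOOLS`; the `max_steps` cap keeps a confused agent from looping forever.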

Technical comparison with other models (for development tasks)

| Benchmark | Kimi K2 | GPT-4.1 | Claude Sonnet 4 | DeepSeek V3 | Qwen3-235B |
|---|---|---|---|---|---|
| LiveCodeBench v6 | 53.7% | 44.7% | 48.5% | 46.9% | 37.0% |
| OJBench | 27.1% | 19.5% | 15.3% | 24.0% | 11.3% |
| SWE-bench Verified (agentless) | 51.8% | 40.8% | 50.2% | 36.6% | 39.4% |
| MATH-500 | 97.4% | 92.4% | 94.0% | 94.0% | 91.2% |
| TerminalBench | 30.0% | 8.3% | 35.5% | N/A | N/A |

Deployment and integration

Kimi K2 can be accessed via:

- Web or mobile app (kimi.com)

- REST API compatible with OpenAI and Anthropic (ideal for chatbots, RAG, copilots)

- Self-hosted with engines such as:

  - vLLM

  - SGLang

  - KTransformers

  - TensorRT-LLM
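Because the API follows the OpenAI chat-completions format, any HTTP client can call it. The sketch below builds the request using only Python's standard library. The base URL `https://api.moonshot.ai/v1`, the model id `kimi-k2-0711-preview`, the `MOONSHOT_API_KEY` environment variable, and the default temperature are assumptions to check against the documentation on platform.moonshot.ai.

```python
import json
import os
import urllib.request

# Assumed values; confirm them on platform.moonshot.ai before use.
BASE_URL = "https://api.moonshot.ai/v1"
MODEL = "kimi-k2-0711-preview"


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL, temperature=0.6):
    """Build an OpenAI-style /chat/completions POST request (not sent yet)."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,  # assumed default, tune as needed
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's text reply."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


req = build_chat_request(
    "Write a bash one-liner that counts ERROR lines in app.log",
    api_key=os.environ.get("MOONSHOT_API_KEY", "sk-placeholder"),
)
# print(send(req))  # requires network access and a valid API key
```

vLLM and SGLang can also expose OpenAI-compatible endpoints, so pointing `base_url` at a self-hosted server should let the same code target a local deployment. The model name there depends on how the server was launched.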

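Moonshot AI has also released the model weights, both the base model (Kimi-K2-Base) and the instruction-tuned model (Kimi-K2-Instruct), on Hugging Face, with code and documentation on GitHub, for research, fine-tuning, and RLHF training with proprietary data.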
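A quick sizing calculation shows why the choice of self-hosting engine matters. The sketch below estimates weight memory only (no KV cache or activations) for 1 trillion parameters at a few precisions, plus the GPU count for a pure-GPU deployment. The 80 GiB per GPU and the 90% usable-memory fraction are illustrative assumptions.

```python
import math


def weight_memory_gib(total_params, bytes_per_param):
    """Memory needed just to store the weights, in GiB (excludes KV cache and activations)."""
    return total_params * bytes_per_param / 2**30


def min_gpus(total_params, bytes_per_param, gpu_mem_gib, usable_fraction=0.9):
    """Smallest number of GPUs whose combined usable memory holds the weights."""
    usable_per_gpu = gpu_mem_gib * usable_fraction
    return math.ceil(weight_memory_gib(total_params, bytes_per_param) / usable_per_gpu)


TOTAL_PARAMS = 1e12  # Kimi K2 total parameters; every expert must be resident
for label, bpp in [("BF16", 2.0), ("FP8", 1.0), ("4-bit", 0.5)]:
    gib = weight_memory_gib(TOTAL_PARAMS, bpp)
    gpus = min_gpus(TOTAL_PARAMS, bpp, gpu_mem_gib=80)
    print(f"{label:>5}: {gib:6.0f} GiB of weights -> at least {gpus} x 80 GiB GPUs")
```

At FP8 this comes to roughly 931 GiB, or at least 13 GPUs with 80 GiB each before any KV cache is allocated. Engines such as KTransformers reduce the GPU requirement by keeping most experts in system RAM instead.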

Why is this relevant for CTOs and engineering leaders?

- Open license (a modified MIT license) that covers internal use, POCs, and commercial deployment

- Technological independence from providers like OpenAI, Google, or Anthropic

- Cost-effective performance for on-premises deployments, given sufficient GPU or system memory

- Rapid integration into existing pipelines via API compatibility

- Use as a base model for private or domain-specific copilots

Current limitations

- No visual capabilities (no image input or OCR)

- No dedicated extended-reasoning (“thinking”) mode like Claude's extended thinking or OpenAI's o-series models

- Can struggle with long prompts, and may produce truncated outputs or incomplete tool calls when the invoked tools are not well defined

- In large software projects, performance is significantly better when the model runs inside an agentic framework than with one-shot, single-prompt use
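Since truncated or incomplete tool calls are more likely when tools are loosely specified, it helps to define each tool tightly and to validate the arguments the model returns before executing anything. The sketch below shows one way to do that under our own assumptions: `search_logs` is a hypothetical tool, the definition uses the OpenAI-style function-calling format that an OpenAI-compatible endpoint accepts, and the validator is a deliberately minimal stand-in for a full JSON Schema library.

```python
import json

# Hypothetical tool in the OpenAI-style "tools" format: a precise description,
# typed parameters, an enum for closed choices, bounds, and explicit required fields.
SEARCH_LOGS_TOOL = {
    "type": "function",
    "function": {
        "name": "search_logs",
        "description": "Return at most `limit` log lines from one service at the given level.",
        "parameters": {
            "type": "object",
            "properties": {
                "service": {"type": "string", "description": "Service name, e.g. 'api'."},
                "level": {"type": "string", "enum": ["ERROR", "WARN", "INFO"]},
                "limit": {"type": "integer", "minimum": 1, "maximum": 200},
            },
            "required": ["service", "level"],
            "additionalProperties": False,
        },
    },
}

_PY_TYPES = {"string": str, "integer": int}


def validate_arguments(tool, raw_arguments):
    """Check a model-produced arguments string against the tool's schema.

    Handles only the schema keywords used above; a production system would
    use a full JSON Schema validator instead. Returns a list of error strings.
    """
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        # Typical symptom of a truncated tool call.
        return [f"arguments are not valid JSON ({exc.msg})"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]

    schema = tool["function"]["parameters"]
    props = schema["properties"]
    errors = [f"missing required field '{k}'" for k in schema["required"] if k not in args]
    for key, value in args.items():
        if key not in props:
            if not schema.get("additionalProperties", True):
                errors.append(f"unexpected field '{key}'")
            continue
        spec = props[key]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(value, _PY_TYPES[spec["type"]]) or isinstance(value, bool):
            errors.append(f"'{key}' should be of type {spec['type']}")
            continue
        if "enum" in spec and value not in spec["enum"]:
            errors.append(f"'{key}' must be one of {spec['enum']}")
        if "minimum" in spec and value < spec["minimum"]:
            errors.append(f"'{key}' must be >= {spec['minimum']}")
        if "maximum" in spec and value > spec["maximum"]:
            errors.append(f"'{key}' must be <= {spec['maximum']}")
    return errors


print(validate_arguments(SEARCH_LOGS_TOOL, '{"service": "api", "level": "ERROR", "limit": 50}'))  # []
print(validate_arguments(SEARCH_LOGS_TOOL, '{"service": "api", "level": "ERR'))  # truncated call
```

When validation fails, the error list can be returned to the model as the tool result so it can retry with corrected arguments, instead of executing a malformed call.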

Conclusion

Kimi K2 stands out as one of the most versatile and technically robust language models for 2025, tailored for developers and AI architects. Its combination of efficiency, practical capabilities, open-source support, and agentic focus makes it ideal for both enterprise environments and tech innovation labs.

For CTOs and programmers seeking a powerful, controllable alternative to closed models, Kimi K2 offers not just parity in performance but control, efficiency, and the flexibility to evolve on your terms.

More information at: platform.moonshot.ai | GitHub: MoonshotAI

Source: Kimi K2 AI