The new validated architectures for the enterprise AI Factory integrate liquid cooling, high-density racks, and full support for scalable intelligent agents.

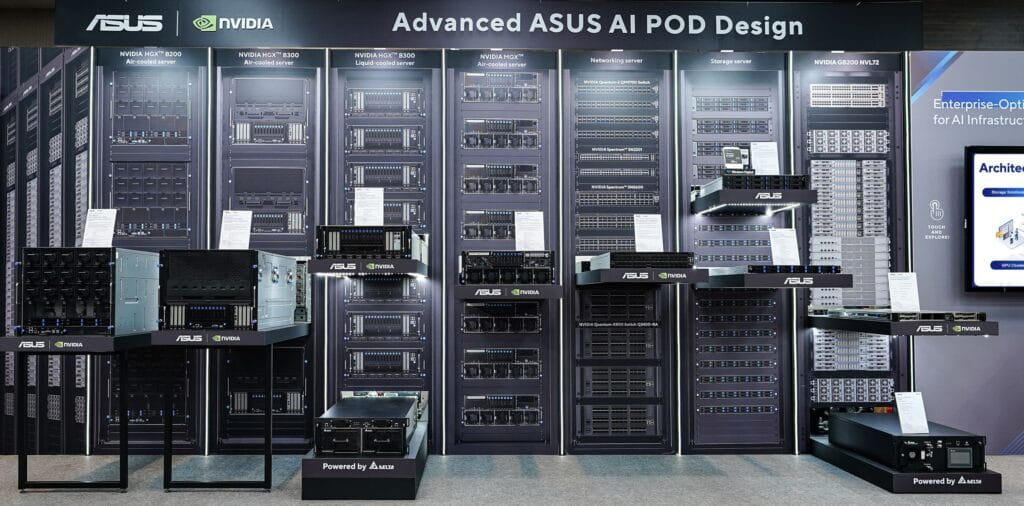

Today at COMPUTEX 2025, ASUS introduced its new advanced infrastructure for artificial intelligence (AI), developed in partnership with NVIDIA. Under the name AI POD, the company is launching a validated reference architecture for the NVIDIA Enterprise AI Factory, providing optimized and scalable solutions for AI agents, physical AI, and HPC workloads.

These new designs are certified to operate with NVIDIA’s Grace Blackwell, HGX, and MGX platforms and are available in both liquid-cooled and air-cooled data center configurations, giving companies that want to deploy AI at scale flexibility in how they balance performance and thermal efficiency.

Reference Architecture for the AI Factory

The ASUS proposal offers a validated enterprise reference architecture, aimed at simplifying the development, deployment, and management of advanced AI applications in on-premises environments. This solution includes:

- Accelerated computing with NVIDIA GB200/GB300 NVL72 rack-scale systems

- High-performance networks with NVIDIA Quantum InfiniBand or Spectrum-X Ethernet

- Certified storage with ASUS RS501A-E12-RS12U and VS320D systems

- Enterprise software via NVIDIA AI Enterprise and ASUS management tools

Standout configurations include a liquid-cooled cluster of 576 GPUs distributed across eight racks and a compact air-cooled version with 72 GPUs in a single rack. The design targets workloads such as large language model (LLM) training, large-scale inference, and intelligent agent reasoning.
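The rack arithmetic behind these two configurations can be checked with a short sketch. The function name is illustrative, and the even split of GPUs across racks is an assumption inferred from the figures above, not an ASUS specification.

```python
def gpus_per_rack(total_gpus: int, racks: int) -> int:
    """Return GPUs per rack, assuming GPUs are spread evenly across racks."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    if total_gpus % racks != 0:
        raise ValueError("GPUs do not divide evenly across racks")
    return total_gpus // racks


# Figures quoted in the article: 576 GPUs over eight liquid-cooled racks,
# and 72 GPUs in a single air-cooled rack.
liquid_cooled = gpus_per_rack(576, 8)
air_cooled = gpus_per_rack(72, 1)

print(liquid_cooled, air_cooled)  # 72 72
```

Under that assumption, both configurations work out to 72 GPUs per rack, which is consistent with the 72 in the NVL72 name.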

MGX and HGX Racks Optimized for Next-Generation AI

ASUS has revealed new MGX-compliant configurations in its ESC8000 series, equipped with Intel Xeon 6 processors and NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs and integrated with the NVIDIA ConnectX-8 SuperNIC, which supports speeds of up to 800 Gb/s. These platforms are built for content generation, generative AI, and immersive AI tasks, with room for expansion.
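To put the 800 Gb/s figure in perspective, the sketch below converts the link speed from gigabits to gigabytes per second and estimates an ideal transfer time. The 1 TB payload is a hypothetical example, and the calculation ignores protocol overhead and congestion, so real transfers would take longer.

```python
def link_gigabytes_per_second(gigabits_per_second: float) -> float:
    """Convert a link speed from gigabits/s to gigabytes/s (8 bits per byte)."""
    return gigabits_per_second / 8


def ideal_transfer_seconds(payload_gigabytes: float, gigabits_per_second: float) -> float:
    """Best-case transfer time, ignoring protocol overhead and congestion."""
    return payload_gigabytes / link_gigabytes_per_second(gigabits_per_second)


LINK_GBPS = 800      # peak speed quoted in the article
PAYLOAD_GB = 1000    # hypothetical 1 TB payload (decimal units), for illustration only

print(link_gigabytes_per_second(LINK_GBPS))           # 100.0
print(ideal_transfer_seconds(PAYLOAD_GB, LINK_GBPS))  # 10.0
```

At the quoted peak rate, a single link could in principle move about 100 GB every second, so the hypothetical 1 TB payload would take roughly ten seconds in the best case.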

The HGX racks optimized by ASUS (XA NB3I-E12 with HGX B300 and ESC NB8-E11 with HGX B200) provide high GPU density and strong thermal efficiency in both liquid-cooled and air-cooled configurations. These solutions focus on workloads such as model fine-tuning, LLM training, and continuous deployment of AI agents.

Infrastructure for Autonomous AI Agents

Integrated with the latest innovations in agentic AI, ASUS’s infrastructure is aimed at AI models capable of making autonomous decisions and learning in real time, enabling advanced business applications across multiple industries.

To achieve this, ASUS includes tools such as:

- ASUS Control Center – Data Center Edition and ASUS Infrastructure Deployment Center (AIDC), to facilitate the deployment of models

- WEKA Parallel File System and ProGuard SAN Storage, for data management at speed and scale

- Advanced SLURM management and NVIDIA UFM for InfiniBand networks, optimizing resource utilization

A Step Closer to the AI Factory

According to ASUS, its goal is to position itself as a strategic partner in the creation of AI factories on a global scale, combining validated hardware, orchestrated software, and enterprise support. These solutions are designed to reduce deployment time, lower total cost of ownership (TCO), and make scaling smoother and more secure.

With these designs, ASUS and NVIDIA aim to set a new standard for the intelligent data centers of the future, focusing on the large-scale adoption of next-generation AI and the evolution toward autonomous agents within enterprise infrastructure.