In a landscape dominated by names like OpenAI, Google DeepMind, and Anthropic, a new Chinese contender has entered the competitive field of generative artificial intelligence with force. MAGI-1 is a large-scale autoregressive video generation model developed by Sand AI, and it aims to raise the bar for what diffusion models can achieve in video synthesis.

With 24 billion parameters, an optimized Transformer-based architecture, and a fully open-source release, MAGI-1 not only matches but, in several respects, surpasses commercial models such as Sora and Kling, according to recent technical evaluations and the company's own benchmarks.

A Distinct Technical Approach: Chunk-by-Chunk Generation and Autoregressive Architecture

Unlike other AI video generators that process a video as one complete sequence, MAGI-1 takes a chunk-by-chunk approach. It divides the video into segments of 24 frames and generates them sequentially and autoregressively, with each chunk conditioned on the chunks before it, so temporal consistency is preserved from the start of the video to the end.

This design enables:

- Natural scalability for streaming and real-time synthesis.

- Greater visual coherence between scenes.

- Parallel processing of multiple chunks during inference, since the next chunk can start before the current one is fully denoised.
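The chunking, and the way chunks can overlap during inference, can be illustrated with a small Python sketch. It assumes a simple, hypothetical rule in which chunk i starts a fixed number of denoising steps (`stagger`) after chunk i-1; the function names and parameters are illustrative and are not taken from Sand AI's code. The sketch only models timing, not how each chunk is conditioned on earlier ones.

```python
from typing import List, Tuple

CHUNK_SIZE = 24  # frames per chunk, as described for MAGI-1


def split_into_chunks(num_frames: int, chunk_size: int = CHUNK_SIZE) -> List[Tuple[int, int]]:
    """Return [start, end) frame ranges; the last chunk may be shorter."""
    return [(s, min(s + chunk_size, num_frames)) for s in range(0, num_frames, chunk_size)]


def pipelined_schedule(num_chunks: int, steps_per_chunk: int, stagger: int) -> List[List[int]]:
    """For each global denoising step, list the chunk indices being processed.

    Hypothetical rule: chunk i starts `stagger` steps after chunk i-1, so
    several chunks overlap in time instead of running strictly one by one.
    """
    total_steps = (num_chunks - 1) * stagger + steps_per_chunk
    schedule = []
    for step in range(total_steps):
        active = [i for i in range(num_chunks)
                  if i * stagger <= step < i * stagger + steps_per_chunk]
        schedule.append(active)
    return schedule


if __name__ == "__main__":
    print(split_into_chunks(60))        # [(0, 24), (24, 48), (48, 60)]
    print(pipelined_schedule(3, 4, 2))  # [[0], [0], [0, 1], [0, 1], [1, 2], [1, 2], [2], [2]]
```

With a small `stagger` relative to `steps_per_chunk`, more chunks are in flight at once; a strictly sequential generator corresponds to `stagger == steps_per_chunk`.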

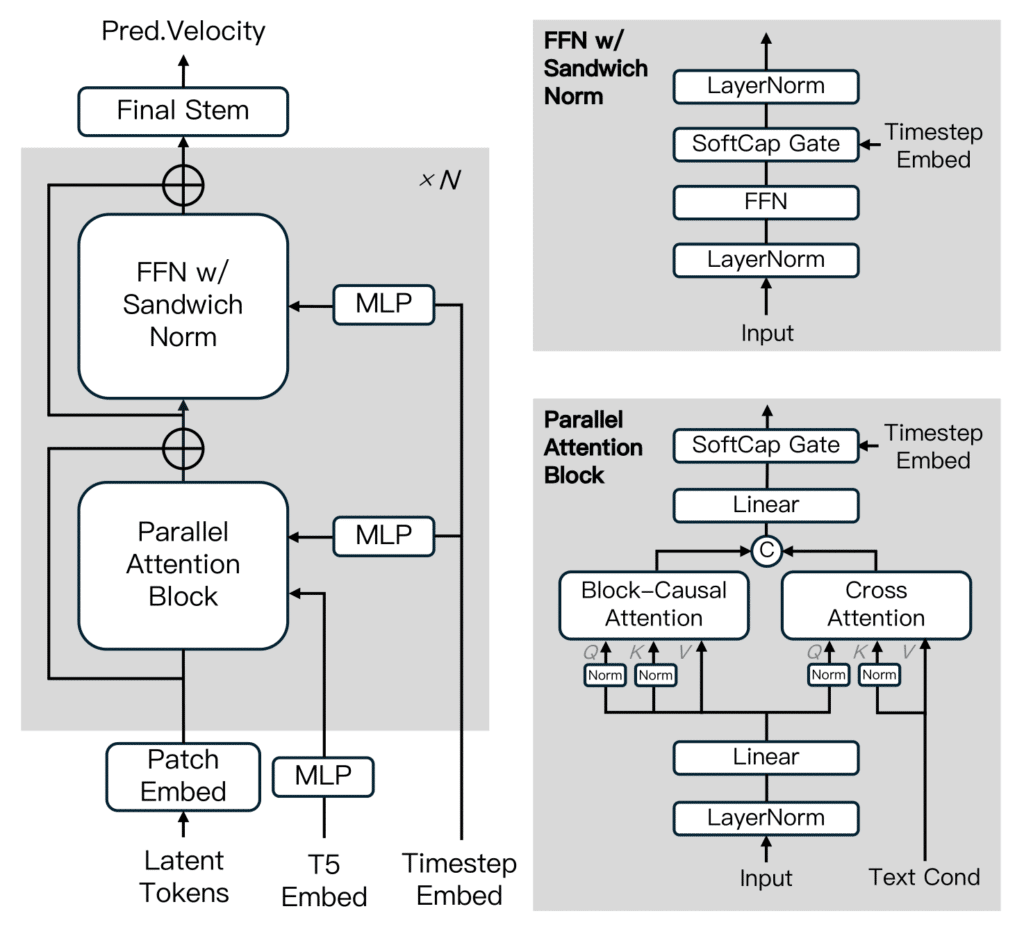

The architecture is a Transformer-based diffusion model with several improvements, including Block-Causal Attention, QK-Norm, Sandwich Normalization, and Softcap Modulation. During training, noise is also applied progressively over time, with the noise level increasing from earlier chunks to later ones, which teaches the model the causal relationships between frames.
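A minimal NumPy sketch of two ideas from the paragraph above: a block-causal attention mask (full attention within a chunk, causal attention across chunks) and per-chunk noise levels that never decrease along the video. The helper names are hypothetical, and sorting uniform draws is only one illustrative way to obtain a monotonic schedule, not necessarily the one used to train MAGI-1.

```python
import numpy as np


def block_causal_mask(num_chunks: int, tokens_per_chunk: int) -> np.ndarray:
    """Boolean mask where True means "query token may attend to key token".

    Tokens attend bidirectionally within their own chunk and to every token
    in earlier chunks, but never to tokens in later chunks.
    """
    chunk_id = np.repeat(np.arange(num_chunks), tokens_per_chunk)
    # mask[q, k] is True iff chunk(k) <= chunk(q).
    return chunk_id[None, :] <= chunk_id[:, None]


def chunk_noise_levels(num_chunks: int, rng: np.random.Generator) -> np.ndarray:
    """One noise level in [0, 1) per chunk, non-decreasing with chunk index.

    Illustrative assumption: sort independent uniform draws.
    """
    return np.sort(rng.uniform(0.0, 1.0, size=num_chunks))


if __name__ == "__main__":
    print(block_causal_mask(3, 2).astype(int))
    print(chunk_noise_levels(4, np.random.default_rng(0)))
```

In a full model, positions where the mask is False would have their attention scores set to negative infinity before the softmax, so later chunks cannot leak information into earlier ones.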

Outstanding Performance Against Open and Commercial Models

MAGI-1 has been assessed through both human evaluations and automated benchmarks. In terms of motion fidelity, instruction following, and semantic continuity, it clearly surpasses open models like Wan-2.1 and HunyuanVideo and competes head-to-head with closed solutions such as Sora, Kling, and Hailuo, as well as Google's VideoPoet.

In the Physics-IQ benchmark, which measures a model's ability to understand and predict physical behavior in dynamic scenes, MAGI-1 achieves the top score thanks to its autoregressive nature, outperforming every other model in the comparison on both spatial and spatiotemporal accuracy.

Highlighted Physics-IQ results (I2V):

| Model | Physics-IQ Score ↑ | Spatial IoU ↑ | Spatiotemporal IoU ↑ | MSE ↓ |
|---|---|---|---|---|
| MAGI-1 (I2V) | 30.23 | 0.203 | 0.151 | 0.012 |
| Kling 1.6 | 23.64 | 0.197 | 0.086 | 0.025 |
| VideoPoet | 20.30 | 0.141 | 0.126 | 0.012 |
| Sora | 10.00 | 0.138 | 0.047 | 0.030 |
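To check which model leads each column, the scores in the table can be placed in a dictionary and compared per metric, taking the maximum where higher is better (↑) and the minimum for MSE (↓). The dictionary keys are shorthand chosen for this sketch.

```python
from typing import Dict, List

# I2V scores copied from the table; keys are shorthand for the four metric columns.
RESULTS: Dict[str, Dict[str, float]] = {
    "MAGI-1 (I2V)": {"physics_iq": 30.23, "spatial": 0.203, "spatiotemporal": 0.151, "mse": 0.012},
    "Kling 1.6":    {"physics_iq": 23.64, "spatial": 0.197, "spatiotemporal": 0.086, "mse": 0.025},
    "VideoPoet":    {"physics_iq": 20.30, "spatial": 0.141, "spatiotemporal": 0.126, "mse": 0.012},
    "Sora":         {"physics_iq": 10.00, "spatial": 0.138, "spatiotemporal": 0.047, "mse": 0.030},
}

# True where higher is better (up arrow), False for MSE (down arrow).
HIGHER_IS_BETTER = {"physics_iq": True, "spatial": True, "spatiotemporal": True, "mse": False}


def leaders(results: Dict[str, Dict[str, float]],
            higher_is_better: Dict[str, bool]) -> Dict[str, List[str]]:
    """Return, for each metric, the model(s) with the best score (ties kept)."""
    out: Dict[str, List[str]] = {}
    for metric, higher in higher_is_better.items():
        scores = {model: row[metric] for model, row in results.items()}
        best = max(scores.values()) if higher else min(scores.values())
        out[metric] = sorted(m for m, s in scores.items() if s == best)
    return out


if __name__ == "__main__":
    for metric, models in leaders(RESULTS, HIGHER_IS_BETTER).items():
        print(f"{metric}: {', '.join(models)}")
```

Run on the table's numbers, this shows MAGI-1 leading the three higher-is-better columns and tying VideoPoet on MSE.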

Narrative Control and Conditional Generation: A Strong Point

One of MAGI-1's most significant advances is chunk-wise prompting, which lets each chunk of the video follow its own prompt without losing overall coherence.

This makes it possible to:

- Define distinct events in different parts of the video.

- Apply smooth transitions between scenes.

- Perform real-time edits from text or images.
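Conceptually, chunk-wise prompting amounts to attaching a prompt to each 24-frame chunk. The sketch below expands a hypothetical list of `(prompt, num_chunks)` segments into one prompt per chunk and reports the first frame of each chunk where the prompt changes, which is where a scene transition would occur. The data layout and function names are assumptions for illustration, not MAGI-1's actual input format.

```python
from typing import List, Tuple

FRAMES_PER_CHUNK = 24  # chunk length described for MAGI-1


def expand_prompts(segments: List[Tuple[str, int]]) -> List[str]:
    """Turn hypothetical (prompt, num_chunks) segments into one prompt per chunk."""
    per_chunk: List[str] = []
    for prompt, num_chunks in segments:
        if num_chunks <= 0:
            raise ValueError("each segment must cover at least one chunk")
        per_chunk.extend([prompt] * num_chunks)
    return per_chunk


def transition_frames(per_chunk: List[str]) -> List[int]:
    """First frame index of every chunk whose prompt differs from the previous chunk's."""
    return [i * FRAMES_PER_CHUNK
            for i in range(1, len(per_chunk))
            if per_chunk[i] != per_chunk[i - 1]]


if __name__ == "__main__":
    prompts = expand_prompts([
        ("A cat sleeps on a sofa", 2),
        ("The cat wakes up and jumps down", 1),
        ("The cat walks out of the room", 2),
    ])
    print(len(prompts))                # 5
    print(transition_frames(prompts))  # [48, 72]
```

In an autoregressive generator, each chunk would then be produced from its own prompt plus the chunks already generated, which is what keeps the story continuous across the prompt changes.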

Additionally, the system supports t2v (text-to-video), i2v (image-to-video), and v2v (video continuation) tasks, making it suitable for a range of creative and commercial workflows.

Training, Distillation, and Performance

Sand AI has released both the full version of MAGI-1 (24B) and a lighter version (4.5B), as well as distilled and quantized versions optimized for use on RTX 4090 or H100/H800 GPUs.
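A back-of-the-envelope calculation shows why the variants target different GPUs. The sketch assumes 2 bytes per parameter for BF16 weights and 1 byte for FP8 (assumed precisions, not confirmed by the release notes), and it ignores activations, the text encoder, and the VAE, so it counts the weights alone.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# (label, parameter count, assumed bytes per parameter)
VARIANTS = [
    ("24B, BF16", 24e9, 2),
    ("24B, FP8", 24e9, 1),
    ("4.5B, BF16", 4.5e9, 2),
]

if __name__ == "__main__":
    for label, params, bpp in VARIANTS:
        print(f"{label}: {weight_memory_gb(params, bpp):.1f} GB")
    # 24B, BF16: 48.0 GB
    # 24B, FP8: 24.0 GB
    # 4.5B, BF16: 9.0 GB
```

Under these assumptions, the 24B weights alone exceed a single 24 GB RTX 4090 in BF16 and would roughly fill one even in FP8, which is why the large model is spread across several cards or run on 80 GB H100/H800 GPUs, while the 4.5B model leaves headroom on one consumer GPU.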

During training, Sand AI applied a shortcut distillation approach that lets a single model run with a variable number of sampling steps, together with distillation of classifier-free guidance. This keeps outputs faithful to the prompt while making inference much faster with minimal quality loss.
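Classifier-free guidance (CFG) is the standard way diffusion models strengthen prompt adherence: at each denoising step the model is run with and without the prompt, and the two predictions are extrapolated. Below is a minimal NumPy sketch of that combination rule; the function name is hypothetical. Because plain CFG needs two forward passes per step, distilling it into a model that produces the guided prediction in a single pass is a common way to speed up inference.

```python
import numpy as np


def classifier_free_guidance(pred_uncond: np.ndarray,
                             pred_cond: np.ndarray,
                             scale: float) -> np.ndarray:
    """Combine unconditional and prompt-conditioned predictions.

    scale = 0 returns the unconditional prediction, scale = 1 the conditional
    one, and scale > 1 pushes further in the direction the prompt suggests.
    """
    return pred_uncond + scale * (pred_cond - pred_uncond)


if __name__ == "__main__":
    uncond = np.array([0.0, 1.0])
    cond = np.array([1.0, 1.0])
    print(classifier_free_guidance(uncond, cond, 3.0))  # [3. 1.]
```

Only the components where the two predictions disagree are amplified; where they agree (the second entry here), guidance leaves the value unchanged.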

Open Source and the Democratization of AI Video

Unlike other industry leaders, Sand AI has chosen a philosophy of total openness: the model, inference code, configurations, and documentation are all available on GitHub under the Apache 2.0 license, with support for Docker and Conda.

This strategy reduces barriers to entry for researchers, creators, and startups looking to experiment with realistic video generation without relying on closed commercial APIs.

The New Standard in AI Video?

If DeepSeek set the pace in open-source language models, MAGI-1 seems poised to do the same for video. Backing from figures like Kai-Fu Lee (founding director of Microsoft Research Asia) and the growing interest in the GitHub repository suggest that this is not just another experiment but a serious bid to compete on a global scale.

"👀 You won’t believe this is AI-generated

🧠 You won’t believe it’s open-source

🎬 You won’t believe it’s FREE" – MAGI-1 video model just humiliated commercial video tools.

MAGI-1 is more than a generative AI model: it is powerful, flexible, and free visual infrastructure, arriving just as video generation appears to be the next major battleground in artificial intelligence. Compared with closed offerings like Sora or Gemini, MAGI-1's transparency and technical quality could make it the new benchmark for open-source visual generation.

Source: Artificial Intelligence News