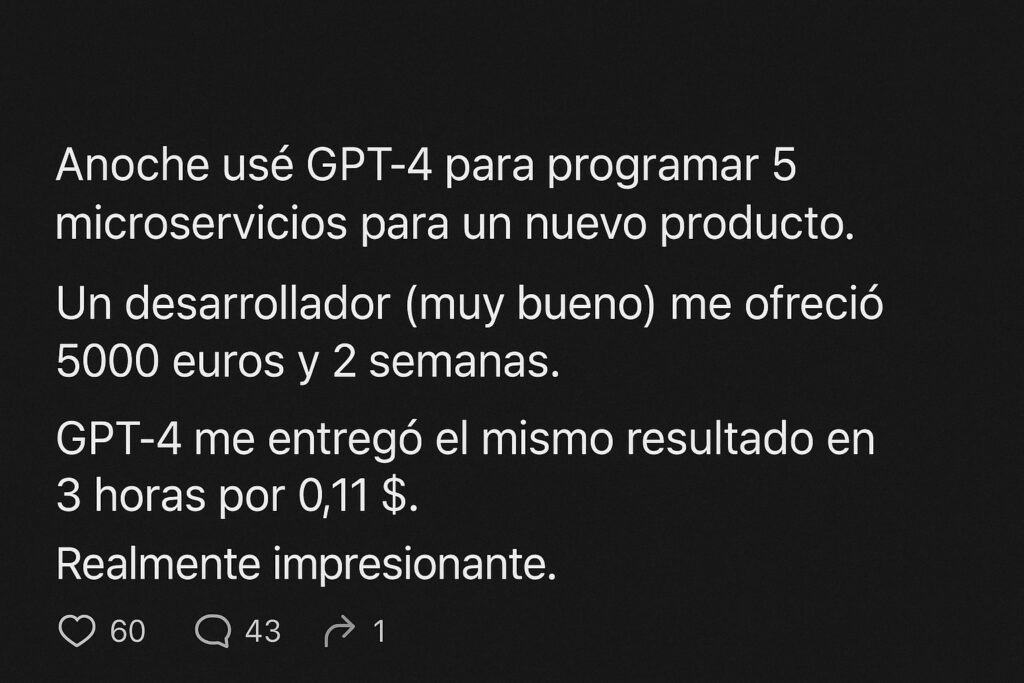

In a recent social media post, a user celebrated using GPT-4 to program five microservices for a new product in just three hours, at a cost of $0.11. By comparison, a human developer, described as “very good”, had offered to do the same job for €5,000 with a two-week deadline. Many initially reacted with amazement and excitement: is this the end of traditional development?

However, the critical response came quickly. Alongside the post, another professional shared a damning list of more than 30 technical questions that are rarely raised in this kind of comparison. Those questions reveal an uncomfortable truth: code is not just functionality. It also involves security, context, maintenance, and accountability.

How much does “free code” really cost?

In the real world, writing code that “works” is not enough. It’s just the beginning. A microservice, no matter how minimal, must pass a battery of checks if it is to meet the standards of a serious production environment:

- Does it properly validate inputs? Or could it crash the server with a malformed request?

- Does it comply with privacy regulations like GDPR?

- Does it have useful logs and alert configurations? Can it be monitored and debugged if something goes wrong?

- Is it protected against injections (SQL, commands, etc.)?

- What would the impact be if that microservice failed for an entire day?

- Who will maintain it in six months? Does it have documentation? Is it versioned?

These questions are not rhetorical. They are what separates “lab code” from a professional solution that can scale, be maintained, and stand the test of time.
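
To make a few of these checks concrete, here is a minimal sketch in Python covering three items from the list: input validation, protection against SQL injection, and logging. It is an illustration under stated assumptions, not the code from the original post: the `orders` table, the `customer` field, the `orders_service` logger name, and the 64-character limit are all hypothetical.

```python
import logging
import sqlite3

# Hypothetical service name; a real service would also configure handlers and alerts.
logger = logging.getLogger("orders_service")

MAX_NAME_LENGTH = 64  # assumed limit, chosen only for this example


class ValidationError(ValueError):
    """Raised when a request payload fails validation."""


def validate_payload(payload):
    """Return the cleaned customer name, or raise ValidationError."""
    if not isinstance(payload, dict):
        raise ValidationError("payload must be a JSON object")
    name = payload.get("customer")
    if not isinstance(name, str) or not name.strip():
        raise ValidationError("'customer' must be a non-empty string")
    name = name.strip()
    if len(name) > MAX_NAME_LENGTH:
        raise ValidationError("'customer' is too long")
    return name


def find_orders(conn, payload):
    """Look up a customer's orders with a parameterized query."""
    try:
        name = validate_payload(payload)
    except ValidationError as exc:
        # A malformed request is rejected and logged instead of crashing the service.
        logger.warning("rejected request: %s", exc)
        return {"status": 400, "error": str(exc)}
    # The "?" placeholder passes the value separately from the SQL text,
    # so user input is never interpreted as SQL.
    rows = conn.execute(
        "SELECT id, item FROM orders WHERE customer = ?", (name,)
    ).fetchall()
    # Log the row count only; the customer name is personal data.
    logger.info("returned %d orders", len(rows))
    return {"status": 200, "orders": [{"id": r[0], "item": r[1]} for r in rows]}


def make_demo_db():
    """Build a throwaway in-memory database for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, item TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders (customer, item) VALUES (?, ?)",
        [("alice", "keyboard"), ("bob", "monitor")],
    )
    return conn
```

Calling `find_orders(make_demo_db(), {"customer": "' OR '1'='1"})` returns an empty order list rather than every row, because the injection attempt is treated as a literal name. Even this small sketch leaves most of the list unanswered: documentation, versioning, monitoring, and the cost of a day-long outage still need a human decision.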

GPT-4 is a tool, not a replacement

Generative AI can write useful, even excellent, code in the hands of experts. But that result is not free: it requires understanding, validation, testing, integration, and follow-up. The cost is not only in the writing time, but in everything that comes after: quality assurance, security, maintainability, legal compliance, and technical sustainability.

Thinking GPT-4 can completely replace a developer is as naive as assuming a calculator can replace an engineer.

Technical debt: the hidden cost of shortcuts

Adopting AI-generated solutions without oversight may seem like a short-term saving, but it opens the door to technical debt, security failures, and a dangerous dependency on code that is not understood. In production, code that no one on the team can explain is a standing risk. What happens if it fails and no one knows how to fix it?

This type of situation is not a remote hypothesis: it happens every day in companies that have prioritized speed over reliability.

Conclusion: automate, yes; abdicate, no

Automating part of the work with AI is an extraordinary opportunity, but it cannot become an excuse to abandon the basic principles of professional development. A developer who uses GPT-4 to speed up repetitive tasks and focus on high-level decisions is being efficient. Those who copy and paste without understanding what they are doing are playing with fire.

Artificial intelligence is not here to replace professionals, but to empower them. Like any powerful tool, though, it requires judgment, responsibility, and technical knowledge to keep it from backfiring.

References: LinkedIn