Google has taken another step forward in the evolution of artificial intelligence with the launch of Gemma 3, a new collection of open models designed to run efficiently on a single GPU or TPU. With optimized performance, a context window of up to 128,000 tokens, and support for over 140 languages, Gemma 3 is positioned by Google as its most advanced and accessible open model family for developers and businesses.

A model designed for efficiency and scalability

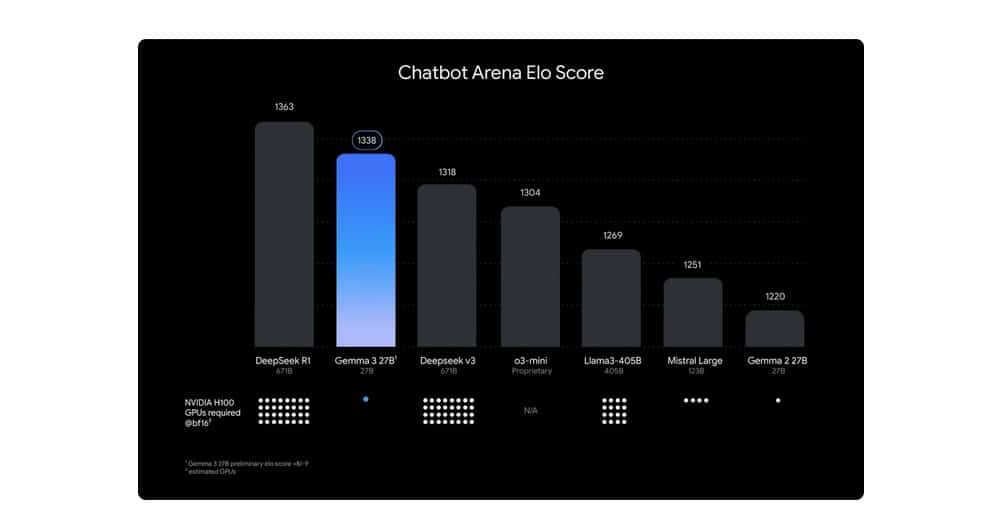

Unlike AI models that require expensive multi-accelerator infrastructure, Gemma 3 is built to deliver strong performance in resource-limited environments. According to Google, it outperforms models such as Llama 3 405B, DeepSeek-V3, and o3-mini in preliminary human preference evaluations on the LMArena leaderboard while running on a single accelerator, which makes it practical to deploy on local servers, workstations, and portable devices.

The improvements introduced in this version expand the model's generation and analysis capabilities, making it easier to build more sophisticated applications without increasing deployment costs.

Key innovations of Gemma 3

- Category-leading performance
  - Outperforms comparable models in efficiency and speed.
  - Runs on a single NVIDIA GPU, a single TPU, or AMD GPUs through the ROCm™ software stack.

- Advanced processing capabilities
  - Extended context window of up to 128,000 tokens, enough to handle large volumes of information in a single request.
  - Advanced reasoning over text, images, and short videos.

- Greater compatibility and flexibility
  - Support for over 140 languages.
  - Compatibility with popular development frameworks and libraries such as Hugging Face, TensorFlow, PyTorch, JAX, and Keras.
  - Integration with Google AI Studio, Kaggle, and Vertex AI for easier experimentation and customization.

- Optimization for diverse hardware
  - Quantized versions that reduce model size and resource consumption while maintaining high accuracy.
  - Enhanced performance on NVIDIA GPUs, Google Cloud TPUs, and CPUs via Gemma.cpp.
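To give a sense of why quantization matters for single-accelerator deployment, the following is a minimal back-of-the-envelope sketch. It assumes that weight memory is roughly the parameter count multiplied by the bits stored per weight; the 27-billion-parameter figure and the precision names are illustrative assumptions, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Rough estimate of the memory needed to hold model weights at
# different numeric precisions. This is a simplification: real
# deployments also need memory for activations and the KV cache.

# Assumed bit widths for common precisions (illustrative labels).
BITS_PER_WEIGHT = {"bf16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def footprint_table(num_params: float) -> dict:
    """Map each assumed precision to its estimated weight memory in GB."""
    return {
        name: weight_memory_gb(num_params, bits)
        for name, bits in BITS_PER_WEIGHT.items()
    }


if __name__ == "__main__":
    params = 27e9  # hypothetical 27-billion-parameter model
    for name, gb in footprint_table(params).items():
        print(f"{name}: ~{gb:.1f} GB")
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is the kind of reduction that can bring a large model within the memory of a single GPU. Actual savings and any accuracy impact depend on the quantization scheme used.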

A responsible approach to AI development

Google says it carried out rigorous safety assessments during the development of Gemma 3, with the aim of keeping the model aligned with ethical and regulatory standards. As part of this effort, it has also launched ShieldGemma 2, an image safety checker that detects sensitive content and labels it in three categories: dangerous content, sexually explicit material, and violence.

Expansion of the Gemmaverse ecosystem and support for research

The Gemma model ecosystem has grown rapidly, with over 100 million downloads and more than 60,000 variants created by the community. To encourage the development of new applications, Google has announced the Gemma 3 Academic Program, which offers up to $10,000 in Google Cloud credits to researchers looking to build on this model.

A new era for efficient and accessible AI

With Gemma 3, Google reinforces its commitment to more accessible, efficient, and powerful artificial intelligence, lowering barriers to entry for businesses, developers, and researchers. Thanks to its optimization for a range of hardware environments and its integration with leading AI tools, Gemma 3 marks a significant step in the democratization of artificial intelligence.

Now available on Google AI Studio, Hugging Face, and Vertex AI, Gemma 3 is ready to redefine the future of generative AI.