
In supercomputing and artificial intelligence (AI), the network plays a crucial role in system efficiency and scalability. InfiniBand HDR (High Data Rate) has become a leading choice for high-performance data centers thanks to its low latency, high bandwidth, and ability to offload data movement from the CPU. With growing demand for cloud computing, AI, and machine learning, InfiniBand continues to outperform Ethernet and other technologies in HPC (High-Performance Computing) environments.

InfiniBand: The Backbone of Supercomputing

InfiniBand is a network communication standard designed to provide ultra-fast, reliable interconnection in high-performance computing systems. Its use has grown significantly in recent years, making it a preferred choice in the world’s leading supercomputers. According to the Top500 list from November 2023, 189 systems are based on InfiniBand, and the technology is particularly prominent among the top 100.

Key advantages of InfiniBand in supercomputing:

- Extreme bandwidth: HDR reaches up to 200 Gbps and NDR up to 400 Gbps, allowing for smoother and faster communication between servers.

- Low latency: While Ethernet can have latencies of several microseconds, InfiniBand switches forward packets in the range of 100 nanoseconds, which keeps communication delays low for AI and HPC workloads.

- CPU offload: Its architecture moves data transfers off the CPU through RDMA (Remote Direct Memory Access) and GPUDirect technology, which gives the network adapter direct access to GPU memory without CPU intervention.

- Lossless network: Link-level flow control keeps senders from overrunning receiver buffers, so packets are not dropped under congestion and retransmissions are avoided, which improves data center performance.
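
The lossless behavior in the last point comes from link-level, credit-based flow control: a sender transmits only when the receiver has advertised free buffer space. The following is a toy Python sketch of that idea, not a model of real InfiniBand hardware. The class `CreditedLink`, the function `run`, and all buffer sizes and timings are hypothetical, chosen only for illustration.

```python
from collections import deque


class CreditedLink:
    """Toy link: the sender may transmit only while it holds credits."""

    def __init__(self, receiver_buffer_slots: int):
        if receiver_buffer_slots < 1:
            raise ValueError("receiver needs at least one buffer slot")
        self.capacity = receiver_buffer_slots
        self.credits = receiver_buffer_slots  # free receive slots known to the sender
        self.rx_buffer = deque()

    def try_send(self, packet) -> bool:
        """Send one packet if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False  # nothing goes on the wire, so nothing can be dropped
        self.credits -= 1
        self.rx_buffer.append(packet)
        return True

    def receiver_drain(self) -> list:
        """Receiver consumes one packet (if any) and returns a credit to the sender."""
        if not self.rx_buffer:
            return []
        self.credits += 1
        return [self.rx_buffer.popleft()]


def run(total_packets: int, buffer_slots: int, drain_every: int):
    """Sender offers one packet per tick; receiver drains one packet every `drain_every` ticks."""
    link = CreditedLink(buffer_slots)
    pending = deque(range(total_packets))
    delivered, stalls, tick = [], 0, 0
    while pending or link.rx_buffer:
        tick += 1
        if pending:
            if link.try_send(pending[0]):
                pending.popleft()
            else:
                stalls += 1  # back-pressure: the sender is paused, not the packet lost
        if tick % drain_every == 0:
            delivered.extend(link.receiver_drain())
    return delivered, stalls


delivered, stalls = run(total_packets=20, buffer_slots=4, drain_every=3)
print(f"delivered {len(delivered)} packets in order, sender stalled {stalls} times")
```

Even though the sender in this sketch is three times faster than the receiver, every packet arrives and arrives in order; the cost of congestion shows up as sender stalls rather than as drops and retransmissions.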

The Latest Innovations: HDR and NDR

Data transfer speeds in InfiniBand have evolved rapidly. Currently, HDR is the dominant technology in high-performance data centers, while NDR (Next Data Rate) with 400 Gbps is gaining traction in advanced infrastructures. The next generation, XDR (Extreme Data Rate), will reach 800 Gbps, further enhancing real-time data processing capabilities.

| Technology | Maximum Speed | Main Application |
|---|---|---|
| SDR | 8 Gbps | Basic HPC |
| DDR | 16 Gbps | Intermediate HPC networks |
| QDR | 40 Gbps | Distributed computing |
| FDR | 56 Gbps | AI clusters |
| EDR | 100 Gbps | Advanced enterprise networks |
| HDR | 200 Gbps | Data centers and supercomputing |
| NDR | 400 Gbps | Large-scale AI infrastructures |
| XDR | 800 Gbps | Next-gen supercomputing |
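
To make the jump between generations concrete, the sketch below computes the ideal time to move a fixed payload over a single link at the EDR, HDR, NDR, and XDR rates listed above. The 1 TB payload and the helper name `transfer_seconds` are assumptions for illustration, and the result is a lower bound: real transfers also pay for encoding, protocol overhead, and congestion.

```python
# Nominal link rates in Gbps for the four most recent generations discussed above.
LINK_RATES_GBPS = {"EDR": 100, "HDR": 200, "NDR": 400, "XDR": 800}

# Hypothetical payload: 1 TB (10**12 bytes), e.g. a large model checkpoint.
PAYLOAD_BYTES = 1e12


def transfer_seconds(payload_bytes: float, link_gbps: float) -> float:
    """Ideal time to push payload_bytes through one link at its nominal rate."""
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9)


times = {
    name: transfer_seconds(PAYLOAD_BYTES, rate)
    for name, rate in LINK_RATES_GBPS.items()
}

for name, seconds in times.items():
    print(f"{name}: {seconds:.0f} s")
# EDR: 80 s
# HDR: 40 s
# NDR: 20 s
# XDR: 10 s
```

Each generation halves the ideal transfer time for the same payload, which is why link speed matters most for bulk operations such as checkpointing and dataset staging.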

InfiniBand vs. Ethernet: Which is the Better Option?

While Ethernet remains the standard network in traditional data centers, its performance in HPC and AI applications is limited by higher CPU consumption and greater latency. InfiniBand, on the other hand, has been designed to handle large volumes of data with minimal latency and efficient resource utilization.

Technical comparison between InfiniBand and Ethernet:

| Feature | InfiniBand | Ethernet |
|---|---|---|
| Bandwidth | Up to 400 Gbps (NDR) | Up to 400 Gbps |
| Latency | Less than 100 ns | 2-10 μs |
| CPU consumption | Low (RDMA) | Higher (TCP/IP) |
| Reliability | Lossless network | Possible packet loss |
| Network Management | Subnet Manager (integrated SDN) | VLAN, STP, ARP |
| Cost | High | Low |
| Applications | HPC, AI, Supercomputing Clusters | Enterprise networks, Cloud, Virtualization |

The most significant differences lie in latency and CPU consumption. InfiniBand interconnects server clusters with latencies well below a microsecond, which is essential for AI and large-scale machine learning model training, where nodes exchange many small messages at every step. Ethernet, while less expensive and more flexible, struggles to match InfiniBand’s performance in HPC.
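
Why latency matters even when both technologies offer the same bandwidth can be seen with the common latency-plus-bandwidth cost model, in which sending a message takes `latency + size / bandwidth`. The sketch below plugs in the figures quoted in the comparison (about 100 ns versus the 2 μs lower bound for Ethernet, both at 400 Gbps). It is a simplified model under those assumptions, ignoring software stacks, multiple hops, and congestion, and the function names are hypothetical.

```python
IB_LATENCY_S = 100e-9   # InfiniBand figure from the comparison (upper bound)
ETH_LATENCY_S = 2e-6    # Ethernet figure from the comparison (lower bound of 2-10 us)
BANDWIDTH_GBPS = 400    # same nominal bandwidth assumed for both


def message_time_s(size_bytes: int, latency_s: float, bandwidth_gbps: float) -> float:
    """Cost model: fixed latency plus serialization time at the link rate."""
    return latency_s + (size_bytes * 8) / (bandwidth_gbps * 1e9)


def ethernet_slowdown(size_bytes: int) -> float:
    """How many times longer the same message takes on Ethernet in this model."""
    ib = message_time_s(size_bytes, IB_LATENCY_S, BANDWIDTH_GBPS)
    eth = message_time_s(size_bytes, ETH_LATENCY_S, BANDWIDTH_GBPS)
    return eth / ib


for size in (64, 4096, 1 << 20, 1 << 30):
    print(f"{size:>12} B: Ethernet/InfiniBand time ratio = {ethernet_slowdown(size):.2f}")
```

In this model, small messages are dominated by latency, so the gap between the two technologies is large; for very large transfers the ratio approaches 1 because serialization time dominates. Workloads that synchronize frequently with small messages are therefore the ones that benefit most from a low-latency fabric.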

InfiniBand Applications in Artificial Intelligence and HPC

The adoption of InfiniBand has been driven by companies and research centers that require high computing power and high data transfer speeds. Some of the most notable applications include:

- Training AI models: Organizations such as OpenAI and systems such as NVIDIA’s Selene supercomputer rely on InfiniBand to train advanced machine learning models on large datasets.

- Scientific supercomputing: Institutions such as the Barcelona Supercomputing Center (BSC) use InfiniBand for research in astrophysics, biomedicine, and climate modeling.

- Enterprise data centers: Cloud providers such as Microsoft Azure have integrated InfiniBand into their infrastructure to improve service efficiency.

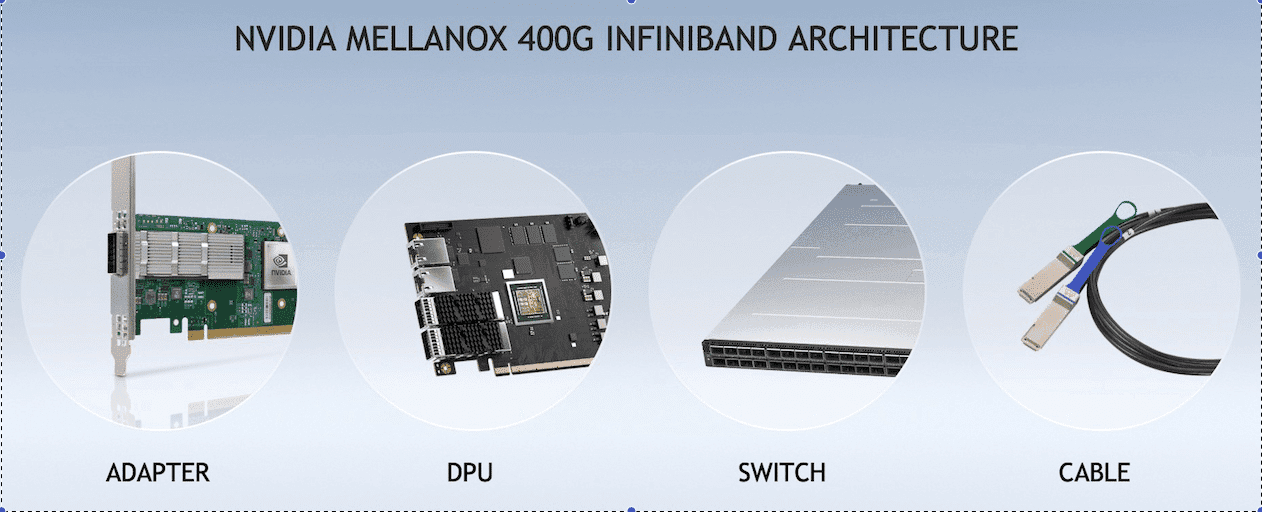

The HDR Infrastructure of InfiniBand: Switches and Network Cards

The latest hardware advancements have allowed for the expansion of InfiniBand in supercomputing environments. NVIDIA, one of the leading manufacturers of hardware for AI and HPC, has developed HDR and NDR switches and network cards optimized for these workloads.

InfiniBand HDR Switches

HDR switches can interconnect thousands of nodes when combined into multi-tier topologies. Models such as the NVIDIA QM8700-HS2F and QM8790-HS2F offer up to 40 QSFP56 ports at 200 Gbps, with a latency of less than 130 ns.

| Model | Ports | Link Speed | Form Factor |
|---|---|---|---|
| QM8700-HS2F | 40 x QSFP56 | 200 Gbps | 1RU |
| QM8790-HS2F | 40 x QSFP56 | 200 Gbps | 1RU |
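
A rough sizing calculation shows how 40-port switches scale to thousands of nodes. The sketch below computes the aggregate throughput of one switch and the maximum number of hosts in a non-blocking fat tree built from identical switches, using the standard result that a tree with `L` levels of radix-`k` switches supports `2 * (k/2)^L` hosts. The function names are hypothetical, and real deployments may use oversubscription or other topologies.

```python
def switch_throughput_gbps(ports: int, link_gbps: int, bidirectional: bool = True) -> int:
    """Aggregate throughput of one switch with every port running at line rate."""
    one_way = ports * link_gbps
    return 2 * one_way if bidirectional else one_way


def fat_tree_hosts(radix: int, levels: int) -> int:
    """Maximum hosts in a non-blocking fat tree of identical radix-port switches.

    In every level except the top, half of each switch's ports face down
    and half face up, which gives 2 * (radix / 2) ** levels hosts.
    """
    if levels < 1 or radix < 2 or radix % 2:
        raise ValueError("need an even radix >= 2 and at least one level")
    return 2 * (radix // 2) ** levels


PORTS, LINK_GBPS = 40, 200  # figures from the switch table above

print(switch_throughput_gbps(PORTS, LINK_GBPS))  # 16000 Gbps, i.e. 16 Tbps bidirectional
print(fat_tree_hosts(PORTS, levels=2))           # 800 hosts
print(fat_tree_hosts(PORTS, levels=3))           # 16000 hosts
```

Under these assumptions, a two-level fabric reaches 800 hosts at full bisection bandwidth, and adding a third level brings the limit to 16,000.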

InfiniBand HDR Network Interface Cards (NIC)

The InfiniBand HDR SmartNICs let servers take full advantage of the network’s speed and low latency. Models such as the MCX653106A-ECAT support up to 200 Gbps and provide RDMA and GPUDirect.

| Model | Ports | Maximum Speed | Interface |
|---|---|---|---|
| MCX653106A-ECAT | Dual | 200 Gbps | QSFP56, PCIe 4.0 |
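
When sizing a host, it can help to check the network rate against the PCIe slot that feeds the card. The sketch below estimates the raw per-direction bandwidth of a PCIe 4.0 link (16 GT/s per lane with 128b/130b encoding) and compares it with the port speed from the table. The x16 lane width is an assumption, since the table only states PCIe 4.0, and the estimate ignores PCIe packet overhead, so usable bandwidth is somewhat lower. The function names are hypothetical.

```python
PCIE4_GT_PER_LANE = 16.0          # PCIe 4.0 signaling rate per lane, in GT/s
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie4_gbps(lanes: int) -> float:
    """Raw per-direction PCIe 4.0 bandwidth in Gbps, before protocol overhead."""
    return lanes * PCIE4_GT_PER_LANE * ENCODING_EFFICIENCY


def fits_in_slot(ports: int, port_gbps: float, lanes: int = 16) -> bool:
    """True if all ports at full rate stay within the slot's raw bandwidth."""
    return ports * port_gbps <= pcie4_gbps(lanes)


print(f"PCIe 4.0 x16: {pcie4_gbps(16):.1f} Gbps per direction")
print("one port at 200 Gbps fits:", fits_in_slot(1, 200))
print("two ports at 200 Gbps fit:", fits_in_slot(2, 200))
```

Under the x16 assumption, a single 200 Gbps stream fits within the slot, while two ports driven at that rate simultaneously would exceed it, so the host interface rather than the network can become the limit.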

The Future of InfiniBand: Where Are We Heading?

With the advent of exascale supercomputing, InfiniBand will continue to evolve to support even more intensive workloads. The next-generation XDR (800 Gbps) will enable complex simulations and AI training to run at unprecedented speed.

Trends indicate that InfiniBand will become the standard technology for high-performance networks, displacing Ethernet in HPC environments. Its combination of speed, low latency, and CPU optimization makes it essential for the future of computing.

Conclusion

InfiniBand HDR has proven to be the ideal solution for supercomputing, AI, and high-performance data centers. While Ethernet remains the most versatile option for conventional networks, InfiniBand is the only alternative capable of handling the exponential growth of AI and advanced computing.

As technologies like XDR and NDR continue to advance, next-generation data centers will increasingly rely on InfiniBand to achieve optimal performance and limitless scalability.

via: FS