The growing demand for artificial intelligence has led to a race to provide more powerful and flexible infrastructure. In this context, DigitalOcean has announced the launch of its new Bare Metal systems based on the NVIDIA HGX H200 supercomputing platform, designed specifically for advanced AI workloads.

This new offering allows developers, startups, and companies focused on artificial intelligence to accelerate model training, optimize real-time inference, and improve operational efficiency, all without the hidden costs or complexity associated with large cloud providers.

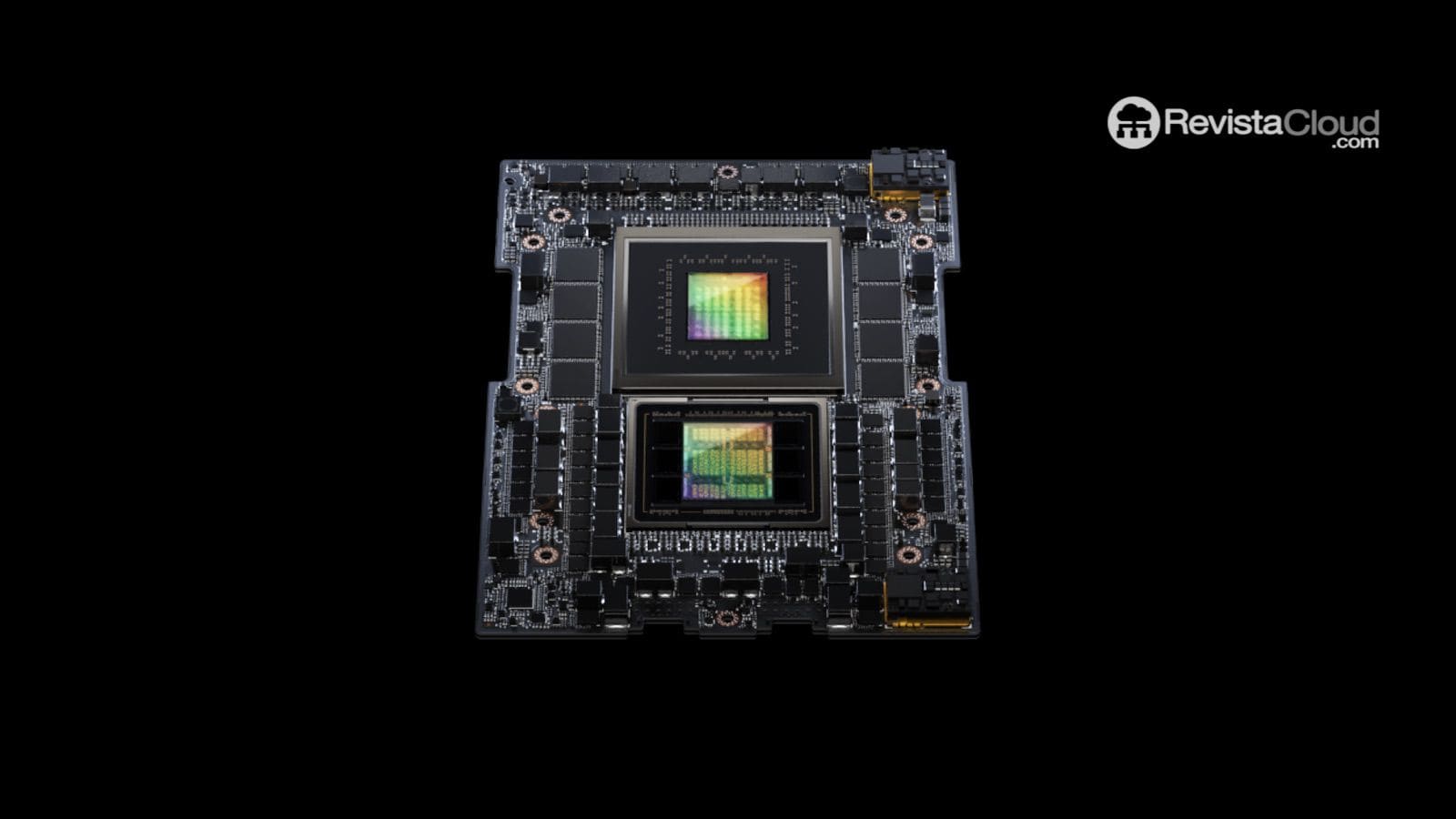

Unprecedented Power with NVIDIA H200 GPUs

The key to this infrastructure is its use of 8 NVIDIA H200 GPUs on the HGX H200 platform, which deliver cutting-edge performance for tasks that require high computational power. Each GPU incorporates 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth, allowing large-scale AI models to be handled with significantly lower latency.
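To put those figures in perspective, the following is a rough back-of-the-envelope sketch, not a benchmark. It assumes the 141 GB and 4.8 TB/s values apply per GPU, that weights are sharded evenly across the 8 GPUs, and that memory bandwidth is perfectly utilized. The 70-billion-parameter model at 2 bytes per parameter is a hypothetical example, not something DigitalOcean has published, and the function names are purely illustrative.

```python
# Figures quoted in the article, assumed here to be per GPU.
GPUS_PER_NODE = 8
HBM_PER_GPU_GB = 141           # HBM3e capacity per H200
BANDWIDTH_PER_GPU_TBPS = 4.8   # memory bandwidth per H200


def node_memory_gb(gpus=GPUS_PER_NODE, per_gpu_gb=HBM_PER_GPU_GB):
    """Aggregate HBM capacity of one node, in GB."""
    return gpus * per_gpu_gb


def weights_gb(params_billions, bytes_per_param):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param


def min_weight_read_ms(size_gb, gpus=GPUS_PER_NODE,
                       tbps=BANDWIDTH_PER_GPU_TBPS):
    """Idealized lower bound on the time to stream all weights once
    from HBM, assuming even sharding and full bandwidth utilization."""
    aggregate_gb_per_s = gpus * tbps * 1000  # TB/s -> GB/s
    return size_gb / aggregate_gb_per_s * 1000  # s -> ms


if __name__ == "__main__":
    total = node_memory_gb()
    model = weights_gb(params_billions=70, bytes_per_param=2)  # hypothetical model
    print(f"Node HBM capacity: {total} GB")
    print(f"Model weights:     {model} GB ({model / total:.0%} of node memory)")
    print(f"Ideal weight read: {min_weight_read_ms(model):.2f} ms per pass")
```

Real workloads also need memory for activations, optimizer state, and KV caches, and never reach the ideal bandwidth, so these numbers are only an upper bound on what fits and a lower bound on latency.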

Some of the main advantages of the NVIDIA H200-based hardware include:

- Faster model training: the ability to handle larger batches of data and improve memory efficiency, reducing training times.

- Optimized model tuning: fine-tuning foundation models with lower computational resource consumption and improved latency.

- Enhanced real-time inference: running transformer models faster and with better energy efficiency.

This configuration makes the Bare Metal servers a scalable and efficient solution for developers and companies working with generative AI models, natural language processing, or computer vision.

Flexibility for AI projects without the constraints of traditional cloud

One of the main differentiators of this new offering is the flexibility it provides to users. The Bare Metal systems can be used as individual machines or as multi-node clusters, allowing for the creation of customized AI infrastructures with full control over the hardware and software environment.

This capability is especially relevant for:

- Training large-scale language models (LLMs), where hardware efficiency is key to reducing processing times.

- Developing generative AI models, such as those used in content creation applications, virtual assistants, or automated data analysis.

- Optimizing proprietary models, providing an unrestricted environment for experimentation and fine-tuning algorithms.

In contrast to large cloud providers, DigitalOcean eliminates hidden fees and complex billing models. Server deployment is estimated to take 1 to 2 days, which lets AI projects scale quickly without long waits or unforeseen costs. Elsewhere in the market, the European company Stackscale (Grupo Aire) also offers bare-metal servers with NVIDIA Tesla T4, L4, and L40S GPUs for big data, language models, and inference.

The New Era of High-Performance Computing for AI

The launch of these servers marks a significant step in democratizing access to high-performance hardware, allowing more businesses and developers to leverage the capabilities of artificial intelligence without relying on centralized solutions with infrastructure constraints.

As AI continues to evolve, the availability of powerful and accessible hardware will be a determining factor in the sector’s competitiveness. With this new offering of Bare Metal servers with NVIDIA HGX H200, DigitalOcean strengthens its position as a key ally for the AI ecosystem, providing an optimized solution for companies looking to scale their projects efficiently and independently.

For those interested in exploring this infrastructure, DigitalOcean has already enabled capacity reservation, allowing developers and companies to begin leveraging this new standard of performance in artificial intelligence.