NVIDIA recently unveiled its B200 chip for artificial intelligence (AI) applications, a piece of technology that promises to transform the landscape of advanced computing. Announced in March 2024, the B200 is presented as a true technological beast, with features that exceed the expectations of even the industry's most optimistic observers.

The B200 chip boasts impressive specifications, including 208 billion transistors, the latest-generation Blackwell architecture, and a peak performance of 20 petaFLOPS in FP4 operations when used with liquid cooling. It also supports up to 192 GB of memory and achieves a memory bandwidth of 8 TB/s, setting new standards in processing capacity for AI tasks.
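
To put these headline numbers in perspective, the short Python sketch below derives two rough figures from them: how many FP4 operations the chip can perform per byte read from memory at peak rates, and how long one full pass over the memory would take. The function names are illustrative, the units are assumed to be decimal (1 GB = 10^9 bytes), and the inputs are the vendor's peak figures as quoted above, not measured values.

```python
# Peak figures quoted in the article (vendor numbers, not measurements).
PEAK_FP4_FLOPS = 20e15        # 20 petaFLOPS in FP4, liquid-cooled
MEMORY_BYTES = 192e9          # 192 GB, assuming decimal gigabytes
BANDWIDTH_BYTES_PER_S = 8e12  # 8 TB/s, assuming decimal terabytes


def balance_point(peak_flops: float, bandwidth: float) -> float:
    """Operations the chip can perform per byte moved, both at peak rate."""
    return peak_flops / bandwidth


def full_memory_sweep_seconds(memory_bytes: float, bandwidth: float) -> float:
    """Time needed to read the whole memory once at peak bandwidth."""
    return memory_bytes / bandwidth


if __name__ == "__main__":
    ops_per_byte = balance_point(PEAK_FP4_FLOPS, BANDWIDTH_BYTES_PER_S)
    sweep_s = full_memory_sweep_seconds(MEMORY_BYTES, BANDWIDTH_BYTES_PER_S)
    print(f"FP4 operations per byte at peak: {ops_per_byte:.0f}")
    print(f"One pass over memory: {sweep_s * 1e3:.0f} ms")
```

Under these assumptions the chip can perform roughly 2,500 FP4 operations for every byte it reads, and a single pass over the full 192 GB takes about 24 ms. Real workloads will rarely reach either peak, so these are upper bounds rather than predictions.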

Because of this high performance, the U.S. Department of Commerce, led by Secretary Gina Raimondo, has imposed restrictions on NVIDIA that limit the sale of this GPU in China. Meanwhile, NVIDIA has confirmed that mass production of the B200 chip will begin in the fourth quarter of 2024, with the first deliveries expected by the end of the year.

The manufacturing of the B200 chip has not been without difficulties. NVIDIA has admitted to facing significant challenges in its production processes, which led the company to redesign certain layers of the chip to improve its production yield. "We were forced to introduce a change to the Blackwell GPU mask to improve production yield," NVIDIA explained in an official statement.

However, these adjustments seem to have paid off. According to preliminary data published by NVIDIA, the B200 chip delivers four times the performance of its predecessor, the H100 GPU based on the Hopper microarchitecture. In MLPerf Inference v4.1 tests, the B200 reached 10,755 tokens per second in the server scenario and 11,264 tokens per second in the offline scenario.

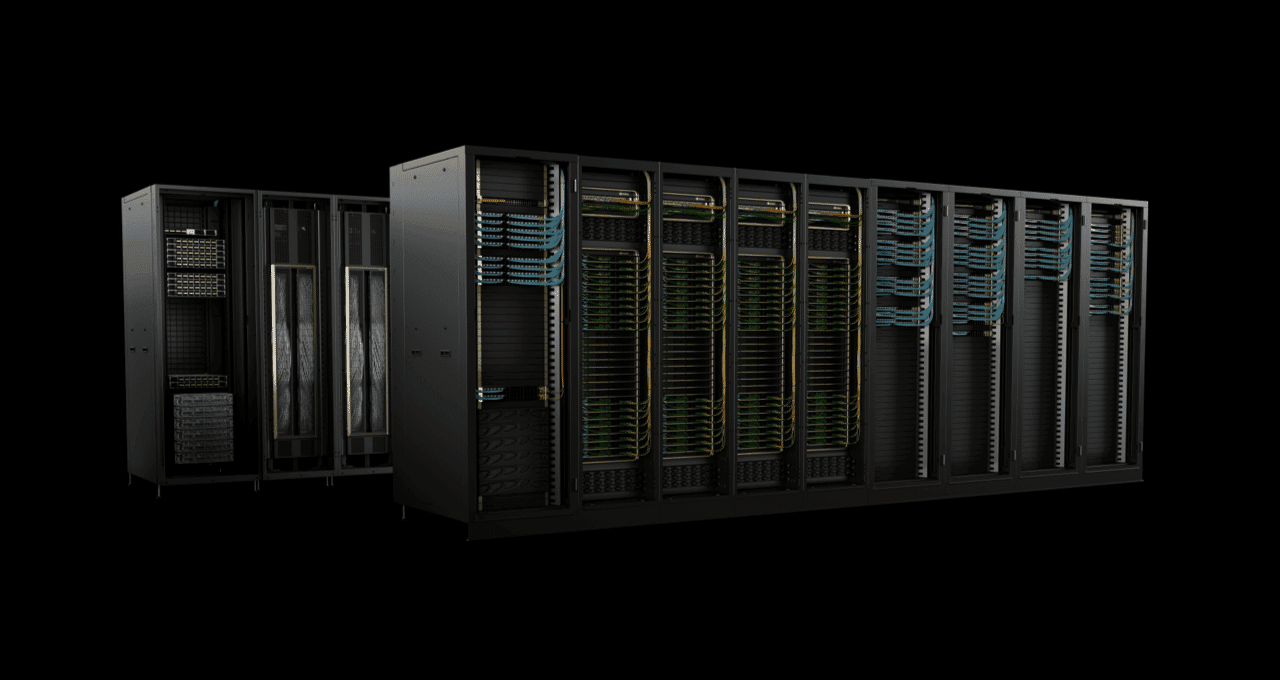

The B200 will be integrated into the NVIDIA DGX B200 platform, which combines eight Blackwell GPUs interconnected through fifth-generation NVIDIA NVLink. The platform is designed to handle large language models, recommendation systems, and chatbots, and NVIDIA cites a three-fold increase in training performance and a 15-fold increase in inference performance compared to previous generations.

The DGX B200 is equipped with 1,440 GB of GPU memory and achieves 72 petaFLOPS in training and 144 petaFLOPS in inference. With a power consumption of approximately 14.3 kW, the platform includes Intel Xeon Platinum processors, NVMe storage, and robust network support, including InfiniBand and Ethernet at up to 400 Gb/s.
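
As a quick sanity check on these platform figures, the sketch below divides the system totals by the eight GPUs and computes inference throughput per kilowatt. The helper names are hypothetical, and the calculation assumes the totals are spread evenly across GPUs and that the 14.3 kW figure covers the whole system, neither of which the figures above confirm.

```python
# DGX B200 platform figures as quoted in the article.
GPUS = 8
TOTAL_MEMORY_GB = 1440
TRAINING_PFLOPS = 72
INFERENCE_PFLOPS = 144
POWER_KW = 14.3


def per_gpu(total: float, gpus: int = GPUS) -> float:
    """Even share of a system-wide total for a single GPU."""
    return total / gpus


def pflops_per_kw(pflops: float, power_kw: float = POWER_KW) -> float:
    """Peak throughput per kilowatt of system power."""
    return pflops / power_kw


if __name__ == "__main__":
    print(f"Memory per GPU: {per_gpu(TOTAL_MEMORY_GB):.0f} GB")
    print(f"Training per GPU: {per_gpu(TRAINING_PFLOPS):.0f} petaFLOPS")
    print(f"Inference per GPU: {per_gpu(INFERENCE_PFLOPS):.0f} petaFLOPS")
    print(f"Inference per kW: {pflops_per_kw(INFERENCE_PFLOPS):.1f} petaFLOPS")
```

With an even split, each GPU accounts for 180 GB of memory and 18 petaFLOPS of inference throughput, slightly below the 192 GB and 20 petaFLOPS maxima quoted for the chip itself. That gap is consistent with the chip-level figures being upper limits ("up to" 192 GB, and 20 petaFLOPS only with liquid cooling).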

In summary, NVIDIA's B200 chip represents a major advance in AI technology, albeit not without its production challenges. With its unprecedented power, it promises to set new standards in data processing and artificial intelligence, while competitors in the industry, including AMD and Huawei, will surely be preparing their responses to this formidable offering from NVIDIA.

For more information: NVIDIA B200