Intel has unveiled the Intel® Gaudi® 3 AI accelerator. Compared with its predecessor, it delivers four times the AI compute for BF16, a 50% increase in memory bandwidth, and twice the networking bandwidth for massive system scale-out. Intel presents this as a significant leap in performance and productivity for AI training and inference on popular large language models (LLMs) and multimodal models. Gaudi 3 builds on the proven performance and efficiency of the Intel® Gaudi® 2 AI accelerator, which Intel describes as the only MLPerf-benchmarked alternative for LLMs on the market. Intel is offering customers a choice, with community-based open-source software and standard Ethernet networking that let them scale their systems more flexibly.

Justin Hotard, Executive Vice President and General Manager of Intel's Data Center and AI Group, said: "In the evolving AI market landscape, a significant gap in current offerings persists. Feedback from our customers and the broader market underscores a desire for more choice. Enterprises weigh considerations such as availability, scalability, performance, cost, and energy efficiency. Intel Gaudi 3 stands out as the generative AI alternative that presents a compelling combination of price-performance, system scalability, and time-to-value advantage."

Companies in critical sectors such as finance, manufacturing, and healthcare are looking to rapidly broaden access to AI and move generative AI projects from the experimental phase to large-scale deployment. To manage this transition, drive innovation, and reach their revenue growth goals, they need open, cost-effective, and more energy-efficient products that meet their return on investment (ROI) and operational efficiency requirements.

The Intel Gaudi 3 accelerator is designed to meet these requirements, offering versatility through community-based open-source software and industry-standard Ethernet networking so that companies can scale their AI systems and applications flexibly.

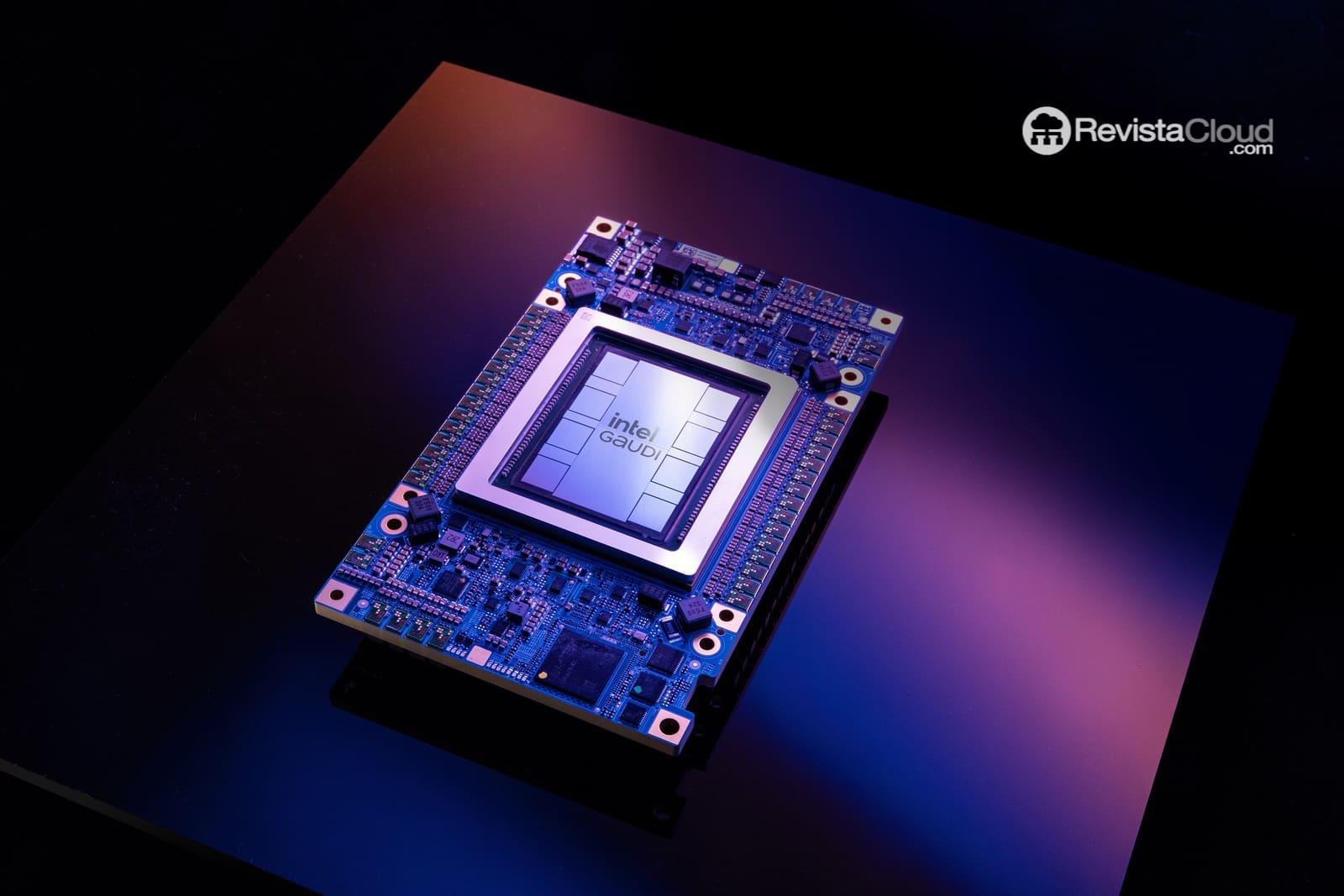

Intel Gaudi 3 uses a custom architecture designed for efficient large-scale AI computing. It is manufactured on a 5-nanometer (nm) process and offers significant advancements over its predecessor. The design allows all of its engines, including the Matrix Multiplication Engine (MME), the Tensor Processor Cores (TPCs), and the Network Interface Cards (NICs), to run in parallel, providing the acceleration needed for fast, efficient deep learning computation at scale.

via: [Intel](https://www.intel.com/content/www/us/en/newsroom/news/vision-2024-gaudi-3-ai-accelerator.html)