The global semiconductor industry enters 2026 with the sense that a new era is beginning. The story is not just new chips but a reshuffling of value: money and technological priority are shifting toward Artificial Intelligence infrastructure, and in that shift memory (DRAM, HBM, and enterprise storage) goes from being a “supporting” component to a key factor in performance and profitability.

This is the central thesis of SK hynix’s analysis of the 2026 market: the expansion of AI is driving an upward cycle in memory that some banks and analysts are already calling a “supercycle”. The South Korean company aims to position itself at the center of this trend through its leadership in HBM3E and its preparations for the next wave, HBM4. The tone of the message is revealing: the debate is no longer whether AI will transform the market, but which part of the supply chain will capture the growth.

A market approaching “one trillion” and memory gaining weight

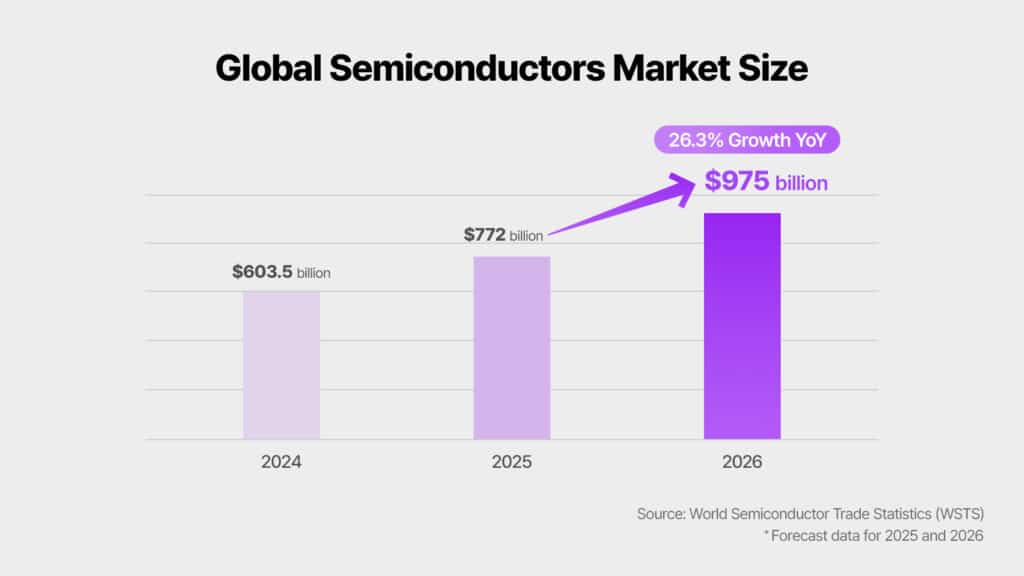

Industry forecasts point to strong growth in 2026. World Semiconductor Trade Statistics (WSTS) estimates that the global semiconductor market will grow more than 25% year-over-year and reach approximately $975 billion, just shy of the trillion-dollar mark (one trillion in the short-scale sense, 10^12). In this scenario, memory and logic stand out as the leading categories, with projected increases of more than 30%.

The key point is not just the market size, but its composition. AI is changing how servers are “filled”: more DRAM per system, more HBM per accelerator, and, in parallel, growth in enterprise storage (eSSD) to handle datasets, checkpoints, vectors, and the entire cycle of training and inference. Some estimates place the memory market for 2026 above $440 billion, with particular traction in servers and data centers.

In infrastructure terms: as training and inference scale up, so does the system’s “appetite” for close, fast, and efficient memory. That demand cannot be met simply by shipping more units; it requires new generations, higher stacking, and better packaging.

The “supercycle” of memory: prices, demand, and a structural shift

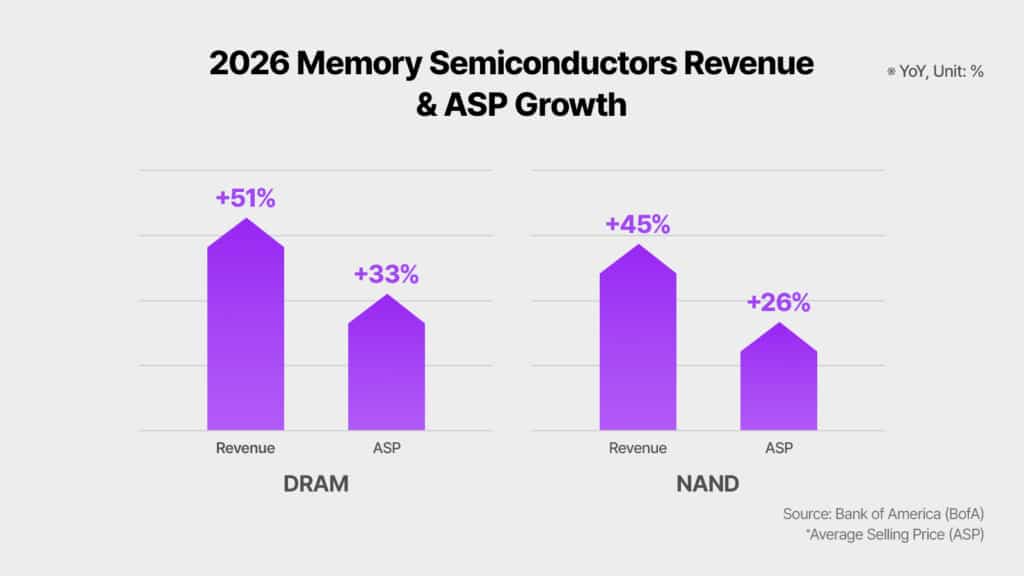

The term “supercycle” has been circulating since 2024 to describe the memory boom. In SK hynix’s analysis, Bank of America compares 2026 to the boom of the 1990s and projects a 51% year-over-year jump in global DRAM revenue and a 45% increase in NAND revenue, along with average selling price (ASP) increases of 33% and 26%, respectively. These forecasts rest not only on demand but also on shifts in supply. When manufacturers and customers prioritize high-value products linked to AI, part of production capacity is channeled toward that goal, which disrupts the balance in the rest of the market.
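
As a rough cross-check of those figures, revenue growth can be split into a price component and a volume component. The sketch below assumes that revenue equals average selling price times shipped volume and treats the quoted percentages as exact. The function name `implied_volume_growth` is illustrative, and the resulting volume figures are derived here rather than stated in the analysis.

```python
def implied_volume_growth(revenue_growth: float, asp_growth: float) -> float:
    """Back out volume growth, assuming revenue = ASP x shipped volume."""
    # (1 + revenue) = (1 + price) * (1 + volume)  =>  solve for volume
    return (1 + revenue_growth) / (1 + asp_growth) - 1


# Figures attributed to Bank of America in the text (2026, year over year).
forecasts = {
    "DRAM": {"revenue": 0.51, "asp": 0.33},
    "NAND": {"revenue": 0.45, "asp": 0.26},
}

implied = {
    name: implied_volume_growth(f["revenue"], f["asp"])
    for name, f in forecasts.items()
}

for name, growth in implied.items():
    print(f"{name}: implied volume growth of about {growth:.1%}")
```

Under that assumption, implied volume growth is roughly 13.5% for DRAM and 15.1% for NAND, which suggests that price, not volume, accounts for most of the projected revenue increase.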

Meanwhile, Reuters recently reported tight supply and rising memory prices associated with the AI boom, with investors viewing this cycle as a prolonged upswing. This background supports the idea that the market is experiencing a multi-year dynamic, not a temporary rally.

HBM, the new center of gravity in AI

Within memory, the real keyword is HBM (High Bandwidth Memory). SK hynix’s analysis summarizes this market shift with a striking comparison: some forecasts suggest that the HBM market in 2028 could surpass the size of the entire DRAM market in 2024. If that holds, the center of profit and bargaining power is moving toward the component that directly feeds GPUs and AI ASICs with the bandwidth they need.

Along the same lines, Bank of America estimates that the HBM market could reach $54.6 billion in 2026, a 58% increase over the previous year. Goldman Sachs, based on its own analysis, predicts a particularly strong acceleration in demand for HBM used in ASIC-based AI chips, with expected growth of 82% and a share of approximately one-third of the market. The takeaway is clear: AI infrastructure is diversifying beyond general-purpose GPUs, pushing memory solutions toward more customized options.
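
To put the Bank of America figure in context, the prior-year market size can be backed out from the growth rate. This is a minimal sketch that treats both quoted numbers as exact. The 2025 value it produces is derived here, not stated in the analysis, and the variable names are illustrative.

```python
hbm_2026_usd_bn = 54.6   # Bank of America estimate quoted in the text
yoy_growth = 0.58        # 58% increase over the previous year

# size_2026 = size_2025 * (1 + growth)  =>  size_2025 = size_2026 / (1 + growth)
hbm_2025_usd_bn = hbm_2026_usd_bn / (1 + yoy_growth)
added_usd_bn = hbm_2026_usd_bn - hbm_2025_usd_bn

print(f"Implied 2025 HBM market: ~${hbm_2025_usd_bn:.1f}B")
print(f"Implied one-year increase: ~${added_usd_bn:.1f}B")
```

On these inputs the implied 2025 base is about $34.6 billion, so the forecast corresponds to roughly $20 billion of additional HBM revenue in a single year.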

HBM3E will continue to dominate in 2026, while HBM4 gains ground

The generational transition won’t be instantaneous. Experts cited in the analysis expect HBM3E to remain the “flagship” product of the HBM market during 2026. The practical reason is that it aligns with the generation of AI accelerators and platforms being widely deployed this year, both in large clouds and in proprietary developments by tech giants.

Meanwhile, HBM4 will gradually increase its presence. SK hynix’s strategy appears to be a “dual-track” approach: maintain leadership and volume in HBM3E while building a robust development and supply system for HBM4.

SK hynix’s leadership: market share, clients, and execution capacity

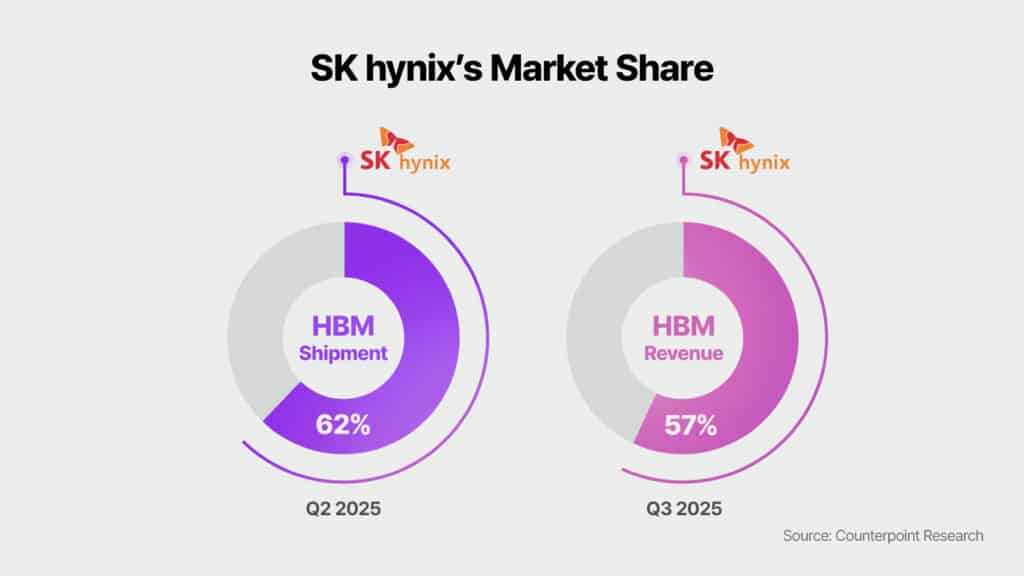

SK hynix’s competitive thesis relies on two pillars: current market share and industrial readiness. According to Counterpoint Research data cited in the analysis, SK hynix led HBM shipments in Q2 2025 with a 62% share and accounted for 57% of HBM revenue in Q3. Goldman Sachs, also referencing the same source, believes the company will maintain its dominant position in HBM3/HBM3E at least until 2026, keeping its total HBM share above 50%.

Additionally, UBS emphasizes SK hynix’s relationships with major customers and positions it as the leading supplier of HBM3E for certain platforms, while projecting an ambitious scenario: a share of nearly 70% in HBM4 for NVIDIA’s Rubin platform in 2026. This should be read as a forecast, but it shows that the market expects the company’s leadership to continue as the technology advances.

On the industrial execution front, the analysis credits SK hynix with a unique position owing to its capacity to supply HBM3E and simultaneously prepare HBM4 “reliably.” This includes milestones and investments: the company claims to have secured a mass production system for HBM4, strengthened its packaging technology collaboration with TSMC, made progress on the Cheongju M15X fab, and established dedicated structures such as a specialized HBM organization and a Global AI Research Center.

Risks: how long does the cycle last and what could disrupt it?

The report admits that, alongside optimism, cautious perspectives persist. Risks include potential price corrections post-2026 due to increased competition and capacity expansion, as well as supply chain uncertainties and regulatory or geopolitical tensions.

The key lies in the balance between two forces: on one hand, AI drives fairly inelastic, long-term demand; on the other, the industry is accelerating investment to capture margins. In HBM, the high technological barrier also tends to slow rapid shifts in leadership, but it does not eliminate the risk of adjustments when the market “catches its breath” after a period of intense tightness.

A 2026 of transition: HBM3E as the standard and HBM4 as the next step

SK hynix’s market outlook can be summarized as follows: 2026 will be a transition year featuring three overlapping dynamics. First, the expansion of AI infrastructure will continue to increase memory and storage’s share of overall spending. Second, HBM3E will become the de facto standard in servers and AI data centers. Third, HBM4 will start to enter the scene, setting the stage for medium- and long-term growth.

In this landscape, memory shifts from being just a “commodity” to a strategic factor. If the supercycle materializes, value will not only come from increased manufacturing but also from timely delivery, reliability, and advanced packaging, precisely when AI demands the next leap.

Frequently Asked Questions

What is the difference between HBM3E and HBM4 in AI servers, and why does it matter in 2026?

HBM3E is expected to be the dominant memory in 2026 because of its compatibility with current platforms, while HBM4 represents the next generation, which will gradually gain market share. The practical difference lies in capacity, bandwidth evolution, and the adoption timeline for new platforms.

Why is there talk of a “memory supercycle” as AI grows?

Because AI increases memory requirements per server (DRAM and HBM) and boosts demand for enterprise SSDs for datasets and models. This demand, combined with supply constraints and a focus on high-value products, can sustain several years of growth and price pressure.

What does it mean if the HBM market could surpass the “traditional” DRAM market in a few years?

It implies that the critical component fueling AI accelerators (HBM) could become the main value driver within memory, overtaking the traditional dominance of conventional DRAM.

What risks could slow down memory growth for AI after 2026?

Analysts mention potential price corrections due to increased competition and capacity, as well as geopolitical and regulatory uncertainties. However, HBM’s technical complexity tends to prevent abrupt shifts in leadership in the short term.

via: news.skhynix